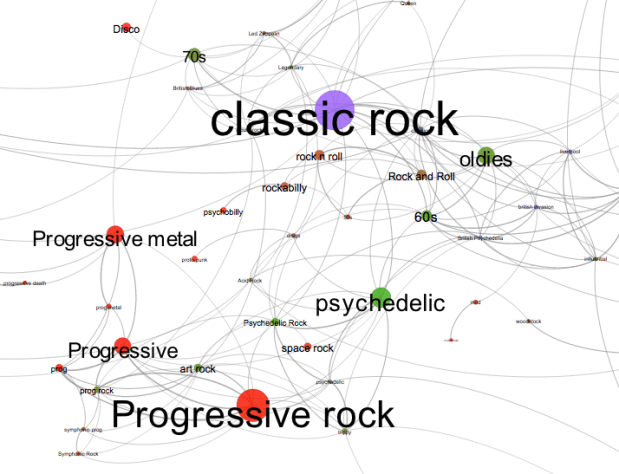

Map of Music Styles

Posted by Paul in code, data, events, fun, tags, The Echo Nest, visualization on April 22, 2012

I spent this weekend at Rethink Music Hackers’ Weekend building a music hack called Map of Music Styles (aka MOMS). This hack presents a visualization of over 1,000 music styles. You can pan and zoom through the music space just like you can with Google Maps. When you see an interesting style of music you can click on it to hear some samples of music in that style.

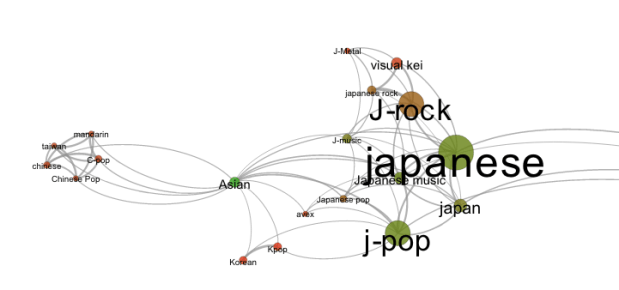

It is fun to explore all the different neighborhoods of music styles. Here’s the Asian corner:

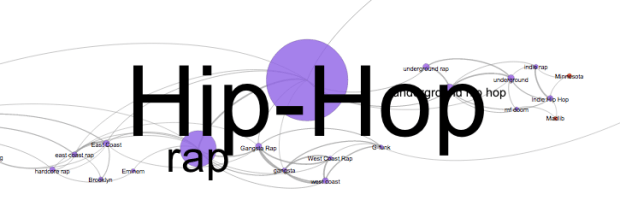

Here’s the Hip-Hop neighborhood:

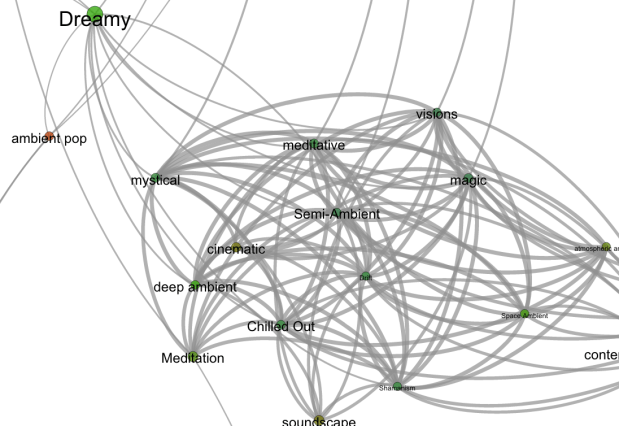

And a mega-cluster of ambient/chill-out music:

To build the app, I collected the top 2,000 or so terms via The Echo Nest API. For each term I calculated the most similar terms based upon artist overlap (for instance, the terms ‘metal’ and ‘heavy metal’ are often applied to the same artists and so can be considered similar, whereas ‘metal’ and ‘new age’ are rarely applied to the same artist and are, therefore, not similar). To lay out the graph I used Gephi (it’s like Photoshop for graphs) and exported the graph to SVG. After that it was just a bit of JavaScript, HTML, and CSS to create the web page that lets you pan and zoom. When you click on a term, I fetch audio that matches the style via the Echo Nest and 7Digital APIs.
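As a rough illustration of term similarity based on artist overlap, here is a minimal sketch. The post doesn't say which overlap measure was used, so Jaccard similarity (shared artists divided by all artists carrying either term) is an assumption, and the `termArtists` data and function names are hypothetical.

```javascript
// Illustrative data only: each term maps to the set of artists tagged with it.
const termArtists = {
  'metal': new Set(['Metallica', 'Iron Maiden', 'Slayer', 'Black Sabbath']),
  'heavy metal': new Set(['Metallica', 'Iron Maiden', 'Black Sabbath', 'Judas Priest']),
  'new age': new Set(['Enya', 'Yanni', 'Kitaro']),
};

// Jaccard similarity (an assumed measure): |A ∩ B| / |A ∪ B|.
function termSimilarity(a, b) {
  let shared = 0;
  for (const artist of a) {
    if (b.has(artist)) shared += 1;
  }
  const union = a.size + b.size - shared;
  return union === 0 ? 0 : shared / union;
}

// Rank every other term by similarity to the given term, most similar first.
function mostSimilarTerms(term, index) {
  return Object.keys(index)
    .filter((other) => other !== term)
    .map((other) => ({ term: other, score: termSimilarity(index[term], index[other]) }))
    .sort((x, y) => y.score - x.score);
}

console.log(mostSimilarTerms('metal', termArtists));
```

The ranked lists for each term could then serve as weighted edges in the graph that gets laid out in Gephi.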

There are a few non-styles that snuck through – the occasional band name, or mood, but they don’t hurt anything so I let them hang out with the real styles. The app works best in Chrome. There’s a bug in the Firefox version that I need to work out.

Give it a try and let me know how you like it: Map of Music Styles

Why streaming recommendations are different than DVD recommendations at Netflix

From Why Netflix Never Implemented The Algorithm That Won The Netflix $1 Million Challenge

An interesting insight:

when people rent a movie that won’t arrive for a few days, they’re making a bet on what they want at some future point. And, people tend to have a more… optimistic viewpoint of their future selves. That is, they may be willing to rent, say, an “artsy” movie that won’t show up for a few days, feeling that they’ll be in the mood to watch it a few days (weeks?) in the future, knowing they’re not in the mood immediately. But when the choice is immediate, they deal with their present selves, and that choice can be quite different.

When I was a Netflix DVD subscriber the Seven Samurai sat on top of my TV for months. My present self never matched the optimistic view I had of my future self.

Xavier’s blog post on Netflix recommendations is worth the read. It covers, among other things, dealing with a household with widely different tastes and the importance of the order in which recommendations are presented.

The Hack Day Manifesto

What do you need to do to put on a good hack event like a Music Hack Day? Read The Hack Day Manifesto for insights on what it takes to make sure you don’t have a hack event fail. Here are some choice bits:

Your 4MB DSL isn’t enough

Hack days have special requirements: don’t just trust anyone who tells you that “it’ll be fine”. Think about the networking issues, and verify that they work for the kind of capacity you are going to have. People from the venue or their commercial partner will tell you all sorts of things you want to hear but keep in the back of your mind that they may not have any clue what they are talking about. Given the importance of network access, if you are operating a commercial event consider requiring network performance as part of your contract with venues and suppliers.

Rock solid WiFi

Many commercial WiFi providers plan for much lower use than actually occurs at hack days. The network should be capable of handling at least 4 devices per attendee.

Don’t make people feel unwelcome

Avoid sexism and other discriminatory language or attitudes. Don’t make any assumptions about your attendees. Get someone who is demographically very different from you to check your marketing material through to see if it makes sense and isn’t offensive to someone who doesn’t share your background.

Read The Hack Day Manifesto. If you agree with the sentiment, and you have enough hacker juice to fork the manifesto, edit it and send a pull request, you are invited to add yourself to the list of supporters.

Why do Music Hackers hack?

video on vimeo: http://vimeo.com/40027211

A short film by Pauline de Zeew, with Paul King and Syd Lawrence

The Spotify Play Button – a lightning demo

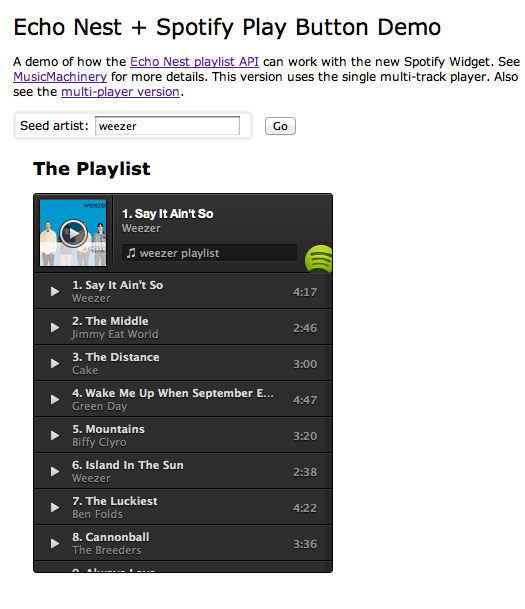

Spotify just released a nifty embeddable play button. With the play button you can easily embed Spotify tracks in any web page or blog. Since there’s really tight integration between the Spotify and Echo Nest IDs, I thought I’d make a quick demo that shows how we can use the Echo Nest playlist API and the new Spotify Play button to make playlists.

The demo took about 5 minutes to write (shorter than it is taking to write the blog post). It is simple artist radio on a web page. Give it a go at: Echo Nest + Spotify Play Button Demo

Here’s what it looks like.

Update: Charlie and Samuel pointed out that there is a multi-track player too. I made a demo that uses that too:

The Spotify Play button is really easy to use and looks great. Well done, Spotify.

Syncing Echo Nest analysis to Spotify Playback

Posted by Paul in code, Music, The Echo Nest on April 9, 2012

With the recently announced Spotify integration into Rosetta Stone, The Echo Nest now makes available a detailed audio analysis for millions of Spotify tracks. This audio analysis includes summary features such as tempo, loudness, energy, danceability, key and mode, as well as a set of fine-grained segment features that describe details such as where each bar, beat and tatum falls and the detailed pitch, timbral and loudness content of each audio event in the song. These features can be very useful for driving Spotify applications that need to react to what the music sounds like – from advanced dynamic music visualizations like the MIDEM music machine to synchronized music games like Guitar Hero.

I put together a little Spotify App that demonstrates how to synchronize Spotify Playback with the Echo Nest analysis. There’s a short video here of the synchronization:

video on youtube: http://youtu.be/TqhZ2x86RXs

In this video you can see the audio summary for the currently playing song, as well as a display of synchronized ‘bar’ and ‘beat’ labels and detailed loudness, timbre and pitch values for the current segment.

How it works:

To get the detailed audio analysis, call the track/profile API with the Spotify Track ID for the track of interest. For example, here’s how to get the track for Radiohead’s Karma Police using the Spotify track ID:

This returns audio summary info for the track, including the tempo, energy and danceability. It also includes a field called the analysis_url which contains an expiring URL to the detailed analysis data. (A very abbreviated excerpt of an analysis is contained in this gist).
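Here is a minimal sketch of building such a track/profile request and pulling the analysis_url out of the reply. The base URL, the parameter names (`api_key`, `format`, `id`, `bucket=audio_summary`) and the response shape are assumptions based on the Echo Nest v4 API as I understand it, and the API key and track ID are placeholders rather than real values.

```javascript
// Assumed base URL for the Echo Nest v4 API.
const API_BASE = 'http://developer.echonest.com/api/v4';

// Build a track/profile request URL for a Spotify track URI.
// The 'spotify-WW' prefix is the Echo Nest form of a Spotify ID.
function trackProfileUrl(apiKey, spotifyTrackUri) {
  const id = spotifyTrackUri.replace('spotify', 'spotify-WW');
  const params = [
    ['api_key', apiKey],
    ['format', 'json'],
    ['id', id],
    ['bucket', 'audio_summary'], // assumed bucket name for the summary fields
  ];
  const query = params
    .map(([key, value]) => `${key}=${encodeURIComponent(value)}`)
    .join('&');
  return `${API_BASE}/track/profile?${query}`;
}

// Extract the expiring analysis URL from a parsed response (assumed shape).
function analysisUrlFrom(profile) {
  return profile.response.track.audio_summary.analysis_url;
}

// Placeholder key and track ID, for illustration only.
console.log(trackProfileUrl('YOUR_API_KEY', 'spotify:track:EXAMPLE_TRACK_ID'));
```

Because the analysis URL expires, it is probably safest to fetch it fresh for each track rather than cache it.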

To synchronize Spotify playback with the Echo Nest analysis we need to first get the detailed analysis for the now playing track. We can do this by calling the aforementioned track/profile call to get the analysis_url for the detailed analysis, and then retrieve the analysis (it is stored in JSON format, so no reformatting is necessary). There is one technical glitch though. There is no way to make a JSONP call to retrieve the analysis. This prevents you from retrieving the analysis directly into a web app or a Spotify app. To get around this issue, I built a little proxy at labs.echonest.com that supports a JSONP style call to retrieve the contents of the analysis URL. For example, the call:

http://labs.echonest.com/3dServer/analysis?callback=foo&url=http://url_to_the_analysis_json

will return the analysis json wrapped in the foo() callback function. The Echo Nest does plan to add JSONP support to retrieving analysis data, but until then feel free to use my proxy. No guarantees on support or uptime since it is not supported by engineering. Use at your own risk.
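A small sketch of building the proxy call: the endpoint and the `callback` and `url` parameters come from the example above, while the function name is hypothetical. URL-encoding the analysis URL is my assumption; it seems necessary because an expiring URL typically carries its own query parameters, which would otherwise be read as parameters of the proxy call.

```javascript
// Proxy endpoint from the example above (unsupported; use at your own risk).
const ANALYSIS_PROXY = 'http://labs.echonest.com/3dServer/analysis';

// Build a JSONP-style proxy URL for a given analysis URL and callback name.
function proxiedAnalysisUrl(analysisUrl, callbackName) {
  return `${ANALYSIS_PROXY}?callback=${encodeURIComponent(callbackName)}` +
    `&url=${encodeURIComponent(analysisUrl)}`;
}

console.log(proxiedAnalysisUrl('http://url_to_the_analysis_json?Expires=123', 'foo'));
```

With jQuery, which the demo app already bundles, passing the bare proxy URL to `$.ajax` with `dataType: 'jsonp'` and the analysis URL in `data` should achieve the same thing without hand-building the query string.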

Once you have retrieved the analysis you can get the current bar, beat, tatum and segment info based upon the current track position, which you can retrieve from Spotify with: sp.getTrackPlayer().getNowPlayingTrack().position. Since all the events in the analysis are timestamped, it is straightforward to find the corresponding bar, beat, tatum and segment for any song timestamp. I’ve posted a bit of code on gist that shows how I pull out the current bar, beat and segment based on the current track position, along with some code that shows how to retrieve the analysis data from the Echo Nest. Feel free to use the code to build your own synchronized Echo Nest/Spotify app.
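One way to do the timestamp lookup is a binary search over each event list. This is a sketch rather than the code from the gist. It assumes each event has `start` and `duration` fields in seconds and that the Spotify position is reported in milliseconds; the function names are hypothetical.

```javascript
// Find the event whose [start, start + duration) span contains time t (seconds).
// Events are assumed to be sorted by start time. Returns null if none matches.
function findCurrent(events, t) {
  let lo = 0;
  let hi = events.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >> 1;
    const event = events[mid];
    if (t < event.start) {
      hi = mid - 1;
    } else if (t >= event.start + event.duration) {
      lo = mid + 1;
    } else {
      return event;
    }
  }
  return null;
}

// Look up the current bar, beat, tatum and segment for a playback position.
// positionMs is assumed to be in milliseconds.
function currentQuanta(analysis, positionMs) {
  const t = positionMs / 1000;
  const result = {};
  for (const kind of ['bars', 'beats', 'tatums', 'segments']) {
    result[kind] = findCurrent(analysis[kind] || [], t);
  }
  return result;
}

// Tiny made-up analysis, for illustration only.
const sampleAnalysis = {
  bars: [{ start: 0, duration: 2 }, { start: 2, duration: 2 }],
  beats: [
    { start: 0, duration: 0.5 }, { start: 0.5, duration: 0.5 },
    { start: 1, duration: 0.5 }, { start: 1.5, duration: 0.5 },
  ],
};
console.log(currentQuanta(sampleAnalysis, 750));
```

Polling this on a short timer and redrawing when the returned beat changes would be one simple way to keep a display in step with playback.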

The Spotify App platform is an awesome platform for building music apps. Now, with the ability to use Echo Nest analysis from within Spotify apps, it is a lot easier to build Spotify apps that synchronize to the music. This opens the door to a whole range of new apps. I’m really looking forward to seeing what developers will build on top of this combined Echo Nest and Spotify platform.

Writing an Echo Nest + Spotify App

Posted by Paul in code, Music, The Echo Nest on April 7, 2012

Last week The Echo Nest and Spotify announced an integration of APIs making it easy for developers to write Spotify Apps that take advantage of the deep music intelligence offered by the Echo Nest. The integration is via Project Rosetta Stone (PRS). PRS is an ID mapping layer in the API that allows developers to use the IDs from any supported music service with the Echo Nest API. For instance, a developer can request via the Echo Nest playlist API a playlist seeded with a Spotify artist ID and receive Spotify track IDs in the results.

This morning I created a Spotify App that demonstrates how to use the Spotify and Echo Nest APIs together. The app is a simple playlister with the following functions:

- Gets the artist for the currently playing song in Spotify

- Creates an artist radio playlist based upon the now playing artist

- Shows the playlist, allowing the user to listen to any of the playlist tracks

- Allows the user to save the generated playlist as a Spotify playlist.

The entire app, including all of the HTML, CSS and JavaScript, is 150 lines long. I’ve made all the code available in the GitHub repository SpotifyEchoNestPlaylistDemo. Here are some of the salient bits. (Apologies for the screenshots of code. WordPress.com has poor support for embedding source code. I’ve been waiting for gist embeds for a year.)

makePlaylistFromNowPlaying() – grabs the current track from spotify and fetches and displays the playlist from The Echo Nest.

fetchPlaylist() – The bulk of the work is done in the fetchPlaylist method. This method makes a JSONP call to the Echo Nest API to generate a playlist seeded with the Spotify artist. The Spotify artist ID needs to be massaged slightly. In the Echo Nest world Spotify artist IDs look like ‘spotify-WW:artist:12341234’ so we convert from the Spotify form to the Echo Nest form with the one-liner:

var artist_id = artist.uri.replace('spotify', 'spotify-WW');

Here’s the code:

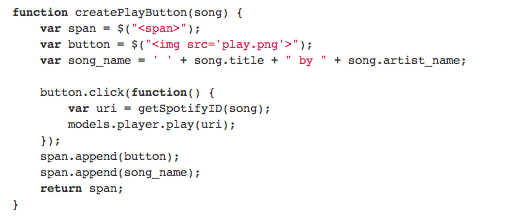

The function createPlayButton creates a doc element with a clickable play image, that when clicked, calls the playSong method, which grabs the Spotify Track ID from the song and tells Spotify to play it:

Update: I was using a deprecated method of playing tracks. I’ve updated the code and example to show the preferred method (Thanks @mager).

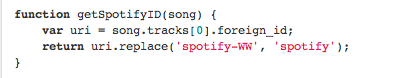

When we make the playlist call we include a bucket parameter requesting that Spotify IDs be included in the returned tracks. We need to reverse the ID mapping to go from the Echo Nest form of the ID to the Spotify form like so:

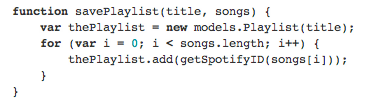
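Since the code above is only available as screenshots, here is a text sketch of the ID mapping in both directions. It follows the one-liner shown earlier; the function names are hypothetical, and anchoring the replacement to the start of the string is my own small precaution rather than something taken from the app.

```javascript
// Spotify form -> Echo Nest form, e.g.
// 'spotify:artist:12341234' -> 'spotify-WW:artist:12341234'
function toEchoNestId(spotifyUri) {
  return spotifyUri.replace(/^spotify:/, 'spotify-WW:');
}

// Echo Nest form -> Spotify form (the reverse mapping).
function toSpotifyUri(echoNestId) {
  return echoNestId.replace(/^spotify-WW:/, 'spotify:');
}

console.log(toEchoNestId('spotify:artist:12341234'));
console.log(toSpotifyUri('spotify-WW:track:12341234'));
```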

Saving the playlist as a Spotify playlist is a three-line function:

Installing and running the app

To install the app, follow these steps:

- Make sure you have a Spotify Developer Account

- Make a ‘playlister’ directory in your Spotify apps folder (on a Mac this is in ~/Spotify/playlister)

- Get the project files from github

- Copy the project files into the ‘playlister’ directory. The files are:

- index.html – the app (HTML and JS)

- manifest.json – describes your app to Spotify. The most important bit is the ‘RequiredPermissions’ section that lists ‘http://*echonest.com’. Without this entry, your app won’t be able to talk to The Echo Nest.

- js/jquery.min.js – jQuery

- styles.css – minimal CSS for the app

- play.png – the image for the play button

- icon.png – the icon for the app

To run the app type ‘spotify:app:playlister’ in the Spotify search bar. The app should appear in the main window.

Wrapping Up

Well, that’s it – a Spotify playlisting app that uses the Echo Nest playlist API to generate the playlist. Of course, this is just the tip of the iceberg. With the Spotify/Echo Nest connection you can easily make apps that use all of the Echo Nest artist data: artist news, reviews, blogs, images, bios etc, as well as all of the detailed Echo Nest song data: tempo, energy, danceability, loudness, key, mode etc. Spotify has created an awesome music app platform. With the Spotify/Echo Nest connection, this platform has just got more awesome.

Is music getting more profane?

This post has profanity in it. If you don’t like profanity, skip this post and instead just look at this picture of a cat. Otherwise, scroll on down to read about the rise and fall of profanity in music.

Now, on to the profanity …

It seems that every year the amount of profanity in music has increased. Today it seems that every other pop song drops the f-bomb, from P!nk’s ‘Fucking Perfect’ to Cee Lo’s ‘Fuck You’. I wondered if this apparent trend was real so I took a look at when certain obscene words started to show up in song titles to see if there are any obvious trends. Here’s the data:

The word ‘fuck’ doesn’t appear in a song title until 1977 when the band ‘The Way’ released ‘Fucking Police’. This monumental song in music history seems to be lost to the Internet age. The only evidence that this song ever existed is this MusicBrainz entry. The second song with ‘fuck’ in the title, ‘To Fuck The Boss’ by Blowfly, appeared in 1978. This sophomore effort is preserved on YouTube:

video on youtube: http://www.youtube.com/watch?v=J3wGresI0S4

The peak in usage of the word ‘fuck’ in song titles occurs in 2006 with 650 songs. Since then, usage has dropped off substantially; 2011 saw about the same ‘fuck’ frequency as 1999.

Usage of the word ‘shit’ has a similar profile:

The first usage of the word ‘shit’ in a song title was in 1966 in the song ‘I feel like homemade shit’ by The Fugs, which appeared on The Fugs’ first album (originally titled The Village Fugs Sing Ballads of Contemporary Protest, Point of Views, and General Dissatisfaction). Again the peak year of use is 2006, with 322 ‘shit’ songs that year.

Looking at these graphs, one would get the impression that use of profanity has grown substantially since the 70s and reached its peak a few years ago. However, there’s more to the data than that. Let’s look at a similar plot for a non-profane word:

This plot shows a very similar usage profile for the word ‘cat’, with substantial growth in use from the 70s until 2006 when it starts to taper off. (Yes, ‘cat’ was found in many songs before 1976, but I am not showing those in the plot). Why do ‘fuck’ and ‘cat’ have such similar profiles? It is not because their usage frequency has increased. It is because the total number of songs released increased year-over-year until 2006, after which the number of new releases per year has been dropping off. We see more ‘fuck’s and ‘cat’s in 2006 because there were more songs released in 2006 than in any other year. For a more accurate view we need to look at the relative usage changes.

This plot shows the usage of the word ‘fuck’ relative to the usage of other words in song titles. Even when we look at the use of the word ‘fuck’ relative to other words there is a clear increasing trend.
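To make the normalization concrete, here is a minimal sketch that divides a word's yearly count by the total number of title words for that year. The numbers are toy values chosen to show the effect, not the data behind the plots, and the function name is hypothetical.

```javascript
// Toy counts, for illustration only (not the real data).
const wordCounts = { 1980: 10, 2006: 40, 2011: 30 };          // titles containing the word
const totalTitleWords = { 1980: 1000, 2006: 2000, 2011: 1200 }; // all title words that year

// Relative usage: the word's count as a fraction of all title words per year.
function relativeUsage(counts, totals) {
  const result = {};
  for (const year of Object.keys(counts)) {
    const total = totals[year];
    result[year] = total > 0 ? counts[year] / total : 0;
  }
  return result;
}

console.log(relativeUsage(wordCounts, totalTitleWords));
```

In the toy data the raw count peaks in 2006 while the relative usage keeps rising through 2011, which is the kind of difference the normalized plot is meant to expose.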

Is music getting more profane? The answer is yes. The data show that the likelihood of a song with the word ‘fuck’ in the title has more than doubled since the 80s. And it doesn’t look like this trend has reached its peak yet. I think we shall continue to see a rise in use of language that gets a rise out of moms like Tipper Gore.

The Duke Listens! Archive

Before I joined the Echo Nest I worked in the research lab at Sun Microsystems. During my tenure at Sun I maintained a blog called ‘Duke Listens!’ where I wrote about things that I was interested in (mostly music recommendation, discovery, visualization, Music 2.0). When Oracle bought Sun a few years back they shut down the blogs for ex-employees and Duke Listens! was no more. However, a kind soul named John Henning spent quite a bit of time writing Perl scripts to capture all the Duke Listens data. He stuck it on a CD and gave it to me. It has been sitting on my computer for about a year. This weekend, while hanging out on the Music Hack Day IRC, I wrote some Python (thanks BeautifulSoup), reformatted the blog posts, created some indices and pushed out a static version of the blog.

You can now visit the Duke Listens! Archive and look through more than 1,000 blog posts that chronicle 5 years of Music 2.0 history (from 2004 to 2009). Some favorite posts:

- My first MIR-related post (June 2004)

- My first hardcore MIR post (January 2005)

- A very wrong prediction about Apple (January 2005)

- I discover Radio Paradise (April 2005)

- First Google Music rumor (June 2005)

- First Amazon Music rumor (August 2005)

- First Pandora Post (September 2005)

- First mention of The Echo Nest (October 2005)

- Why there’s no Google Music search (December 2005)

- First mention of Spotify (January 2007)

- My review of Spotify (November 2007)

- The Echo Nest goes live (March 2008)

- The Echo Nest launches their API (September 2008)

- My first look at iTunes Genius recommendations (September 2008)

- My last post (February 2009)

Waltzify – turn any 4/4 song into a waltz with Echo Nest remix

Posted by Paul in code, fun, The Echo Nest on March 23, 2012

Tristan Jehan, one of the founders here at the Echo Nest, has created a Python script that will take a 4/4 song and turn it into a waltz. The script uses Echo Nest remix, a Python library that lets you algorithmically manipulate music. Here’s an example of the output of the script when applied to the song ‘Fame’:

Turning a 4/4 song into a 3/4 song while still keeping the song musical is no easy feat. But Tristan’s algorithm does a pretty good job. Here’s what he does:

- Start with a 4/4 measure

- Cut the 4/4 measure into 2 bars with 2 beats in each bar

- Stretch the first beat of each bar by 100%

- Adjust the tempo to a typical waltz tempo
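The timing arithmetic behind these steps can be sketched as follows. This is not Tristan's script and does not use Echo Nest remix: it only computes the new beat durations, leaving the audio stretching aside. The function name and the target tempo are assumptions, since the post doesn't give a specific waltz tempo.

```javascript
// Given the four beat durations (seconds) of one 4/4 measure, return two
// waltz bars. Each bar holds a doubled first beat plus an unchanged second
// beat, so it spans three beat-lengths. Durations are then scaled so that
// one beat-length matches targetBpm (an assumed parameter).
function waltzifyMeasure(beatDurations, targetBpm) {
  if (beatDurations.length !== 4) {
    throw new Error('expected a measure of exactly 4 beats');
  }
  const bars = [];
  for (let i = 0; i < 4; i += 2) {
    // Stretching by 100% makes the first beat of the bar twice as long.
    bars.push([beatDurations[i] * 2, beatDurations[i + 1]]);
  }
  const total = bars.reduce((sum, bar) => sum + bar[0] + bar[1], 0);
  const targetTotal = (2 * 3 * 60) / targetBpm; // 2 bars x 3 beat-lengths each
  const scale = targetTotal / total;
  return bars.map((bar) => bar.map((duration) => duration * scale));
}

// A 120 BPM measure (0.5 s per beat) retimed to an assumed 180 BPM waltz.
console.log(waltzifyMeasure([0.5, 0.5, 0.5, 0.5], 180));
```

Each resulting bar lasts three beat-lengths at the target tempo, with the stretched first beat taking up two of them.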

Here’s a graphic that shows the progression:

Here are some more examples:

Tristan has made the waltzifier code available on github. If you want to make your own waltzes, get yourself an Echo Nest API key and grab Echo Nest remix and start enjoying the power of 3.