Archive for category research

The Playlist Survey

Playlists have long been a big part of the music experience. But making a good playlist is not always easy. We can spend lots of time crafting the perfect mix, but more often than not, in this iPod age, we are likely to toss on a pre-made playlist (such as an album), have the computer generate a playlist (with something like iTunes Genius) or (more likely) just hit the shuffle button and listen to songs at random. I pine for the old days when Radio DJs would play well-crafted sets – mixes of old favorites and the newest, undiscovered tracks – connected in interesting ways. These professionally created playlists magnified the listening experience. The whole was indeed greater than the sum of its parts.

The tradition of the old-style Radio DJ continues on Internet Radio sites like Radio Paradise. RP founder/DJ Bill Goldsmith says of Radio Paradise: “Our specialty is taking a diverse assortment of songs and making them flow together in a way that makes sense harmonically, rhythmically, and lyrically — an art that, to us, is the very essence of radio.” Anyone who has listened to Radio Paradise will come to appreciate the immense value that a professionally curated playlist brings to the listening experience.

I wish I could put Bill Goldsmith in my iPod and have him craft personalized playlists for me – playlists that make sense harmonically, rhythmically and lyrically, and that are customized to my music taste, mood and context. That, of course, will never happen. Instead I’m going to rely on computer algorithms to generate my playlists. But how good are computer-generated playlists? Can a computer really generate playlists as good as Bill Goldsmith’s, with his decades of knowledge about good music and his understanding of how to fit songs together?

To help answer this question, I’ve created The Playlist Survey, which collects information about the quality of playlists generated by a human expert, a computer algorithm and a random number generator. The survey presents a set of playlists; for each one, the subject rates its quality and guesses whether it was created by a human expert, a computer algorithm or a random number generator.

Bill Goldsmith and Radio Paradise have graciously contributed 18 months of historical playlist data from Radio Paradise to serve as the expert playlist data. That’s nearly 50,000 playlists and a quarter million song plays spread over nearly 7,000 different tracks.

The Playlist Survey also serves as a Radio DJ Turing test. Can a computer algorithm (or, for that matter, a random number generator) create playlists that people will think were created by a living, breathing music expert? What will it mean, for instance, if we learn that people really can’t tell the difference between expert playlists and shuffle play?
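A rater who guesses blindly among the three possible sources (human expert, computer algorithm, random) will be right about one time in three, so one simple way to frame the Turing-test question is to compare per-source guess accuracy against that chance level. This is a minimal sketch under assumptions: the `(true_source, guessed_source)` response format and the `guess_accuracy` helper are hypothetical, the answers below are made-up toy data rather than survey results, and this is not necessarily how the survey results will be analyzed.

```python
from collections import Counter

# Hypothetical labels for who produced a playlist.
SOURCES = ("human", "algorithm", "random")

def guess_accuracy(responses):
    """Fraction of correct guesses for each true playlist source.

    `responses` is a list of (true_source, guessed_source) pairs.
    """
    totals, correct = Counter(), Counter()
    for true_source, guessed in responses:
        totals[true_source] += 1
        if guessed == true_source:
            correct[true_source] += 1
    # Only report sources that actually appeared in the responses.
    return {s: correct[s] / totals[s] for s in SOURCES if totals[s]}

# Made-up toy answers for illustration only; these are not survey results.
toy = [("human", "human"), ("human", "human"),
       ("algorithm", "human"), ("algorithm", "algorithm"),
       ("random", "human"), ("random", "algorithm")]
chance = 1 / len(SOURCES)  # a blind guess is right one time in three
for source, acc in guess_accuracy(toy).items():
    print(f"{source:9s} {acc:.2f} (chance {chance:.2f})")
```

If accuracy on a given source stays near the chance level across many raters, people could not reliably tell that source apart from the others.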

Ben Fields and I will present the results of The Playlist Survey when we give Finding a path through the Jukebox – The Playlist Tutorial – at ISMIR 2010 in Utrecht in August. I’ll also follow up with detailed posts about the results here on this blog after the conference. I invite all of my readers to spend 10 to 15 minutes taking The Playlist Survey. Your efforts will help researchers better understand what makes a good playlist.

Take the Playlist Survey

Is Music Recommendation Broken? How can we fix it?

Posted by Paul in events, Music, recommendation, research on June 1, 2010

Save the date: 26th September 2010 for The Workshop on Music Recommendation and Discovery (Womrad 2010), being held in conjunction with ACM RecSys in Barcelona, Spain. At this workshop, members of the Recommender Systems, Music Information Retrieval, User Modeling, Music Cognition, and Music Psychology communities can meet, exchange ideas and collaborate.

Topics of interest

Topics of interest for Womrad 2010 include:

- Music recommendation algorithms

- Theoretical aspects of music recommender systems

- User modeling in music recommender systems

- Similarity measures and how to combine them

- Novel paradigms of music recommender systems

- Social tagging in music recommendation and discovery

- Social networks in music recommender systems

- Novelty, familiarity and serendipity in music recommendation and discovery

- Exploration and discovery in large music collections

- Evaluation of music recommender systems

- Evaluation of different sources of data/APIs for music recommendation and exploration

- Context-aware, mobile, and geolocation in music recommendation and discovery

- Case studies of music recommender system implementations

- User studies

- Innovative music recommendation applications

- Interfaces for music recommendation and discovery systems

- Scalability issues and solutions

- Semantic Web, Linking Open Data and Open Web Services for music recommendation and discovery

More info: Womrad 2010 Call for papers

Sweet Child O’ Mine – Vienna Style

Posted by Paul in Music, remix, research, The Echo Nest on May 24, 2010

I was wondering how far one could go with the time-stretching stuff and still make something musical. Here’s an attempt to turn a rock anthem into a waltz. It is a bit rough in a few places, especially the beginning – but I think it settles into a pretty nice groove.

Can you judge an album by its cover?

Album art has always been a big part of music. It is designed to catch your eye in a record store, and also perhaps to give you a hint as to what kind of music is inside. Music Information Retrieval scientists Jānis Lībeks and Douglas Turnbull from Swarthmore are interested in learning how much information an album cover can give you about the music. They are conducting a simple study: they present participants with a set of album covers, and the participants try to guess the genre of the artist based only upon what they see. It’s a genre identification task that uses album art as the feature set.

Anyone can participate in the study. It takes about 5 to 10 minutes, and it’s fun to look at an album cover and try to guess what’s inside. I’m looking forward to seeing the results of the study when they are published.

SoundBite for Songbird

Posted by Paul in Music, music information retrieval, research, visualization on March 23, 2010

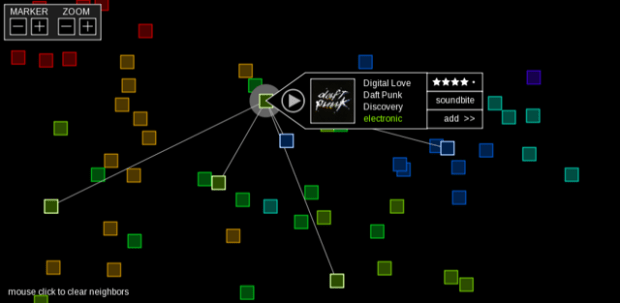

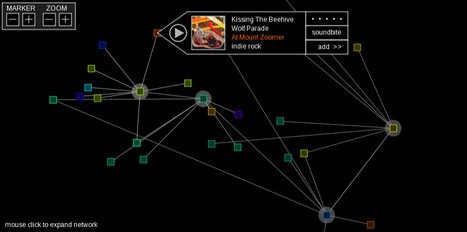

Steve Lloyd of Queen Mary University has released SoundBite for Songbird. (Update – if the link is offline and you are interested in trying SoundBite, just email soundbite@repeatingbeats.com.) SoundBite is a visual music explorer that uses music similarity to enable network-based music navigation and to create automatic “sounds like” playlists.

Here’s a video that shows SoundBite in action:

It’s a pretty neat plugin for Songbird. It’s great to see yet another project from the Music Information Retrieval community go mainstream.
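The post doesn’t say which similarity measure SoundBite uses, so what follows is only a generic sketch of how a “sounds like” playlist can be built from music similarity: rank every other track by the cosine similarity of its feature vector to a seed track’s vector and keep the closest few. The feature vectors, track names and the `sounds_like` helper are all hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def sounds_like(seed, library, n=3):
    """Return up to n track names most similar to the seed track (seed excluded)."""
    seed_vec = library[seed]
    candidates = [(cosine(seed_vec, vec), name)
                  for name, vec in library.items() if name != seed]
    candidates.sort(reverse=True)  # most similar first
    return [name for _, name in candidates[:n]]

# Hypothetical 3-dimensional audio features per track, each scaled to 0..1.
library = {
    "track_a": [0.9, 0.8, 0.7],
    "track_b": [0.85, 0.75, 0.8],
    "track_c": [0.1, 0.2, 0.9],
    "track_d": [0.8, 0.9, 0.6],
}
print(sounds_like("track_a", library, n=2))  # ['track_b', 'track_d']
```

Cosine similarity compares the direction of two feature vectors rather than their magnitude; a real system would choose its features and distance measure to match what listeners hear as similar.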

How much is a song play worth?

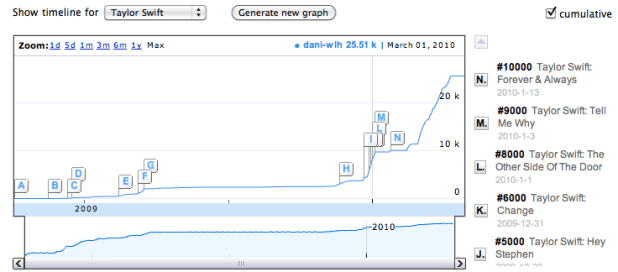

Posted by Paul in Music, research, The Echo Nest on March 1, 2010

Over the last 15 years or so, music listening has moved online. Now instead of putting a record on the turntable or a CD in the player, we fire up a music application like iTunes, Pandora or Spotify to listen to music. One interesting side-effect of this transition to online music is that there is now a lot of data about music listening behavior. Sites like Last.fm can keep track of every song that you listen to and offer you all sorts of statistics about your play history. Applications like iTunes can phone home your detailed play history. Listeners leave footprints on P2P networks, in search logs and every time they hit the play button on hundreds of music sites. People are blogging, tweeting and IMing about the concerts they attend, and the songs that they love (and hate). Every day, gigabytes of data about our listening habits are generated on the web.

With this new data come the entrepreneurs who sample the data, churn through it and offer it to those who are trying to figure out how best to market new music. Companies like Big Champagne, Bandmetrics, Musicmetric, Next Big Sound and The Echo Nest, among others, offer windows into this vast set of music data. However, there’s still a gap in our understanding of this data. Yes, we have vast amounts of data about music listening on the web, but that doesn’t mean we know how to interpret it, or how to tie it to the music marketplace. How strongly is a track play on a computer in London related to a sale of that track in a traditional record store in Iowa? How do searches on a P2P network for a new album relate to its chart position? Is a track illegally made available for free on a music blog hurting or helping music sales? How much does a Twitter mention of my song matter? There are many unanswered questions about how online music activity correlates with the more traditional ways of measuring artist success, such as music sales and chart position. These are important questions to ask, yet they have been impossible to answer because the people who have the new data (data from the online music world) generally don’t talk to the people who own the old data, and vice versa.

We think that understanding this relationship is key, so we are working to answer these questions via a research consortium between The Echo Nest, Yahoo! Research and UMG unit Island Def Jam. The consortium brings together three key elements. Island Def Jam is contributing deep and detailed sales data for its music properties, data that is not usually released to the public. Yahoo! Research brings detailed search data (millions and millions of queries for music) along with deep expertise in analyzing and understanding what search can predict. The Echo Nest brings our understanding of Internet music activity such as playcount data, friend and fan counts, blog mentions, reviews, mp3 posts and P2P activity, as well as second-generation metrics such as sentiment analysis, audio feature analysis and listener demographics. By combining the traditional sales data with the online music activity and search data, the consortium hopes to develop a predictive model for music by discovering correlations between Internet music activity and market reaction. With this model, we would be able to quantify the relative importance of a good review on a popular music website in terms of its predicted effect on sales or popularity. We would also be able to pinpoint and rank the influential areas and platforms on the music web where artists should spend more of their time and energy to reach a bigger fanbase. Combining anonymously observable metrics with internal sales and trend data will give keen insight into the true effects of the Internet music world.
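As a minimal sketch of the “discovering correlations” step, assume two aligned weekly series for a single track: one measure of online activity and one of sales. The Pearson correlation coefficient measures how closely the two move together, from -1 to 1. The series, the `pearson` helper and the numbers are hypothetical and made up for illustration; the consortium’s actual model is not described in this post, and a correlation on its own says nothing about cause and effect.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length, at least 2 points")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)

# Made-up weekly figures for one hypothetical track (illustration only).
blog_mentions = [2, 5, 9, 14, 11, 6]
units_sold = [120, 180, 310, 420, 390, 250]
print(f"r = {pearson(blog_mentions, units_sold):.3f}")
```

Shifting one series by a week, e.g. `pearson(blog_mentions[:-1], units_sold[1:])`, is one simple way to ask whether online activity leads sales rather than merely tracking them.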

There are some big brains working on building this model, including Echo Nest co-founder Brian Whitman (He’s a Doctor!) and the team from Yahoo! Research that authored the paper “What Can Search Predict”, which looks closely at how to use query volume to forecast opening box-office revenue for feature films. The Yahoo! Research team includes a stellar lineup: Yahoo! Principal Research Scientist Duncan Watts, whose research on the early-rater effect is a must-read for anyone interested in recommendation and discovery; Yahoo! Principal Research Scientist David Pennock, who focuses on algorithmic economics (be sure to read Greg Linden’s take on Seung-Taek Park and David’s paper Applying Collaborative Filtering Techniques to Movie Search for Better Ranking and Browsing); Jake Hofman, an expert in machine learning and data-driven modeling of complex systems; Research Scientist Sharad Goel (see his interesting paper Anatomy of the Long Tail: Ordinary People with Extraordinary Tastes); and Research Scientist Sébastien Lahaie, an expert in marketplace design and reputation systems (I’ve just added his paper Applying Learning Algorithms to Preference Elicitation to my reading list). This is a top-notch team.
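To make the idea of forecasting from query volume concrete, here is a sketch of one simple baseline: an ordinary least-squares fit of log revenue against log query volume, then used to forecast revenue for a new query volume. This is an assumed, illustrative model, not necessarily the one used in “What Can Search Predict”; the `fit_loglog` and `forecast` helpers are hypothetical and the data points are made up.

```python
import math

def fit_loglog(volumes, revenues):
    """Ordinary least-squares fit of log(revenue) = a + b * log(volume)."""
    xs = [math.log(v) for v in volumes]
    ys = [math.log(r) for r in revenues]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx      # slope in log-log space
    a = my - b * mx    # intercept
    return a, b

def forecast(a, b, volume):
    """Revenue predicted by the fitted model for a given query volume."""
    return math.exp(a + b * math.log(volume))

# Made-up (pre-release query volume, opening revenue in dollars) pairs.
volumes = [1_000, 5_000, 20_000, 80_000]
revenues = [2.0e6, 7.0e6, 2.1e7, 6.5e7]
a, b = fit_loglog(volumes, revenues)
print(f"b = {b:.2f}; forecast for 40,000 queries: ${forecast(a, b, 40_000):,.0f}")
```

Working in logs means the slope `b` reads as an elasticity: roughly the percentage change in revenue associated with a 1% change in query volume.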

I look forward to the day when we have a predictive model for music that will help us understand how this:

affects this:


AdMIRe 2010 Call for Papers

Posted by Paul in Music, music information retrieval, research on January 20, 2010

The organizers of AdMIRe 2010 (The 2nd International Workshop on Advances in Music Information Research) have just issued the call for papers. Details can be found on the workshop website: AdMIRe: International Workshop on Advances in Music Information Research 2010.

Visualizing the Artist Space

Posted by Paul in events, research, The Echo Nest, visualization on November 26, 2009

Take a look at Kurt’s weekend hack to make a visualization of the Echo Nest artist similarity space. Very nice. Can’t wait for Kurt to make it interactive and show artist info. Neat!

A little help from my mutant muppet friends

Poolcasting: an intelligent technique to customise music programmes for their audience

Posted by Paul in Music, music information retrieval, research on November 2, 2009

In preparation for his defense, Claudio Baccigalupo has placed his thesis online: Poolcasting: an intelligent technique to customise music programmes for their audience. It appears to be an in-depth study of playlisting.

Here’s the abstract:

Poolcasting is an intelligent technique to customise musical sequences for groups of listeners. Poolcasting acts like a disc jockey, determining and delivering songs that satisfy its audience. Satisfying an entire audience is not an easy task, especially when members of the group have heterogeneous preferences and can join and leave the group at different times. The approach of poolcasting consists in selecting songs iteratively, in real time, favouring those members who are less satisfied by the previous songs played.

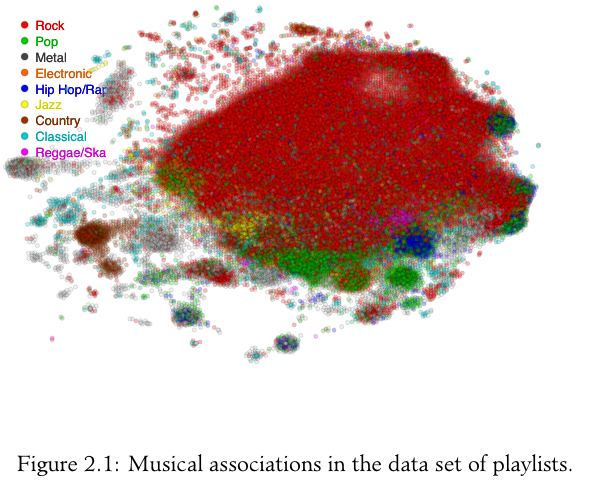

Poolcasting additionally ensures that the played sequence does not repeat the same songs or artists closely and that pairs of consecutive songs ‘flow’ well one after the other, in a musical sense. Good disc jockeys know from expertise which songs sound well in sequence; poolcasting obtains this knowledge from the analysis of playlists shared on the Web. The more two songs occur closely in playlists, the more poolcasting considers two songs as associated, in accordance with the human experiences expressed through playlists. Combining this knowledge and the music profiles of the listeners, poolcasting autonomously generates sequences that are varied, musically smooth and fairly adapted for a particular audience.

A natural application for poolcasting is automating radio programmes. Many online radios broadcast on each channel a random sequence of songs that is not affected by who is listening. Applying poolcasting can improve radio programmes, playing on each channel a varied, smooth and group-customised musical sequence. The integration of poolcasting into a Web radio has resulted in an innovative system called Poolcasting Web radio. Tens of people have connected to this online radio during one year providing first-hand evaluation of its social features. A set of experiments have been executed to evaluate how much the size of the group and its musical homogeneity affect the performance of the poolcasting technique.
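The abstract describes three ingredients: song-to-song association learned from how closely songs co-occur in shared playlists, iterative selection that favours the listener who has been least satisfied so far, and a rule against repeating songs or artists too closely. The toy sketch below combines them. It is not Baccigalupo’s algorithm: the scoring rule (target listener’s preference plus association count), the satisfaction update, the `window` and `gap` parameters, the helper names and all of the data are assumptions for illustration.

```python
from collections import Counter
from itertools import combinations

def association_counts(playlists, window=1):
    """Count how often two songs appear within `window` positions of each other."""
    counts = Counter()
    for pl in playlists:
        for i, j in combinations(range(len(pl)), 2):
            if j - i <= window:
                counts[frozenset((pl[i], pl[j]))] += 1
    return counts

def next_song(prev, pool, prefs, satisfaction, assoc, blocked_artists, artist_of):
    """Pick the song that best serves the least-satisfied listener and flows from prev."""
    # Ties go to whichever listener appears first in the dict.
    target = min(satisfaction, key=satisfaction.get)
    best, best_score = None, float("-inf")
    for song in pool:
        if artist_of[song] in blocked_artists:
            continue  # do not repeat an artist too closely
        # Target listener's preference plus co-occurrence with the previous song.
        score = prefs[target].get(song, 0) + assoc[frozenset((prev, song))]
        if score > best_score:
            best, best_score = song, score
    return best

def poolcast(start, length, songs, prefs, assoc, artist_of, gap=1):
    """Build a sequence of up to `length` songs, never repeating a song."""
    played = [start]
    satisfaction = {user: p.get(start, 0) for user, p in prefs.items()}
    while len(played) < length:
        blocked = {artist_of[s] for s in played[-gap:]}
        pool = [s for s in songs if s not in played]
        song = next_song(played[-1], pool, prefs, satisfaction, assoc, blocked, artist_of)
        if song is None:
            break  # nothing left that satisfies the constraints
        played.append(song)
        # Every listener accumulates satisfaction from each song played.
        for user, p in prefs.items():
            satisfaction[user] += p.get(song, 0)
    return played

# Hypothetical toy data: artists, two listeners' preferences, shared playlists.
artist_of = {"s1": "A", "s2": "A", "s3": "B", "s4": "C"}
prefs = {"ann": {"s1": 3, "s2": 2, "s4": 1}, "bob": {"s3": 3, "s4": 2}}
shared = [["s1", "s3", "s4"], ["s2", "s3", "s4"], ["s3", "s4", "s1"]]
assoc = association_counts(shared)
print(poolcast("s1", 3, ["s1", "s2", "s3", "s4"], prefs, assoc, artist_of))  # ['s1', 's3', 's4']
```

In this run, bob is served first because the opening song only pleased ann; once both are tied, the strong co-occurrence of s3 and s4 in the shared playlists decides the third song.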

I’m quite interested in this topic so it looks like my reading list is set for the week.