Archive for category The Echo Nest

How much is a song play worth?

Posted by Paul in Music, research, The Echo Nest on March 1, 2010

Over the last 15 years or so, music listening has moved online. Now instead of putting a record on the turntable or a CD in the player, we fire up a music application like iTunes, Pandora or Spotify to listen to music. One interesting side-effect of this transition to online music is that there is now a lot of data about music listening behavior. Sites like Last.fm can keep track of every song that you listen to and offer you all sorts of statistics about your play history. Applications like iTunes can phone home your detailed play history. Listeners leave footprints on P2P networks, in search logs and every time they hit the play button on hundreds of music sites. People are blogging, tweeting and IMing about the concerts they attend, and the songs that they love (and hate). Every day, gigabytes of data about our listening habits are generated on the web.

With this new data come the entrepreneurs who sample the data, churn through it and offer it to those who are trying to figure out how best to market new music. Companies like Big Champagne, Bandmetrics, Musicmetric, Next Big Sound and The Echo Nest among others offer windows into this vast set of music data. However, there’s still a gap in our understanding of how to interpret this data. Yes, we have vast amounts of data about music listening on the web, but that doesn’t mean we know how to interpret this data, or how to tie it to the music marketplace. How much is a track play on a computer in London related to a sale of that track in a traditional record store in Iowa? How do searches on a P2P network for a new album relate to its chart position? Is a track illegally made available for free on a music blog hurting or helping music sales? How much does a Twitter mention of my song matter? There are many unanswered questions about how online music activity correlates with the more traditional ways of measuring artist success such as music sales and chart position. These are important questions to ask, yet they have been impossible to answer because the people who have the new data (data from the online music world) generally don’t talk to the people who own the old data and vice versa.

We think that understanding this relationship is key and so we are working to answer these questions via a research consortium between The Echo Nest, Yahoo! Research and UMG unit Island Def Jam. In this consortium, three key elements are being brought together. Island Def Jam is contributing deep and detailed sales data for its music properties – sales data that is not usually released to the public. Yahoo! Research brings detailed search data (with millions and millions of queries for music) along with deep expertise in analyzing and understanding what search can predict. The Echo Nest brings our understanding of Internet music activity such as playcount data, friend and fan counts, blog mentions, reviews, mp3 posts and p2p activity, as well as second generation metrics such as sentiment analysis, audio feature analysis and listener demographics. With the traditional sales data combined with the online music activity and search data, the consortium hopes to develop a predictive model for music by discovering correlations between Internet music activity and market reaction. With this model, we would be able to quantify the relative importance of a good review on a popular music website in terms of its predicted effect on sales or popularity. We would be able to pinpoint and rank the influential areas and platforms on the music web where artists should spend more of their time and energy to reach a bigger fanbase. Combining anonymously observable metrics with internal sales and trend data will give keen insight into the true effects of the Internet music world.

There are some big brains working on building this model: Echo Nest co-founder Brian Whitman (He’s a Doctor!) and the team from Yahoo! Research that authored the paper “What Can Search Predict”, which looks closely at how to use query volume to forecast opening box-office revenue for feature films. The Yahoo! Research team includes a stellar lineup: Yahoo! Principal Research Scientist Duncan Watts, whose research on the early-rater effect is a must read for anyone interested in recommendation and discovery; Yahoo! Principal Research Scientist David Pennock, who focuses on algorithmic economics (be sure to read Greg Linden’s take on Seung-Taek Park and David’s paper Applying Collaborative Filtering Techniques to Movie Search for Better Ranking and Browsing); Jake Hoffman, expert in machine learning and data-driven modeling of complex systems; Research Scientist Sharad Goel (see his interesting paper on Anatomy of the Long Tail: Ordinary People with Extraordinary Tastes) and Research Scientist Sébastien Lahaie, expert in marketplace design and reputation systems (I’ve just added his paper Applying Learning Algorithms to Preference Elicitation to my reading list). This is a top-notch team.

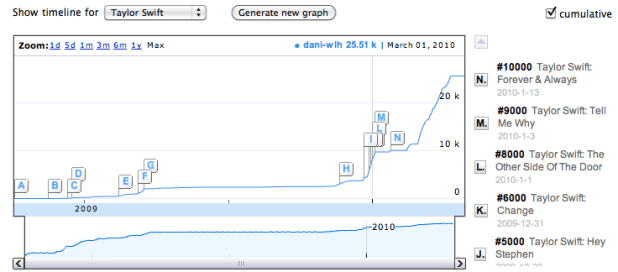

I look forward to the day when we have a predictive model for music that will help us understand how this:

affects this:

.

Here comes the antiphon

Posted by Paul in Music, remix, The Echo Nest on February 25, 2010

I’m gearing up for the SXSW panel on remix I’m giving in a couple of weeks. I thought I should veer away from ‘science experiments’ and try to create some remixes that sound musical. Here’s one where I’ve used remix to apply a little bit of a pre-echo to ‘Here Comes the Sun’. It gives it a little bit of a call and answer feel:

The core (choir?) code is thus:

    last = None
    for bar in self.bar:
        cur_data = self.input[bar]
        if last is not None:
            # blend the current bar with the previous one
            last_data = self.input[last]
            mixed_data = audio.mix(cur_data, last_data, mix=.3)
            out.append(mixed_data)
        else:
            # the first bar has nothing to blend with
            out.append(cur_data)
        last = bar

Echo Nest Client Library for the Android Platform

Posted by Paul in code, Music, The Echo Nest on February 24, 2010

The Echo Nest is participating in the annual mobdev contest for the Mobile Application Development (mobdev) course at Olin College offered by Mark L. Chang. Already, our participation is bearing fruit. Ilari Shafer, one of our course assistants, created a version of the Echo Nest Java client library that runs on Android. You can fetch it here: echo-nest-android-java-api [zip].

I spent a few hours yesterday talking to the mobdev class. The students had lots of great questions and lots of really interesting ideas on how to use the Echo Nest APIs to build interesting mobile apps. I can’t wait to see what they build in 10 days.

Python and Music at PyCon 2010

Posted by Paul in code, Music, The Echo Nest on February 15, 2010

If you are lucky enough to be heading to PyCon this week and are interested in hacking on music, there are two talks that you should check out:

DJing in Python: Audio processing fundamentals – In this talk Ed Abrams talks about his experiences building a real-time audio mixing application in Python. I caught a dry-run of this talk at the local Python SIG – lots of info packed into this 30 minute talk. One of the big takeaways from this talk is the result of Ed’s evaluation of a number of Pythonic audio processing libraries. Sunday 01:15pm, Centennial I

Remixing Music Pythonically – This is a talk by Echo Nest friend and über-developer Adam Lindsay. In this talk Adam talks about the Echo Nest remix library. Adam, a frequent contributor to remix, will offer details on the concise expressiveness offered when editing multimedia driven by content-based features, and some insights on what Pythonic magic did and didn’t work in the development of the modules. Audio and video examples of the fun-yet-odd outputs that are possible will be shown. Sunday 01:55pm, Centennial I

The schedulers at PyCon have done a really cool thing and have put the talks back to back in the same room. Also, keep your eye out for the Hacking on Music OpenSpace.

Name That Artist

Posted by Paul in fun, Music, The Echo Nest, web services on February 14, 2010

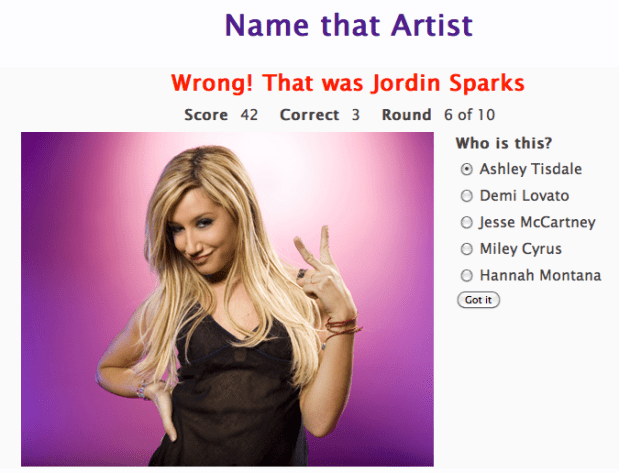

While watching the Olympics over the weekend, I wrote a little web-app game that uses the new Echo Nest get_images call. The game is dead simple. You have to identify the artists in a series of images. You get to choose a level of difficulty and the style of your favorite music, and if you get a high score, your name and score will appear on the Top Scores board. Instead of using a simple score of percent correct, the score gets adjusted by a number of factors. There’s a time bonus, so if you answer fast you get more points; there’s a difficulty bonus, so if you identify unfamiliar artists you get more points; and if you choose the ‘Hard’ level of difficulty you also get more points for every correct answer. The absolute highest score possible is 600, but any score above 200 is rather awesome.

The app is extremely ugly (I’m a horrible designer), but it is fun – and it is interesting to see how similar artists from a single genre appear. Give it a go, post some high scores and let me know how you like it.

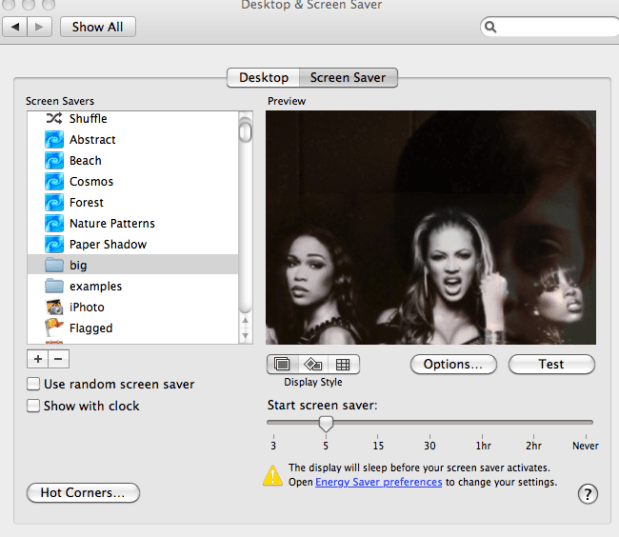

Jason’s cool screensaver

Posted by Paul in code, fun, Music, The Echo Nest on February 13, 2010

I noticed some really neat images flowing past Jason’s computer over the last week. Whenever Jason was away from his desk, our section of the Echo Nest office would be treated to a very interesting slideshow – mostly of musicians (with an occasional NSFW image (but hey, everything is SFW here at The Echo Nest)). Since Jason is a photographer I first assumed that these were pictures that he took of friends or shows he attended – but Jason is a classical musician and the images flowing by were definitely not of classical musicians – so I was puzzled enough to ask Jason about it. Turns out, Jason did something really cool. He wrote a Python program that gets the top hotttt artists from the Echo Nest, and then collects images for all of those artists and their similars – yielding a huge collection of artist images. He then filters them to include only high res images (thumbnails don’t look great when blown up to screen saver size). He then points his Mac OS Slideshow screensaver at the image folder and voilà – a nifty music-oriented screensaver.

Jason has added his code to the pyechonest examples. So if you are interested in having a nifty screen saver, grab Pyechonest, get an Echo Nest API key if you don’t already have one and run the get_images example. Depending upon how many images you want, it may take a few hours to run. To get 100K images, plan to run it overnight. Once you’ve done that, point your Pictures screensaver at the image folder and you’re done.

The 6th Beatle

Posted by Paul in Music, recommendation, The Echo Nest on February 12, 2010

When I test-drive a new music recommender I usually start by getting recommendations based upon ‘The Beatles’ (If you like the Beatles, you may like XX). Most recommenders give results that include artists like John Lennon, Paul McCartney, George Harrison, The Who, The Rolling Stones, Queen, Pink Floyd, Bob Dylan, Wings, The Kinks and Beach Boys. These recommendations are reasonable, but they probably won’t help you find any new music. The problem is that these recommenders rely on the wisdom of the crowds and so an extremely popular artist like The Beatles tends to get paired up with other popular artists – the result being that the recommender doesn’t tell you anything that you don’t already know. If you are trying to use a recommender to discover music that sounds like The Beatles, these recommenders won’t really help you – Queen may be an OK recommendation, but chances are good that you already know about them (and The Rolling Stones and Bob Dylan, etc.) so you are not finding any new music.

At The Echo Nest we don’t base our artist recommendations solely on the wisdom of crowds. Instead we draw upon a number of different sources (including a broad and deep crawl of the music web). This helps us avoid the popularity biases that lead to ineffectual recommendations. For example, looking at some of the Echo Nest recommendations based upon the Beatles we find some artists that you may not see with a wisdom of the crowds recommender – artists that actually sound like the Beatles – not just artists that happened to be popular at the same time as the Beatles. Echo Nest recommendations include artists such as The Beau Brummels, The Dukes of Stratosphear, Flamin’ Groovies and an artist named Emitt Rhodes. I had never ever seen Emitt Rhodes occur in any recommendation based on the Beatles, so I was a bit skeptical, but I took a listen and this is what I found:

Update: Don Tillman points to this Beatle-esque track:

Emitt could be the sixth Beatle. I think it’s a pretty cool recommendation.

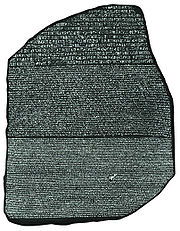

Introducing Project Rosetta Stone

Posted by Paul in code, Music, The Echo Nest, web services on February 10, 2010

Here at The Echo Nest we want to make the world easier for music app developers. We want to solve as many as possible of the problems that developers face when writing music apps so that the developers can focus on building cool stuff instead of worrying about the basic plumbing. One of the problems faced by music application developers is the issue of ID translation. You may have a collection of music that is in one ID space (Musicbrainz for instance) but you want to use a music service (such as the Echo Nest’s Artist Similarity API) that uses a completely different ID space. Before you can use the service you have to translate your Musicbrainz IDs into Echo Nest IDs, make the similarity call and then, since the artist similarity call returns Echo Nest IDs, you have to then map the IDs back into the Musicbrainz space. The mapping from one ID space to another takes time (perhaps even requiring another API method call to ‘search_artists’) and is a potential source of error. Mapping artist names can be tricky – for example there are artists like Duran Duran Duran, Various Artists (the electronic musician), DJ Donna Summer, and Nirvana (the 60’s UK band) that will trip up even sophisticated name resolvers.

We hope to eliminate some of the trouble with mapping IDs with Project Rosetta Stone. Project Rosetta Stone is an update to the Echo Nest APIs to support non-Echo-Nest identifiers. The goal for Project Rosetta Stone is to allow a developer to use any music id from any music API with the Echo Nest web services. For instance, if you have a Musicbrainz ID for weezer, you can call any of the Echo Nest artist methods with the Musicbrainz ID and get results. Additionally, methods that return IDs can be told to return them in different ID spaces. So, for example, you can call artist.get_similar and specify that you want the similar artist results to include Musicbrainz artist IDs.

Dealing with the many different music ID formats

One of the issues we have to deal with when trying to support many ID spaces is that the IDs come in many shapes and sizes. Some IDs like Echo Nest and Musicbrainz are self-identifying URLs (self-identifying means that you can tell what the ID space is and the type of the item being identified, whether it is an artist, track, release, playlist etc.) and some IDs (like Spotify) use self-identifying URNs. However, many ID spaces are non-self-identifying – for instance a Napster Artist ID is just a simple integer. Note also that many of the ID spaces have multiple renderings of IDs. Echo Nest has short form IDs (AR7BGWD1187FB59CCB and TR123412876434), Spotify has URL-form IDs (http://open.spotify.com/artist/6S58b0fr8TkWrEHOH4tRVu) and Musicbrainz IDs are often represented with just the UUID fragment (bd0303a-f026-416f-a2d2-1d6ad65ffd68). Note that Spotify and Napster are used in these examples just to demonstrate the wide range of ID formats.

We want to make all of the ID types self-identifying. IDs that are already self-identifying can be used without change. However, non-self-identifying ID types need to be transformed into a URN-style syntax of the form: vendor:type:vendor-specific-id. So, for example, a Napster track ID would be of the form: ‘napster:track:12345678’
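To make the vendor:type:vendor-specific-id convention concrete, here is a minimal Python sketch of building and splitting such IDs. The helper names `to_urn` and `parse_urn` are made up for this illustration and are not part of pyechonest or any other Echo Nest client.

```python
def to_urn(vendor, item_type, vendor_id):
    """Build a self-identifying ID of the form vendor:type:vendor-specific-id."""
    return "%s:%s:%s" % (vendor, item_type, vendor_id)


def parse_urn(urn):
    """Split a URN-style ID into (vendor, type, vendor-specific-id).

    Only the first two colons are treated as separators, so a
    vendor-specific ID that itself contains colons is kept whole.
    """
    parts = urn.split(":", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError("not a vendor:type:id style ID: %r" % urn)
    return tuple(parts)


# A bare Napster integer becomes self-identifying once it is wrapped.
print(to_urn("napster", "track", 12345678))
print(parse_urn("musicbrainz:artist:6fe07aa5-fec0-4eca-a456-f29bff451b04"))
```

Splitting on only the first two colons is a deliberate choice here: the vendor-specific part is treated as opaque, so the sketch never has to know what each vendor's IDs look like.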

What do we support now? In this first release of Rosetta Stone we are supporting URN-style Musicbrainz IDs (probably one of the most requested enhancements to the Echo Nest APIs has been to include support for Musicbrainz). This means that any Echo Nest API method that accepts or returns an Echo Nest ID can also take a Musicbrainz ID. For example, to get recent audio found on the web for Weezer, you could make the call with the URN form of the Musicbrainz ID for Weezer:

    http://developer.echonest.com/api/get_audio
        ?api_key=5ZAOMB3BUR8QUN4PE
        &id=musicbrainz:artist:6fe07aa5-fec0-4eca-a456-f29bff451b04
        &rows=2&version=3

For a call such as artist.get_similar, if you are using Musicbrainz IDs for input, it is likely that you’ll want your results in the form of Musicbrainz IDs too. To do this, just add the bucket=id:musicbrainz parameter to indicate that you want Musicbrainz IDs included in the results:

    http://developer.echonest.com/api/get_similar
        ?api_key=5ZAOMB3BUR8QUN4PE
        &id=musicbrainz:artist:6fe07aa5-fec0-4eca-a456-f29bff451b04
        &rows=10&version=3
        &bucket=id:musicbrainz

    <similar>
      <artist>
        <name>Death Cab for Cutie</name>
        <id>music://id.echonest.com/~/AR/ARSPUJF1187B9A14B8</id>
        <id type="musicbrainz">musicbrainz:artist:0039c7ae-e1a7-4a7d-9b49-0cbc716821a6</id>
        <rank>1</rank>
      </artist>
      <!-- more omitted -->
    </similar>
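If you just want the Musicbrainz IDs out of a response like this, something along the following lines should do. It is a rough sketch using Python's standard ElementTree module against the fragment shown above; a full response may wrap the similar block in other elements, which is why the sketch searches for artist elements at any depth. The function name `musicbrainz_ids` is made up for the example.

```python
import xml.etree.ElementTree as ET

# The fragment from this post, trimmed to a single artist.
SAMPLE = """
<similar>
  <artist>
    <name>Death Cab for Cutie</name>
    <id>music://id.echonest.com/~/AR/ARSPUJF1187B9A14B8</id>
    <id type="musicbrainz">musicbrainz:artist:0039c7ae-e1a7-4a7d-9b49-0cbc716821a6</id>
    <rank>1</rank>
  </artist>
</similar>
"""


def musicbrainz_ids(xml_text):
    """Return (artist name, Musicbrainz ID) pairs found in the XML text."""
    root = ET.fromstring(xml_text.strip())
    pairs = []
    # iter() walks the whole tree, so extra wrapper elements do not matter.
    for artist in root.iter("artist"):
        name = artist.findtext("name")
        for id_node in artist.findall("id"):
            # The Echo Nest ID has no type attribute; skip it.
            if id_node.get("type") == "musicbrainz":
                pairs.append((name, id_node.text))
    return pairs


print(musicbrainz_ids(SAMPLE))
```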

Limiting results to a particular ID space – sometimes you are working within a particular ID space and you only want to include items that are in that space. To support this, Rosetta Stone adds an idlimit parameter to some of the calls. If this is set to ‘Y’ then results are constrained to be within the given ID space. This means that if you want to guarantee that only Musicbrainz artists are returned from the get_top_hottt_artists call you can do so like this:

    http://developer.echonest.com/api/get_top_hottt_artists
        ?api_key=5ZAOMB3BUR8QUN4PE
        &rows=20
        &version=3
        &bucket=id:musicbrainz
        &idlimit=Y
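The calls in this post are plain query strings, so they are easy to assemble in code. Here is a small sketch that only builds the URL and does not make any request. The `build_call` helper and the `YOUR_API_KEY` placeholder are assumptions for illustration; the method and parameter names are the ones used in the examples above.

```python
from urllib.parse import urlencode

API_BASE = "http://developer.echonest.com/api/"


def build_call(method, api_key, **params):
    """Assemble the URL for one of the version 3 calls shown in this post."""
    query = [("api_key", api_key)]
    # Sort the optional parameters so the same call always yields the same URL.
    query.extend(sorted(params.items()))
    query.append(("version", 3))
    # Leave ':' unescaped so IDs read the same as in the examples.
    return API_BASE + method + "?" + urlencode(query, safe=":")


print(build_call("get_top_hottt_artists", "YOUR_API_KEY",
                 rows=20, bucket="id:musicbrainz", idlimit="Y"))
```

The same helper covers the earlier examples: pass `id="musicbrainz:artist:..."` to `get_audio` or `get_similar` and the URN-style ID goes into the query string unchanged.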

What’s Next? In this initial release of Rosetta Stone we’ve built the infrastructure for fast ID mapping. We are currently supporting mapping between Echo Nest Artist IDs and Musicbrainz IDs. We will be adding support for mapping at the track level soon – and keep an eye out for the addition of commercial ID spaces that will allow easy mapping between Echo Nest IDs and those associated with commercial music service providers.

In the near future we’ll be rolling out support to the various clients (pyechonest and the Java client API) to support Rosetta Stone.

As always, we love any feedback and suggestions to make writing music apps easier. So email me (paul@echonest.com) or leave a comment here.

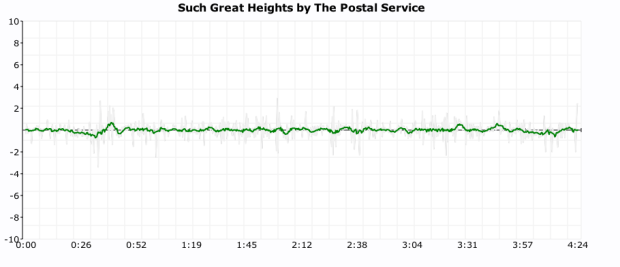

Revisiting the click track

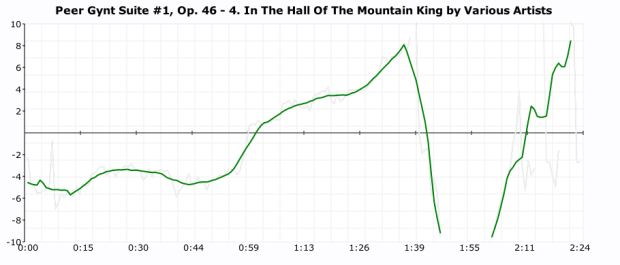

Posted by Paul in Music, The Echo Nest on February 8, 2010

One of my more popular posts from last year was ‘In Search of the Click track‘ where I posted some plots showing the tempo deviations from the average tempo for a number of songs. From these plots it was pretty easy to see which songs had a human setting the beat and which songs had a machine setting the beat (be it a click track, drum machine or an engineer fitting the song to a tempo grid). I got lots of feedback along with many requests to generate click plots for particular drummers. It was a bit of work to generate a click plot (find the audio, upload it to the analyzer, get the results, normalize the data, generate the plot, convert it to an image and finally post it to the web) so I didn’t create too many more.

Last week Brian released the alpha version of a nifty new set of APIs that give access to the analysis data for millions of tracks. Over the weekend, I wrote a web application that takes advantage of the new APIs to make it easy to get a click plot for just about any track. Just type in the name of the artist and track and you’ll get the click plot – you don’t have to find the audio or upload it or wrestle with python or gnuplot. The web app is here:

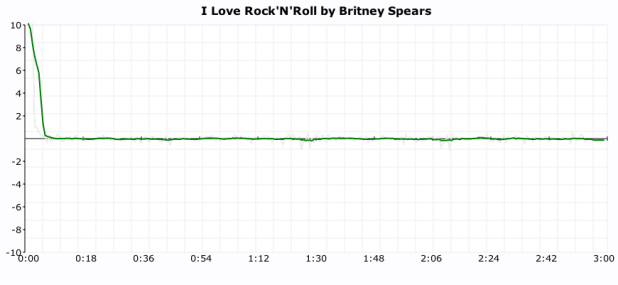

Here are some examples of the output. First up is a plot of ‘I Love Rock ’n’ Roll’ by Britney Spears. The plot shows the tempo deviations from the average song tempo over the course of the song. The plot shows that there’s virtually no deviation at all. Britney is using a machine to set the beat.
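For the curious, the data behind a click plot can be sketched in a few lines of Python. This is not the code from the web app, just an illustration under some assumptions: the input is a list of beat start times in seconds, the tempo at each beat is taken from the gap to the next beat, and the average tempo is the number of beats elapsed divided by the minutes elapsed.

```python
def click_plot_data(beat_times):
    """Turn beat start times (seconds) into per-beat tempo deviations (BPM)."""
    # Gap between each beat and the one that follows it.
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    if not intervals:
        return []
    # Instantaneous tempo at each beat, in beats per minute.
    tempos = [60.0 / gap for gap in intervals]
    # Average tempo over the whole song: beats elapsed / minutes elapsed.
    average = 60.0 * len(intervals) / (beat_times[-1] - beat_times[0])
    return [tempo - average for tempo in tempos]


# A perfectly steady 120 BPM beat gives a flat line at zero.
print(click_plot_data([0.0, 0.5, 1.0, 1.5]))
```

A machine-driven track produces values that hug zero, while a human drummer produces the humps and drifts seen in the plots that follow.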

Now compare Britney’s plot to the click plot for the song ‘So Lonely’ by the Police:

Here we see lots of tempo variation. There are four main humps, each corresponding to a chorus where Police drummer Stewart Copeland accelerates the beat. Over the course of the song there is an increase in the average tempo that builds tension and excitement. In this song the tempo is maintained by a thinking, feeling human, whereas Britney is using a coldhearted, sterile machine to set the tempo for her song.

For some types of music, machine generated tempos are appropriate. Electronica, synthpop and techno benefit from an ultra-precise tempo. Some examples are Kraftwerk and The Postal Service:

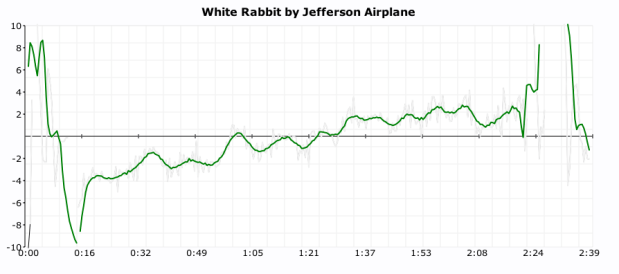

But for many songs, the tempo variations add much to the song. The gradual speed up in Jefferson Airplane’s White Rabbit:

and the crescendo in ‘In the Hall of the Mountain King’:

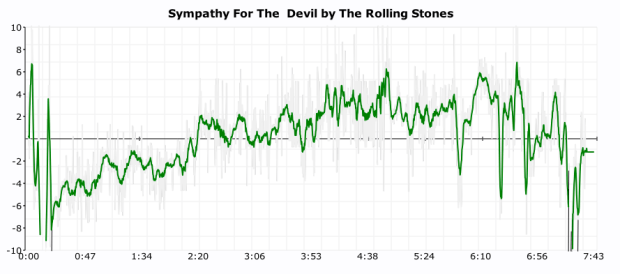

And in the Rolling Stones’ Sympathy for the Devil:

It is also fun to use the click plots to see how steady drummers are (and to see which ones use clicktracks). Some of my discoveries:

Keith Moon used a click track on ‘Won’t Get Fooled Again’:

(You can see him wearing headphones in this video)

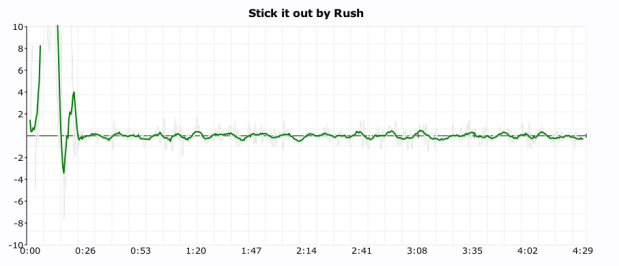

It looks like Neil Peart uses a click track on Stick it out:

Art Blakey can really lay it down without a click track (he really looks like a machine here):

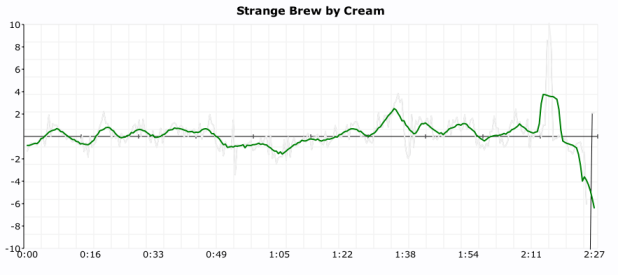

As does Ginger Baker (or does he use a click?):

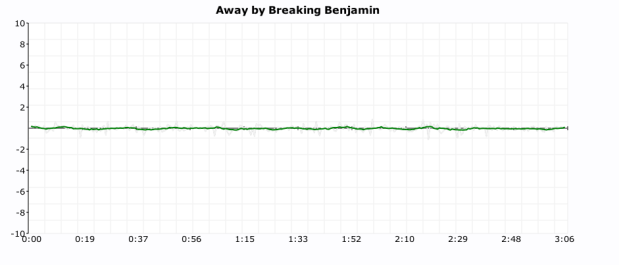

It seems that all of the nümetal bands use clicks:

Breaking Benjamin

Nickelback

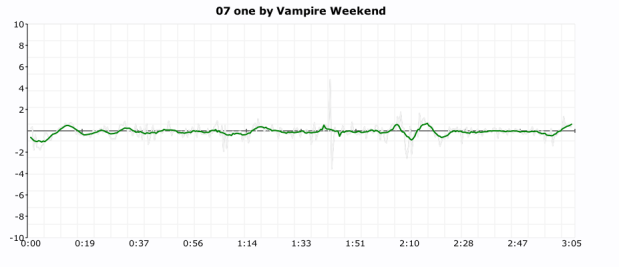

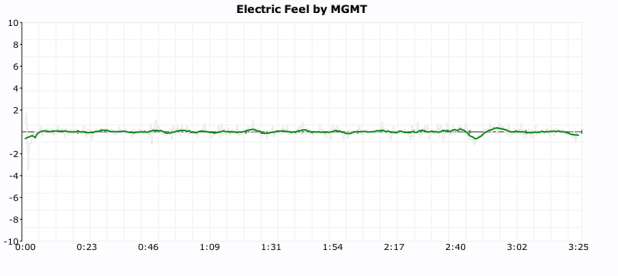

As do some of the indie bands:

The Decemberists

I find it interesting to look at the various click plots. It gives me a bit more insight into the band and the drummer. However, some types of music, such as progressive rock with its frequent time signature and tempo changes, are really hard to plot – which is too bad since many of the best drummers play prog rock.

In addition to the plots, I attempted a couple of objective metrics that can be used to measure the machine-like quality of a drummer. The Machine Score is a measure of how often the beat is within a 2 BPM window of the average tempo of the song. Higher numbers indicate that the drummer is more like a machine. This metric is a bit troublesome for songs that change tempo: a song that changes tempo often may have a lower machine score than it should. The Longest run of machine-like drumming is the count of the longest stretch of continuous beats that are within 1 BPM of the average tempo of the song. Long runs (over a couple hundred beats) tend to indicate that a machine is in charge of the beat. Both these metrics are somewhat helpful in determining whether or not the drumming is live, but I still find that the best determinant is to look at the plot. More work is needed here.
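Here is a rough Python sketch of the two metrics as described above. It is not the code behind the click plotter, and it makes a few assumptions: the input is a list of per-beat tempos in BPM, "within a 2 BPM window" is read as no more than 2 BPM above or below the average, and the Machine Score is reported as a fraction between 0 and 1.

```python
def machine_score(tempos, window=2.0):
    """Fraction of beats whose tempo is within `window` BPM of the average."""
    if not tempos:
        return 0.0
    average = sum(tempos) / len(tempos)
    close = sum(1 for t in tempos if abs(t - average) <= window)
    return close / len(tempos)


def longest_machine_run(tempos, window=1.0):
    """Length of the longest stretch of consecutive beats within `window` BPM."""
    if not tempos:
        return 0
    average = sum(tempos) / len(tempos)
    best = run = 0
    for t in tempos:
        # Extend the current run, or reset it when a beat strays too far.
        run = run + 1 if abs(t - average) <= window else 0
        best = max(best, run)
    return best


# Five steady beats and one rushed one.
tempos = [120, 120, 120, 126, 120, 120]
print(machine_score(tempos), longest_machine_run(tempos))
```

Both functions compare against a single song-wide average, which is exactly why a song with a deliberate tempo change scores lower than it should.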

The new click plotter was a fun weekend project. I got to use rgraph – an HTML5 canvas graph library (thanks to Ryan for suggesting client-side plotting), along with cherrypy, pound and, of course, Brian’s new web services. The whole thing is just 500 lines of code.

I hope you enjoy generating your own click plots. If you find some interesting ones post a link here and I’ll add them to the Gallery of Drummers.

Roundup of Echo Nest hacks at Stockholm Music Hack Day

Posted by Paul in events, Music, The Echo Nest on February 1, 2010

The first music hack day of the new decade is in the can. There were lots of great hacks produced over the weekend. Here are some of the hacks that used the Echo Nest APIs:

- Proxim.fm – PresentRadio is a QT4 application using the open source Last.fm libraries, it has metadata views and muchos dataporn provided by Echonest. It looks out for local bluetooth devices and will seamlessly switch to the new station when new people arrive/leave without interrupting the track.

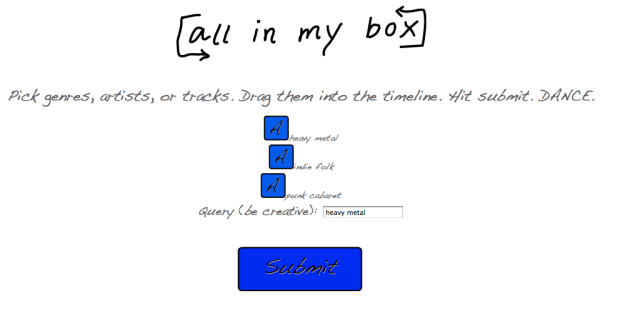

- All In My Box – Allows non-djs to whip up sweet 1 hour mixes in seconds (err, minutes, considering the time to actually beat-match the songs on the server). The drag and drop interface allows users to choose genres and artists and drop them onto a timeline. We use the Echo Nest api to get tracks from the selected genres and artists and stitch them together to create a mix that flows from artist to genre and back again. Echo Nest Prize Winner

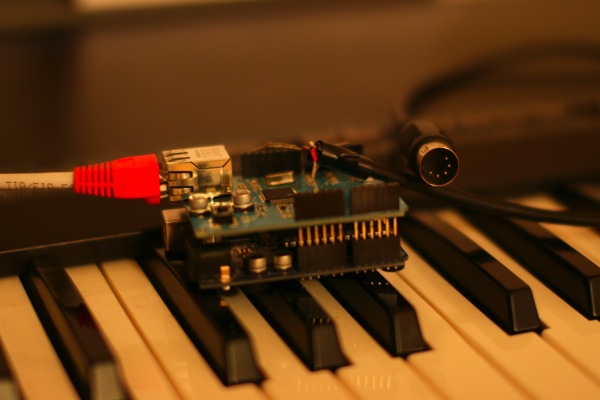

- Echo Nest Midi Player – The Echo Nest Midi Player is a small box you plug into your music instrument (with midi protocol), and on the internet. In real time it plays tracks analysed on the Echo Nest. Echo Nest Prize Winner

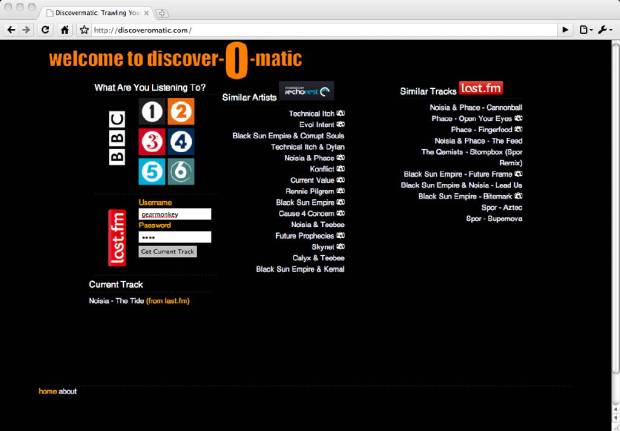

- discoverOmatic – discoverOmatic allows you to discover new artists and tracks while listening to the radio or even your own collection. Simply select the radio station you’re currently listening to (currently only BBC brands supported) and we’ll do the rest. If you’re listening to music through other means and scrobbling to last.fm we can provide recommendations based on your currently playing or most recently scrobbled track as well. Discover great music while listening to what you like with the discoverOmatic!

- All Music Is Equal – Take any piece of music and turn it into a “music pupil plays church organ, using a slightly stumbling metronome” version!

Screencast:

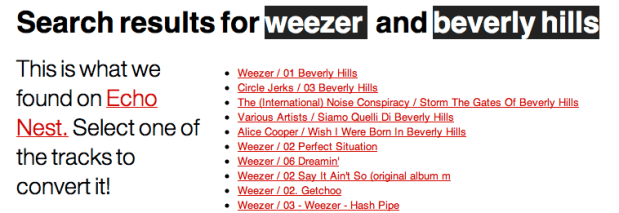

- Mystery Music Search – Mystery Music Search gives you the results for whatever the person before you searched for. Heavily inspired by mysterygoogle.com, and using the new Echonest search_tracks api. Echo Nest Prize Winner

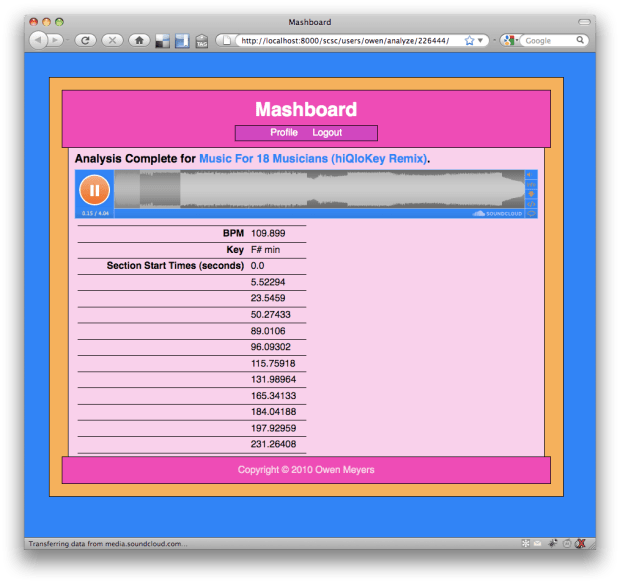

- Mashboard – Mashboard is a simple dashboard for your SoundCloud tracks. You can analyze the tracks using the EchoNest analysis API, returning Key/Mode, BPM, and song section information that is written to the appropriate metadata fields in the SoundCloud track. What’s more, you can scrobble your tracks to your Last.fm profile while listening on your Mashboard profile page. Last, but certainly not least, you can trade your SoundCloud tracks on TuneRights by making and accepting offers from other users, managing the shareholders of your tracks, and bidding on other tracks in the TuneRights system. Echo Nest Prize Winner

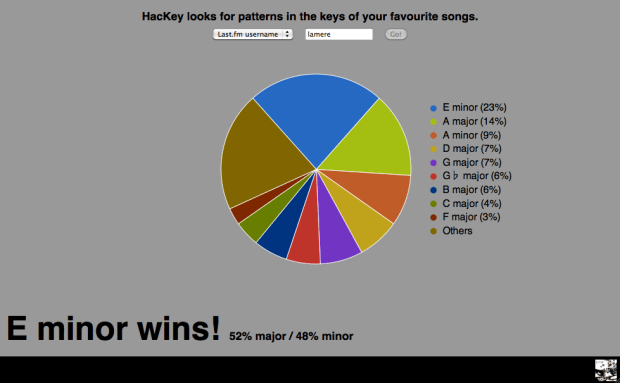

- HacKey – This hack gives you a chart that shows the keys of the most listened-to songs in your last.fm profile. (Read more about HacKey in this post). Echo Nest Prize Winner

- AlbexOne – A mechanical device that creates unique visual patterns of the songs you are listening to! – Echo Nest Prize Winner

Congrats to all the winners and thanks to all for making cool stuff with the Echo Nest!