The SQL Join is destroying music

Posted by Paul in events, Music, The Echo Nest on October 28, 2009

Brian Whitman, one of the founders of the Echo Nest, gave a provocative talk last week at Music and Bits. Some excerpts:

Useless MIR Problems:

- Genre Identification – “Countless PhDs on this useless task. Trying to teach a computer a marketing construct”

Hard but interesting MIR Problems:

- Finding the saddest song in the world

- Predicting Pitchfork and All Music Guide ratings

- Predicting the gender of a listener based upon their music taste

On Recommendation:

- “The best music experience is still very manual… I am still reading about music, not using a recommender.”

- “If we only used collaborative filtering to discover music, the popular artists would eat the unknowns alive.”

- “The SQL Join is destroying music”
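One way to read the last two quotes: a bare-bones collaborative filter is little more than a self-join of a plays table on the listener column, and a join like that rewards whatever co-occurs most. Here is a minimal sketch of that idea. The listeners, artists and function names are all made up for illustration; none of it comes from Brian's talk.

```python
from collections import Counter
from itertools import combinations

# Hypothetical listening data: listener -> set of artists played.
plays = {
    "ann": {"Big Star Act", "Indie A"},
    "bob": {"Big Star Act", "Indie B"},
    "cat": {"Big Star Act", "Indie A", "Indie C"},
    "dan": {"Big Star Act", "Indie C"},
}

def co_listen_counts(plays):
    """Count, for every ordered artist pair, how many listeners played both.

    This is what joining the plays table to itself on the listener
    column and grouping by the two artist columns would compute.
    """
    counts = Counter()
    for artists in plays.values():
        for a, b in combinations(sorted(artists), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts

def recommend(seed, plays, n=1):
    """Return the n artists most often co-listened with `seed`."""
    counts = co_listen_counts(plays)
    scored = [(c, other) for (a, other), c in counts.items() if a == seed]
    # Highest co-listen count first; ties broken alphabetically.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [other for _, other in scored[:n]]

for seed in ("Indie A", "Indie B", "Indie C"):
    print(seed, "->", recommend(seed, plays))
```

In this toy data every lesser-known seed artist leads straight back to the one popular act, simply because everybody has played it.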

Brian’s notes on the talk are on his blog. The slides are online here. Highly recommended:

ISMIR – MIREX Panel Discussion

Stephen Downie presents the MIREX session

Statistics for 2009:

- 26 tasks

- 138 participants

- 289 evaluation runs

Results are now published: http://music-ir.org/r/09results

This year, new datasets:

- Mazurkas

- MIR 1K

- Bach Chorales

- Chord and Segmentation datasets

- Mood dataset

- Tag-a-Tune

Evalutron 6K – Human evaluations – this year, 50 graders / 7500 possible grading events.

What’s Next?

- NEMA

- End-to-End systems and tasks

- Qualitative assessments

- Possible Journal ‘Special Issue’

- MIREX 2010 early start: http://www.music-ir.org/mirex/2010/index.php/Main_Page

- Suggestions to model after the ACM MM Grand challenge

Issues about MIREX

- Rein in the parameter explosion

- Not rigorously tested algorithms

- Hard-coded parameters, path-separators, etc

- Poorly specified data inputs/outputs

- Dynamically linked libraries

- Windows submissions

- Pre-compiled Matlab/MEX Submissions

- The ‘graduation’ problem – Andreas and Cameron will be gone in summer.

Long discussion with people opining about tests, data. Ben Fields had a particularly good point about trying to make MIREX better reflect real systems that draw upon web resources.

ISMIR Oral Session 5 – Tags

Oral Session 5 – Tags

Session Chair: Paul Lamere

I’m the session chair for this session, so I can’t keep notes. So instead I offer the abstracts.

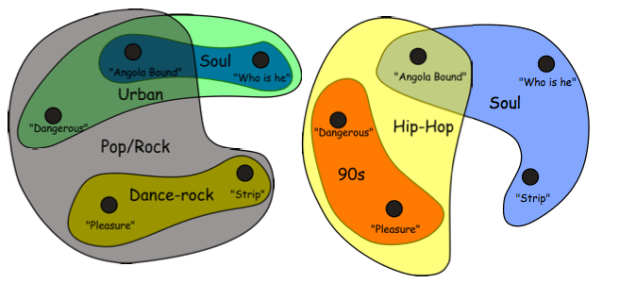

TAG INTEGRATED MULTI-LABEL MUSIC STYLE CLASSIFICATION WITH HYPERGRAPH

Fei Wang, Xin Wang, Bo Shao, Tao Li, Mitsunori Ogihara

Abstract: Automatic music style classification is an important, but challenging problem in music information retrieval. It has a number of applications, such as indexing of and searching in musical databases. Traditional music style classification approaches usually assume that each piece of music has a unique style and they make use of the music contents to construct a classifier for classifying each piece into its unique style. However, in reality, a piece may match more than one, even several different styles. Also, in this modern Web 2.0 era, it is easy to get a hold of additional, indirect information (e.g., music tags) about music. This paper proposes a multi-label music style classification approach, called Hypergraph integrated Support Vector Machine (HiSVM), which can integrate both music contents and music tags for automatic music style classification. Experimental results based on a real world data set are presented to demonstrate the effectiveness of the method.

EASY AS CBA: A SIMPLE PROBABILISTIC MODEL FOR TAGGING MUSIC

Matthew D. Hoffman, David M. Blei, Perry R. Cook

ABSTRACT Many songs in large music databases are not labeled with semantic tags that could help users sort out the songs they want to listen to from those they do not. If the words that apply to a song can be predicted from audio, then those predictions can be used both to automatically annotate a song with tags, allowing users to get a sense of what qualities characterize a song at a glance. Automatic tag prediction can also drive retrieval by allowing users to search for the songs most strongly characterized by a particular word. We present a probabilistic model that learns to predict the probability that a word applies to a song from audio. Our model is simple to implement, fast to train, predicts tags for new songs quickly, and achieves state-of-the-art performance on annotation and retrieval tasks.

USING ARTIST SIMILARITY TO PROPAGATE SEMANTIC INFORMATION

Joon Hee Kim, Brian Tomasik, Douglas Turnbull

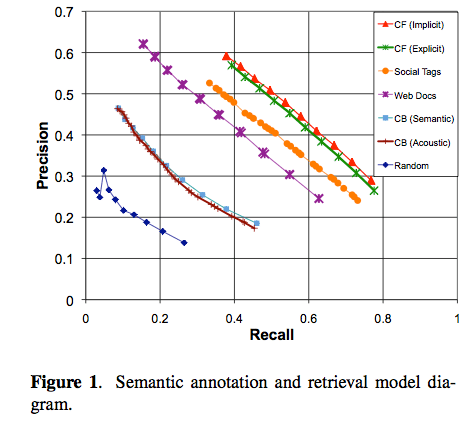

ABSTRACT Tags are useful text-based labels that encode semantic information about music (instrumentation, genres, emotions, geographic origins). While there are a number of ways to collect and generate tags, there is generally a data sparsity problem in which very few songs and artists have been accurately annotated with a sufficiently large set of relevant tags. We explore the idea of tag propagation to help alleviate the data sparsity problem. Tag propagation, originally proposed by Sordo et al., involves annotating a novel artist with tags that have been frequently associated with other similar artists. In this paper, we explore four approaches for computing artists similarity based on different sources of music information (user preference data, social tags, web documents, and audio content). We compare these approaches in terms of their ability to accurately propagate three different types of tags (genres, acoustic descriptors, social tags). We find that the approach based on collaborative filtering performs best. This is somewhat surprising considering that it is the only approach that is not explicitly based on notions of semantic similarity. We also find that tag propagation based on content-based music analysis results in relatively poor performance.
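To make the tag propagation idea concrete, here is a rough sketch: take the most similar artists that already have tags and let them vote for tags on the new artist. The similarity-weighted voting, the `k` and `top_n` parameters and all the artist data are my own assumptions for illustration, not the scheme from the paper.

```python
from collections import defaultdict

def propagate_tags(target, similarity, artist_tags, k=2, top_n=3):
    """Rank tags for `target` using votes from its k most similar tagged artists.

    Assumption: each neighbour's vote is weighted by its similarity score.
    """
    neighbours = sorted(
        (a for a in similarity[target] if a in artist_tags),
        key=lambda a: (-similarity[target][a], a),
    )[:k]
    scores = defaultdict(float)
    for artist in neighbours:
        for tag in artist_tags[artist]:
            scores[tag] += similarity[target][artist]
    # Highest score first; ties broken alphabetically.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]

# Hypothetical similarity scores (these could come from collaborative
# filtering, social tags, web documents or audio content).
similarity = {"New Artist": {"Artist A": 0.9, "Artist B": 0.6, "Artist C": 0.1}}
artist_tags = {
    "Artist A": {"shoegaze", "guitar"},
    "Artist B": {"guitar", "dreamy"},
    "Artist C": {"polka"},
}

print(propagate_tags("New Artist", similarity, artist_tags))
```

Swapping in a different `similarity` table is all it takes to compare similarity sources, which is essentially the comparison the abstract describes.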

MUSIC MOOD REPRESENTATIONS FROM SOCIAL TAGS

Cyril Laurier, Mohamed Sordo, Joan Serra, Perfecto Herrera

ABSTRACT This paper presents findings about mood representations. We aim to analyze how do people tag music by mood, to create representations based on this data and to study the agreement between experts and a large community. For this purpose, we create a semantic mood space from last.fm tags using Latent Semantic Analysis. With an unsupervised clustering approach, we derive from this space an ideal categorical representation. We compare our community based semantic space with expert representations from Hevner and the clusters from the MIREX Audio Mood Classification task. Using dimensional reduction with a Self-Organizing Map, we obtain a 2D representation that we compare with the dimensional model from Russell. We present as well a tree diagram of the mood tags obtained with a hierarchical clustering approach. All these results show a consistency between the community and the experts as well as some limitations of current expert models. This study demonstrates a particular relevancy of the basic emotions model with four mood clusters that can be summarized as: happy, sad, angry and tender. This outcome can help to create better ground truth and to provide more realistic mood classification algorithms. Furthermore, this method can be applied to other types of representations to build better computational models.

EVALUATION OF ALGORITHMS USING GAMES: THE CASE OF MUSIC TAGGING

Edith Law, Kris West, Michael Mandel, Mert Bay, J. Stephen Downie

Abstract Search by keyword is an extremely popular method for retrieving music. To support this, novel algorithms that automatically tag music are being developed. The conventional way to evaluate audio tagging algorithms is to compute measures of agreement between the output and the ground truth set. In this work, we introduce a new method for evaluating audio tagging algorithms on a large scale by collecting set-level judgments from players of a human computation game called TagATune. We present the design and preliminary results of an experiment comparing five algorithms using this new evaluation metric, and contrast the results with those obtained by applying several conventional agreement-based evaluation metrics.

ISMIR Poster Madness #3

- (PS3-1) Automatic Identification for Singing Style based on Sung Melodic Contour Characterized in Phase Plane

Tatsuya Kako, Yasunori Ohishi, Hirokazu Kameoka, Kunio Kashino and Kazuya Takeda

- (PS3-2) Automatic Identification of Instrument Classes in Polyphonic and Poly-Instrument Audio

Philippe Hamel, Sean Wood and Douglas Eck

Looks very interesting

- (PS3-3) Using Regression to Combine Data Sources for Semantic Music Discovery

Brian Tomasik, Joon Hee Kim, Margaret Ladlow, Malcolm Augat, Derek Tingle, Rich Wicentowski and Douglas Turnbull

- (PS3-4) Lyric Text Mining in Music Mood Classification

Xiao Hu, J. Stephen Downie and Andreas Ehmann

lyrics and mood – surprising results!

- (PS3-5) Robust and Fast Lyric Search based on Phonetic Confusion Matrix

Xin Xu, Masaki Naito, Tsuneo Kato and Hisashi Kawai

Phonetic confusion – misheard lyrics! KDDI – must see this.

- (PS3-6) Using Harmonic and Melodic Analyses to Automate the Initial Stages of Schenkerian Analysis

Phillip Kirlin

Schenkerian analysis – what is this really?

- (PS3-7) Hierarchical Sequential Memory for Music: A Cognitive Model

James Maxwell, Philippe Pasquier and Arne Eigenfeldt

Cognitive model for online learning.

- (PS3-8) Additions and Improvements in the ACE 2.0 Music Classifier

Jessica Thompson, Cory McKay, J. Ashley Burgoyne and Ichiro Fujinaga

Open source MIR in Java

- (PS3-9) A Probabilistic Topic Model for Unsupervised Learning of Musical Key-Profiles

Diane Hu and Lawrence Saul

topic model for key finding

- (PS3-10) Publishing Music Similarity Features on the Semantic Web

Dan Tidhar, György Fazekas, Sefki Kolozali and Mark Sandler

SoundBite – distributed feature collection

- (PS3-11) Genre Classification Using Bass-Related High-Level Features and Playing Styles

Jakob Abesser, Hanna Lukashevich, Christian Dittmar and Gerald Schuller

semantic features

- (PS3-12) From Multi-Labeling to Multi-Domain-Labeling: A Novel Two-Dimensional Approach to Music Genre Classification

Hanna Lukashevich, Jakob Abeßer, Christian Dittmar and Holger Großmann

Fraunhofer – autotagging

- (PS3-13) 21st Century Electronica: MIR Techniques for Classification and Performance

Dimitri Diakopoulos, Owen Vallis, Jordan Hochenbaum, Jim Murphy and Ajay Kapur

Automated ISHKURS with multitouch – woot

- (PS3-14) Relationships Between Lyrics and Melody in Popular Music

Eric Nichols, Dan Morris, Sumit Basu and Chris Raphael

Text features vs melodic features – where do the stressed syllables fall

- (PS3-15) RhythMiXearch: Searching for Unknown Music by Mixing Known Music

Makoto P. Kato

Looks like an echo nest remix: AutoDJ

- (PS3-16) Musical Structure Retrieval by Aligning Self-Similarity Matrices

Benjamin Martin, Matthias Robine and Pierre Hanna

- (PS3-17) Exploring African Tone Scales

Dirk Moelants, Olmo Cornelis and Marc Leman

No standardized scales – how do you deal with that?

- (PS3-18) A Discrete Filter Bank Approach to Audio to Score Matching for Polyphonic Music

Nicola Montecchio and Nicola Orio

- (PS3-19) Accelerating Non-Negative Matrix Factorization for Audio Source Separation on Multi-Core and Many-Core Architectures

Eric Battenberg and David Wessel

Runs NMF on GPUs and OpenMP

- (PS3-20) Musical Models for Melody Alignment

Peter van Kranenburg, Anja Volk, Frans Wiering and Remco C. Veltkamp

alignment of folk songs

- (PS3-21) Heterogeneous Embedding for Subjective Artist Similarity

Brian McFee and Gert Lanckriet

Crazy ass features!

- (PS3-22) The Intersection of Computational Analysis and Music Manuscripts: A New Model for Bach Source Studies of the 21st Century

Masahiro Niitsuma, Tsutomu Fujinami and Yo Tomita

ISMIR Oral Session 4 – Music Recommendation and playlisting

Music Recommendation and playlisting

Session Chair: Douglas Turnbull

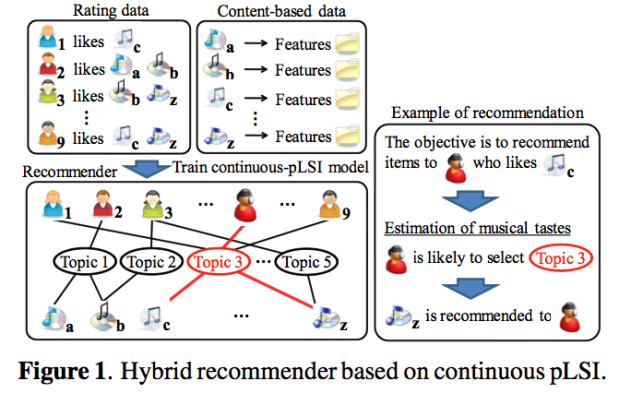

CONTINUOUS PLSI AND SMOOTHING TECHNIQUES FOR HYBRID MUSIC RECOMMENDATION

by Kazuyoshi Yoshii and Masataka Goto

- Unexpected encounters with unknown songs are increasingly important.

- Want accurate and diversified recommendations

- Use a probabilistic approach suitable to deal with uncertainty of rating histories

- Compares CF vs. content-based and his Hybrid filtering system

Approach: Use pLSI to create a 3-way aspect model: user-song-feature. The latent (unobservable) category captures things like genre, tempo, vocal age, popularity etc. In pLSI, typical patterns are given by relationships between users, songs and a limited number of topics.

Some drawbacks: pLSI needs discrete features, and multinomial distributions are assumed. To deal with this, they formulate continuous pLSI, which uses Gaussian mixture models and so can assume continuous distributions.

Drawbacks of continuous pLSI: the local minimum problem and the hub problem. Popular songs are recommended often because of the hubs.

How to deal with this:

- Gaussian parameter tying – this reduces the number of free parameters. Only the mixture weights vary.

- Artist-based song clustering: Train an artist-based model and update it to a song-based model by an incremental training method (from 2007).
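For my own reference, here is how a three-way aspect model with tied Gaussians is usually written. The symbols (u for user, s for song, x for the feature vector, z for the latent topic, w for mixture weights) are notation I picked, and the exact form in the paper may differ.

```latex
% Three-way aspect model: user u, song s and feature x are
% conditionally independent given the latent topic z.
p(u, s, x) = \sum_{z} p(z)\, p(u \mid z)\, p(s \mid z)\, p(x \mid z)

% Continuous version: the feature distribution of each topic is a
% Gaussian mixture. With parameter tying, the K Gaussians
% (\mu_k, \Sigma_k) are shared by all topics and only the
% weights w_{z,k} depend on z.
p(x \mid z) = \sum_{k=1}^{K} w_{z,k}\, \mathcal{N}(x;\, \mu_k, \Sigma_k),
\qquad \sum_{k=1}^{K} w_{z,k} = 1
```

Written this way it is easy to see why tying cuts the number of free parameters: each topic contributes only K mixture weights instead of its own set of means and covariances.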

Here’s the system model:

Evaluation: They found that using the techniques to adjust model complexity significantly improved the accuracy of recommendations and that the second technique could also reduce hubness.

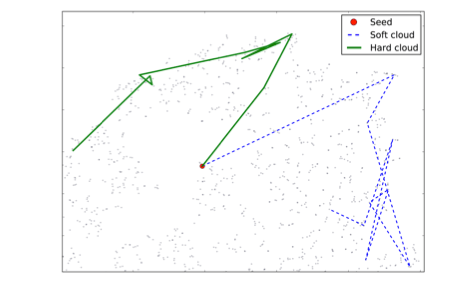

STEERABLE PLAYLIST GENERATION BY LEARNING SONG SIMILARITY FROM RADIO STATION PLAYLISTS

François Maillet, Douglas Eck, Guillaume Desjardins, Paul Lamere

This paper presents an approach to generating steerable playlists. They first demonstrate a method for learning song transition probabilities from audio features extracted from songs played in professional radio station playlists and then show that by using this learnt similarity function as a prior, they are able to generate steerable playlists by choosing the next song to play not simply based on that prior, but on a tag cloud that the user is able to manipulate to express the high-level characteristics of the music he wishes to listen to.

- Learn a similarity space from commercial radio station playlists

- generate steerable playlists
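Roughly, steering means the next song is picked by combining the learnt transition prior with how well each candidate matches the listener's tag cloud. Here is a toy sketch of that combination. Multiplying the two scores, the weighted-overlap `tag_match` function and all the song data are assumptions I made for illustration, not the formula from the paper.

```python
def tag_match(song_tags, tag_cloud):
    """Weighted overlap between a song's tag strengths and the tag cloud.

    Both arguments map tag -> weight in [0, 1]. An empty cloud means
    "no steering", so every song matches fully.
    """
    total = sum(tag_cloud.values())
    if total == 0:
        return 1.0
    return sum(w * song_tags.get(t, 0.0) for t, w in tag_cloud.items()) / total

def next_song(current, candidates, prior, song_tags, tag_cloud):
    """Pick the candidate maximising prior(current -> song) * tag match."""
    def score(song):
        return prior.get((current, song), 0.0) * tag_match(song_tags.get(song, {}), tag_cloud)
    return max(candidates, key=score)

# Hypothetical transition prior (e.g. output of the learnt similarity model).
prior = {("a", "b"): 0.6, ("a", "c"): 0.4}
song_tags = {"b": {"loud": 0.9, "mellow": 0.1}, "c": {"mellow": 0.8}}

print(next_song("a", ["b", "c"], prior, song_tags, {}))               # prior only
print(next_song("a", ["b", "c"], prior, song_tags, {"mellow": 1.0}))  # steered
```

With an empty tag cloud the prior alone decides; once the listener asks for "mellow", the lower-prior song wins.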

Francois defines a playlist. Data sources: Radio Paradise and Yes.com’s API. 7 million tracks.

Problem: They had positive examples but didn’t have an explicit set of negative examples. Chose them at random.

Learning the song space: Trained a binary classifier to determine if a song sequence is real.
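Here is a small sketch of how the training set for such a classifier could be put together: consecutive songs in real playlists are the positive examples, and negatives are drawn at random from the catalog. The function name, the one-negative-per-positive ratio and the toy playlists are my assumptions; the paper's actual sampling details may differ.

```python
import random

def make_training_pairs(playlists, catalog, rng):
    """Build (pair, label) examples for a 'is this transition real?' classifier.

    Positives (label 1): consecutive songs observed in a playlist.
    Negatives (label 0): for each positive, a random song that was never
    observed to follow the same first song. A random negative may still be
    a perfectly good transition, which is the weakness of this shortcut.
    """
    positives = {(a, b) for pl in playlists for a, b in zip(pl, pl[1:])}
    examples = [(pair, 1) for pair in sorted(positives)]
    for a, _ in sorted(positives):
        options = [b for b in catalog if b != a and (a, b) not in positives]
        if options:
            examples.append(((a, rng.choice(options)), 0))
    return examples

# Hypothetical data.
playlists = [["s1", "s2", "s3"], ["s2", "s4"]]
catalog = ["s1", "s2", "s3", "s4", "s5"]

for pair, label in make_training_pairs(playlists, catalog, random.Random(0)):
    print(pair, label)
```

Each pair would then be turned into audio features (timbre, rhythm, loudness and so on) before being fed to the classifier.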

Features: Timbre, rhythm/danceability, loudness

Eval:

EVALUATING AND ANALYSING DYNAMIC PLAYLIST GENERATION HEURISTICS USING RADIO LOGS AND FUZZY SET THEORY

Klaas Bosteels, Elias Pampalk, Etienne Kerr

Abstract: In this paper, we analyse and evaluate several heuristics for adding songs to a dynamically generated playlist. We explain how radio logs can be used for evaluating such heuristics, and show that formalizing the heuristics using fuzzy set theory simplifies the analysis. More concretely, we verify previous results by means of a large scale evaluation based on 1.26 million listening patterns extracted from radio logs, and explain why some heuristics perform better than others by analysing their formal definitions and conducting additional evaluations.

Notes:

- Dynamic playlist generation

- Formalization using fuzzy sets. Sets of accepted songs and sets of rejected songs

- Why last two songs not accepted? To make sure the listener is still paying attention?

- Interesting observation that the thing that matters most is membership in the fuzzy set of rejected songs. Why? Inconsistent skipping behavior.
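To get a feel for the fuzzy-set formalization, here is a toy version. A candidate belongs to the fuzzy set of accepted (or rejected) songs to the degree that it resembles the closest song in that set, and a heuristic combines the two memberships. The particular combination below (min with the standard complement), the one-dimensional features and the similarity function are my own assumptions, not necessarily one of the heuristics from the paper.

```python
def membership(song, examples, similarity):
    """Degree in [0, 1] to which `song` belongs to the fuzzy set induced
    by `examples`: its similarity to the closest example (0 if none)."""
    return max((similarity(song, ex) for ex in examples), default=0.0)

def score(song, accepted, rejected, similarity):
    """Fuzzy 'close to accepted AND NOT close to rejected'.

    AND is modelled with min, NOT with the complement 1 - x.
    """
    return min(
        membership(song, accepted, similarity),
        1.0 - membership(song, rejected, similarity),
    )

# Hypothetical one-dimensional audio feature per song, scaled to [0, 1].
features = {"a": 0.1, "b": 0.2, "r": 0.9, "c1": 0.15, "c2": 0.85}

def sim(x, y):
    return 1.0 - abs(features[x] - features[y])

accepted, rejected = ["a", "b"], ["r"]
for candidate in ("c1", "c2"):
    print(candidate, round(score(candidate, accepted, rejected, sim), 2))
```

Dropping the accepted term from `score` leaves a heuristic driven only by the rejected set, which is the part the talk singled out as mattering most.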

SMARTER THAN GENIUS? HUMAN EVALUATION OF MUSIC RECOMMENDER SYSTEMS.

Luke Barrington, Reid Oda, Gert Lanckriet

Abstract: Genius is a popular commercial music recommender system that is based on collaborative filtering of huge amounts of user data. To understand the aspects of music similarity that collaborative filtering can capture, we compare Genius to two canonical music recommender systems: one based purely on artist similarity, the other purely on similarity of acoustic content. We evaluate this comparison with a user study of 185 subjects. Overall, Genius produces the best recommendations. We demonstrate that collaborative filtering can actually capture similarities between the acoustic content of songs. However, when evaluators can see the names of the recommended songs and artists, we find that artist similarity can account for the performance of Genius. A system that combines these musical cues could generate music recommendations that are as good as Genius, even when collaborative filtering data is unavailable.

Great talk, lots of things to think about.

ISMIR Oral Session 3 – Musical Instrument Recognition and Multipitch Detection

Session Chair: Juan Pablo Bello

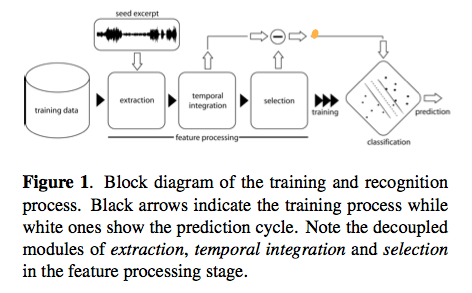

SCALABILITY, GENERALITY AND TEMPORAL ASPECTS IN AUTOMATIC RECOGNITION OF PREDOMINANT MUSICAL INSTRUMENTS IN POLYPHONIC MUSIC

By Ferdinand Fuhrmann, Martín Haro, Perfecto Herrera

- Automatic recognition of music instruments

- Polyphonic music

- Predominant

Research Questions

- Scale existing methods to highly polyphonic music

- Generalize with respect to the instruments used

- Model temporal information for recognition

Goals:

- Unified framework

- Pitched and unpitched …

- (more goals but I couldn’t keep up)

Neat presentation of survey of related work, plotting on simple vs. complex

Ferdinand was going too fast for me (or perhaps jetlag was kicking in), so I include the conclusion from his paper here to summarize the work:

Conclusions: In this paper we addressed three open gaps in automatic recognition of instruments from polyphonic audio. First we showed that by providing extensive, well designed datasets, statistical models are scalable to commercially available polyphonic music. Second, to account for instrument generality, we presented a consistent methodology for the recognition of 11 pitched and 3 percussive instruments in the main western genres classical, jazz and pop/rock. Finally, we examined the importance and modeling accuracy of temporal characteristics in combination with statistical models. Thereby we showed that modelling the temporal behaviour of raw audio features improves recognition performance, even though a detailed modelling is not possible. Results showed an average classification accuracy of 63% and 78% for the pitched and percussive recognition task, respectively. Although no complete system was presented, the developed algorithms could be easily incorporated into a robust recognition tool, able to index unseen data or label query songs according to the instrumentation.

MUSICAL INSTRUMENT RECOGNITION IN POLYPHONIC AUDIO USING SOURCE-FILTER MODEL FOR SOUND SEPARATION

by Toni Heittola, Anssi Klapuri and Tuomas Virtanen

Quick summary: A novel approach to musical instrument recognition in polyphonic audio signals by using a source-filter model and an augmented non-negative matrix factorization algorithm for sound separation. The mixture signal is decomposed into a sum of spectral bases modeled as a product of excitations and filters. The excitations are restricted to harmonic spectra and their fundamental frequencies are estimated in advance using a multipitch estimator, whereas the filters are restricted to have smooth frequency responses by modeling them as a sum of elementary functions on the Mel-frequency scale. The pitch and timbre information are used in organizing individual notes into sound sources. The method is evaluated with polyphonic signals, randomly generated from 19 instrument classes.

Source separation into various sources. Typically uses non-negative matrix factorization. Problem: Each pitch needs its own function leading to many functions. The system overview:
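For readers who have not met non-negative matrix factorization before, here is a tiny pure-Python sketch of the plain version, using the classic multiplicative updates for squared error. It factors a made-up "spectrogram" V (frequency bins by frames) into spectral bases W and activations H. This is only the vanilla algorithm; the source-filter constraints and the multipitch front end from the paper are not modelled, and the data and function names are mine.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def squared_error(v, w, h):
    """Squared Frobenius distance between V and the product W H."""
    wh = matmul(w, h)
    return sum((v[i][j] - wh[i][j]) ** 2 for i in range(len(v)) for j in range(len(v[0])))

def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Factor non-negative V (n x m) into W (n x rank) and H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(v), len(v[0])
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    h = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(iterations):
        # H <- H * (W^T V) / (W^T W H), element-wise
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[k][j] * num[k][j] / (den[k][j] + eps) for j in range(m)] for k in range(rank)]
        # W <- W * (V H^T) / (W H H^T), element-wise
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][k] * num[i][k] / (den[i][k] + eps) for k in range(rank)] for i in range(n)]
    return w, h

# Toy magnitude spectrogram: rows are frequency bins, columns are frames.
# Two "notes" with different spectra; both sound in the last frame.
V = [
    [1.0, 0.0, 1.0],
    [2.0, 0.0, 2.0],
    [0.0, 3.0, 3.0],
    [0.0, 1.0, 1.0],
]

W, H = nmf(V, rank=2)
print("squared error:", squared_error(V, W, H))
```

Each column of W plays the role of one spectral basis and each row of H says when that basis is active. In plain NMF every pitch of every instrument needs its own basis, which is the blow-up in the number of functions mentioned above.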

The Examples are very interesting: www.cs.tut.fi/~heittolt/ismir09

HARMONICALLY INFORMED MULTI-PITCH TRACKING

by Zhiyao Duan, Jinyu Han and Bryan Pardo

A novel system for multipitch tracking, i.e. estimating the pitch trajectory of each monophonic source in a mixture of harmonic sounds. Current systems are not robust: since they use only local time-frequency information, they tend to generate only short pitch trajectories. This system has two stages: multi-pitch estimation and pitch trajectory formation. In the first stage, they model spectral peaks and non-peak regions to estimate pitches and polyphony in each single frame. In the second stage, pitch trajectories are clustered following some constraints: global timbre consistency and local time-frequency locality.

Here’s the system overview:

ISMIR Poster Madness part 2

Poster madness! Version 2 – even faster this time. I can’t keep up

- Singing Pitch Extraction – Taiwan

- Usability Evaluation of Visualization interfaces for content-based music retrieval – looks really cool! 3D

- Music Paste – concatenating music clips based on chroma and rhythm features

- Musical bass-line pattern clustering and its application to audio genre classification

- Detecting cover sets – looks nice – visualization – MTG

- Using Musical Structure to enhance automatic chord transcription –

- Visualizing Musical Structure from performance gesture – motion

- From low-level to song-level percussion descriptors of polyphonic music

- MTG – Query by symbolic example – use a DNA/Blast type approach

- sten – web-based approach to determine the origin of an artist – visualizations

- XML-format for any kind of time related symbolic data

- Erik Schmidt – FPGA feature extraction. MIR for devices

- Accelerating QBH – another hardware solution – 160 times faster

- Learning to control a reverberator using subjective perceptual descriptors – more boomy

- Interactive GTTM Analyzer –

- Estimating the error distribution of a tap sequence without ground truth – Roger Dannenberg

- Cory McKay – ACE XML – Standard formats for features, metadata, labels and class ontologies

- An efficient multi-resolution spectral transform for music analysis

- Evaluation of multiple F0 estimation and tracking systems

BTW – Oscar informs me that this is not the first ever poster madness – there was one in Barcelona

Live from ISMIR

This week I’m attending ISMIR – the 10th International Society for Music Information Retrieval Conference being held in Kobe, Japan. At this conference researchers gather to advance the state of the art in music information retrieval. It is a varied bunch including librarians, musicologists, experts in signal processing, machine learning, text IR, visualization, HCI. I’ll be trying to blog the various talks and poster sessions throughout the conference (but at some point the jetlag will kick in, making it hard for me to think, let alone type). It’s 9AM – the keynote is starting …

Opening Remarks

Masataka and Ich give the opening remarks. First some stats:

- 286 attendees from 28 countries

- 212 submissions from 29 countries

- 123 papers (58%) accepted

- 214 reviewers

Tutorial Day at ISMIR

Posted by Paul in events, fun, Music, music information retrieval on October 26, 2009

Monday was tutorial day. After months of preparation, Justin finally got to present our material. I was a bit worried that our timing on the talk would be way out of whack and we’d have to self-edit on the fly – but all of our time estimates seemed to be right on the money. Whew! The tutorial was well attended with 80 or so registered – and lots of good questions at the end. All in all I was pleased at how it turned out. Here’s Justin talking about Echo Nest features:

After the tutorial a bunch of us went into town for dinner. 15 of us managed to find a restaurant that could accommodate us – and after lots of miming and pointing at pictures on the menu we managed to get a good meal. Lots of fun.

My first moments in Kobe – ISMIR 2009

After 26 hours of travel from Nashua to Kobe, Japan via Boston, NYC, Tokyo and Osaka, I arrived to find an extremely comfortable hotel at the conference center:

The conference hotel is the Portopia Hotel. It is quite nice. Here’s the lobby:

And the tower:

I went for a walk this morning to find an American-sized cup of coffee (24 oz is standard issue at Dunkin’s). This is the closest thing I could find. Looks like I’ll need another source of caffeine on this trip:

Thanks to Masataka, Ichiro and the rest of the conference committee for providing such a wonderful venue for ISMIR 2009.