My favorite music-related panels for SXSW Interactive

Posted by Paul in events, Music, The Echo Nest on March 7, 2011

Spring break for geeks is nearly upon us. If you are going to SXSW Interactive and are interested in what is going on at the intersection of music and technology, be sure to check out these panels.

- Love, Music & APIs – Dave Haynes (SoundCloud) / Matt Ogle (The Echo Nest) – In the old days it was DJs, A&R folks, labels and record store owners who were the gatekeepers to music. Today, we are seeing a new music gatekeeper emerge… the developer. Using open APIs, developers are creating new apps that change how people explore, discover, create and interact with music. But developers can’t do it alone. They need data like gig listings, lyrics, recommendation tools and, of course, music! And they need it from reliable, structured and legitimate sources. In this presentation we’ll discuss and explore what is happening right now in the thriving music developer ecosystem. We’ll describe some of the novel APIs that are making this happen and what sort of building blocks are being put into place from a variety of different sources. We’ll demonstrate how companies within this ecosystem are working closely together in a spirit of co-operation, each providing their own pieces to an expanding pool of resources from which developers can play, develop and create new music apps across different media: web, mobile, software and hardware. We’ll highlight some of the next generation of music apps that are being created in this thriving ecosystem. Finally we’ll take a look at how music developers are coming together at events like Music Hack Day, where participants have just 24 hours to build the next generation of music apps. Someone once said, “APIs are the sex organs of software. Data is the DNA.” If this is true, then Music Hack Days are orgies.

- Finding Music With Pictures: Data Visualization for Discovery – Paul Lamere (shamelessly self-promoting) – The Echo Nest – With so much music available, finding new music that you like can be like finding a needle in a haystack. We need new tools to help us to explore the world of music, tools that can help us separate the wheat from the chaff. In this panel we will look at how visualizations can be used to help people explore the music space and discover new, interesting music that they will like. We will look at a wide range of visualizations, from hand-drawn artist maps to highly interactive, immersive 3D environments. We’ll explore a number of different visualization techniques including graphs, trees, maps, timelines and flow diagrams, and we’ll examine different types of music data that can contribute to a visualization. Using numerous examples drawn from commercial and research systems, we’ll show how visualizations are being used now to enhance music discovery, and we’ll demonstrate some new visualization techniques coming out of the labs that we’ll find in tomorrow’s music discovery applications.

- Connected Devices, the Cloud & the Future of Music – Brenna Ehrlich, Malthe Sigurdsson, Steve Savoca, Travis Bogard – Discovering and listening to music today is a fragmented experience. Most consumers discover in one place, purchase in another, and listen somewhere else. While iTunes remains the dominant way people buy and organize their digital music collections, on-demand music services like Rdio, MOG and Spotify are creating new ways to discover, play, organize, and share music. The widespread adoption of smartphones and connected devices, along with the growing ubiquity of wireless networks, has increased the promise of music-in-the-cloud, but are consumers ready to give up iTunes and owning their music outright? While early adopters and music enthusiasts are latching on, what will it take for the mainstream to shift their thinking? This session will explore how connected devices and cloud services will affect the way consumers find and buy music going forward.

- Expressing yourself Musically with Mobile Technology – Ge Wang – Smule – The mobile landscape as we know it is focused heavily on gaming, productivity and social media applications. But as mobile technology continues to advance and phones become smarter, people will search for even more intimate, immersive and interactive ways of expressing themselves. Today, mobile technologies have made music creation easy, affordable and accessible to the masses, enabling users of all ages, abilities and backgrounds to create and share music, regardless of previous musical knowledge. Whether you’re a fan of hip hop, classical, pop or video game theme music, there is an app for everyone. And the entertainment industry has taken notice – almost every big name artist or brand has an app for mobile devices. Most of them are just fancy message boards providing information, but some are pushing the limits of what it means to interact with the artist or brand. From the palm of your hand you can Auto-Tune your voice to sound like your favorite hip hop star, play an instrument designed by Jordan Rudess of Dream Theater or join a virtual Glee club. Each of these artists and brands is building communities through mobile apps that give anyone the ability to explore their inner star. This presentation will discuss how advances in mobile technology have opened up a new world of expression to everyone and enabled users to broadcast their own musical talents across the globe.

- How Digital Media Drives International Collaboration in Music – Farb Nivi, Gunnar Madsen, Russell Raines, Stephen Averill, Troy Campbell – The House of Songs is an Austin, TX based project focusing on musical creativity through international collaboration. The House has been operating since September 2009 and has provided the foundation for creative collaboration between some of the strongest Austin and Scandinavian songwriters. Through these experiences, the participating songwriters have formed numerous potential relationships and gained unique experiences benefiting their musical careers. This panel will discuss how digital media influences these collaboration efforts in the present and in the future. The conversation will also cover current trends in this area, the challenges artists face in developing and expanding their audience, and how artists today can succeed in procuring worldwide digital revenue, and will ultimately emphasize the need for having this conversation.

- Metadata: The Backbone Of Digital Music Commerce – Bill Wilson, Christopher Read, Fayvor Love, Kiran Bellubb – Who cares about metadata? You should. In a world where millions of digital music transactions take place on a daily basis, it’s more important than ever that music, video, and application content appears correctly in digital storefronts, that customers can find it, and that the right songwriter, artist and/or content owner gets paid. This panel will review the current landscape and make sense of the various identifiers such as ISRC, ISWC, GRID and ISNI, as well as XML communications standards such as DDEX ERN and DSR messages. We’ll also cover why these common systems are critical as the backbone of digital music commerce, from the smallest indie artist to the biggest corporate commerce partners.

- Music & Metadata: Do Songs Remain The Same? – Jason Schultz, Jess Hemerly, Larisa Mann – Metadata may be an afterthought when it comes to most people’s digital music collections, but when it comes to finding, buying, selling, rating, sharing, or describing music, little matters more. Metadata defines how we interact with and talk about music, from discrete bits like titles, styles, artists and genres to its broader context and history. Metadata builds communities and industries, from the local fan base to the online social network. Its value is immense. But who owns it? Some sources are open, peer-produced and free. Others are proprietary and come with a hefty fee. And who determines its accuracy? From CDDB to MusicBrainz and Music Genome Project to AllMusic, our panel will explore the importance of metadata and information about music from three angles. First, production, where we’ll talk about the quality and accuracy of peer-produced sources for metadata and music information, like MusicBrainz and Wikipedia, versus proprietary sources, like CDDB. Second, we’ll look at the social importance of music data, like how we use it to discuss music and how we tag it to enhance music description and discovery. Finally, we’ll look at some legal issues, specifically how patent, copyright, and click-through agreements affect portability and ownership of data and how metadata plays into or out of the battles over “walled garden” systems like Facebook and Apple’s iEmpire. We’ll also play a meta-game with metadata during the panel to demonstrate how it works and why it is important.

- Neither Moguls nor Pirates: Grey Area Music Distribution – Alex Seago, Heitor Alvelos, Jeff Ferrell, Pat Aufderheide, Sam Howard-Spink – The debate surrounding music piracy versus the so-called collapse of the music industry has largely been bipolar, and yet so many other processes of music distribution have been developing. From online “sharity” communities that digitize obscure vinyl never released in digital format (a network of cultural preservation, one could argue), all the way to netlabels that could not care less about making money out of their releases, as well as “grime” networks made up of bedroom musicians constantly remixing each other, there is a vast wealth of possibilities driving music in the digital world. This panel will present key examples emerging from this “grey area”, and discuss future scenarios for music production and consumption that stand proudly outside the bipolar box.

- SXSW Music Industry Geeks Meetup – Todd Hansen – As the SXSW Interactive Festival continues to grow, it often becomes harder to discover and network with the specific type of people you want to network with. Hence a full slate of daytime Meet Ups are scheduled for the 2011 event. These Meet Ups are definitely not panel sessions, nor do they offer any kind of formal presentation or AV setup. On the contrary, these sessions are a room where many different conversations can (and will) go on at once. This timeslot is for technology geeks working in the music industry to network with other technology geeks in this industry, and is open to SXSW Interactive, Gold and Platinum badge holders. Cash bar onsite.

There you go! See you all soon in Austin.

What’s your favorite music visualization for discovery?

Posted by Paul in Music, visualization on February 28, 2011

In a couple of weeks I’m giving a talk at SXSW called Finding Music with Pictures: Data Visualization for Discovery. In this panel I’ll talk about how visualizations can be used to help people explore the music space and discover new, interesting music that they will like. I intend to include lots of examples from both the commercial world and the research world.

I’ll be drawing material from many sources, including the tutorial that Justin and I gave at ISMIR in Japan in October 2009: Using Visualizations for Music Discovery. Of course, lots of things have happened in the year and a half since we put together that tutorial, such as iPads, HTML5, plus tons more available data. If you happen to have a favorite visualization for music discovery, post a link in the comments or send me an email: paul [at] echonest.com.

Is that a hipster in your pocket, or are you just glad to see me?

Yes, this app will mock your music taste and then will tell you what you really should be listening to. It’s Pocket Hipster.

Is the KDD Cup really music recommendation?

The KDD Cup is an annual Data Mining and Knowledge Discovery competition organized by the ACM Special Interest Group on Knowledge Discovery and Data Mining. This year, the KDD Cup is called Learn the rhythm, predict the musical scores. Yahoo! Music has contributed 300 million ratings performed by over 1 million anonymized users. The ratings are given to songs, albums, artists and genres. The goals of this competition are for submitters to (1) accurately predict the ratings that users gave to various items and (2) separate loved songs from other songs.

This is a pretty exciting set of data. It is perhaps the largest set of music rating data ever released, and with a dataset of this size we should see Netflix Prize-sized advances in the music recommendation field. However, there’s one little gotcha: the data is entirely anonymized. Not only have the users been anonymized, but all of the songs, albums, artists and genres as well. So instead of getting ratings data like ‘user 1 rated Bon Jovi with five stars’, you get data like ‘user 1 rated artist 10 with five stars’. Here’s a sample of data for one user:

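The header line `3|14` says that user ID 3 has 14 ratings. Each rating line gives an item ID, a score out of 100, a day offset (3811 days from an undisclosed date) and the time of day (13:24 on day 3811):

| Item ID | Score | Day | Time |
|---|---|---|---|
| 5980 | 90 | 3811 | 13:24:00 |
| 11059 | 90 | 3811 | 13:24:00 |
| 21931 | 90 | 3811 | 13:24:00 |
| 74262 | 90 | 3811 | 13:24:00 |
| 146781 | 90 | 3811 | 13:24:00 |
| 173094 | 90 | 3811 | 13:24:00 |
| 175835 | 90 | 3811 | 13:24:00 |
| 180037 | 90 | 3811 | 13:24:00 |
| 194044 | 90 | 3811 | 13:24:00 |
| 267723 | 90 | 3811 | 13:24:00 |
| 290303 | 90 | 3811 | 13:24:00 |
| 366723 | 90 | 3811 | 13:24:00 |
| 432968 | 90 | 3811 | 13:24:00 |
| 451800 | 90 | 3811 | 13:24:00 |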
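To make the format concrete, here is a minimal Python sketch of a reader for rating data laid out like this sample. It assumes each user block begins with a `user_id|count` header followed by exactly `count` lines of whitespace-separated item ID, score, day offset and time; the `#` annotations in the sample are explanatory and are assumed not to appear in the real files. `parse_ratings` and `Rating` are hypothetical names, not part of any official KDD Cup tooling.

```python
from typing import Dict, Iterable, List, NamedTuple


class Rating(NamedTuple):
    item_id: int  # anonymized song, album, artist or genre ID
    score: int    # rating on a 0-100 scale
    day: int      # day offset from an undisclosed start date
    time: str     # time of day, "HH:MM:SS"


def parse_ratings(lines: Iterable[str]) -> Dict[int, List[Rating]]:
    """Parse 'user|count' blocks into a {user_id: [Rating, ...]} dict."""
    ratings: Dict[int, List[Rating]] = {}
    it = iter(lines)
    for header in it:
        header = header.strip()
        if not header:
            continue  # skip blank lines between user blocks
        user_str, count_str = header.split("|")
        user_id, count = int(user_str), int(count_str)
        user_ratings = []
        for _ in range(count):
            # The header promises `count` rating lines; pull them from the same iterator.
            item, score, day, time = next(it).split()
            user_ratings.append(Rating(int(item), int(score), int(day), time))
        ratings[user_id] = user_ratings
    return ratings


sample = """3|3
5980 90 3811 13:24:00
11059 90 3811 13:24:00
21931 90 3811 13:24:00
"""
data = parse_ratings(sample.splitlines())
print(len(data[3]), data[3][0].item_id)  # 3 5980
```

Nothing in the parsed records links an item ID back to an artist or track name, so anything built on top of it is necessarily pure collaborative filtering.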

Without any way to tie the item IDs to actual music items, this competition seems to be less about music recommendation and more about collaborative filtering (CF) algorithms. As Oscar Celma (who literally wrote the book on music recommendation) put it in the KDD Cup competition forum:

Without artist/song name, the dataset has no interest for me (e.g. it doesn’t make any sense not being able to understand what are you predicting). As it is now, this is not really a “music dataset” nor a competition about “music recommendation”, but simply a way to apply CF to a huge dataset. In a way, this is good for people doing research on CF. But, not being able to add *any* knowledge about the domain… it doesn’t make any sense, IMHO.

Researcher Amelie Anglade adds:

There is so much we could do if we had access to the artist and track names, using Music Information Retrieval techniques: we could analyse the audio (tempo, chords, melody, timbre, etc.), the scores, the lyrics, the artists’ connections, and much more. There is a growing community working on these topics, and attempting to do music recommendation without any contextual and/or content information other than the genres (which is a limited approach) is simply ignoring this whole branch of research.

The folks at Yahoo! who generously put together the dataset do understand how the lack of real, non-anonymized music data makes it difficult for a whole branch of researchers from the Music Information Retrieval community to participate in the competition. However, Noam Koenigstein, one of the organizers of this year’s KDD Cup, says that the aggressive anonymization of the data is required by their legal team due to recent lawsuits around large releases of user rating data (see Netflix lawsuit), and their hands are tied. Noam does go on to say that:

After working with this dataset for 6 months now, I can definitely say that there are differences between music CF and other types of CF. One example is the popularity temporal trends in music that are different than in movies (Netflix). So a CF system that considers also temporal effects will be different in music. There are other differences as well, but I can not reveal them right now.

I’m sure Noam is right that there are some interesting differences between the music rating data and other large rating sets, and I’m sure that exploring these differences will improve the state of the art in CF systems. But Oscar and Amelie are right too: so much more could be learned if we had the ability to know what items were actually being rated.

There have been two very active research communities involved in music recommendation. The RecSys community takes a traditional recommender systems approach and relies mostly on collaborative filtering techniques to make recommendations. To this community, data mining of user behavior is enough to make good recommendations. The Music Information Retrieval (MIR) community, by contrast, focuses much more on the music itself, relying on content-based (CB) techniques that use the audio (or descriptions of the audio) to find the musical connections on which to base recommendations. Each approach has its own strengths and weaknesses: CF has the cold start problem, popularity feedback loops, susceptibility to hacking, etc., while CB tends to be more computationally challenging and has trouble separating good music from bad. The best systems tend to combine aspects of both approaches into hybrid systems.
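As an illustration of the hybrid idea, the sketch below blends a CF similarity (computed from which users rated two items, and how) with a CB similarity (computed from per-item content features), and falls back to content alone when an item has no ratings yet, which is one simple way around the cold start problem. The dict layouts, the feature names in the example and the linear blend weight `alpha` are all assumptions for illustration; real hybrid recommenders combine the two signals in many other ways, and the content features here are assumed to be pre-normalized to comparable scales.

```python
import math
from typing import Dict

SparseVec = Dict[str, float]


def cosine(a: SparseVec, b: SparseVec) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b[k] for k, v in a.items() if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_similarity(
    item_a: str,
    item_b: str,
    ratings_by_item: Dict[str, SparseVec],   # item -> {user: rating}
    features_by_item: Dict[str, SparseVec],  # item -> {feature: value}
    alpha: float = 0.5,                      # 1.0 = pure CF, 0.0 = pure CB
) -> float:
    """Blend collaborative-filtering and content-based similarity."""
    cb = cosine(features_by_item.get(item_a, {}), features_by_item.get(item_b, {}))
    ratings_a = ratings_by_item.get(item_a)
    ratings_b = ratings_by_item.get(item_b)
    if not ratings_a or not ratings_b:
        return cb  # cold start: no rating data for one item, so use content only
    cf = cosine(ratings_a, ratings_b)
    return alpha * cf + (1 - alpha) * cb


ratings = {"song_a": {"u1": 5, "u2": 4}, "song_b": {"u1": 4, "u2": 5}}
features = {
    "song_a": {"energy": 0.9, "acousticness": 0.1},
    "song_b": {"energy": 0.8, "acousticness": 0.2},
    "new_song": {"energy": 0.85, "acousticness": 0.15},  # no ratings yet
}
print(hybrid_similarity("song_a", "song_b", ratings, features))
print(hybrid_similarity("song_a", "new_song", ratings, features))  # content only
```

Setting `alpha` to 1.0 recovers a purely CF recommender, which is all the anonymized KDD Cup data can support; the content term only becomes available when you know what the items actually are.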

The KDD Cup dataset is a fantastic set of data, and I’m sure this data will help the RecSys community improve the state of the art in CF systems. The MIR community is also creating its own industrial-sized datasets for research, such as the recently released Million Song Dataset, which will be used to improve CB techniques. It is my hope that someday we’ll be able to offer a combined dataset that contains both massive rating data and massive content data. If we put all this data in the hands of researchers, there’s no telling what they’ll find. And perhaps that’s the real problem – as Jeremy Reed tweeted: Biomed researchers can obtain illegal substances for research, but we can’t get data because we’ll find users with bad taste!

Finding the most dramatic bits in music

Posted by Paul in code, fun, Music, The Echo Nest on February 20, 2011

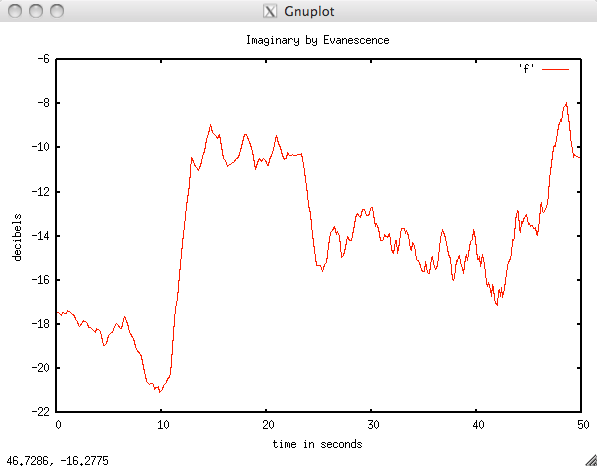

Evanescence is one of my guilty listening pleasures. I enjoy how Amy Lee’s voice is juxtaposed against the wall of sound produced by the rest of the band. For instance, in the song Imaginary, there are 30 seconds of sweet voice and violins before you get slammed by the hammer of the gods:

This extreme change in energy makes for a very dramatic moment in the music. It is one of the reasons that I listen to progressive rock and nu-metal (despite the mockery of my co-workers). However, finding these dramatic gems in the music is hard – there’s a lot of goth- and nu-metal to filter through, and much of it is really bad. After even just a few minutes of listening I feel like I’m lost at a Twicon. What I need is a tool to help me find these dramatic moments, to filter through the thousands of songs to find the ones that have those special moments when the beauty comes eye to eye with the beast.

My intuition tells me that a good place to start is to look at the loudness profile for songs with these dramatic moments. I should expect to see a sustained period of relatively soft music followed by a sharp transition to a sustained period of loud music. This is indeed what we see:

This plot shows a windowed average of the Echo Nest loudness for the first 50 seconds of the song. In this plot we see a relatively quiet first 10 seconds (hovering between -21 and -18 dB), followed by an extremely loud section (around -10 dB). (Note that this version of the song has a shorter intro than the version in the YouTube video.) If we can write some code to detect these transitions, then we will have a drama detector.
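A windowed average like the one plotted can be sketched in a few lines of Python. This assumes the loudness data arrives as `(time_in_seconds, loudness_db)` pairs, for example one per analysis segment; `windowed_loudness` is a hypothetical helper, and its window width and step are arbitrary choices, not the values used for the plot. Note that it averages dB values directly, which is a simplification (averaging in the energy domain would weight loud moments more heavily).

```python
from typing import List, Sequence, Tuple


def windowed_loudness(
    samples: Sequence[Tuple[float, float]],
    window: float = 2.0,
    step: float = 0.5,
) -> List[Tuple[float, float]]:
    """Smooth a loudness profile with a centered sliding-window mean.

    samples: (time_seconds, loudness_db) pairs.
    Returns (time_seconds, mean_db) pairs every `step` seconds, where each mean
    covers the samples within +/- window/2 seconds of that time. Times with no
    samples inside their window are skipped.
    """
    if not samples:
        return []
    end = max(t for t, _ in samples)
    smoothed = []
    for i in range(int(end // step) + 1):
        center = i * step
        in_window = [db for t, db in samples if abs(t - center) <= window / 2]
        if in_window:
            smoothed.append((center, sum(in_window) / len(in_window)))
    return smoothed


# Toy profile: a quiet intro that jumps to a loud section at 3 seconds.
profile = [(0.0, -20.0), (1.0, -19.0), (2.0, -21.0), (3.0, -10.0), (4.0, -9.0)]
for t, db in windowed_loudness(profile, window=2.0, step=1.0):
    print(f"{t:4.1f}s  {db:6.1f} dB")
```

A wider window hides short spikes (a single drum hit) at the cost of blurring the edge we are trying to find, so it is worth tuning against a few known dramatic songs.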

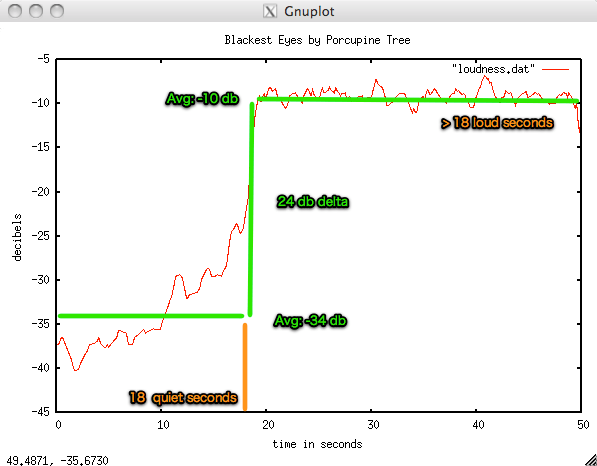

The Drama Detector: Finding a rising edge in a loudness profile is pretty easy, but we want to go beyond that and make sure we have a way to rank them so that we can find the most dramatic changes. There are two metrics that we can use to rank the amount of drama: (1) the average change in loudness at the transition and (2) the length of the quiet period leading up to the transition. The bigger the change in volume and the longer it has been quiet, the more drama. Let’s look at another dramatic moment as an example:

The opening 30 seconds of Blackest Eyes by Porcupine Tree fit the dramatic mold. Here’s an annotated loudness plot for the opening:

The drama-finding algorithm simply looks for loudness edges above a certain dB threshold and then works backward to find the beginning of the ‘quiet period’. To make a ranking score that combines both the decibel change and the quiet period, I tried the simplest thing that could possibly work, which is to just multiply the change in decibels by the length of the quiet period (in seconds). Let’s try this metric out on a few songs to see how it works:

- Porcupine Tree – Blackest Eyes – score: 18 x 24 = 432

- Evanescence – Imaginary (w/ 30 second intro) – score: 299

- Lady Gaga – Poker Face – score: 82 – not very dramatic

- Katy Perry – I Kissed a Girl – score: 33 – extremely undramatic
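Here is one way the drama detector described above might be sketched in Python. The post doesn’t give the threshold or the exact edge and quiet-period rules, so `threshold_db`, `lag` and `tolerance_db` are hypothetical parameters of this reconstruction, not the values behind the scores listed. It takes an evenly spaced, already smoothed loudness profile, flags any point whose loudness is at least `threshold_db` above the value `lag` samples earlier, walks backward while the music stays no more than `tolerance_db` louder than the pre-edge level, and scores the edge as dB change times quiet seconds. `rank_by_drama` then orders a collection of songs by their best score, which is all that is needed to sort a large batch of songs.

```python
from typing import Dict, List, NamedTuple, Optional, Sequence, Tuple

Profile = Sequence[Tuple[float, float]]  # evenly spaced (time_seconds, loudness_db)


class DramaticMoment(NamedTuple):
    edge_time: float   # time of the last quiet sample before the jump (s)
    db_change: float   # loudness rise across the edge (dB)
    quiet_secs: float  # length of the quiet run-up (s)
    score: float       # db_change * quiet_secs


def find_drama(profile: Profile, threshold_db: float = 6.0, lag: int = 2,
               tolerance_db: float = 3.0) -> Optional[DramaticMoment]:
    """Return the highest-scoring rising edge in the profile, or None."""
    times = [t for t, _ in profile]
    dbs = [db for _, db in profile]
    best: Optional[DramaticMoment] = None
    for i in range(lag, len(dbs)):
        before = dbs[i - lag]
        change = dbs[i] - before
        if change < threshold_db:
            continue  # not a big enough jump to count as an edge
        # Walk backward while the music stays no louder than before + tolerance.
        j = i - lag
        while j > 0 and dbs[j - 1] <= before + tolerance_db:
            j -= 1
        quiet = times[i - lag] - times[j]
        moment = DramaticMoment(times[i - lag], change, quiet, change * quiet)
        if best is None or moment.score > best.score:
            best = moment
    return best


def rank_by_drama(profiles: Dict[str, Profile]) -> List[Tuple[str, float]]:
    """Sort songs by drama score, most dramatic first (no edge scores 0)."""
    scored = []
    for name, profile in profiles.items():
        moment = find_drama(profile)
        scored.append((name, moment.score if moment else 0.0))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy profiles at 1-second steps: ten quiet seconds, then a 12 dB jump.
dramatic = [(float(t), -20.0) for t in range(10)] + [(float(t), -8.0) for t in range(10, 15)]
steady = [(float(t), -12.0) for t in range(15)]
print(find_drama(dramatic))  # DramaticMoment(edge_time=9.0, db_change=12.0, quiet_secs=9.0, score=108.0)
print(rank_by_drama({"steady": steady, "dramatic": dramatic}))  # [('dramatic', 108.0), ('steady', 0.0)]
```

Multiplying the two terms means a long quiet passage can outscore a bigger but more abrupt jump; weighting the terms differently is an obvious knob to experiment with.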

This seems to pass the sanity test: dramatic songs score high and non-dramatic songs score low (using my very narrow definition of dramatic). With this algorithm in mind, I then went hunting for some drama. To do this, I found the 50 artists most similar to Evanescence, and for each of these artists I found their 20 hotttest songs. I then examined each of these 1,000 songs and ranked them in dramatic order. So, put on your pancake and eye shadow, dim the lights, light the candelabra and enjoy some dramatic moments.

First up is the wonderfully upbeat I Want to Die by Mortal Love. This 10-minute song has a whopping drama score of 2014. There are a full two minutes of quiet, starting 5 minutes into the song, before the dramatic moment (with 16 dB of dramatic power!) occurs:

The dramatic moment occurs 7:12 into the song, but I’m not sure it is worth the wait. Not for me, but it’s probably something they could play at the Forks, Washington high school prom.

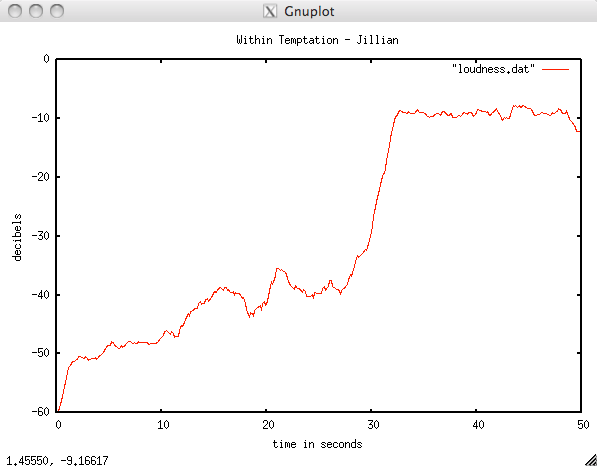

The song Jillian by Within Temptation gets a score of 861 for this dramatic opening:

Now that’s drama! Take a look at the plot:

The slow build – and then the hammer hits. You can almost see the vampires and the werewolves colliding in a frenzy.

During this little project I learned that most of the original band members of Evanescence left and formed another band called We are the Fallen – with a very similar sound (leading me to suspect that there was a whole lot of a very different kind of drama in Evanescence). Here’s their dramatic Tear The World Down (scores a 468):

Finally we have the track Maria by Guano Apes, perhaps my favorite of the bunch:

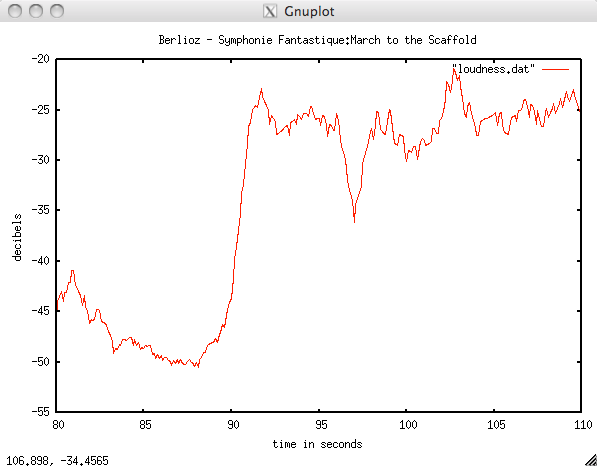

Update: @han wondered how well the drama detector fared on Romantic-era music. Here’s a plot for Berlioz’s Symphonie fantastique: March to the Scaffold:

This gets a very dramatic score of 361. Note that in the following rendition the dramatic bit that aligns with the previous plot occurs at 1:44:

Well, there you have it: a little bit of code to detect dramatic moments in music. It can’t, of course, tell you whether or not the music is good, but it can help you filter music down to a small set that you can easily preview. To build the drama detector, I used a few of The Echo Nest APIs, including:

- song/search – to search for songs by name and to get the analysis data (where all the detailed loudness info lives)

- artist/similar – to find all the similar artists to a seed (in this case Evanescence)

The code is written in Python using pyechonest, and the plots were made using gnuplot. If you are interested in finding your own dramatic bits let me know and I’ll post the code somewhere.

Spot the sweatsedo!

Posted by Paul in fun, The Echo Nest, video on February 16, 2011

While all of the hackers were making music hacks at last weekend’s Music Hack Day, the non-technical staff from The Echo Nest were working on their own hack: a video of the event. They’ve posted it on YouTube. It is pretty neat, with a cool remix soundtrack by Ben Lacker.

But wait … they also tweeted this contest:

To win the contest, you had to count the number of Echo Nest tee-shirts and Sweatsedos that appear in the video and tweet the results. It turns out it was a really hard contest. On my first try I counted 12, but there were many more, and some were very, very subtle. But we do have a winner! Here’s the answer key:


Last night at 7:30 PM EST one Kevin Dela Rosa posted this tweet:

Congrats to Kevin for his excellent counting ability! Kevin please email your size and shipping info to Paul@echonest.com and we’ll get you into the smooth and velvety blue!

Fans Forever and Ever

One of my very very favorite hacks from this weekend’s Music Hack Day is Greg Sabo’s Fans Forever And Ever. “Fans Forever and Ever” automatically generates a (sometimes rather creepy) fan page for an artist. It works by taking an artist’s cultural data from The Echo Nest API as well as song lyrics from musiXmatch. The fictional fan that creates the page has a randomly created set of personality traits drawn from a pool of crazy. I especially like the Geocities look and the borderline-psychotic poetry:

Give me country music!

Here’s a poem I wrote:

I fill myself with the pop sound

The concert changed my life

I hope I do a good job

die, die

…because death is the only solution

I put it on my iPod

I’ll just put on some female

such music

HEAVEN is the only place for Taylor Swift

My friends don’t understand female artist

There is only one life that I want to take

I live for the music.

what should I wear as I commit murder

“Forever & Always” gets me every time

it’s a shame to make it go quick

I don’t care what they say

I have a collection of saws

Never ever say I’m not a true Taylor Swift fan

‘Fans Forever and Ever’ makes sure you remember to keep the ‘fan’ in ‘fanatic’.

“We will hack!”

Music Hack Day NYC 2011 is done! What a weekend it was! 175 hackers built 72 kick-ass hacks in 24 hours. Many thanks to General Assembly for hosting the weekend in their wonderful ‘urban campus’. The facilities were top notch, the wireless and network were flawless and the people from General Assembly were incredibly accommodating.

John Britton from Twilio and NYHacker brought the whole event together, lining up the venue and a great set of sponsors. (Oh, and Twilio is really cool – a fantastic API for hacking on phones.)

The event would not have happened without Elissa and the sweatsedo army that did everything that needed to get done, from setting up 200 folding chairs and registering the hackers to serving beer and taking out the trash. Thanks to Elissa, Matthew, Meghan, Janelle and DJ Sohn for working around the clock to make this all happen!

There is already some great reporting on the Music Hack Day appearing online:

- At Evolver.FM Eliot Van Buskirk is writing a detailed blog post about each and every one of the 72 hacks. It should take him about 40 days and 40 nights to get it done.

- Peter Kirn at Create Digital Music has an excellent post focusing on the novel performance control hacks from the weekend: At Music Hack day, Amidst Listening Interfaces, Novel Performance Control a Winner

- Fuse.TV had a whole crew at the event, filming, interviewing and blogging the whole time.

- The New York Observer: 72 Apps are born at NYC Music Hack Day

Be sure to check out Thomas Bonte’s excellent Flickr photostream of the event.

Final thanks to Dave Haynes for kicking off the Music Hack Day and diligently shepherding the movement over the last two years. Music Hack Day is now an unstoppable force!

Eric and the SoundCloud gang put together the Music Hack Day Rap – just listen to the energy in the crowd (and this is after sitting through nearly 3 hours and 72 demos):

I had a great time at the event – can’t wait to do it again next year!

The 3D Music Explorer

Posted by Paul in events, Music, visualization on January 27, 2011

Next month I’m giving a talk at SXSW Interactive on using visualizations for discovering music. In my talk I’ll be giving a number of demos of various types of visualizations used for music exploration and discovery. One of the demos is an interactive 3D visualizer that I built a few years back. The goal of this visualizer is to allow you to use 3D game mechanics to interact with your music collection. Here’s a video:

Hope to see you at the talk.

MIDEM Hack Day

I’m just back from a whirlwind trip to Cannes where I took part in the first ever MIDEM Hack Day, where 20 hotshot music hackers gathered to build the future of music. The hackers were from music tech companies like Last.fm, SoundCloud, Songkick, The Echo Nest, BMAT and MusixMatch, from universities like Queen Mary and Goldsmiths, from one of the four major labels, and a number of independent developers. We all arrived in the exotic French Riviera, with its casinos, yachts and palm trees. But instead of lying on the beach, we all willingly spent our time in this wonderful room called Auditorium J:

The first thing we did was rearrange the furniture so we could all see each other, making interaction easier. It wasn’t long before we had audio hooked up, with hackers taking turns at being the DJ for the room.

Dave and I took a break from the hacking to give a talk on the ‘New Developer Ecosystem’. We talked about how developers were becoming the new gatekeepers in the world of music. The talk was well attended with lots of good questions from the audience. (Yes, I was a bit surprised. I was half expecting that MIDEM would be filled with the old guard – reps from the traditional music industry that would be hostile toward self-proclaimed new gatekeepers. There were indeed folks from the labels there and asking questions, but they seemed very eager to engage with us).

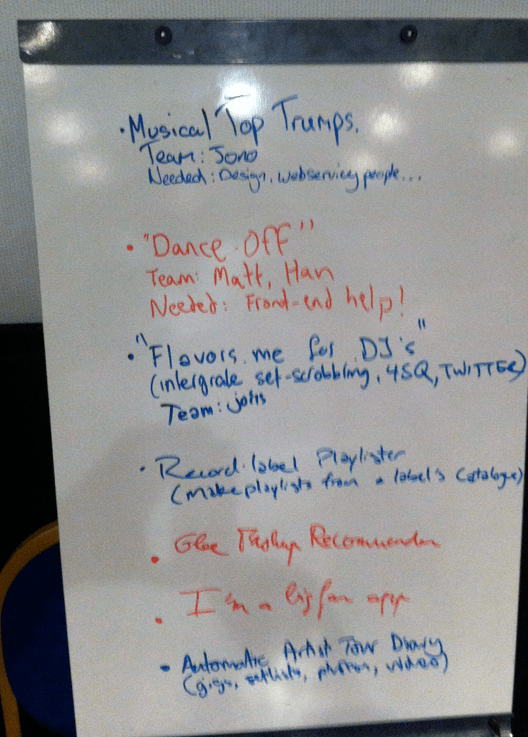

While Dave and I were talking, the rest of the gang had self-organized, giving project pitches, forming teams, making coding assignments and perhaps most importantly figuring out how to make the espresso machine work.

Here are some of the project pitches:

Some teams started with designs and dataflow diagrams, while others dived straight into coding (one team instead started by composing the music for their app).

Dataflow diagrams, system architecture, and UI minispecs became the artwork for the hacking space.

After the lightning design rounds, people settled into their hacking spots and started hacking:

By mid-afternoon on the first day of hacking, the teams were focused on building their hacks.

There were some interesting contrasts during the day. While we were hacking away in Auditorium J, right next door was a seminar on HADOPI (the proposed French law under which those accused of copyright violations could be banned from the Internet forever).

As we got further into our hacks, we gave demos to each other.

Over the course of the weekend, we had a few ‘walk-ins’ who were interested in understanding what was going on. We did feel a little bit like zoo animals as we coded with an audience.

Taylor Hanson dropped by to see what was going on. He was really interested in the idea of connecting artists with hackers/technologists. After the visit we were MMMBopping the rest of the day.

Towards the end of the first day, the Palais cleared out, so we had the whole conference center to ourselves. We made the beer run, had a couple and then went right back to hacking.

Finally, the demo time had arrived. After more than 24 hours of hacking we were ready (or nearly ready). Demos were created, rehearsed and recorded.

We presented our demos to an enthusiastic audience. We laughed, we cried …

There were some really creative hacks demoed – Evolver.fm has chronicled them all: MIDEM Hack Day Hacks Part 1 and MIDEM Hack Day Hacks Part 2. At the end of the hack day, we were all very tired, but also very excited about what we had accomplished in one weekend.

Thanks much to the MIDEMNet organizers who took care of all of the details for the event – sandwiches, soda, coffee, flawless Internet. They provided everything we needed to make this event possible. Special thanks to Thomas Bonte (unofficial Music Hack Day photographer) for taking so many awesome photos.