Archive for category The Echo Nest

Music Hack Day Hacks – HacKey

Posted by Paul in fun, Music, The Echo Nest on January 31, 2010

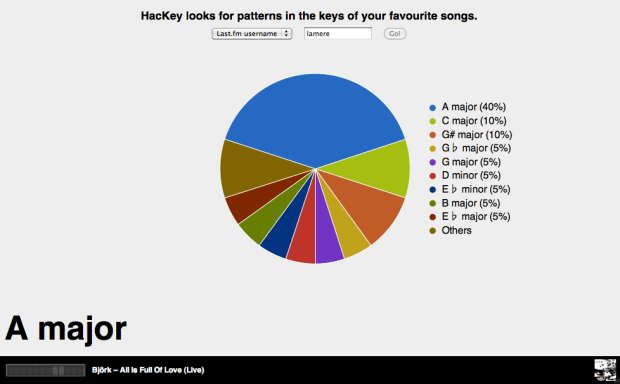

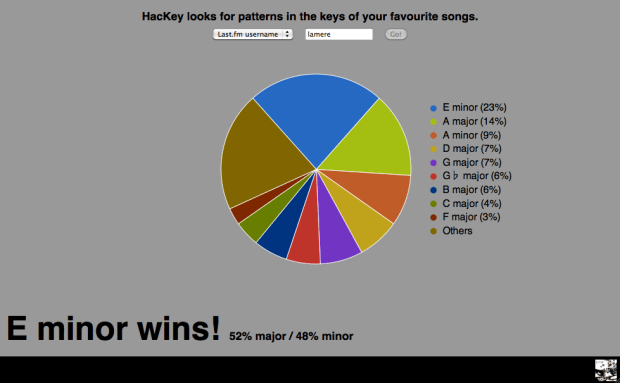

The Stockholm Music Hack Day hacks are starting to roll in. One really neat one is ‘HacKey’ by Matt Ogle from Last.fm. This hack gives you a chart that shows the keys of the most-listened-to songs in your Last.fm profile:

As you can see my favorite key is E minor. I should put this on a tee-shirt.

The hack uses Brian’s new search_tracks API for the key identification. Cool beans! Will we see flaneur in velour?
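For the curious, here is a rough sketch of the kind of tally a chart like this needs. It is not Matt's code. It assumes each track comes back with an integer key (0 = C through 11 = B) and a mode flag (1 = major, 0 = minor), and the `key_name` and `key_histogram` helpers, the field names, and the listening data are all made up for illustration.

```python
from collections import Counter

# Pitch-class names for integer keys 0-11 (assumed encoding: 0 = C ... 11 = B).
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def key_name(key, mode):
    """Turn an assumed (key, mode) pair into a label such as 'E minor'."""
    return f"{PITCH_CLASSES[key]} {'major' if mode == 1 else 'minor'}"


def key_histogram(tracks):
    """Count how often each key shows up, weighted by play count."""
    counts = Counter()
    for track in tracks:
        counts[key_name(track["key"], track["mode"])] += track["plays"]
    return counts


# Made-up listening data, purely for illustration.
top_tracks = [
    {"title": "Track A", "key": 4, "mode": 0, "plays": 12},
    {"title": "Track B", "key": 4, "mode": 0, "plays": 7},
    {"title": "Track C", "key": 0, "mode": 1, "plays": 9},
]

histogram = key_histogram(top_tracks)
favorite, plays = histogram.most_common(1)[0]
print(favorite, plays)
```

Weighting by play count is a design choice; counting each track once would give a chart of your library rather than of your listening.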

I don’t know how well Matt’s hack will scale, so I won’t put a link to it here on the blog until after he’s done demoing it at the hack day. Update: Matt’s demo is done, so here’s the link: http://users.last.fm/~matt/hackey/

Scar – an automatic remix of ‘Cars’

Posted by Paul in remix, The Echo Nest on January 30, 2010

An automatic cut-up by Adam Lindsay of this video:

Adam says: No human choices were made in the creation of this video. The video and the audio are always cut in sync with reference to the original: what you see and hear at any given moment are what Rob Sheridan captured in real time with his single camera setup.

New Echo Nest APIs demoed at the Stockholm Music Hackday

Posted by Paul in events, Music, The Echo Nest, web services on January 30, 2010

Today at the Stockholm Music Hack Day, Echo Nest co-founder Brian Whitman demoed the alpha version of a new set of Echo Nest APIs. There are three new public methods, plus hints about a fourth.

- search_tracks: This is IMHO the most awesomest method in the Echo Nest API. It lets you search through the millions of tracks that the Echo Nest knows about. You can search for tracks based on artist and track title of course, but you can also search based upon how people describe the artist or track (‘funky jazz’, ‘punk cabaret’, ‘screamo’). You can constrain the results based upon musical attributes (range of tempo, range of loudness, the key/mode), and you can even constrain them based upon the geo-location of the artist. Finally, you can specify how you want the search results ordered: you can sort by tempo, loudness, key, mode, and even lat/long. This new method lets you fashion all sorts of interesting queries like:

- Find the slowest songs by Radiohead

- Find the loudest romantic songs

- Find the northernmost rendition of a reggae track
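Each of the queries above boils down to "filter, then sort". The sketch below shows that shape on a tiny in-memory list. It does not call the Echo Nest API and does not use its real parameter names; the `search` function, the field names, and the catalog entries are all hypothetical stand-ins.

```python
def search(tracks, where=None, sort_by=None, descending=False, limit=None):
    """Filter tracks with an optional predicate, then sort and truncate."""
    results = [t for t in tracks if where is None or where(t)]
    if sort_by is not None:
        results.sort(key=lambda t: t[sort_by], reverse=descending)
    return results if limit is None else results[:limit]


# Made-up catalog: tempo in BPM, loudness in dB, lat = artist latitude.
catalog = [
    {"artist": "Artist A", "title": "Slow One", "tempo": 62.0,
     "loudness": -14.0, "lat": 51.5, "terms": ["romantic"]},
    {"artist": "Artist A", "title": "Fast One", "tempo": 140.0,
     "loudness": -6.0, "lat": 51.5, "terms": ["rock"]},
    {"artist": "Artist B", "title": "Ballad", "tempo": 70.0,
     "loudness": -9.0, "lat": 59.3, "terms": ["romantic"]},
    {"artist": "Artist C", "title": "Island Tune", "tempo": 90.0,
     "loudness": -11.0, "lat": 18.0, "terms": ["reggae"]},
    {"artist": "Artist D", "title": "Nordic Skank", "tempo": 88.0,
     "loudness": -10.0, "lat": 64.1, "terms": ["reggae"]},
]

# "Find the slowest songs by <artist>": filter on artist, sort by tempo.
slowest_by_a = search(catalog, where=lambda t: t["artist"] == "Artist A",
                      sort_by="tempo", limit=1)

# "Find the loudest romantic songs": filter on a descriptive term.
loudest_romantic = search(catalog, where=lambda t: "romantic" in t["terms"],
                          sort_by="loudness", descending=True, limit=1)

# "Find the northernmost rendition of a reggae track": sort by latitude.
northernmost_reggae = search(catalog, where=lambda t: "reggae" in t["terms"],
                             sort_by="lat", descending=True, limit=1)

print(slowest_by_a[0]["title"], loudest_romantic[0]["title"],
      northernmost_reggae[0]["title"])
```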

The index of tracks for this API is already quite large, and will continue to grow as we add more music to the Echo Nest. (But note that this is an alpha version and thus it is subject to the whims of the alpha-god. Even as I write this, the index used to serve up these queries is being rebuilt, so only a small fraction of our tracks is currently visible.) And BTW if you are at the Stockholm Music Hack Day, look for Brian and ask him about the secret parameter that will give you some special search_tracks goodness!

One of the things you get back from the search_tracks method is a track ID. You can use this track ID to get the analysis for any track using the new get_analysis method. No longer do you need to upload a track to get the analysis for it. Just search for it and we are likely to have the analysis already. The search_tracks method has been the one most frequently requested by our developers, so I’m excited to see it released.

- get_analysis – this method will give you the full track analysis for any track, given its track ID. The method couldn’t be simpler: give it a track ID and you get back a big wad-o-json. All of the track analysis, with one call. (Note that for this alpha release, we have a separate track ID space from the main APIs, so IDs for tracks that you’ve analyzed with the released/supported APIs won’t necessarily be available with this method.)

- capsule – this is an API that supports this-is-my-jam functionality. Give the API a URL to an XSPF playlist and you’ll get back some JSON that points you to both a Flash player URL and an MP3 URL for a capsulized version of the playlist. In the capsulized version, the song transitions are aligned and beatmatched like an old-style DJ would do.
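If you have never written an XSPF playlist by hand, here is a minimal sketch of generating one with Python's standard library. Only the XSPF format itself is real here: the `build_xspf` helper and the example.com URLs are placeholders, and nothing below talks to the capsule API. You would still need to host the resulting file somewhere and hand its URL to the API.

```python
import xml.etree.ElementTree as ET

# XSPF version 1 namespace, as defined by the XSPF specification.
XSPF_NS = "http://xspf.org/ns/0/"


def build_xspf(track_urls, title="My Jam"):
    """Return a minimal XSPF playlist document as a string."""
    ET.register_namespace("", XSPF_NS)  # serialize with a default xmlns
    playlist = ET.Element(f"{{{XSPF_NS}}}playlist", {"version": "1"})
    ET.SubElement(playlist, f"{{{XSPF_NS}}}title").text = title
    track_list = ET.SubElement(playlist, f"{{{XSPF_NS}}}trackList")
    for url in track_urls:
        track = ET.SubElement(track_list, f"{{{XSPF_NS}}}track")
        ET.SubElement(track, f"{{{XSPF_NS}}}location").text = url
    return ET.tostring(playlist, encoding="unicode")


# Placeholder URLs, purely for illustration.
playlist_xml = build_xspf([
    "http://example.com/audio/one.mp3",
    "http://example.com/audio/two.mp3",
])
print(playlist_xml)
```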

Brian also described a new identify_track method that returns metadata for a track when you give the Echo Nest a set of musical fingerprint hashcodes. This is a method that you use in conjunction with the new Echo Nest audio fingerprinter (woah!). If you are at the Stockholm Music Hack Day and you are interested in solving the track resolution problem, talk to Brian about getting access to the new and nifty audio fingerprinter.

These new APIs are still in alpha – so lots of caveats surround them. To quote Brian: we may pull or throttle access to alpha APIs at a different rate from the supported ones. Please be warned that these are not production ready, we will be making enhancements and restarting servers, there will be guaranteed downtime.

The new APIs hint at the direction we are going here at the Echo Nest. We want to continue to open up our huge quantities of data for developers, making as much of it available as we can to anyone who wants to build music apps. These new APIs return JSON; XML is so old-fashioned. All the cool developers are using JSON as the data transport mechanism nowadays: it’s easy to generate, easy to parse, and makes for a very nimble way to work with web services. We’ll be adding JSON support to all of our released APIs soon.

I’m also really excited about the new fingerprinting technology. Here at the Echo Nest we know how hard it is to deal with artist and track resolution – and we want to solve this problem once and for all, for everybody – so we will soon be releasing an audio fingerprinting system. We want to make this system as open as we can, so we’ll make all the FP data available to anyone. No secret hash-to-ID algorithms, and no private datasets. The Fingerprinter is fast, uses state-of-the-art audio analysis and will be backed by a dataset of fingerprint hashcodes for millions of tracks. I’ll be writing more about the new fingerprinter soon.

These new APIs should give those lucky enough to be in Stockholm this weekend something fun to play with. If you are at the Stockholm Hack Day and you build something cool with these new APIs you may find yourself going home with the much coveted Echo Nest sweatsedo:

Best ever Echo Nest prize at the Stockholm Music Hackday

Posted by Paul in events, fun, The Echo Nest on January 26, 2010

Yep, the sweatsedos have arrived in Stockholm. Four lucky users of the Echo Nest API will get to wear the official Echo Nest uniform home as a prize for their efforts.

Two new sweatsedos in the office

Posted by Paul in fun, The Echo Nest on January 25, 2010

What I see everyday at The Echo Nest

Posted by Paul in fun, The Echo Nest on January 22, 2010

Rearranging the Machine

Posted by Paul in Music, remix, The Echo Nest on January 5, 2010

Last month I used Echo Nest remix to rearrange a Nickelback song (see From Nickelback to Bickelnack) by replacing each sound segment with another similar-sounding segment. Since Nickelback is notorious for their self-similarity, a few commenters suggested that I try the remix with a different artist to see if the musicality stems from the remix algorithm or from Nickelback’s self-similarity. I also had a few tweaks to the algorithm that I wanted to try out, so I gave it a go. Instead of remixing Nickelback, I remixed the best-selling Christmas song of 2009, Rage Against The Machine’s ‘Killing in the Name’.

Here’s the remix using the exact same algorithm that was used to make the Bickelnack remix:

Like the Bickelnack remix, this remix is still rather musical. (Source for this remix is here: vafroma.py)

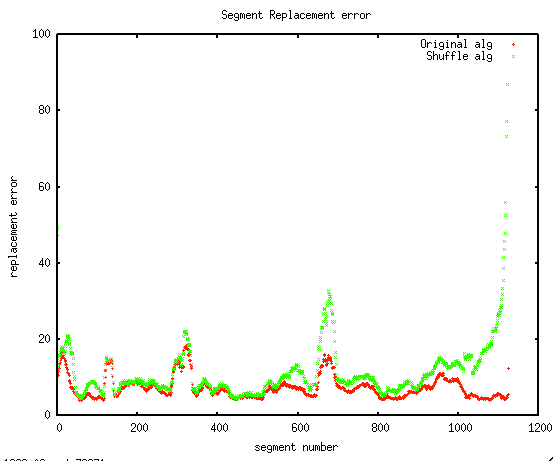

A true shuffle: One thing that is a bit unsatisfying about this algorithm is that it is not a true reshuffling of the input. Since the remix algorithm is looking for the nearest match, it is possible for a single segment to appear many times in the output while some segments may not appear at all. For instance, of the 1140 segments that make up the original RATM ‘Killing in the Name’, only 706 are used to create the final output (some segments are used as many as 9 times in the output). I wanted to make a version that was a true reshuffling, one that used every input segment exactly once in the output, so I changed the inner remix loop to only consider unused segments for insertion. The algorithm is a greedy one, so segments that occur early in the song have a bigger pool of replacement segments to draw on. The result is that as the song progresses, the similarity of replacement segments tends to drop off.
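Here is a toy sketch of the difference between the two approaches. It is not the code in vafroma.py or vafroma2.py: the one-number "features" are made up, Euclidean distance stands in for whatever similarity measure the real remix uses, and the function names are hypothetical.

```python
import math


def nearest_match_remix(features):
    """For each segment, pick the closest *other* segment; reuse is allowed."""
    order = []
    for i, target in enumerate(features):
        candidates = [j for j in range(len(features)) if j != i]
        best = min(candidates, key=lambda j: (math.dist(target, features[j]), j))
        order.append(best)
    return order


def true_shuffle_remix(features):
    """Greedy variant: each segment may be used only once in the output."""
    unused = set(range(len(features)))
    order = []
    for i, target in enumerate(features):
        # Prefer any unused segment other than i itself; if i is the only
        # one left, this sketch falls back to leaving it in place.
        candidates = [j for j in unused if j != i] or list(unused)
        best = min(candidates, key=lambda j: (math.dist(target, features[j]), j))
        unused.remove(best)
        order.append(best)
    return order


# Made-up one-dimensional segment features, purely for illustration.
features = [(0.0,), (1.0,), (3.0,), (10.0,), (12.0,)]

reused = nearest_match_remix(features)
shuffled = true_shuffle_remix(features)
errors = [math.dist(features[i], features[j]) for i, j in enumerate(shuffled)]

print(reused)    # segment 1 appears twice, segment 2 never appears
print(shuffled)  # every segment appears exactly once
print(errors)    # the worst fit lands on the last segment
```

Even on five segments you can see the greedy effect: the early segments grab their best matches, and the last one is stuck with whatever is left.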

I was curious to see how much extra error there was in the later segments, so I plotted the segment fitting error. In this plot, the red line is the fitting error for the original algorithm and the green line is the fitting error for the shuffling algorithm. I was happy to see that for most of the song, there is very little extra error in the shuffling algorithm. Things only get bad in the last 10% of the song.

You can see and hear the song decay as the pool of replacement segments diminishes. The last 30 seconds are quite chaotic. (Remix source for this version is here: vafroma2.py)

More coherence: Pulling song segments from any part of a song to build a new version yields fairly coherent audio. However, the remixed video can be rather chaotic, as it seems to switch cameras every second or so. I wanted to make a version of the remix that would reduce the shifting of the camera. To do this, I gave slight preference to consecutive segments when picking the next segment. For example, if I’ve replaced segment 5 with segment 50, when it is time to replace segment 6, I’ll give segment 51 a little extra chance. The result is that the output contains longer sequences of contiguous segments. Nevertheless, no segment is ever in its original spot in the song. Here’s the resulting version:
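One way to give the next consecutive segment "a little extra chance" is to knock a fixed discount off its distance. That is an assumption on my part for this sketch, not necessarily what vafroma3.py does; the `bonus` parameter, the function names, and the feature values are all made up.

```python
import math


def coherent_shuffle(features, bonus=1.5):
    """Greedy one-use-per-segment remix that favors continuing a run."""
    unused = set(range(len(features)))
    order = []
    previous = None  # index of the segment chosen for the previous slot

    def cost(target, j):
        d = math.dist(target, features[j])
        if previous is not None and j == previous + 1:
            d -= bonus  # assumed form of the "extra chance": a distance discount
        return (d, j)

    for i, target in enumerate(features):
        # Skip the segment's own index; this sketch falls back to it only
        # when nothing else is left, so the final slot can stay in place.
        candidates = [j for j in unused if j != i] or list(unused)
        best = min(candidates, key=lambda j: cost(target, j))
        unused.remove(best)
        order.append(best)
        previous = best
    return order


def contiguous_pairs(order):
    """Count neighboring output slots that hold consecutive input segments."""
    return sum(1 for a, b in zip(order, order[1:]) if b == a + 1)


# Made-up one-dimensional segment features, purely for illustration.
features = [(0.0,), (4.0,), (1.0,), (5.5,), (4.5,), (9.0,)]

plain = coherent_shuffle(features, bonus=0.0)
coherent = coherent_shuffle(features, bonus=1.5)

print(plain, contiguous_pairs(plain))
print(coherent, contiguous_pairs(coherent))
```

The size of the bonus is the knob: too small and the camera still jumps around, too large and the output drifts toward long copied stretches of the original.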

I find this version to be easier to watch. (Source is here: vafroma3.py).

Next experiments along these lines will be to draw segments from a large number of different songs by the same artist, to see if we can rebuild a song without using any audio from the source song. I suspect that Nickelback will again prove themselves to be the masters of self-similarity:

Here’s the original, un-remixed version of RATM’s ‘Killing in the Name’:

Watch out world, the Echo Nest is now unstoppable

Posted by Paul in fun, Music, The Echo Nest on December 22, 2009

Today, we are unveiling the new Echo Nest secret weapon that will guarantee World Domination: our new Echo Nest suit. These suits are in stunning robin’s egg blue velour and are made of a special textile that is guaranteed to absorb all forms of moisture, keeping us fresh and dry at all times. Here I am modeling the new suit at the Echo Nest Holiday party:

Here are the Echo Nest big wigs trying out the suit: Brian, on the left, doesn’t need a special suit — he already has a superpower (doughnut scrying).

Here’s Team Blue heading out at lunch to grab some burgers. They turned a few heads in Davis Square.

We have the suits in the office, and at any moment we are prepared to ‘suit up’ to meet any music data emergencies that may arise anywhere in the world. This suit is why the Echo Nest is so awesome.

The other obsession at the Echo Nest

Posted by Paul in fun, The Echo Nest on December 21, 2009

At the Echo Nest, everyone is obsessed with music. But there’s also another obsession as highlighted in this new photo blog: lookatthisfuckingcrema.com:

Echo Nest analysis and visualization for Dopplereffekt – Scientist

Posted by Paul in fun, remix, The Echo Nest, visualization on December 20, 2009