Archive for category research

ISMIR Oral Session 3 – Musical Instrument Recognition and Multipitch Detection

Session Chair: Juan Pablo Bello

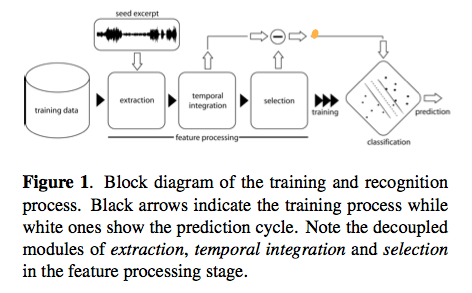

SCALABILITY, GENERALITY AND TEMPORAL ASPECTS IN AUTOMATIC RECOGNITION OF PREDOMINANT MUSICAL INSTRUMENTS IN POLYPHONIC MUSIC

By Ferdinand Fuhrmann, Martín Haro, Perfecto Herrera

- Automatic recognition of music instruments

- Polyphonic music

- Predominant

Research Questions

- scale existing methods to highly polyphonic music

- generalize with respect to the instruments used

- model temporal information for recognition

Goals:

- Unified framework

- Pitched and unpitched …

- (more goals, but I couldn’t keep up)

Neat presentation of survey of related work, plotting on simple vs. complex

Ferdinand was going too fast for me (or perhaps jetlag was kicking in), so I include the conclusion from his paper here to summarize the work:

Conclusions: In this paper we addressed three open gaps in automatic recognition of instruments from polyphonic audio. First we showed that by providing extensive, well designed datasets, statistical models are scalable to commercially available polyphonic music. Second, to account for instrument generality, we presented a consistent methodology for the recognition of 11 pitched and 3 percussive instruments in the main western genres classical, jazz and pop/rock. Finally, we examined the importance and modeling accuracy of temporal characteristics in combination with statistical models. Thereby we showed that modelling the temporal behaviour of raw audio features improves recognition performance, even though a detailed modelling is not possible. Results showed an average classification accuracy of 63% and 78% for the pitched and percussive recognition task, respectively. Although no complete system was presented, the developed algorithms could be easily incorporated into a robust recognition tool, able to index unseen data or label query songs according to the instrumentation.

MUSICAL INSTRUMENT RECOGNITION IN POLYPHONIC AUDIO USING SOURCE-FILTER MODEL FOR SOUND SEPARATION

by Toni Heittola, Anssi Klapuri and Tuomas Virtanen

Quick summary: A novel approach to musical instrument recognition in polyphonic audio signals by using a source-filter model and an augmented non-negative matrix factorization algorithm for sound separation. The mixture signal is decomposed into a sum of spectral bases modeled as a product of excitations and filters. The excitations are restricted to harmonic spectra and their fundamental frequencies are estimated in advance using a multipitch estimator, whereas the filters are restricted to have smooth frequency responses by modeling them as a sum of elementary functions on the Mel-frequency scale. The pitch and timbre information are used in organizing individual notes into sound sources. The method is evaluated with polyphonic signals, randomly generated from 19 instrument classes.

Source separation into various sources. Typically uses non-negative matrix factorization. Problem: Each pitch needs its own function leading to many functions. The system overview:

The examples are very interesting: www.cs.tut.fi/~heittolt/ismir09
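For readers who have not met non-negative matrix factorization, here is a minimal sketch of the plain version mentioned above, not the augmented source-filter variant from the paper. It factors a tiny, made-up magnitude spectrogram `v` (frequency bins by frames) into spectral bases `w` and activations `h` with the standard multiplicative updates. The matrix values, the rank, the iteration count and all function names are assumptions chosen for illustration.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def frobenius_error(v, w, h):
    """Squared reconstruction error between v and the product w*h."""
    wh = matmul(w, h)
    return sum((x - y) ** 2 for rv, rw in zip(v, wh) for x, y in zip(rv, rw))

def nmf(v, rank, iterations=200, seed=0, eps=1e-9):
    """Plain NMF with multiplicative updates (Euclidean cost)."""
    rng = random.Random(seed)
    rows, cols = len(v), len(v[0])
    # Strictly positive random start so the updates can move every entry.
    w = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(rows)]
    h = [[rng.random() + 0.1 for _ in range(cols)] for _ in range(rank)]
    for _ in range(iterations):
        wt = transpose(w)
        num, den = matmul(wt, v), matmul(matmul(wt, w), h)
        h = [[h[i][j] * num[i][j] / (den[i][j] + eps) for j in range(cols)]
             for i in range(rank)]
        ht = transpose(h)
        num, den = matmul(v, ht), matmul(w, matmul(h, ht))
        w = [[w[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)]
             for i in range(rows)]
    return w, h

# Hypothetical spectrogram: two "notes", played alone and then together.
v = [[1.0, 0.0, 1.0],
     [2.0, 0.0, 2.0],
     [0.0, 3.0, 3.0],
     [0.0, 1.0, 1.0]]
w, h = nmf(v, rank=2)
```

Each column of `w` plays the role of one spectral basis. The problem noted above shows up quickly with real audio: without a shared model, every pitch of every instrument needs its own column.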

HARMONICALLY INFORMED MULTI-PITCH TRACKING

by Zhiyao Duan, Jinyu Han and Bryan Pardo

A novel system for multipitch tracking, i.e., estimating the pitch trajectory of each monophonic source in a mixture of harmonic sounds. Current systems are not robust: because they rely only on local time-frequency information, they tend to generate only short pitch trajectories. This system has two stages: multi-pitch estimation and pitch trajectory formation. In the first stage, they model spectral peaks and non-peak regions to estimate pitches and polyphony in each single frame. In the second stage, pitch trajectories are clustered under two constraints: global timbre consistency and local time-frequency locality.

Here’s the system overview:

ISMIR Oral Session 2 – Tempo and Rhythm

Posted by Paul in ismir, Music, music information retrieval, research on October 27, 2009

Session chair: Anssi Klapuri

IMPROVING RHYTHMIC SIMILARITY COMPUTATION BY BEAT HISTOGRAM TRANSFORMATIONS

By Matthias Gruhne, Christian Dittmar, and Daniel Gaertner

Matthias described their approach to generating beat histograms, similar to the techniques used by Burred, Gouyon, Foote and Tzanetakis. Problem: a beat histogram cannot be used directly as a feature because of its tempo dependency. Similar rhythms appear far apart in a Euclidean space because of this dependency. Challenge: reduce tempo dependence.

Solution: logarithmic transformation. See the figure:

This leads to a histogram with a tempo independent part which can be separated from the tempo dependent part. This tempo independent part can then be used in a Euclidean space to find similar rhythms.
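To see why a logarithmic axis helps, here is a small sketch. It is a simplification that works on a handful of peak lags rather than a full histogram, and it is not the authors' implementation. Changing the tempo multiplies every lag by the same factor, and on a log axis that multiplication becomes a constant shift. The peak lags, the 12 bins per octave and the function names are all assumptions.

```python
import math

def log_lag_bin(lag, min_lag=1.0, bins_per_octave=12):
    """Map a lag to a bin index on a logarithmic lag axis."""
    return round(bins_per_octave * math.log2(lag / min_lag))

def log_lag_pattern(peak_lags, bins_per_octave=12):
    """Split peak positions into a tempo-dependent offset and a
    tempo-independent shape (bins relative to the first peak)."""
    bins = sorted(log_lag_bin(lag, bins_per_octave=bins_per_octave)
                  for lag in peak_lags)
    offset = bins[0]
    shape = [b - offset for b in bins]
    return offset, shape

slow = [40, 80, 120, 160]          # hypothetical peak lags of one rhythm
fast = [lag / 2 for lag in slow]   # the same rhythm at double tempo

slow_offset, slow_shape = log_lag_pattern(slow)
fast_offset, fast_shape = log_lag_pattern(fast)
```

The two shapes come out identical, and the offsets differ by exactly one octave (12 bins), so only the shape needs to go into the Euclidean comparison.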

Evaluation: results went from 20% to 70%, and from 66% to 69% (this needs a significance test, I think)

USING SOURCE SEPARATION TO IMPROVE TEMPO DETECTION

By Parag Chordia and Alex Rae – presented by George Tzanetakis

Well, this is unusual: George will be presenting Parag and Alex’s work. Anssi suggests that we can use the wisdom of the crowds to answer the questions.

Motivation: Tempo detection is often unreliable for complex music.

Humans often resolve rhythms by entraining to a rhythmically regular part.

Idea: Separate music into components, some components may be more reliable.

Method:

- Source separation

- track tempo for each source

- decide global tempo by either:

  - Pick one with most regular structure

  - Look for common tempo across all sources/layers

Here’s the system:

PLCA (Probabilistic Latent Component Analysis) is a source separation method. Issues: the number of components needs to be specified in advance. It could merge sources, or one source could be split into multiple layers.

Autocorrelation is used for tempo detection. Regular sources will have higher peaks.
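A minimal sketch of autocorrelation-based tempo estimation, assuming we already have an onset-strength envelope for one separated layer. The envelope here is synthetic, and the frame rate, BPM range and function names are my assumptions, not details from the talk.

```python
def autocorrelation(signal, max_lag):
    """Unnormalized autocorrelation of the mean-removed signal."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]
    return [sum(centered[i] * centered[i + lag] for i in range(n - lag))
            for lag in range(max_lag + 1)]

def estimate_tempo(envelope, frame_rate, min_bpm=60, max_bpm=200):
    """Return (bpm, strength) for the strongest lag in the allowed range."""
    min_lag = int(round(60.0 * frame_rate / max_bpm))
    max_lag = int(round(60.0 * frame_rate / min_bpm))
    acf = autocorrelation(envelope, max_lag)
    best_lag = max(range(min_lag, max_lag + 1), key=lambda lag: acf[lag])
    # Peak height relative to lag 0: regular layers score close to 1.
    strength = acf[best_lag] / acf[0]
    return 60.0 * frame_rate / best_lag, strength

frame_rate = 100  # envelope frames per second (assumed)
# Synthetic layer: one onset every 0.5 s, i.e. 120 BPM, for 10 seconds.
envelope = [1.0 if i % 50 == 0 else 0.0 for i in range(1000)]
bpm, strength = estimate_tempo(envelope, frame_rate)
```

The `strength` value is one simple way to rank layers by regularity before picking the one to trust.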

Other approach – a machine learning approach – a supervised learning problem

Global tempo using clustering – merge all tempo candidates into a single vector, grouping candidates that fall within a 5% tolerance of each other (including at 0.5x and 2x), to give a peak histogram showing confidence for each tempo.
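Here is a rough sketch of that merging step, written as simple voting rather than whatever clustering the authors actually use. Each candidate collects a vote from every candidate that lands within 5% of it, either directly or after halving or doubling. The per-layer tempo values and the function name are made up.

```python
def vote_global_tempo(candidates, tolerance=0.05):
    """Pick the candidate supported by the most per-layer estimates."""
    def matches(a, b):
        # a supports b if a, a/2 or 2a lies within the tolerance of b.
        return any(abs(a * factor - b) <= tolerance * b
                   for factor in (0.5, 1.0, 2.0))

    votes = {c: sum(1 for other in candidates if matches(other, c))
             for c in candidates}
    best = max(votes, key=votes.get)
    return best, votes

# Hypothetical tempo estimates (BPM), one per separated layer.
layer_tempos = [120.0, 61.0, 114.5, 240.0, 95.0]
best, votes = vote_global_tempo(layer_tempos)
```

Here 120 BPM wins because the 61 and 240 BPM layers count as half-time and double-time support for it, while the 95 BPM layer stands alone.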

Evaluation

- IDM09 – http://paragchordia.com/data.html

- MIREX06 (20 mixed-genre excerpts)

Accuracy: MIREX06: 0.50, this method: 0.60

Question: How many sources were specified to PLCA? Answer: 8. George thinks it doesn’t matter too much.

Question: Other papers show that similar techniques do not show improvement for larger datasets

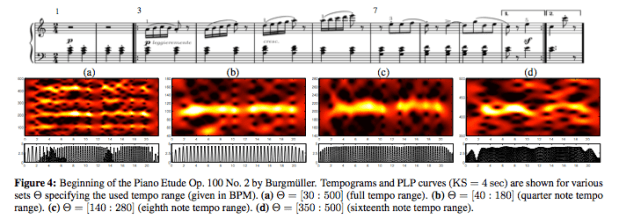

A MID-LEVEL REPRESENTATION FOR CAPTURING DOMINANT TEMPO AND PULSE INFORMATION IN MUSIC RECORDINGS

By Peter Grosche and Meinard Müller

Example – a waltz – where the downbeat is not too strong compared to beats 2 & 3. It is hard to find onsets in the energy curves. Instead, use:

- Create a spectrogram

- Log compression of the spectrogram

- Derivative

- Accumulation

This yields a novelty curve, which can be used for onset detection. Downbeats are missing, though, so how do you beat track this? Compute a tempogram, a spectrogram of the novelty curve. This yields a periodicity kernel. All kernels are combined to obtain a single kernel, which is rectified to give a predominant local pulse (PLP) curve. The PLP curve is dynamic but can be constrained to track at the bar, beat or tatum level.
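The four steps that produce the novelty curve can be sketched like this, starting from a magnitude spectrogram that is assumed to exist already. The compression constant and the half-wave rectification of the derivative (keeping only energy increases) are my assumptions, and the toy spectrogram is invented.

```python
import math

def novelty_curve(spectrogram, compression=1000.0):
    """spectrogram: list of frames, each a list of non-negative magnitudes."""
    # Log compression, so that quiet onsets are not swamped by loud ones.
    compressed = [[math.log(1.0 + compression * m) for m in frame]
                  for frame in spectrogram]
    novelty = [0.0]  # no previous frame to compare the first one with
    for prev, cur in zip(compressed, compressed[1:]):
        # Derivative over time, keeping increases only (assumed), then
        # accumulation over all frequency bins.
        novelty.append(sum(max(c - p, 0.0) for p, c in zip(prev, cur)))
    return novelty

# Toy spectrogram: silence, a note onset, decay, silence, the same onset.
spec = [[0.0, 0.0], [0.0, 0.0], [1.0, 0.5], [0.8, 0.4], [0.0, 0.0], [1.0, 0.5]]
curve = novelty_curve(spec)
```

The curve is zero everywhere except at the two onsets, which is what makes it a better starting point than the raw energy curve.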

Issues: PLP likes to fill in the gaps – which is not always appropriate. Trouble with the Borodin String Quartet No. 2. But when tempo is tightly constrained, it works much better.

This was a very good talk. Meinard presented lots of examples including examples where the system did not work well.

Question: Realtime? Currently kernels are 4 to 6 seconds. With a latency of 4 to 6 seconds it should work in an online scenario.

Question: How different from DTW on the tempogram? Not connected to DTW in any way.

Question: How important is the hopsize? Not that important since a sliding window is used.

ISMIR Oral Session 1 – Knowledge on the Web

Oral Session 1A – Knowledge on the Web

Oral Session 1A – The first Oral Session of ISMIR 2009, chaired by Malcolm Slaney of Yahoo! Research

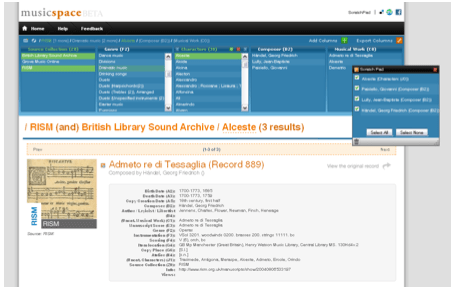

Integrating musicology’s heterogeneous data sources for better exploration

by David Bretherton, Daniel Alexander Smith, mc schraefel, Richard Polfreman, Mark Everist, Jeanice Brooks, and Joe Lambert

Project Link: http://www.mspace.fm/projects/musicspace

Musicologists consult many data sources; musicSpace tries to integrate these resources. Here’s a screenshot of what they are trying to build.

Working with many public and private organizations (from the British Library to Naxos).

Motivation: Many musicologist queries are just intractable because they need to consult several resources, they are multipart queries (requiring *pen and paper*), and there is insufficient granularity of metadata or search options. Solution: integrate sources, provide an optimally interactive UI, and increase granularity.

Difficulties: Many formats for data sources,

Strategies: Increase granularity of data by making annotations explicit. Generate metadata – fall back on human intelligence, inspired by Amazon Mechanical Turk – to clean and extract data.

User Interface – David demonstrated the faceted browser to satisfy a complex query (find all the composers that Monteverdi’s scribe was also the scribe for). I liked the dragging columns.

There are issues with linked databases from multiple sources – when one source goes away (for instance, for licensing reasons), the links break.

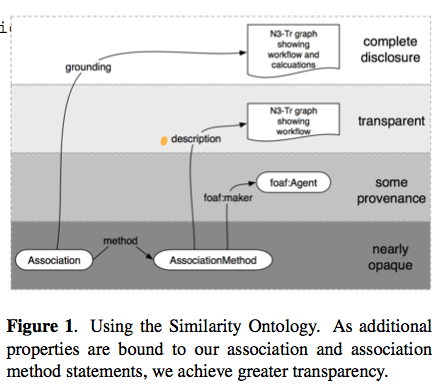

An Ecosystem for transparent music similarity in an open world

By Kurt Jacobson, Yves Raimond, Mark Sandler

Link http://classical.catfishsmooth.net/slides/

Kurt was really fast and it was hard to take coherent notes, so here are my fragmented notes.

Assumption: you can use music similarity for recommendation. Music similarity:

Tversky suggested that you can’t really put similarity into a Euclidean space: it is not symmetric. He suggests a contrast model based on comparing features – analogous to ‘bag of features’.

What does music similarity really mean? We can’t say! It means different things in different contexts. Context is important. Make similarity an RDF concept. A hierarchy of similarity was too limiting. Similarity now has properties. We reify the similarity: how, how much, etc.
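For reference, Tversky’s contrast model scores similarity as a weighted count of shared features minus weighted counts of the features unique to each side. Here is a small sketch with feature sets standing in for tracks. The weights, the feature names and the use of plain set size as the salience measure are assumptions for illustration.

```python
def tversky_contrast(a, b, theta=1.0, alpha=0.8, beta=0.2):
    """Contrast model: reward shared features, penalize distinctive ones.
    With alpha != beta the score depends on the direction of comparison."""
    a, b = set(a), set(b)
    return theta * len(a & b) - alpha * len(a - b) - beta * len(b - a)

# Hypothetical feature sets for two tracks.
track_a = {"piano", "minor key", "slow"}
track_b = {"piano", "minor key", "slow", "strings", "choir"}

a_to_b = tversky_contrast(track_a, track_b)  # how similar is A to B?
b_to_a = tversky_contrast(track_b, track_a)  # how similar is B to A?
```

The sparse track comes out as more similar to the rich one than the other way round, which is exactly the asymmetry a Euclidean distance cannot express.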

Association method with levels of transparency as follows:

Example implementation: http://classical.catfishsmooth.net/about/

Kurt demoed the system showing how he can create a hybrid query for timbral, key and composer influence similarity. It was a nice demo.

Future work: digitally signed similarity statements – neat idea.

Kurt challenges the Last.fm, BMATs and the Echo Nests and anyone who provides similarity information: Why not publish MuSim?

Interfaces for document representation in digital music libraries

By Andrew Hankinson, Laurent Pugin, Ichiro Fujinaga

Goal: Designing user interfaces for displaying music scores

Part of the RISM project – music digitization and metadata

Bring together information from many resources.

Five Considerations

- Preservation of Document integrity – image gallery approach doesn’t give you a sense of the complete document

- Simultaneous viewing of parts – for example, the tenor and bass parts may be separated in the work, and should be viewable together without window juggling.

- Provide multiple page resolutions – zooming is important

- Optimized page loading

- Image + Metadata should be presented simultaneously

Current Work

Implemented a prototype viewer that takes the 5 considerations into account. Andy gave a demo of the prototype – seems to be quite an effective tool for displaying and browsing music scores:

A good talk, well organized and presented – nice demo.

Oral Session 1B – Performance Recognition

Oral Session 1B, chaired by Simon Dixon (Queen Mary)

Body movement in music information retrieval

by Rolf Inge Godøy and Alexander Refsum Jensenius

Abstract: We can see many and strong links between music and human body movement in musical performance, in dance, and in the variety of movements that people make in listening situations. There is evidence that sensations of human body movement are integral to music as such, and that sensations of movement are efficient carriers of information about style, genre, expression, and emotions. The challenge now in MIR is to develop means for the extraction and representation of movement-inducing cues from musical sound, as well as to develop possibilities for using body movement as input to search and navigation interfaces in MIR.

Links between body movement and music everywhere. Performers, listeners, etc. Movement is integral to music experience. Suggest that studying music-related body movement can help our understanding of music.

One example: http://www.youtube.com/watch?v=MxCuXGCR8TE

Relate music sound to subject images.

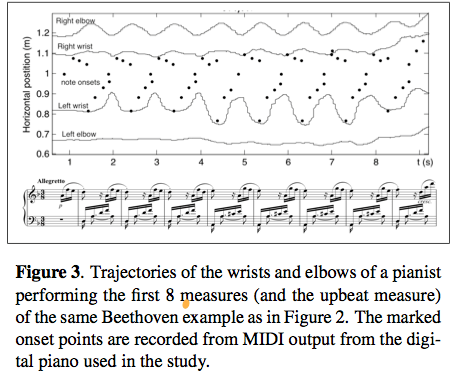

Looking at performance of pianists – creates a motiongram – and a motion capture.

Listeners have lots of knowledge about sound producing motions/actions. These motions are integral to music perception. There’s a constant mental model of the sound/action. Example: Air guitar vs. Real Guitar

This was a thought-provoking talk. I wonder how the music-action model works when the music controller is no longer acoustic – do we model music motions when using a laptop as our instrument?

Who is who in the end? Recognizing pianists by their final ritardandi

by Maarten Grachten and Gerhard Widmer

Some examples: Harasiewicz vs. Ashkenazy – similar to how different people walk down the stairs.

Why? Structure asks for it or … they just feel like it. How much of this is specific to the performer? Fixed effects: transmitting moods, clarifying musical structure. Transient effects: spontaneous decisions, motor noise, performance errors.

Study – looking at piece specific vs. performance specific tempo variation. Results: Global tempo from the piece, local variation is performance specific.

Method:

- Define a performance norm

- Determine where performers significantly deviate

- Model the deviations

- Classify performances based on the model

In actuality, use the average performance as a norm. Note also there may be errors in annotations that have to be accounted for.
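The first steps of the method can be sketched as follows, using the average performance as the norm. The tempo curves and pianist names are made up, and this stops well short of the significance testing and classification in the paper.

```python
def performance_norm(tempo_curves):
    """Average the tempo curves of all performances, position by position."""
    n = len(tempo_curves)
    return [sum(values) / n for values in zip(*tempo_curves.values())]

def deviations(tempo_curves):
    """Per-performer deviation from the average performance."""
    norm = performance_norm(tempo_curves)
    return {name: [t - m for t, m in zip(curve, norm)]
            for name, curve in tempo_curves.items()}

# Hypothetical tempo (BPM) at four score positions of a final ritardando.
curves = {
    "pianist_a": [100.0, 90.0, 70.0, 40.0],
    "pianist_b": [100.0, 80.0, 50.0, 40.0],
}
norm = performance_norm(curves)
devs = deviations(curves)
```

Both pianists start and end at the same tempo, so everything that tells them apart lives in the middle of the deviation curves.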

Here are the deviations from the performance norm for various performers.

Accuracy ranges from 65.31 to 43.53 – (50% is random baseline). Results are not overwhelming, but still interesting considering the simplistic model.

Interesting questions about students and schools and styles that may influence results.

All in all, an interesting talk and very clearly presented.

Using Visualizations for Music Discovery

Posted by Paul in code, data, events, fun, Music, music information retrieval, research, The Echo Nest, visualization on October 22, 2009

On Monday, Justin and I will present our magnum opus – a three-hour long tutorial entitled: Using Visualizations for Music Discovery. In this talk we look at the various techniques that can be used for visualization of music. We include a survey of the many existing visualizations of music, as well as talk about techniques and algorithms for creating visualizations. My hope is that this talk will be inspirational as well as educational, spawning new music discovery visualizations. I’ve uploaded a PDF of our slide deck to slideshare. It’s a big deck, filled with examples, but note that large as it is, the PDF isn’t the whole talk. The tutorial will include many demonstrations and videos of visualizations that just are not practical to include in a PDF. If you have the chance, be sure to check out the tutorial at ISMIR in Kobe on the 26th.

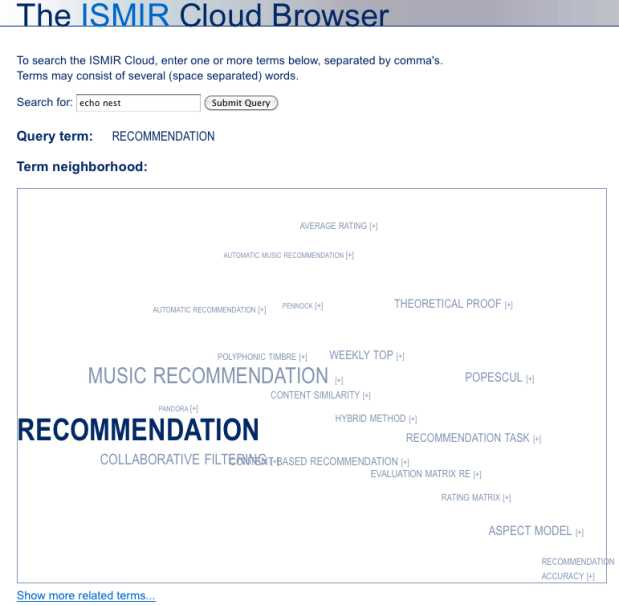

ISMIR Cloud browser

Posted by Paul in Music, music information retrieval, research on October 19, 2009

Maarten Grachten, Markus Schedl, Tim Pohle and Gerhard Widmer have created an ISMIR Cloud browser that lets you browse the 10 years of music information research via a text cloud. Check it out: The ISMIR Cloud Browser

There must be 85 ways to visualize your music

Posted by Paul in Music, research, visualization on October 2, 2009

Justin and I have been working hard, preparing our tutorial, Using Visualizations for Music Discovery, which is being presented at ISMIR 2009 in Kobe, Japan. Here’s a teaser image showing 85 of the visualizations that we’ll be talking about during the tutorial. If you’ve created a music visualization that is useful for music exploration and discovery, and you don’t see a thumbnail of it here, let me know in the next couple of days.

Draw a picture of your musical taste

Tristan F from the BBC posted this hand drawing of his musical taste to Flickr. As he says in the photo comments: I had to stop at some point so it’s not comprehensive. But it’s all about connections.

I find these types of drawings to yield really interesting insights into the listener and to music in general. For instance Tristan has a line connecting Sufjan Stevens to Bill Frisell. I’m still pondering that connection. As I prepare for my upcoming ISMIR tutorial on Using Visualizations for Discovering Music, I’d like to collect a few more personal visualizations of music taste. If you feel so inclined, draw a picture that represents your music taste, post it to Flickr and tag it with ‘MyMusicTaste’. I’ll post a follow up … and particularly interesting ones will appear in the tutorial.

Herd it on Facebook

Posted by Paul in data, Music, music information retrieval, research on September 25, 2009

UCSD Researcher Gert Lanckriet announced today that “Herd It” – the game-with-a-purpose for collecting audio annotations – has been officially launched on Facebook, following in the footsteps of other gwaps such as Major Miner, Tag-a-tune and the Listen Game.

On the music-ir mailing list Gert explains ‘Herd it’: “The scientific goal of this experiment is to investigate whether a human computation game integrated with the Facebook social network will allow the collection of high quality tags for audio clips. The quality of the tags will be tested by using them to train an automatic music tagging system (based on statistical models). Its predictive accuracy will be compared to a system trained on high quality tags collected through controlled human surveys (such as, e.g., the CAL500 data set). The central question we want to answer is whether the “game tags” can train an auto-tagging system as (or more) accurately than “survey tags” and, if yes, under what conditions (amount of tags needed, etc.). The results will be reported once enough data has been collected.”

I’ve played a few rounds of the game and enjoyed myself. I recognized all of the music that they played (it seemed to be drawn from top 100 artists like Nirvana, Led Zeppelin, Mariah Carey and John Lennon). The timed rounds made the game move quickly. Overall, the game was fun. But I did miss the feeling of close collaboration that I would get from some other Gwaps where I would have to try to guess how my partner would try to describe a song. Despite this, I found the games to be fun and I could easily see spending a few hours trying to get a top score. The team at UCSD clearly has put lots of time into making the games highly interactive and fun. Animations, sound and transparent game play all add to the gaming experience. One glitch: even though I was logged into Facebook, the Herd It game didn’t seem to know who I was; it just called me ‘Herd It’. So my awesome high score is anonymous.

Here are some screen shots from the game. For this round, I had to choose the most prominent sound (this was for the song ‘Heart of Gold’). I chose slide guitar, but most people chose acoustic guitar (what do they know!).

For this round, I had to choose the genre for a song. Easy enough.

For this round I had to position a song on a Thayer mood model scale.

Here’s the game kick off screen … as you can see, I’m “Herd it” and not Paul

I hope the Herd It game attracts lots of attention. It could be a great source of music metadata.

Divisible by Zero

Posted by Paul in Music, music information retrieval, research on September 9, 2009

Be sure to check out the new MIR blog Divisible by Zero by Queen Mary PhD Student Rebecca Stewart. Becky is particularly interested in using spatial audio techniques to enhance music discovery. I find her first post You want the third song on the left to be quite interesting. She’s using a spatially-enabled database to manage fast lookups of similar tracks that have been positioned in a 2D space using LDMS. This is a really neat idea. It turns a problem that can be particularly vexing into a simple SQL query.

I hope Becky will continue to write about this project in her blog. I’m particularly interested in learning how well the spatial database scales to industrial-sized music collections, what her query times are and how the LDMS/GIS similarity compares to results using a brute force nearest neighbor distance calculation on the feature vectors. – (via Ben Fields)
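To give a feel for how similarity lookup becomes a simple SQL query, here is a rough stand-in that uses plain SQLite and a bounding-box query instead of a real spatially-enabled database. I don’t know Becky’s actual schema or database, so the table layout, track names and coordinates below are all invented.

```python
import sqlite3

def build_index(points):
    """Store (title, x, y) rows in an in-memory table with an index."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tracks (title TEXT, x REAL, y REAL)")
    conn.executemany("INSERT INTO tracks VALUES (?, ?, ?)", points)
    conn.execute("CREATE INDEX idx_xy ON tracks (x, y)")
    return conn

def nearby_tracks(conn, x, y, radius):
    """Fetch tracks inside a square around (x, y), nearest first."""
    rows = conn.execute(
        "SELECT title, x, y FROM tracks "
        "WHERE x BETWEEN ? AND ? AND y BETWEEN ? AND ?",
        (x - radius, x + radius, y - radius, y + radius),
    ).fetchall()
    # The square is only a cheap prefilter; sort by true 2D distance.
    rows.sort(key=lambda row: (row[1] - x) ** 2 + (row[2] - y) ** 2)
    return [title for title, _, _ in rows]

# Hypothetical tracks already positioned in a 2D similarity space.
points = [("song_a", 0.0, 0.0), ("song_b", 0.1, 0.1),
          ("song_c", 0.5, 0.5), ("song_d", 2.0, 2.0)]
conn = build_index(points)
neighbors = nearby_tracks(conn, 0.0, 0.0, radius=0.6)
```

A real GIS extension would replace the square prefilter with a proper spatial index, which is where the scaling questions above come in.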

Finding music with pictures

Posted by Paul in Music, music information retrieval, research, visualization on September 7, 2009

As part of the collateral information for our upcoming ISMIR tutorial (Using Visualizations for Music Discovery), Justin and I have created a new blog: Visualizing Music. This blog, inspired by our favorite InfoVis blogs like Information Aesthetics and Visual Complexity, will be a place where we catalog and critique visualizations that help people explore and understand music.

There are hundreds of music visualizations out there – so it may take us a little while to get them all cataloged, but we’ve already added some of our favorites. Help us fill out the whole catalog by sending us links to interesting music visualizations.

Check out the new blog: Visualizing Music