Archive for category research

A Cartesian Ensemble of Feature Subspace Classifiers for Music Categorization

Posted by Paul in ismir, Music, music information retrieval, research on August 11, 2010

A Cartesian Ensemble of Feature Subspace Classifiers for Music Categorization (pdf)

Thomas Lidy, Rudolf Mayer, Andreas Rauber, Pedro J. Ponce de León, Antonio Pertusa, and Jose Manuel Iñesta

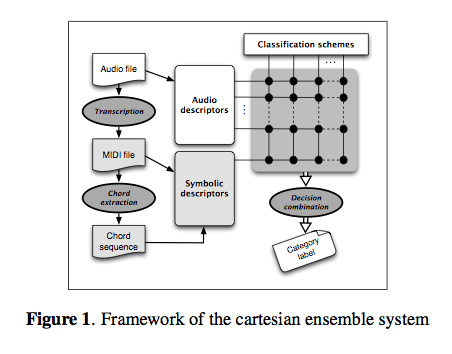

Abstract: We present a Cartesian ensemble classification system that is based on the principle of late fusion and feature subspaces. These feature subspaces describe different aspects of the same data set. The framework is built on the Weka machine learning toolkit and able to combine arbitrary feature sets and learning schemes. In our scenario, we use it for the ensemble classification of multiple feature sets from the audio and symbolic domains. We present an extensive set of experiments in the context of music genre classification, based on numerous Music IR benchmark datasets, and evaluate a set of combination/voting rules. The results show that the approach is superior to the best choice of a single algorithm on a single feature set. Moreover, it also releases the user from making this choice explicitly.

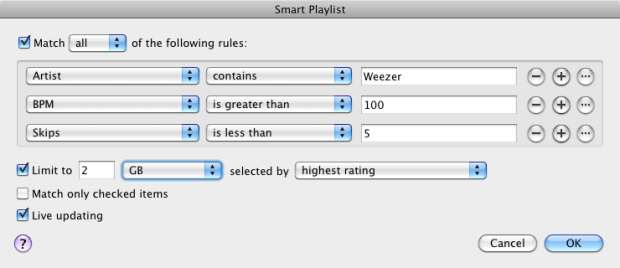

An ensemble classification system built on top of Weka:

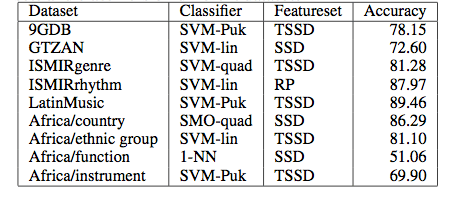

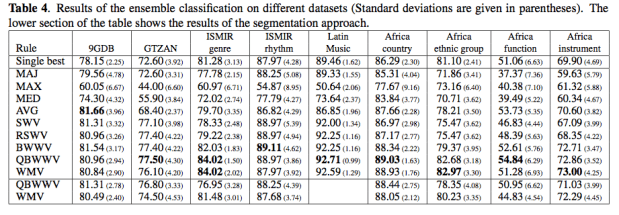

Results, using different datasets, classifiers and feature sets:

Execution time was about 10 seconds per song, which is rather slow for large collections.

The ensemble approach delivered superior results by adding a reasonable number of feature sets and classifiers. However, the authors did not find a combination rule that always outperforms all the others.
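To make the late-fusion idea concrete, here is a minimal Python sketch, not the authors' Weka-based framework. It builds the Cartesian product of feature sets and learners (every pair is one ensemble member) and then fuses per-member class probabilities with a few standard combination rules: majority vote, sum, product and max. The feature-set and learner names are hypothetical placeholders, and this particular rule set is my assumption; the paper evaluates its own set of combination/voting rules.

```python
import math
from collections import Counter
from itertools import product


def cartesian_members(feature_sets, learners):
    """Every (feature set, learning scheme) pair becomes one ensemble member."""
    return list(product(feature_sets, learners))


def combine(member_probs, rule="sum"):
    """Late fusion of per-member class probabilities for a single song.

    member_probs: list of {class_label: probability} dicts, one per member.
    """
    classes = list(member_probs[0])
    if rule == "majority":
        # each member votes for its most probable class
        votes = Counter(max(p, key=p.get) for p in member_probs)
        return votes.most_common(1)[0][0]
    if rule == "sum":
        scores = {c: sum(p[c] for p in member_probs) for c in classes}
    elif rule == "product":
        # summing logs ranks classes like multiplying (up to the tiny epsilon)
        # but avoids numerical underflow with many members
        scores = {c: sum(math.log(p[c] + 1e-12) for p in member_probs) for c in classes}
    elif rule == "max":
        scores = {c: max(p[c] for p in member_probs) for c in classes}
    else:
        raise ValueError(f"unknown rule: {rule}")
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # hypothetical feature sets x learners -> 9 ensemble members
    members = cartesian_members(["rhythm", "timbre", "symbolic"], ["svm", "knn", "naive_bayes"])
    print(len(members), "members")
    # toy outputs of three members for one song
    probs = [{"rock": 0.6, "jazz": 0.4}, {"rock": 0.55, "jazz": 0.45}, {"rock": 0.1, "jazz": 0.9}]
    for rule in ("majority", "sum", "product", "max"):
        print(rule, combine(probs, rule))
```

With these toy member outputs, majority vote picks one genre while the probability-based rules pick the other, a small illustration of why no single rule wins everywhere.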

ON THE APPLICABILITY OF PEER-TO-PEER DATA IN MUSIC INFORMATION RETRIEVAL RESEARCH

Posted by Paul in ismir, Music, music information retrieval, research on August 11, 2010

ON THE APPLICABILITY OF PEER-TO-PEER DATA IN MUSIC INFORMATION RETRIEVAL RESEARCH (pdf)

Noam Koenigstein, Yuval Shavitt, Ela Weinsberg, and Udi Weinsberg

Abstract: Peer-to-Peer (p2p) networks are being increasingly adopted as an invaluable resource for various music information retrieval (MIR) tasks, including music similarity, recommendation and trend prediction. However, these networks are usually extremely large and noisy, which raises doubts regarding the ability to actually extract sufficiently accurate information.

This paper evaluates the applicability of using data originating from p2p networks for MIR research, focusing on partial crawling, inherent noise and localization of songs and search queries. These aspects are quantified using songs collected from the Gnutella p2p network. We show that the power-law nature of the network makes it relatively easy to capture an accurate view of the mainstream using relatively little effort. However, some applications, like trend prediction, mandate collection of the data from the “long tail”, hence a much more exhaustive crawl is needed. Furthermore, we present techniques for overcoming noise originating from user generated content and for filtering non-informative data, while minimizing information loss.

Observation: CF systems tend to outperform content-based systems until you get into the long tail, so to improve CF systems, you need more long tail data. This work explores how to get more long tail data by mining p2p networks.

P2P data has some problems: privacy concerns, difficult data collection, high user churn, very noisy data, users who delete content from their shared folders right away, and sparsity.

P2P mining: shared folders are useful for similarity; search queries are useful for trends.
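One common way to turn shared folders into a similarity measure is item-item cosine similarity over co-occurrence, treating each user's shared folder as a set. The sketch below assumes that approach; it is not necessarily what Koenigstein et al. used, and the function name is hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def folder_cosine_similarity(folders):
    """Cosine similarity between items, treating each shared folder as one user vector.

    folders: iterable of sets of item ids (songs or artists), one set per user.
    Returns {(a, b): similarity} for every pair that co-occurs in at least one folder.
    """
    item_counts = Counter()  # number of folders containing each item
    pair_counts = Counter()  # number of folders containing both items of a pair
    for folder in folders:
        items = sorted(folder)
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))
    # cosine of binary vectors: |users(a) & users(b)| / sqrt(|users(a)| * |users(b)|)
    return {
        (a, b): n / math.sqrt(item_counts[a] * item_counts[b])
        for (a, b), n in pair_counts.items()
    }


if __name__ == "__main__":
    folders = [{"song_a", "song_b"}, {"song_a", "song_b"}, {"song_a", "song_c"}]
    for pair, sim in sorted(folder_cosine_similarity(folders).items()):
        print(pair, round(sim, 3))
```

Counting all pairs is quadratic in folder size, so at the scale of a real crawl you would likely need pruning or sampling.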

There are lots of p2p challenges and steps: getting IP addresses for p2p nodes, filtering out non-musical content, geo-identification, and anonymization.

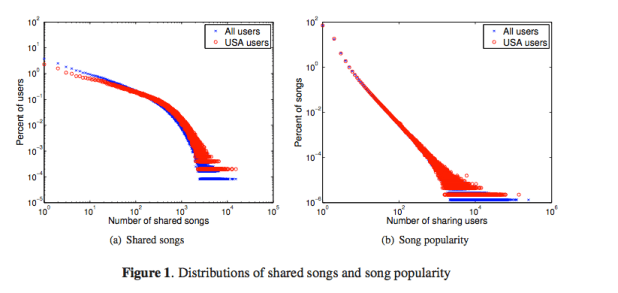

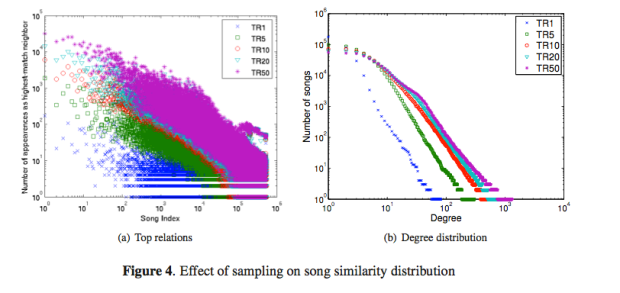

Dealing with sparsity: there are 1.2 million users, but on average only about one data point for each artist/song relation. These graphs show song popularity in shared folders; they use this data to help filter out non-typical users.

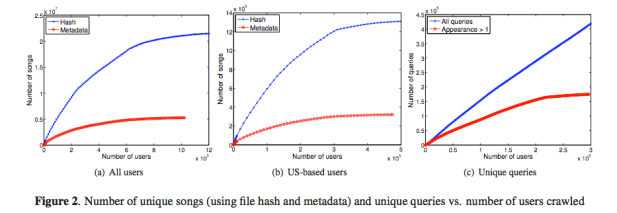

Identifying songs: they use the file hash, but of course many songs have many different digital copies, so they also look at the (noisy) metadata.
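Because the same recording circulates as many files with different hashes, resolving songs through metadata means normalizing noisy artist/title strings before grouping. A minimal sketch, assuming simple rules (lowercasing, dropping bracketed tags such as "(Remastered)", and collapsing punctuation); the paper's actual cleaning is likely more involved, and these rules and function names are illustrative assumptions.

```python
import re
from collections import defaultdict


def normalize(text):
    """Crude metadata cleanup (assumed rules, for illustration only)."""
    text = text.lower()
    text = re.sub(r"[\(\[].*?[\)\]]", " ", text)  # drop "(live)", "[remastered]", ...
    text = re.sub(r"[^a-z0-9]+", " ", text)  # punctuation, underscores -> space
    return " ".join(text.split())


def group_copies(files):
    """Group file hashes by normalized (artist, title).

    files: iterable of (file_hash, artist, title) tuples.
    Returns {(artist_key, title_key): set_of_hashes}.
    """
    groups = defaultdict(set)
    for file_hash, artist, title in files:
        groups[(normalize(artist), normalize(title))].add(file_hash)
    return dict(groups)


if __name__ == "__main__":
    files = [
        ("h1", "Led Zeppelin", "Stairway to Heaven"),
        ("h2", "led_zeppelin", "Stairway To Heaven (Remastered)"),
        ("h3", "LED ZEPPELIN", "stairway-to-heaven"),
        ("h4", "Nirvana", "Lithium"),
    ]
    for key, hashes in group_copies(files).items():
        print(key, sorted(hashes))
```

The ASCII-only character class also splits accented names (Björk becomes "bj rk"); that is consistent across copies, so grouping still works, but real data would call for Unicode-aware handling.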

Songs Discovery Rate

If you resolve songs using metadata, you have found most of the tracks once you have crawled about 1/3 of the network. If you rely on the hashes, you need to crawl 70% of the network.
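The discovery-rate result is consistent with the power-law popularity the paper describes. Here is a small simulation sketch under assumed parameters (Zipf-like song popularity, fixed-size shared folders, users crawled in random order); it is not the authors' crawler, and all names and parameter values are hypothetical. It reports both the fraction of distinct songs found and the fraction of shared copies that belong to already-found songs, a rough proxy for "the mainstream".

```python
import random
from itertools import accumulate


def build_network(num_users, num_songs, folder_size, exponent=1.0, seed=0):
    """Hypothetical network: each user shares songs drawn from a Zipf-like law."""
    rng = random.Random(seed)
    songs = list(range(num_songs))
    # song 0 is the most popular; popularity falls off as 1 / rank**exponent
    cum_weights = list(accumulate(1.0 / (rank + 1) ** exponent for rank in songs))
    return [set(rng.choices(songs, cum_weights=cum_weights, k=folder_size))
            for _ in range(num_users)]


def discovery_curve(folders, fractions, seed=0):
    """For each crawl fraction: (fraction, distinct-song coverage, copy-weighted coverage)."""
    rng = random.Random(seed)
    order = list(folders)
    rng.shuffle(order)  # crawl users in a random order
    occurrences = {}  # song -> number of folders sharing it
    for folder in folders:
        for song in folder:
            occurrences[song] = occurrences.get(song, 0) + 1
    total = sum(occurrences.values())
    curve = []
    for frac in sorted(fractions):
        found = set().union(*order[: round(frac * len(order))])
        distinct = len(found) / len(occurrences)
        mainstream = sum(occurrences[s] for s in found) / total
        curve.append((frac, distinct, mainstream))
    return curve


if __name__ == "__main__":
    folders = build_network(num_users=1000, num_songs=20000, folder_size=50)
    for frac, distinct, mainstream in discovery_curve(folders, [0.1, 0.33, 0.7, 1.0]):
        print(f"crawl {frac:.0%}: {distinct:.0%} of distinct songs, "
              f"{mainstream:.0%} of shared copies")
```

In a model like this the copy-weighted coverage typically climbs much faster than distinct-song coverage: the popular head shows up early, and only the long tail needs the exhaustive crawl.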

Using shared folders for similarity

There’s a preferential attachment model for popular songs.
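A preferential attachment process can be sketched with a Simon-style model: each new download is either a brand-new song (with a small probability) or a copy of a song chosen in proportion to its current popularity. This is a generic textbook model used here as an assumption, not the specific model from the paper; the function name and parameter values are hypothetical.

```python
import random
from collections import Counter


def simon_popularity(num_downloads, new_song_prob=0.1, seed=0):
    """Simon-style preferential attachment; returns {song_id: download_count}."""
    rng = random.Random(seed)
    downloads = [0]  # start with one song (id 0) downloaded once
    next_song = 1
    for _ in range(num_downloads - 1):
        if rng.random() < new_song_prob:
            downloads.append(next_song)  # a brand-new song enters the network
            next_song += 1
        else:
            # a uniformly random past download picks each song with
            # probability proportional to its current download count
            downloads.append(rng.choice(downloads))
    return Counter(downloads)


if __name__ == "__main__":
    counts = simon_popularity(20000)
    sizes = sorted(counts.values(), reverse=True)
    print("songs:", len(sizes), "top 5 counts:", sizes[:5],
          "median:", sizes[len(sizes) // 2])
```

Runs of this kind typically produce a handful of very popular songs and a long tail of songs with only one or two downloads.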

Conclusion: P2P data is a good source of long tail data, but dealing with the noisy data is hard. The p2p data is especially good for building similarity models localized to countries. A good talk from someone with lots of experience with p2p stuff.

MUSIC EMOTION RECOGNITION: A STATE OF THE ART REVIEW

Posted by Paul in ismir, music information retrieval, research on August 11, 2010

MUSIC EMOTION RECOGNITION: A STATE OF THE ART REVIEW (pdf)

Youngmoo E. Kim, Erik M. Schmidt, Raymond Migneco, Brandon G. Morton, Patrick Richardson, Jeffrey Scott, Jacquelin A. Speck, and Douglas Turnbull

From the paper: Recognizing musical mood remains a challenging problem primarily due to the inherent ambiguities of human emotions. Though research on this topic is not as mature as some other Music-IR tasks, it is clear that rapid progress is being made. In the past 5 years, the performance of automated systems for music emotion recognition using a wide range of annotated and content-based features (and multi-modal feature combinations) have advanced significantly. As with many Music-IR tasks open problems remain at all levels, from emotional representations and annotation methods to feature selection and machine learning.

While significant advances have been made, the most accurate systems thus far achieve predictions through large-scale machine learning algorithms operating on vast feature sets, sometimes spanning multiple domains, applied to relatively short musical selections. Oftentimes, this approach reveals little in terms of the underlying forces driving the perception of musical emotion (e.g., varying contributions of features) and, in particular, how emotions in music change over time. In the future, we anticipate further collaborations between Music-IR researchers, psychologists, and neuroscientists, which may lead to a greater understanding of not only mood within music, but human emotions in general. Furthermore, it is clear that individuals perceive emotions within music differently. Given the multiple existing approaches for modeling the ambiguities of musical mood, a truly personalized system would likely need to incorporate some level of individual profiling to adjust its predictions.

This paper has provided a broad survey of the state of the art, highlighting many promising directions for further research. As attention to this problem increases, it is our hope that the progress of this research will continue to accelerate in the near future.

My notes:

Mirex performance on mood classification has held steady for the last few years. Most mood classification systems in Mirex are just adapted genre classifiers.

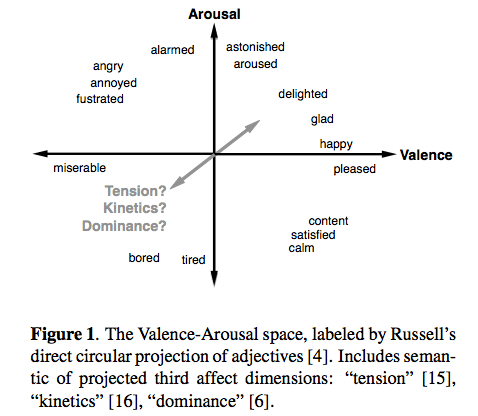

Categorical vs. dimensional

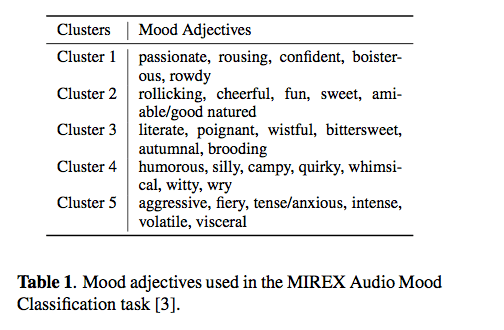

Categorical: Mirex classifies mood into 5 clusters:

Dimensional: the ever-popular Valence-Arousal space, sometimes called the Thayer Mood model:

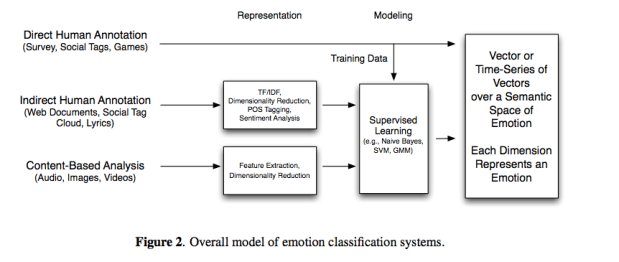
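Dimensional models are often turned into categorical labels by placing mood words at coordinates in valence-arousal space and picking the nearest one. The sketch below shows that idea; the mood words and their coordinates are illustrative assumptions, not the MIREX clusters or values from the paper.

```python
import math

# Illustrative anchor points (valence, arousal), each axis in [-1, 1].
# These coordinates are assumptions, not values from the paper or MIREX.
MOOD_ANCHORS = {
    "happy": (0.8, 0.5),
    "excited": (0.5, 0.9),
    "angry": (-0.6, 0.8),
    "sad": (-0.7, -0.5),
    "calm": (0.5, -0.7),
}


def nearest_mood(valence, arousal):
    """Map a point in valence-arousal space to the closest anchor word."""
    return min(MOOD_ANCHORS,
               key=lambda m: math.dist((valence, arousal), MOOD_ANCHORS[m]))


if __name__ == "__main__":
    # e.g. the output of a valence/arousal regressor for one clip
    print(nearest_mood(0.7, 0.4))
    print(nearest_mood(-0.6, -0.6))
```

Keeping the regression output in continuous form and only mapping to words at the end lets the same model serve both the dimensional and the categorical view.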

Typical emotion classification system

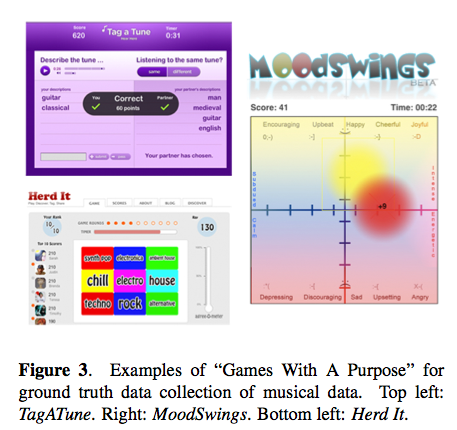

Ground Truth

A big challenge is to come up with ground truth for training a recognition system. Last.fm tags, GWAP, AMG labels, and web documents are common sources.

Lyrics – using lyrics alone has not been too successful for mood classification.

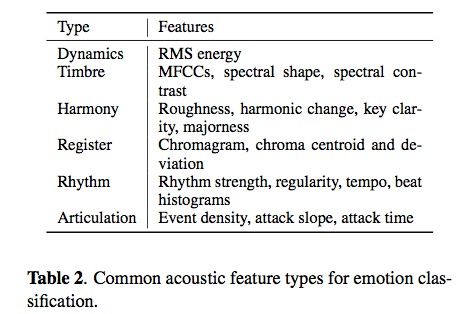

Content-based methods – typical features for mood:

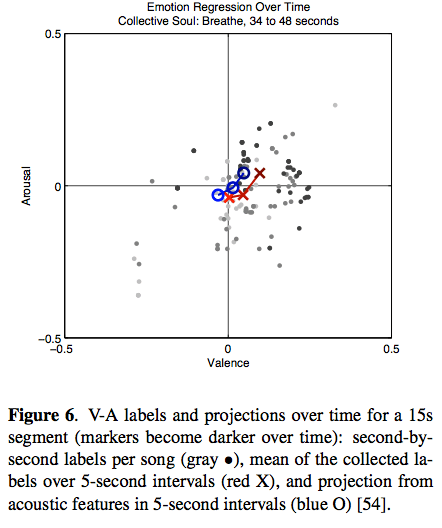

Youngmoo’s latest work (with Erik Schmidt) shows the distribution and change of emotion over time.

Hybrid systems

- Audio + lyrics: modest to large improvement

- Audio + tags: good improvement

- Audio + images: using album art to derive associations to mood

Conclusions – Mood recognition hasn’t improved much in recent years – probably because most systems are not really designed specifically for mood.

This was a great overview of the state-of-the-art. I’d be interested in hearing a much longer version of this talk. The paper and the references will be a great resource for anyone who’s interested in pursuing mood classification.

Solving Misheard Lyric Search Queries ….

Posted by Paul in ismir, music information retrieval, research on August 10, 2010

Solving Misheard Lyric Search Queries using a Probabilistic Model of Speech Sounds

Hussein Hirjee and Daniel G. Brown

People often use lyrics to find songs, and they often get the lyrics wrong. One example, for Nirvana: “Don’t walk on guns, burn your friends”. Approach: use phonetic similarity. They adapt BLAST, from DNA sequence matching, to the problem. Lyrics are represented as sequences of phonemes, which are matched using a scoring matrix.

For training, they get data from ‘misheard lyrics’ sites like KissThisGuy.com. They align the misheard with the real lyrics – to build a model of frequently misheard phonemes. They tested with KissThisGuy.com misheard lyrics. Scored with 5 different models.
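BLAST is a fast heuristic for local sequence alignment. The sketch below uses exact Smith-Waterman local alignment instead, to show how a misheard query could be scored against phoneme sequences with a substitution score. The ARPAbet-style phoneme symbols, the confusable pairs and all score values are assumptions; in the paper, the phoneme confusion model is learned from aligned misheard lyrics rather than hand-written.

```python
# Hypothetical confusable phoneme pairs (ARPAbet-style symbols, an assumption).
CONFUSABLE = {frozenset(pair) for pair in
              [("B", "P"), ("D", "T"), ("M", "N"), ("AH", "AO"), ("IH", "IY")]}


def phoneme_score(a, b):
    """Toy substitution score: +3 match, +1 plausible confusion, -1 otherwise."""
    if a == b:
        return 3.0
    if frozenset((a, b)) in CONFUSABLE:
        return 1.0
    return -1.0


def local_alignment_score(query, target, score=phoneme_score, gap=-2.0):
    """Best Smith-Waterman local alignment score of two phoneme sequences."""
    h = [[0.0] * (len(target) + 1) for _ in range(len(query) + 1)]
    best = 0.0
    for i in range(1, len(query) + 1):
        for j in range(1, len(target) + 1):
            h[i][j] = max(
                0.0,  # start a new local alignment here
                h[i - 1][j - 1] + score(query[i - 1], target[j - 1]),  # match/substitute
                h[i - 1][j] + gap,  # query phoneme left unmatched
                h[i][j - 1] + gap,  # target phoneme left unmatched
            )
            best = max(best, h[i][j])
    return best


def rank_lyrics(query, lyrics_db):
    """lyrics_db: {song_title: phoneme_sequence}; best-scoring songs first."""
    return sorted(lyrics_db,
                  key=lambda t: local_alignment_score(query, lyrics_db[t]),
                  reverse=True)


if __name__ == "__main__":
    db = {"song 1": ["P", "AO", "N"], "song 2": ["K", "AE", "T"]}
    print(rank_lyrics(["B", "AH", "N"], db))
```

Local (rather than global) alignment matters here because a query usually covers only a short stretch of a song's lyrics.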

Evaluation: Mean Reciprocal Rank and hit rate by rank. The approach compared well with previous techniques. Still, 17% of lyrics were not identified; some are just bad queries, and short queries are another source of errors. They also looked at phoneme confusion, in particular confusions caused by singing.
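Both metrics are easy to compute from a ranked result list per query. A minimal sketch, assuming each query has exactly one correct song and that "hit rate by rank" means the fraction of queries whose correct song appears in the top k; the function names are hypothetical.

```python
def reciprocal_rank(ranked, correct):
    """1/rank of the correct item, or 0.0 if it was not retrieved."""
    if correct not in ranked:
        return 0.0
    return 1.0 / (ranked.index(correct) + 1)


def mean_reciprocal_rank(results):
    """results: list of (ranked_song_list, correct_song) pairs, one per query."""
    return sum(reciprocal_rank(r, c) for r, c in results) / len(results)


def hit_rate_at(results, k):
    """Fraction of queries whose correct song is among the top k results."""
    return sum(c in r[:k] for r, c in results) / len(results)


if __name__ == "__main__":
    results = [(["a", "b", "c"], "a"), (["b", "a", "c"], "a"), (["b", "c", "d"], "a")]
    print("MRR:", mean_reciprocal_rank(results))
    for k in (1, 2, 3):
        print(f"hit rate @ {k}:", hit_rate_at(results, k))
```

MRR rewards putting the right song near the very top, while the hit-rate curve shows how many queries succeed if the user is willing to scan k results.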

Future work: look at phoneme trigrams, and build a web site. A questioner suggested that they create a mondegreen generator.

Good presentation, interesting, fun problem area.

APPROXIMATE NOTE TRANSCRIPTION FOR THE IMPROVED IDENTIFICATION OF DIFFICULT CHORDS

Posted by Paul in events, ismir, music information retrieval, research on August 10, 2010

APPROXIMATE NOTE TRANSCRIPTION FOR THE IMPROVED IDENTIFICATION OF DIFFICULT CHORDS – Matthias Mauch and Simon Dixon

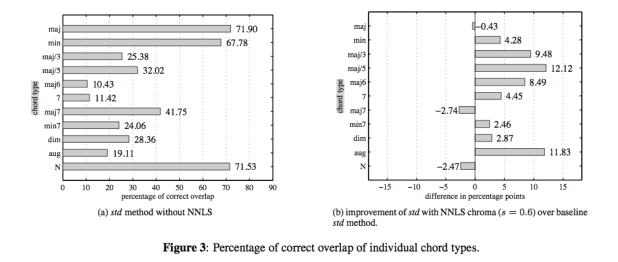

This is a new chroma extraction method using a non-negative least squares (NNLS) algorithm for prior approximate note transcription. Twelve different chroma methods were tested for chord transcription accuracy on popular music, using an existing high-level probabilistic model. The NNLS chroma features achieved top results of 80% accuracy that significantly exceed the state of the art by a large margin.

We have shown that the positive influence of the approximate transcription is particularly strong on chords whose harmonic structure causes ambiguities, and whose identification is therefore difficult in approaches without prior approximate transcription. The identification of these difficult chord types was substantially increased by up to twelve percentage points in the methods using NNLS transcription.
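The core idea, explaining a spectrum as a non-negative combination of note profiles and then folding the note activations into chroma, can be shown with a toy example. This is not Mauch and Dixon's implementation: the semitone-resolution spectrum, the harmonic weights and the solver are all assumptions. The solver is plain projected gradient descent, chosen to stay dependency-free; a dedicated NNLS solver such as `scipy.optimize.nnls` would be the usual choice.

```python
# Toy model (assumptions): one spectral bin per semitone, MIDI notes 48..83,
# and each note excites its fundamental plus two harmonics with made-up weights.
LOW, HIGH = 48, 84
HARMONICS = {0: 1.0, 12: 0.3, 19: 0.2}  # semitone offset -> weight (octave, octave + fifth)
PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_profile(note):
    """(bin, weight) pairs for the bins a note excites, clipped to the bin range."""
    return [(note + off, w) for off, w in HARMONICS.items() if note + off < HIGH]


def synth_spectrum(notes):
    """Semitone spectrum of a set of simultaneously sounding notes."""
    y = {b: 0.0 for b in range(LOW, HIGH)}
    for n in notes:
        for b, w in note_profile(n):
            y[b] += w
    return y


def nnls_transcribe(y, iters=500):
    """Note activations x >= 0 minimising ||E x - y||^2, via projected gradient."""
    x = {n: 0.0 for n in range(LOW, HIGH)}
    # step 1/L: the norm of E is at most the sum of the harmonic weights
    step = 1.0 / sum(HARMONICS.values()) ** 2
    for _ in range(iters):
        r = {b: -v for b, v in y.items()}  # residual r = E x - y
        for n, a in x.items():
            for b, w in note_profile(n):
                r[b] += w * a
        for n in x:  # gradient E^T r, then project onto x >= 0
            grad = sum(w * r[b] for b, w in note_profile(n))
            x[n] = max(0.0, x[n] - step * grad)
    return x


def fold_to_chroma(values_by_midi):
    """Sum values into 12 pitch classes (C, C#, ..., B)."""
    chroma = [0.0] * 12
    for midi, v in values_by_midi.items():
        chroma[midi % 12] += v
    return chroma


if __name__ == "__main__":
    spectrum = synth_spectrum([48, 52, 55])  # C major triad (C3, E3, G3)
    naive = fold_to_chroma(spectrum)  # fold raw spectrum bins directly
    nnls = fold_to_chroma(nnls_transcribe(spectrum))
    for name, a, b in zip(PITCH_CLASSES, naive, nnls):
        print(f"{name:2s} naive={a:.2f} nnls={b:.2f}")
```

For this triad, folding the raw spectrum leaks harmonic energy into D and B, while the NNLS activations fold back to just C, E and G, a tiny-scale version of the "difficult chords" argument.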

Matthias is an enthusiastic presenter who did not hesitate to jump onto the piano to demonstrate ‘difficult chords’. Very nice presentation.

What’s Hot? Estimating Country Specific Artist Popularity

I am at ISMIR this week, blogging sessions and papers that I find interesting.

What’s Hot? Estimating Country Specific Artist Popularity

Markus Schedl, Tim Pohle, Noam Koenigstein, Peter Knees

Traditional charts are not perfect: they are not available in all countries, have biases (sales vs. plays), don’t incorporate non-sales channels like p2p, and are inhomogeneous between countries.

Approach: Look at different channels: Google, Twitter, shared folders in Gnutella, Last.fm

- Google: queries such as “led zeppelin” + “france”, with a popularity filter applied to reduce the effect of an artist’s overall popularity

- Twitter: geolocated the major cities of the world using Freebase, then used the Twitter APIs to search for the #nowplaying hashtag, along with the geolocation API, to find plays in a particular country

- P2P shared folders: gathered a million Gnutella IP addresses, retrieved the metadata for the shared folders at each address, and used IP2Location to resolve each address to a geographic location

- Last.fm: retrieve the top 400 listeners in each country, then retrieve the top-played artists for each of these listeners

Evaluation: they retrieved the most popular artists from Last.fm and used top-n rank overlap for scoring, comparing the 4 different sources. Each approach was prone to certain distortions and biases. In future work, they hope to combine these sources into a hybrid system that combines the best attributes of all the approaches.
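A simple reading of "top-n rank overlap" is the share of one source's top-n artists that also appear in the reference top-n, averaged over countries. The sketch below assumes that definition, which may differ in detail from the paper's scoring; the function names and the artist names in the usage example are placeholders, not results.

```python
def top_n_overlap(source_ranking, reference_ranking, n):
    """Fraction of the source's top-n items that also appear in the reference top-n."""
    return len(set(source_ranking[:n]) & set(reference_ranking[:n])) / n


def mean_overlap(source_by_country, reference_by_country, n):
    """Average top-n overlap over the countries both sources cover."""
    countries = source_by_country.keys() & reference_by_country.keys()
    if not countries:
        raise ValueError("no countries in common")
    return sum(top_n_overlap(source_by_country[c], reference_by_country[c], n)
               for c in countries) / len(countries)


if __name__ == "__main__":
    # placeholder artist lists for one country (not data from the paper)
    reference = ["Artist A", "Artist B", "Artist C", "Artist D"]
    twitter = ["Artist B", "Artist A", "Artist E", "Artist C"]
    for n in (1, 2, 3, 4):
        print(n, top_n_overlap(twitter, reference, n))
```

Reporting the score for several values of n shows whether a source agrees only on the few biggest names or further down the ranking.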

Finding a path through the Jukebox: The Playlist Tutorial

Posted by Paul in events, music information retrieval, playlist, research, The Echo Nest on August 6, 2010

Ben Fields and I have just put the finishing touches on our playlisting tutorial for ISMIR. Everything you could want to know about playlists. As one of the founders of a well-known music intelligence company once said: Take the fun out of music and read Paul’s slides …

Do you use Smart Playlists?

iTunes Smart Playlists allow for very flexible creation of dynamic playlists based on a whole boat-load of parameters. But I wonder how often people use this feature. Is it too complicated? Let’s find out. I’ve created a poll that will take you about 20 seconds to complete. Go to iTunes, count up how many smart playlists you have. You can tell which playlists are smart playlists because they have the little gear icon:

Don’t count the pre-fab smart playlists that come with iTunes (like 90’s music, Recently Added, My Top Rated, etc.). Once you’ve counted up your playlists, take the poll:

Help researchers understand earworms

Posted by Paul in Music, music information retrieval, research on July 29, 2010

Researchers at Goldsmiths, University of London, in a collaboration with the BBC 6 and the British Academy, are conducting research to find out about the music in people’s heads, sometimes called ‘musical imagery’. They want to know what songs are the most common, whether people like it or don’t, what triggers it, and if some people have music in their head all the time, etc.

To help researchers understand this phenomenon, take part in a questionnaire (and you could win £150 too). I took the survey, it took about 10 minutes. They do ask some rather personal questions that seem related to one’s tendency towards compulsive behavior. (yes, I do sometimes count the stairs that I’m walking up).

It looks to be an interesting research project. More details about it are here: The Earwomery.com

Some preliminary Playlist Survey results

I’m conducting a somewhat informal survey on playlisting to see how playlists created by an expert radio DJ compare to those generated by a playlisting algorithm and by a random number generator. So far, nearly 200 people have taken the survey (Thanks!). Already I’m seeing some very interesting results. Here are a few tidbits (look for a more thorough analysis once the survey is complete).

People expect human DJs to make better playlists:

The survey asks people to try to identify the origin of a playlist (human expert, algorithm or random) and also rate each playlist. We can look at the ratings people give to playlists based on what they think the playlist origin is to get an idea of people’s attitudes toward human vs. algorithm creation.

| Predicted origin | Rating |
|------------------|--------|
| Human expert     | 3.4    |
| Algorithm        | 2.7    |
| Random           | 2.1    |

We see that people expect humans to create better playlists than algorithms and that algorithms should give better playlists than random numbers. Not a surprising result.

Human DJs don’t necessarily make better playlists:

Now let’s look at how people rated playlists based on the actual origin of the playlists:

| Actual origin | Rating |
|---------------|--------|
| Human expert  | 2.5    |
| Algorithm     | 2.7    |
| Random        | 2.6    |

These results are rather surprising. Algorithmic playlists are rated highest, while human-expert-created playlists are rated lowest, even lower than those created by the random number generator. There are lots of caveats here: I haven’t done any significance tests yet to see if the differences really matter, the survey size is still rather small, and the survey doesn’t present real-world playlist listening conditions. Nevertheless, the results are intriguing.
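One straightforward way to run the missing significance test is a two-sided permutation test on the difference in mean ratings between two playlist origins. A minimal sketch, assuming ratings are stored as plain lists of numbers per origin; the function name and defaults are hypothetical, and no survey data is included here.

```python
import random


def permutation_test(group_a, group_b, num_permutations=10000, seed=0):
    """Two-sided permutation test on the difference of mean ratings.

    Returns an estimated p-value: how often randomly relabelled ratings
    produce a mean difference at least as large as the observed one.
    """
    rng = random.Random(seed)

    def mean(xs):
        return sum(xs) / len(xs)

    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(num_permutations):
        rng.shuffle(pooled)  # randomly reassign ratings to the two origins
        if abs(mean(pooled[:n_a]) - mean(pooled[n_a:])) >= observed:
            extreme += 1
    # add-one correction so the estimate is never exactly zero
    return (extreme + 1) / (num_permutations + 1)
```

With ratings grouped by actual origin, you would call it once per pair of origins (human vs. algorithm, algorithm vs. random, and so on) and correct for the multiple comparisons.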

I’d like to collect more survey data to flesh out these results. So if you haven’t already, please take the survey:

The Playlist Survey

Thanks!