Archive for category music information retrieval

Tutorial Day at ISMIR

Posted by Paul in events, fun, Music, music information retrieval on October 26, 2009

Monday was tutorial day. After months of preparation, Justin finally got to present our material. I was a bit worried that our timing on the talk would be way out of whack and we’d have to self-edit on the fly, but all of our time estimates seemed to be right on the money. Whew! The tutorial was well attended, with 80 or so registered, and there were lots of good questions at the end. All in all I was pleased at how it turned out. Here’s Justin talking about Echo Nest features:

After the tutorial a bunch of us went into town for dinner. Fifteen of us managed to find a restaurant that could accommodate us, and after lots of miming and pointing at pictures on the menu we managed to get a good meal. Lots of fun.

Using Visualizations for Music Discovery

Posted by Paul in code, data, events, fun, Music, music information retrieval, research, The Echo Nest, visualization on October 22, 2009

On Monday, Justin and I will present our magnum opus: a three-hour tutorial entitled Using Visualizations for Music Discovery. In this talk we look at the various techniques that can be used to visualize music. We include a survey of the many existing visualizations of music, and we discuss techniques and algorithms for creating new ones. My hope is that this talk will be inspirational as well as educational, spawning new music discovery visualizations. I’ve uploaded a PDF of our slide deck to SlideShare. It’s a big deck, filled with examples, but large as it is, the PDF isn’t the whole talk: the tutorial will include many demonstrations and videos of visualizations that just aren’t practical to include in a PDF. If you have the chance, be sure to check out the tutorial at ISMIR in Kobe on the 26th.

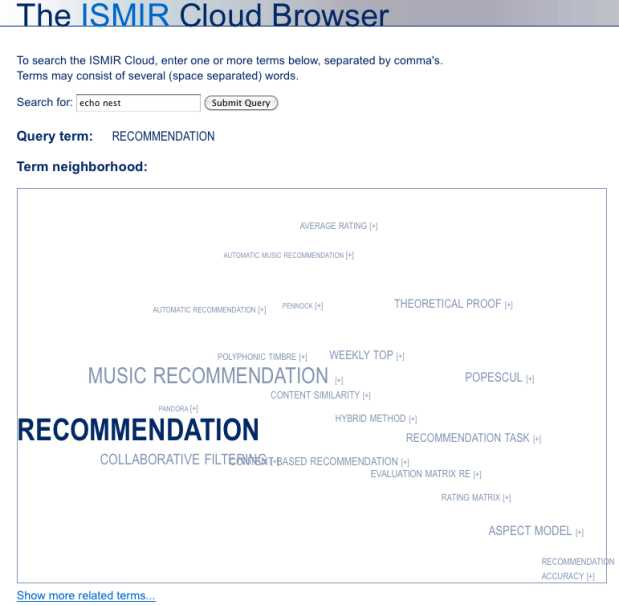

ISMIR Cloud browser

Posted by Paul in Music, music information retrieval, research on October 19, 2009

Maarten Grachten, Markus Schedl, Tim Pohle and Gerhard Widmer have created an ISMIR Cloud Browser that lets you browse 10 years of music information retrieval research via a text cloud. Check it out: The ISMIR Cloud Browser

Herd it on Facebook

Posted by Paul in data, Music, music information retrieval, research on September 25, 2009

UCSD researcher Gert Lanckriet announced today that “Herd It”, the game-with-a-purpose for collecting audio annotations, has been officially launched on Facebook. It follows in the footsteps of other GWAPs such as Major Miner, TagATune and the Listen Game.

On the music-ir mailing list Gert explains ‘Herd it’: “The scientific goal of this experiment is to investigate whether a human computation game integrated with the Facebook social network will allow the collection of high quality tags for audio clips. The quality of the tags will be tested by using them to train an automatic music tagging system (based on statistical models). Its predictive accuracy will be compared to a system trained on high quality tags collected through controlled human surveys (such as, e.g., the CAL500 data set). The central question we want to answer is whether the “game tags” can train an auto-tagging system as (or more) accurately than “survey tags” and, if yes, under what conditions (amount of tags needed, etc.). The results will be reported once enough data has been collected.”

I’ve played a few rounds of the game and enjoyed myself. I recognized all of the music that they played (it seemed to be drawn from top 100 artists like Nirvana, Led Zeppelin, Mariah Carey and John Lennon). The timed rounds made the game move quickly. I did miss the feeling of close collaboration that I get from some other GWAPs, where I have to guess how my partner would describe a song. Despite this, I found the games to be fun, and I could easily see spending a few hours trying to get a top score. The team at UCSD has clearly put lots of time into making the games highly interactive: animations, sound and transparent game play all add to the gaming experience. One glitch: even though I was logged into Facebook, the Herd It game didn’t seem to know who I was; it just called me ‘Herd It’. So my awesome high score is anonymous.

Here are some screenshots from the game. For this round, I had to choose the most prominent sound (this was for the song ‘Heart of Gold’). I chose slide guitar, but most people chose acoustic guitar (what do they know!).

For this round, I had to choose the genre for a song. Easy enough.

For this round I had to position a song on a Thayer mood model scale.

Here’s the game kickoff screen. As you can see, I’m “Herd It” and not Paul.

I hope the Herd It game attracts lots of attention. It could be a great source of music metadata.

Divisible by Zero

Posted by Paul in Music, music information retrieval, research on September 9, 2009

Be sure to check out the new MIR blog Divisible by Zero by Queen Mary PhD student Rebecca Stewart. Becky is particularly interested in using spatial audio techniques to enhance music discovery. I find her first post, You want the third song on the left, quite interesting. She’s using a spatially enabled database to perform fast lookups of similar tracks that have been positioned in a 2D space using LDMS. This is a really neat idea: it turns finding similar tracks, a problem that can be particularly vexing, into a simple SQL query.

I hope Becky will continue to write about this project in her blog. I’m particularly interested in learning how well the spatial database scales to industrial-sized music collections, what her query times are, and how the LDMS/GIS similarity compares to results from a brute-force nearest-neighbor distance calculation on the feature vectors. (via Ben Fields)
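Becky’s post is the place to go for her actual schema; what follows is only a rough sketch of the general idea, under my own assumptions. The track positions are hypothetical 2D coordinates, and Python’s built-in `sqlite3` with an ordinary index stands in for a real spatial (GIS) database, which would use a true spatial index such as an R-tree. The sketch runs a radius lookup as a bounding-box SQL query and checks it against the brute-force distance calculation I mention above.

```python
import math
import sqlite3

# Hypothetical 2D positions for a few tracks (in Becky's system the
# positions come from an embedding of the audio features).
TRACKS = {
    "track_a": (0.10, 0.20),
    "track_b": (0.15, 0.22),
    "track_c": (0.90, 0.80),
    "track_d": (0.12, 0.18),
    "track_e": (0.50, 0.50),
}


def build_db(tracks):
    """Load track positions into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE tracks (id TEXT PRIMARY KEY, x REAL, y REAL)")
    # An ordinary B-tree index; a GIS database would use a spatial index.
    conn.execute("CREATE INDEX idx_xy ON tracks (x, y)")
    conn.executemany(
        "INSERT INTO tracks VALUES (?, ?, ?)",
        [(tid, x, y) for tid, (x, y) in tracks.items()],
    )
    return conn


def neighbors_sql(conn, x, y, radius):
    """Bounding-box prefilter in SQL, then exact distance check in Python."""
    rows = conn.execute(
        "SELECT id, x, y FROM tracks "
        "WHERE x BETWEEN ? AND ? AND y BETWEEN ? AND ?",
        (x - radius, x + radius, y - radius, y + radius),
    ).fetchall()
    hits = [(math.hypot(rx - x, ry - y), tid) for tid, rx, ry in rows]
    return [tid for dist, tid in sorted(hits) if dist <= radius]


def neighbors_brute_force(tracks, x, y, radius):
    """Compute the distance to every track: the baseline to compare against."""
    hits = [(math.hypot(tx - x, ty - y), tid) for tid, (tx, ty) in tracks.items()]
    return [tid for dist, tid in sorted(hits) if dist <= radius]


if __name__ == "__main__":
    conn = build_db(TRACKS)
    print(neighbors_sql(conn, 0.10, 0.20, 0.1))
    print(neighbors_brute_force(TRACKS, 0.10, 0.20, 0.1))
```

Both functions return the tracks within the radius, nearest first. The interesting questions for a real collection are exactly the ones above: how the indexed query’s time grows compared with the brute-force scan as the number of tracks climbs into the millions.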

Finding music with pictures

Posted by Paul in Music, music information retrieval, research, visualization on September 7, 2009

As part of the collateral information for our upcoming ISMIR tutorial (Using Visualizations for Music Discovery), Justin and I have created a new blog: Visualizing Music. This blog, inspired by our favorite InfoVis blogs like Information Aesthetics and Visual Complexity, will be a place where we catalog and critique visualizations that help people explore and understand music.

There are hundreds of music visualizations out there – so it may take us a little while to get them all cataloged, but we’ve already added some of our favorites. Help us fill out the whole catalog by sending us links to interesting music visualizations.

Check out the new blog: Visualizing Music

Musically Intelligent Machines

Posted by Paul in Music, music information retrieval, startup on September 2, 2009

Musically Intelligent Machines is a spin-off of the song autotagging work done by Michael Mandel. Michael has chosen a rather awesome name for his company, combining ‘music’ and ‘machine’ into a catchy title. Now why didn’t I think of that ;).

You can see Mike’s demo in this livestream:

Click on on-demand / September 1st / Musically Intelligent Machines.

Social Tags and Music Information Retrieval

Posted by Paul in Music, music information retrieval, research, tags on May 11, 2009

It is paper writing season with the ISMIR submission deadline just four days away. In the last few days a couple of researchers have asked me for a copy of the article I wrote for the Journal of New Music Research on social tags. My copyright agreement with the JNMR lets me post a pre-press version of the article – so here’s a version that is close to what appeared in the journal.

Social Tagging and Music Information Retrieval

Abstract

Social tags are free text labels that are applied to items such as artists, albums and songs. Captured in these tags is a great deal of information that is highly relevant to Music Information Retrieval (MIR) researchers including information about genre, mood, instrumentation, and quality. Unfortunately there is also a great deal of irrelevant information and noise in the tags.

Imperfect as they may be, social tags are a source of human-generated contextual knowledge about music that may become an essential part of the solution to many MIR problems. In this article, we describe the state of the art in commercial and research social tagging systems for music. We describe how tags are collected and used in current systems. We explore some of the issues that are encountered when using tags, and we suggest possible areas of exploration for future research.

Here’s the reference:

Paul Lamere. Social tagging and music information retrieval. Journal of New Music Research, 37(2):101–114.

TagatuneJam

Posted by Paul in data, Music, music information retrieval, research on April 28, 2009

TagATune, the music-oriented ‘game with a purpose’, is now serving music from Jamendo.com. TagATune has already been an excellent source of high-quality music labels; now they will be getting gamers to apply labels to popular music, and a new dataset will be forthcoming. Adding to the excitement of this release is the announcement of a contest: the highest-scoring Jammer will be formally acknowledged as a contributor to this dataset and will receive a special mystery prize. (I think it might be jam.) Sweet.

Magnatagatune – a new research data set for MIR

Posted by Paul in data, Music, music information retrieval, research, tags, The Echo Nest on April 1, 2009

Edith Law (of TagATune fame) and Olivier Gillet have put together one of the most complete MIR research datasets since uspop2002. The dataset (with the best name ever) is called Magnatagatune. It contains:

- Human annotations collected by Edith Law’s TagATune game.

- The corresponding sound clips from magnatune.com, encoded as 16 kHz, 32 kbps mono MP3s (generously contributed by John Buckman, the founder of Magnatune, every MIR researcher’s favorite label).

- A detailed analysis from The Echo Nest of each track’s structure and musical content, including rhythm, pitch and timbre.

- All the source code for generating the dataset distribution

Some detailed stats of the data calculated by Olivier are:

- clips: 25863

- source mp3: 5405

- albums: 446

- artists: 230

- unique tags: 188

- similarity triples: 533

- votes for the similarity judgments: 7650

This dataset is one stop shopping for all sorts of MIR related tasks including:

- Artist Identification

- Genre classification

- Mood Classification

- Instrument identification

- Music Similarity

- Autotagging

- Automatic playlist generation

As part of the dataset, The Echo Nest is providing a detailed analysis of each of the 25,000+ clips. This analysis includes a description of all musical events, structures and global attributes, such as key, loudness, time signature, tempo, beats, sections, and harmony. This is the same information that is provided by our track-level API, described at developer.echonest.com.

Note that Olivier and Edith mention me by name in their release announcement, but really I was just the go-between. Tristan (one of the co-founders of The Echo Nest) did the analysis, and The Echo Nest compute infrastructure got it done fast (our analysis of the 25,000 tracks took much less time than it did to download the audio).

I expect this to become one of the datasets most often cited by MIR researchers.

Here’s the official announcement:

Edith Law, John Buckman, Paul Lamere and myself are proud to announce the release of the Magnatagatune dataset.

This dataset consists of ~25,000 29-second music clips, each of them annotated with a combination of 188 tags. The annotations have been collected through Edith’s “TagATune” game (http://www.gwap.com/gwap/gamesPreview/tagatune/). The clips are excerpts of songs published by Magnatune.com, and John from Magnatune has approved the release of the audio clips for research purposes. For those of you who are not happy with the quality of the clips (mono, 16 kHz, 32 kbps), we also provide scripts to fetch the MP3s and cut them to recreate the collection. Wait… there’s more! Paul Lamere from The Echo Nest has provided, for each of these songs, an “analysis” XML file containing timbre, rhythm and harmonic-content related features.

The dataset also contains a smaller set of annotations for music similarity: given a triple of songs (A, B, C), how many players have flagged the song A, B or C as most different from the others.

Everything is distributed freely under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 license and is available here: http://tagatune.org/Datasets.html

This dataset is ever-growing: as more users play TagATune, more annotations will be collected, and new snapshots of the data will be released in the future. A new version of TagATune will indeed be up by next Monday (April 6). To make this dataset grow even faster, please go to http://www.gwap.com/gwap/gamesPreview/tagatune/ next Monday and start playing.

Enjoy!

The Magnatagatune team
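The similarity annotations in the announcement are counts of how many players flagged each song in a triple (A, B, C) as the most different. Here is a minimal sketch of one way to turn those counts into pairwise similarity evidence. The input format is a hypothetical dict mapping clip IDs to odd-one-out votes (the actual file layout in the distribution isn’t described here), and the scoring rule, crediting each pair with the votes cast against the third clip, is my own illustration rather than a method from the Magnatagatune team.

```python
from collections import defaultdict
from itertools import combinations


def pair_scores(triples):
    """Aggregate odd-one-out votes into pairwise similarity scores.

    triples: list of dicts, each mapping the three clip IDs of a triple
    (hypothetical IDs) to the number of players who flagged that clip as
    the most different. A vote against the third clip counts as evidence
    that the remaining two clips are similar.
    """
    scores = defaultdict(int)
    for votes in triples:
        if len(votes) != 3:
            raise ValueError("each triple must contain exactly three clips")
        clips = sorted(votes)
        for a, b in combinations(clips, 2):
            (third,) = set(clips) - {a, b}
            scores[(a, b)] += votes[third]
    return dict(scores)


if __name__ == "__main__":
    triples = [
        {"A": 1, "B": 0, "C": 5},  # C is clearly the odd one out
        {"A": 0, "B": 3, "D": 2},
    ]
    for pair, score in sorted(pair_scores(triples).items()):
        print(pair, score)
```

With these made-up votes, the pair (A, B) gets the highest score, since players twice singled out a clip other than A or B.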