Archive for category ismir

My worst ISMIR moment

I’ve been invited to be a session chair for the Oral Session on Tags that runs this morning. This brings back memories of my worst ISMIR moment which I wrote about in my old Duke Listens blog:

It was in my role as session chair that I had my worst ISMIR moment. I was doing fine making sure that the speakers ended on time (even when we had to swap speakers around when one chap couldn’t get his slides to appear on the projector). However, there was one speaker who gave a talk about a topic that I just didn’t understand. I didn’t grasp the goal of the research, the methods, the conclusions or the applicability of the research. All the way through the talk I was racking my brains trying to eke out an appropriate, salient question about the research: a question that wouldn’t mark me as the idiot that I clearly was. By the end of the talk I was regretting my decision to accept the position as session chair. I could only pray that someone else would ask the required question and save me from humiliating myself and insulting the speaker. The speaker concluded the talk, I stood up and thanked the speaker, offered a silent prayer to the God of Curiosity and then asked the assembled for questions. Silence. Long silence. Really long silence. My worst nightmare. I was going to ask a question, but by this point I couldn’t even remember what the talk was about. It was going to be a bad question, something like “Why do you find this topic interesting?” or “Isn’t Victoria nice?”. Just microseconds before I uttered my feeble query, a hand went up and I was saved. Someone asked a question. I don’t remember the question, I just remember the relief. My job as session chair was complete: every speaker had their question.

This year, I think I’ll be a bit more comfortable as a session chair. I know the topic of the session pretty well, and I know most of the speakers too, but still, please don’t be offended if I ask you “How do you like Kobe?”

ISMIR Day 1 Posters

Lots of very interesting posters; you can see some of my favorites in this Flickr slide show.

ISMIR Oral Session 3 – Musical Instrument Recognition and Multipitch Detection

Session Chair: Juan Pablo Bello

SCALABILITY, GENERALITY AND TEMPORAL ASPECTS IN AUTOMATIC RECOGNITION OF PREDOMINANT MUSICAL INSTRUMENTS IN POLYPHONIC MUSIC

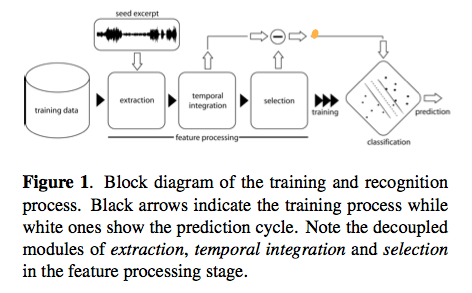

By Ferdinand Fuhrmann, Martín Haro, Perfecto Herrera

- Automatic recognition of music instruments

- Polyphonic music

- Predominant instruments

Research Questions

- scale existing methods to highly polyphonic music

- generalize with respect to the instruments used

- model temporal information for recognition

Goals:

- Unified framework

- Pitched and unpitched …

- (more goals, but I couldn’t keep up)

Neat presentation of the survey of related work, plotted along a simple vs. complex axis.

Ferdinand was going too fast for me (or perhaps jetlag was kicking in), so I include the conclusion from his paper here to summarize the work:

Conclusions: In this paper we addressed three open gaps in automatic recognition of instruments from polyphonic audio. First we showed that by providing extensive, well designed datasets, statistical models are scalable to commercially available polyphonic music. Second, to account for instrument generality, we presented a consistent methodology for the recognition of 11 pitched and 3 percussive instruments in the main western genres classical, jazz and pop/rock. Finally, we examined the importance and modeling accuracy of temporal characteristics in combination with statistical models. Thereby we showed that modelling the temporal behaviour of raw audio features improves recognition performance, even though a detailed modelling is not possible. Results showed an average classification accuracy of 63% and 78% for the pitched and percussive recognition task, respectively. Although no complete system was presented, the developed algorithms could be easily incorporated into a robust recognition tool, able to index unseen data or label query songs according to the instrumentation.

MUSICAL INSTRUMENT RECOGNITION IN POLYPHONIC AUDIO USING SOURCE-FILTER MODEL FOR SOUND SEPARATION

by Toni Heittola, Anssi Klapuri and Tuomas Virtanen

Quick summary: A novel approach to musical instrument recognition in polyphonic audio signals by using a source-filter model and an augmented non-negative matrix factorization algorithm for sound separation. The mixture signal is decomposed into a sum of spectral bases modeled as a product of excitations and filters. The excitations are restricted to harmonic spectra and their fundamental frequencies are estimated in advance using a multipitch estimator, whereas the filters are restricted to have smooth frequency responses by modeling them as a sum of elementary functions on the Mel-frequency scale. The pitch and timbre information are used in organizing individual notes into sound sources. The method is evaluated with polyphonic signals, randomly generated from 19 instrument classes.
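To make the NMF idea concrete, here is a minimal sketch of *plain* NMF using the standard Lee and Seung multiplicative updates for the squared Euclidean cost. It is not the authors' augmented source-filter model: in their method each spectral basis is further constrained to be a harmonic excitation times a smooth Mel-scale filter, which this sketch does not do. The toy spectrogram, iteration count and other parameter values are assumptions for illustration only.

```python
import numpy as np

def nmf(V, n_components, n_iter=200, eps=1e-9, seed=0):
    """Factor a non-negative magnitude spectrogram V (freq x time) as W @ H.

    Plain NMF with multiplicative updates (squared Euclidean cost).
    NOT the paper's source-filter model: there each column of W would be
    a product of a harmonic excitation and a smooth Mel-scale filter.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = V.shape
    W = rng.random((n_freq, n_components)) + eps   # spectral bases
    H = rng.random((n_components, n_frames)) + eps  # time-varying gains
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Hypothetical toy mixture: two "notes" with fixed spectra and varying gains.
rng = np.random.default_rng(1)
true_W = rng.random((64, 2))
true_H = rng.random((2, 100))
V = true_W @ true_H
W, H = nmf(V, n_components=2, n_iter=500)
err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {err:.4f}")
```

Each column of `W` acts as one spectral basis and each row of `H` as its gain over time; the problem mentioned in the talk is that, without extra structure, every pitch of every instrument needs its own free column in `W`.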

The approach separates the mixture into its various sources, which is typically done with non-negative matrix factorization (NMF). Problem: in plain NMF each pitch needs its own basis function, which leads to a large number of functions. The system overview:

The examples are very interesting: www.cs.tut.fi/~heittolt/ismir09

HARMONICALLY INFORMED MULTI-PITCH TRACKING

by Zhiyao Duan, Jinyu Han and Bryan Pardo

A novel system for multipitch tracking, i.e., estimating the pitch trajectory of each monophonic source in a mixture of harmonic sounds. Current systems are not robust: since they rely on local time-frequency information, they tend to generate only short pitch trajectories. This system has two stages: multi-pitch estimation and pitch trajectory formation. In the first stage, they model spectral peaks and non-peak regions to estimate the pitches and the polyphony in each single frame. In the second stage, pitch trajectories are formed by clustering under two constraints: global timbre consistency and local time-frequency locality.

Here’s the system overview:

ISMIR Poster Madness part 2

Poster madness! Version 2 – even faster this time. I can’t keep up!

- Singing Pitch Extraction – Taiwan

- Usability Evaluation of Visualization interfaces for content-based music retrieval – looks really cool! 3D

- Music Paste – concatenating music clips based on chroma and rhythm features

- Musical bass-line pattern clustering and its application to audio genre classification

- Detecting cover sets – looks nice – visualization – MTG

- Using Musical Structure to enhance automatic chord transcription –

- Visualizing Musical Structure from performance gesture – motion

- From low-level to song-level percussion descriptors of polyphonic music

- MTG – Query by symbolic example – use a DNA/Blast type approach

- Sten – web-based approach to determine the origin of an artist – visualizations

- XML-format for any kind of time related symbolic data

- Erik Schmidt – FPGA feature extraction. MIR for devices

- Accelerating QBH – another hardware solution – 160 times faster

- Learning to control a reverberator using subjective perceptual descriptors – more boomy

- Interactive GTTM Analyzer –

- Estimating the error distribution of a tap sequence without ground truth – Roger Dannenberg

- Cory McKay – ACE XML – Standard formats for features, metadata, labels and class ontologies

- An efficient multi-resolution spectral transform for music analysis

- Evaluation of multiple F0 estimation and tracking systems

BTW – Oscar informs me that this is not the first ever poster madness – there was one in Barcelona.

ISMIR Oral Session 2 – Tempo and Rhythm

Posted by Paul in ismir, Music, music information retrieval, research on October 27, 2009

Session chair: Anssi Klapuri

IMPROVING RHYTHMIC SIMILARITY COMPUTATION BY BEAT HISTOGRAM TRANSFORMATIONS

By Matthias Gruhne, Christian Dittmar, and Daniel Gaertner

Matthias described their approach to computing beat histograms, similar to those used by Burred, Gouyon, Foote and Tzanetakis. Problem: a beat histogram cannot be used directly as a feature because of its tempo dependency. Because of this dependency, similar rhythms played at different tempi appear far apart in a Euclidean space. Challenge: reduce the tempo dependence.

Solution: a logarithmic transformation. See the figure:

This leads to a histogram with a tempo independent part which can be separated from the tempo dependent part. This tempo independent part can then be used in a Euclidean space to find similar rhythms.
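Why a logarithmic axis helps: a tempo change scales every lag by the same factor c, and on a log-lag axis that scaling becomes a plain shift by log(c). The sketch below assumes a beat histogram indexed by lag in seconds and a hypothetical two-peak rhythm; it resamples the same pattern at 120 and 150 BPM onto a log axis and recovers the shift by cross-correlation. It only illustrates the scaling-to-shift property and does not reproduce how the paper then separates the tempo-independent part.

```python
import numpy as np

def log_lag_histogram(hist, lags, n_bins=256):
    """Resample a beat histogram from a linear lag axis onto a log-lag axis."""
    log_axis = np.linspace(np.log(lags[0]), np.log(lags[-1]), n_bins)
    return log_axis, np.interp(log_axis, np.log(lags), hist)

def best_shift(a, b):
    """Integer shift s (in bins) such that b[n] best matches a[n - s]."""
    corr = np.correlate(b, a, mode="full")
    return int(np.argmax(corr)) - (len(a) - 1)

def toy_histogram(lags, beat_period, width=0.05):
    """Hypothetical rhythm: peaks at the beat period and at twice the beat period."""
    return sum(np.exp(-np.log(lags / p) ** 2 / (2 * width ** 2))
               for p in (beat_period, 2 * beat_period))

lags = np.linspace(0.1, 4.0, 2000)                                  # lag axis in seconds
log_axis, slow = log_lag_histogram(toy_histogram(lags, 0.5), lags)  # 120 BPM
_, fast = log_lag_histogram(toy_histogram(lags, 0.4), lags)         # 150 BPM
step = log_axis[1] - log_axis[0]
shift = best_shift(slow, fast)
# The recovered shift and log(0.4 / 0.5) agree to within one bin.
print(shift * step, np.log(0.4 / 0.5))
```

On the linear axis the two histograms have peaks at different lags; on the log axis they have the same shape, just displaced, which is what makes a tempo-independent description possible.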

Evaluation: results went from 20% to 70%, and from 66% to 69% (this needs a significance test, I think).
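One common significance test when two systems are scored on the same items is McNemar's test, which only looks at the items where the two systems disagree. Below is a minimal exact two-sided version using only the standard library, assuming per-item correct/incorrect outcomes are available for both systems; the counts in the usage line are hypothetical, since the talk did not report per-item results.

```python
from math import comb

def mcnemar_exact(b, c):
    """Two-sided exact McNemar test for two systems scored on the same items.

    b: items system A got right and system B got wrong
    c: items system B got right and system A got wrong
    Under H0 (no difference), b follows Binomial(b + c, 0.5); the p-value
    doubles the probability of the smaller tail (capped at 1).
    """
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)

# Hypothetical discordant counts; the talk did not report per-item outcomes.
print(mcnemar_exact(12, 24))
```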

USING SOURCE SEPARATION TO IMPROVE TEMPO DETECTION

By Parag Chordia and Alex Rae – presented by George Tzanetakis

Well, it is unusual that George is presenting Parag and Alex’s work. Anssi suggests that we use the wisdom of the crowd to answer the questions.

Motivation: Tempo detection is often unreliable for complex music.

Humans often resolve rhythms by entraining to a rhythmical regular part.

Idea: Separate music into components, some components may be more reliable.

Method:

- Source separation

- track tempo for each source

- decide the global tempo by either:

  - Pick the one with the most regular structure

  - Look for a common tempo across all sources/layers

Here’s the system:

PLCA (Probabilistic Latent Component Analysis) is a source separation method. Issues: the number of components needs to be specified in advance, so separate sources could be merged, or one source could be split into multiple layers.

Autocorrelation is used for tempo detection. Regular sources will have higher peaks.
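The "pick the most regular source" option can be sketched with autocorrelation: the strongest autocorrelation peak within a plausible tempo range gives the tempo, and its height serves as a regularity score. This is a minimal illustration that assumes each separated source has already been reduced to an onset-strength envelope at a known frame rate; the BPM range, the normalization and the toy envelopes are assumptions, not details from the talk.

```python
import numpy as np

def autocorr_tempo(onset_env, frame_rate, min_bpm=40.0, max_bpm=240.0):
    """Tempo from an onset-strength envelope via autocorrelation.

    Returns (bpm, peak). `peak` is the normalized autocorrelation at the chosen
    lag, used here as a rough regularity score: a steady source peaks higher.
    """
    x = np.asarray(onset_env, dtype=float)
    x = x - x.mean()
    ac = np.correlate(x, x, mode="full")[len(x) - 1:]  # lags 0 .. len(x)-1
    ac = ac / (ac[0] + 1e-12)                          # lag 0 -> 1.0
    min_lag = int(round(60.0 * frame_rate / max_bpm))
    max_lag = int(round(60.0 * frame_rate / min_bpm))
    lag = min_lag + int(np.argmax(ac[min_lag:max_lag + 1]))
    return 60.0 * frame_rate / lag, float(ac[lag])

def pick_most_regular(source_envs, frame_rate):
    """Global tempo = tempo of the source whose autocorrelation peak is highest."""
    estimates = [autocorr_tempo(env, frame_rate) for env in source_envs]
    return max(estimates, key=lambda e: e[1])[0]

frame_rate = 100.0                              # envelope frames per second (assumed)
steady = np.zeros(1000)
steady[::50] = 1.0                              # hypothetical: a hit every 0.5 s (120 BPM)
noisy = np.random.default_rng(0).random(1000)   # hypothetical source with no stable pulse
print(autocorr_tempo(steady, frame_rate))
print(pick_most_regular([noisy, steady], frame_rate))
```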

Another approach is a machine learning one, framed as a supervised learning problem.

Global tempo using clustering: merge all tempo candidates into a single vector, counting candidates within a 5% tolerance (and at 0.5x and 2x) together, to give a peak histogram showing the confidence for each tempo.
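One reading of the clustering step, sketched under assumptions: pool the tempo candidates from all layers and score each one by how many candidates agree with it within 5%, either directly or at half or double tempo. The scoring rule, the tie-breaking (the first candidate with the top score wins) and the example tempi are hypothetical; the paper may weight candidates differently.

```python
def tempo_confidence(candidates, tolerance=0.05):
    """Peak histogram over pooled tempo candidates (BPM).

    Each candidate t is scored by how many candidates t2 agree with it,
    where t2 agrees if t2, 0.5*t2 or 2*t2 is within `tolerance` (relative) of t.
    """
    scores = {}
    for t in candidates:
        scores[t] = sum(
            1 for t2 in candidates
            if any(abs(f * t2 - t) <= tolerance * t for f in (1.0, 0.5, 2.0))
        )
    return scores

def global_tempo(candidates, tolerance=0.05):
    """Candidate with the highest support; ties go to the earliest candidate."""
    scores = tempo_confidence(candidates, tolerance)
    return max(scores, key=scores.get)

# Hypothetical per-layer tempo candidates (BPM) from five separated layers.
layer_tempi = [120.0, 121.0, 60.0, 240.0, 97.0]
print(tempo_confidence(layer_tempi))
print(global_tempo(layer_tempi))
```

Counting half- and double-tempo agreement lets layers that lock onto the bar or the tatum still vote for the beat-level tempo.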

Evaluation

- IDM09 – http://paragchordia.com/data.html

- mirex06 (20 mixed genre excerpts)

Accuracy: MIREX06: 0.50; this system: 0.60

Question: How many sources were specified to PLCA? Answer: 8. George thinks it doesn’t matter too much.

Question: Other papers show that similar techniques do not show improvement for larger datasets.

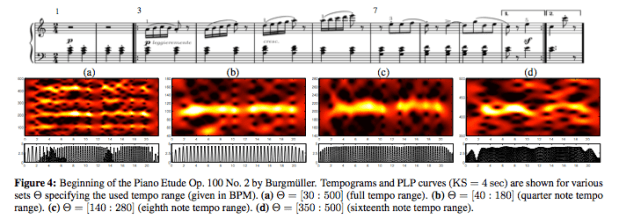

A MID-LEVEL REPRESENTATION FOR CAPTURING DOMINANT TEMPO AND PULSE INFORMATION IN MUSIC RECORDINGS

By Peter Grosche and Meinard Müller

Example – a waltz – where the downbeat is not too strong compared to beats 2 & 3. It is hard to find onsets in the energy curves. Instead, use:

- Create a spectrogram

- Log compression of the spectrogram

- Derivative

- Accumulation

This yields a novelty curve, which can be used for onset detection. However, downbeats are missing. How can this be beat tracked? Compute a tempogram, i.e., a spectrogram of the novelty curve. For each time position this yields a periodicity kernel. All kernels are combined into a single curve, which is then rectified, giving a predominant local pulse (PLP) curve. The PLP curve is dynamic but can be constrained to track at the bar, beat or tatum level.
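The novelty-curve steps (spectrogram, log compression, derivative, accumulation) and the tempogram can be sketched in a few lines of NumPy. This is a simplified illustration, not the authors' implementation: the FFT size, hop, compression constant `gamma` and the 5-second tempogram window are assumed values, and the final PLP step (combining the per-window kernels and rectifying) is omitted.

```python
import numpy as np

def novelty_curve(x, sr, n_fft=1024, hop=256, gamma=100.0):
    """Spectrogram -> log compression -> derivative -> accumulation."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1))     # magnitude spectrogram (frames x bins)
    log_mag = np.log1p(gamma * mag)               # logarithmic compression
    diff = np.diff(log_mag, axis=0)               # discrete temporal derivative
    novelty = np.maximum(diff, 0.0).sum(axis=1)   # keep increases, accumulate over bins
    return novelty, sr / hop                      # curve and its frame rate (Hz)

def tempogram(novelty, feature_rate, bpms, win_sec=5.0, hop=10):
    """Fourier tempogram: windowed DFT of the novelty curve at each candidate BPM."""
    win_len = int(win_sec * feature_rate)
    window = np.hanning(win_len)
    t = np.arange(win_len) / feature_rate
    starts = range(0, len(novelty) - win_len + 1, hop)
    T = np.empty((len(bpms), len(starts)))
    for j, s in enumerate(starts):
        seg = novelty[s:s + win_len]
        seg = (seg - seg.mean()) * window         # remove DC, then taper
        for i, bpm in enumerate(bpms):
            T[i, j] = np.abs(np.sum(seg * np.exp(-2j * np.pi * (bpm / 60.0) * t)))
    return T

# Hypothetical test signal: one click every 0.5 s (120 BPM) for 12 s.
sr = 8000
x = np.zeros(12 * sr)
x[::sr // 2] = 1.0
novelty, feature_rate = novelty_curve(x, sr)
bpms = np.arange(60, 241)
T = tempogram(novelty, feature_rate, bpms)
print(bpms[np.argmax(T.mean(axis=1))])            # dominant tempo estimate
```

The 5-second window is in the same range as the 4 to 6 second kernels mentioned in the Q&A; a PLP step would pick the strongest tempo per window, build a windowed sinusoid kernel for it and overlap the kernels into one curve.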

Issues: PLP likes to fill in the gaps – which is not always appropriate. Trouble with the Borodin String Quartet No. 2. But when tempo is tightly constrained, it works much better.

This was a very good talk. Meinard presented lots of examples including examples where the system did not work well.

Question: Realtime? Currently the kernels span 4 to 6 seconds, so with a latency of 4 to 6 seconds it should work in an online scenario.

Question: How different is this from DTW on the tempogram? It is not connected to DTW in any way.

Question: How important is the hop size? Not that important, since a sliding window is used.

ISMIR Oral Session 1 – Knowledge on the Web

Oral Session 1A – Knowledge on the Web

Oral Session 1A – The first Oral Session of ISMIR 2009, chaired by Malcolm Slaney of Yahoo! Research

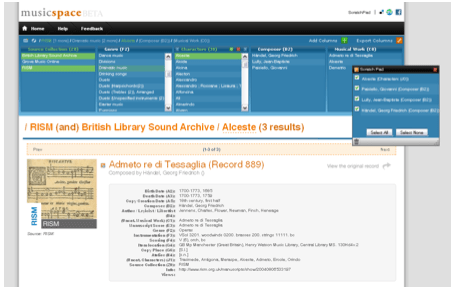

Integrating musicology’s heterogeneous data sources for better exploration

by David Bretherton, Daniel Alexander Smith, mc schraefel, Richard Polfreman, Mark Everist, Jeanice Brooks, and Joe Lambert

Project Link: http://www.mspace.fm/projects/musicspace

Musicologists consult many data sources; musicspace tries to integrate these resources. Here’s a screenshot of what they are trying to build.

Working with many public and private organizations (from the British Library to Naxos).

Motivation: Many musicologists’ queries are simply intractable because they need to consult several resources, they are multipart queries (requiring *pen and paper*), and the granularity of the metadata or search options is insufficient. Solution: integrate sources, provide an optimally interactive UI, increase granularity.

Difficulties: many formats for data sources.

Strategies: increase the granularity of data by making annotations explicit. Generate metadata by falling back on human intelligence, inspired by Amazon Mechanical Turk, to clean and extract data.

User Interface – David demonstrated the faceted browser satisfying a complex query (find all the composers for whom Monteverdi’s scribe was also the scribe). I liked the dragging columns.

There are issues with linked databases from multiple sources: when one source goes away (for instance, for licensing reasons), the links break.

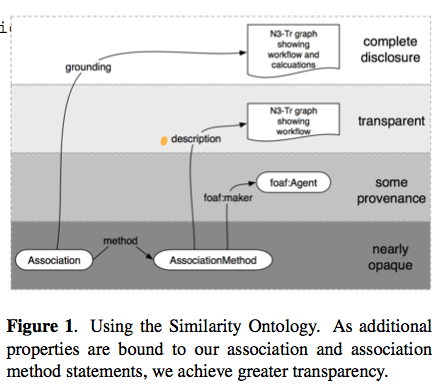

An Ecosystem for transparent music similarity in an open world

By Kurt Jacobson, Yves Raimond, Mark Sandler

Link http://classical.catfishsmooth.net/slides/

Kurt was really fast and it was hard to take coherent notes, so here are my fragmented notes.

Assumption: you can use music similarity for recommendation. Music similarity:

Tversky suggested that you can’t really put similarity into a Euclidean space: it is not symmetric. He suggests a contrast model based on comparing features, analogous to ‘bag of features’.
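Tversky's contrast model scores the similarity of a to b as S(a, b) = θ·f(A ∩ B) − α·f(A − B) − β·f(B − A), where A and B are the feature sets and f measures their salience. A minimal sketch, taking f to be plain set size and using hypothetical weights and artist features, shows the asymmetry mentioned in the talk.

```python
def tversky_similarity(a, b, theta=1.0, alpha=0.8, beta=0.2):
    """Tversky's contrast model with the salience f taken to be set size.

    S(a, b) = theta * f(A & B) - alpha * f(A - B) - beta * f(B - A)
    With alpha != beta the measure is asymmetric: S(a, b) != S(b, a) in general.
    """
    A, B = set(a), set(b)
    return theta * len(A & B) - alpha * len(A - B) - beta * len(B - A)

# Hypothetical feature sets ("bags of features") for two artists.
original = {"guitar", "vocals", "rock", "british", "1960s", "psychedelic"}
imitator = {"guitar", "vocals", "rock"}
print(tversky_similarity(imitator, original))   # imitator compared to original
print(tversky_similarity(original, imitator))   # original compared to imitator
```

With alpha > beta, the imitator comes out far more similar to the original than the original is to the imitator, which is exactly the kind of judgement a symmetric Euclidean distance cannot express.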

What does music similarity really mean? We can’t say! It means different things in different contexts, and context is important. So make similarity an RDF concept. A hierarchy of similarity was too limiting; instead, similarity now has properties. The similarity statement is reified, so it can record how things are similar, how much, etc.

Association method with levels of transparency as follows:

Example implementation: http://classical.catfishsmooth.net/about/

Kurt demoed the system showing how he can create a hybrid query for timbral, key and composer influence similarity. It was a nice demo.

Future work: digitally signed similarity statements – neat idea.

Kurt challenges the Last.fms, the BMATs, the Echo Nests and anyone else who provides similarity information: why not publish MuSim?

Interfaces for document representation in digital music libraries

By Andrew Hankinson, Laurent Pugin and Ichiro Fujinaga

Goal: Designing user interfaces for displaying music scores

Part of the RISM project – music digitization and metadata

Bring together information from many resources.

Five Considerations

- Preservation of Document integrity – image gallery approach doesn’t give you a sense of the complete document

- Simultaneous viewing of parts – for example, the tenor and bass parts may be separated in the document, yet should be viewable together without window juggling.

- Provide multiple page resolutions – zooming is important

- Optimized page loading

- Image + Metadata should be presented simultaneously

Current Work

Implemented a prototype viewer that takes the 5 considerations into account. Andy gave a demo of the prototype; it seems to be quite an effective tool for displaying and browsing music scores.

A good talk, well organized and presented, with a nice demo.

Oral Session 1B – Performance Recognition

Oral Session 1B, chaired by Simon Dixon (Queen Mary)

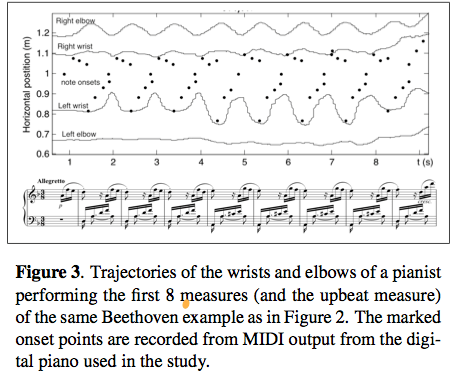

Body movement in music information retrieval

by Rolf Inge Godøy and Alexander Refsum Jensenius

Abstract: We can see many and strong links between music and human body movement in musical performance, in dance, and in the variety of movements that people make in listening situations. There is evidence that sensations of human body movement are integral to music as such, and that sensations of movement are efficient carriers of information about style, genre, expression, and emotions. The challenge now in MIR is to develop means for the extraction and representation of movement-inducing cues from musical sound, as well as to develop possibilities for using body movement as input to search and navigation interfaces in MIR.

There are links between body movement and music everywhere: performers, listeners, etc. Movement is integral to the music experience. They suggest that studying music-related body movement can help our understanding of music.

One example: http://www.youtube.com/watch?v=MxCuXGCR8TE

Relate music sound to subject images.

Looking at performances of pianists: they create a motiongram and use motion capture.

Listeners have lots of knowledge about sound producing motions/actions. These motions are integral to music perception. There’s a constant mental model of the sound/action. Example: Air guitar vs. Real Guitar

This was a thought-provoking talk. I wonder how the music-action model works when the music controller is no longer acoustic: do we model music motions when using a laptop as our instrument?

Who is who in the end? Recognizing pianists by their final ritardandi

by Maarten Grachten and Gerhard Widmer

Some examples: Harasiewicz vs. Ashkenazy. The way pianists slow down at the end differs, similar to how different people walk down the stairs.

Why do performers slow down? The structure asks for it or … they just feel like it. How much of this is specific to the performer? Fixed effects: transmitting moods, clarifying musical structure. Transient effects: spontaneous decisions, motor noise, performance errors.

Study – looking at piece-specific vs. performance-specific tempo variation. Results: global tempo comes from the piece; local variation is performance-specific.

Method:

- Define a performance norm

- Determine where performers significantly deviate

- Model the deviations

- Classify performances based on the model

In practice, they use the average performance as the norm. Note also that there may be errors in the annotations that have to be accounted for.
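A toy version of the norm-and-deviation method: treat each performance as a local tempo curve over the final beats, take the average performance as the norm, subtract it, and classify a new performance by the nearest per-pianist mean deviation. The tempo curves are synthetic and the nearest-centroid classifier is a stand-in assumption; the paper's own deviation model is not reproduced here.

```python
import numpy as np

def deviations_from_norm(tempo_curves):
    """tempo_curves: (n_performances, n_beats) local tempo, aligned beat by beat.
    The norm is the average performance; returns (deviations, norm)."""
    norm = tempo_curves.mean(axis=0)
    return tempo_curves - norm, norm

def nearest_centroid(train_dev, train_labels, test_dev):
    """Stand-in classifier: closest per-performer mean deviation curve."""
    labels = sorted(set(train_labels))
    mask_of = np.array(train_labels)
    centroids = np.stack([train_dev[mask_of == lab].mean(axis=0) for lab in labels])
    dists = np.linalg.norm(test_dev[:, None, :] - centroids[None, :, :], axis=2)
    return [labels[i] for i in dists.argmin(axis=1)]

# Hypothetical final ritardandi (BPM over the last 8 beats) for two pianists:
# "A" slows down late and sharply, "B" slows down early and gradually.
late = 100 - np.array([0, 0, 0, 0, 5, 10, 20, 30], dtype=float)
early = 100 - np.array([5, 10, 15, 20, 22, 24, 26, 28], dtype=float)
rng = np.random.default_rng(0)
curves = np.stack([late, late, early, early, late, early]) + rng.normal(0, 1, (6, 8))
labels = ["A", "A", "B", "B", "A", "B"]

dev, norm = deviations_from_norm(curves)
pred = nearest_centroid(dev[:4], labels[:4], dev[4:])
print(pred)
```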

Here are the deviations from the performance norm for various performers.

Accuracy ranges from 43.53% to 65.31% (50% is the random baseline). The results are not overwhelming, but still interesting considering the simplistic model.

Interesting questions about students and schools and styles that may influence results.

All in all, an interesting talk and very clearly presented.

Live from ISMIR

This week I’m attending ISMIR – the 10th International Society for Music Information Retrieval Conference – being held in Kobe, Japan. At this conference researchers gather to advance the state of the art in music information retrieval. It is a varied bunch, including librarians, musicologists, and experts in signal processing, machine learning, text IR, visualization and HCI. I’ll be trying to blog the various talks and poster sessions throughout the conference (but at some point the jetlag will kick in, making it hard for me to think, let alone type). It’s 9AM – the keynote is starting …


Opening Remarks

Masataka and Ich give the opening remarks. First some stats:

- 286 attendees from 28 countries

- 212 submissions from 29 countries

- 123 papers (58%) accepted

- 214 reviewers