
ISMIR Oral Session – Folk Songs

Session Title: Folk songs

Session Chair: Remco C. Veltkamp (Universiteit Utrecht, Netherlands)

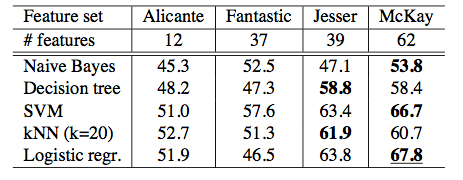

Global Feature Versus Event Models for Folk Song Classification

Ruben Hillewaere, Bernard Manderick and Darrell Conklin

Abstract: Music classification has been widely investigated in the past few years using a variety of machine learning approaches. In this study, a corpus of 3367 folk songs, divided into six geographic regions, has been created and is used to evaluate two popular yet contrasting methods for symbolic melody classification. For the task of folk song classification, a global feature approach, which summarizes a melody as a feature vector, is outperformed by an event model of abstract event features. The best accuracy obtained on the folk song corpus was achieved with an ensemble of event models. These results indicate that the event model should be the default model of choice for folk song classification.

Robust Segmentation and Annotation of Folk Song Recordings

Meinard Mueller, Peter Grosche and Frans Wiering

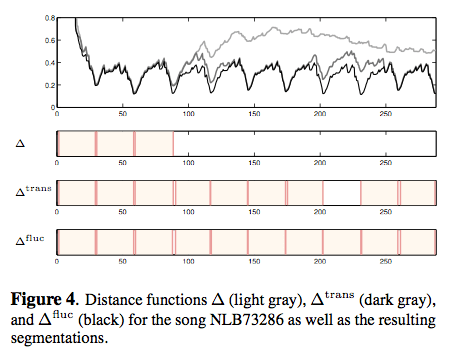

Abstract: Even though folk songs have been passed down mainly by oral tradition, most musicologists study the relation between folk songs on the basis of score-based transcriptions. Due to the complexity of audio recordings, once having the transcriptions, the original recorded tunes are often no longer studied in the actual folk song research though they still may contain valuable information. In this paper, we introduce an automated approach for segmenting folk song recordings into its constituent stanzas, which can then be made accessible to folk song researchers by means of suitable visualization, searching, and navigation interfaces. Performed by elderly non-professional singers, the main challenge with the recordings is that most singers have serious problems with the intonation, fluctuating with their voices even over several semitones throughout a song. Using a combination of robust audio features along with various cleaning and audio matching strategies, our approach yields accurate segmentations even in the presence of strong deviations.

Notes: Interesting talk (as always) by Meinard about the real-world problems of working with folk song audio recordings.

Supporting Folk-Song Research by Automatic Metric Learning and Ranking

Korinna Bade, Andreas Nürnberger, Sebastian Stober, Jörg Garbers and Frans Wiering

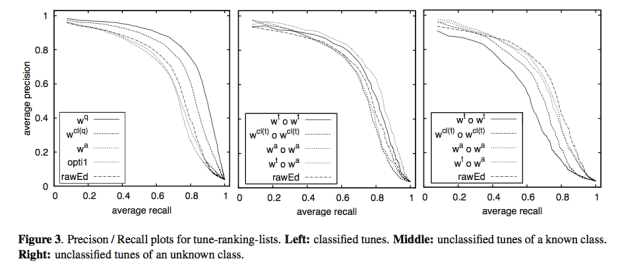

Abstract: In folk song research, appropriate similarity measures can be of great help, e.g. for classification of new tunes. Several measures have been developed so far. However, a particular musicological way of classifying songs is usually not directly reflected by just a single one of these measures. We show how a weighted linear combination of different basic similarity measures can be automatically adapted to a specific retrieval task by learning this metric based on a special type of constraints. Further, we describe how these constraints are derived from information provided by experts. In experiments on a folk song database, we show that the proposed approach outperforms the underlying basic similarity measures and study the effect of different levels of adaptation on the performance of the retrieval system.
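To make the idea of an adaptable weighted combination concrete, here is a minimal sketch. It is not the authors' algorithm: it assumes the expert constraints are relative ("for this query, tune a belongs closer than tune b"), treats the basic measures as distances, and uses a simple perceptron-style update. The tune ids, the two toy measures and all function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Measure = Callable[[str, str], float]   # distance between two tune ids
Constraint = Tuple[str, str, str]       # (query, should_be_closer, should_be_farther)


def combined_distance(weights: Sequence[float], measures: Sequence[Measure],
                      x: str, y: str) -> float:
    """Weighted linear combination of the basic distance measures."""
    return sum(w * m(x, y) for w, m in zip(weights, measures))


def learn_weights(measures: Sequence[Measure], constraints: Sequence[Constraint],
                  epochs: int = 50, rate: float = 0.1) -> List[float]:
    """Adjust the weights until the relative constraints are satisfied (if possible)."""
    weights = [1.0 / len(measures)] * len(measures)
    for _ in range(epochs):
        for query, near, far in constraints:
            d_near = combined_distance(weights, measures, query, near)
            d_far = combined_distance(weights, measures, query, far)
            if d_near >= d_far:  # constraint violated
                # Reward measures that already rank `near` ahead of `far`.
                for i, m in enumerate(measures):
                    weights[i] += rate * (m(query, far) - m(query, near))
                    weights[i] = max(weights[i], 0.0)  # keep weights non-negative
                total = sum(weights)
                if total > 0:
                    weights = [w / total for w in weights]
    return weights


# Toy data (hypothetical): each tune is (pitch_feature, rhythm_feature).
TUNES = {"q": (0.0, 0.0), "a": (1.0, 0.1), "b": (0.1, 1.0)}


def pitch_distance(x: str, y: str) -> float:
    return abs(TUNES[x][0] - TUNES[y][0])


def rhythm_distance(x: str, y: str) -> float:
    return abs(TUNES[x][1] - TUNES[y][1])


MEASURES = [pitch_distance, rhythm_distance]
# An expert says: for query "q", tune "a" belongs closer than tune "b".
weights = learn_weights(MEASURES, [("q", "a", "b")])
```

In this toy case the constraint can only be met by trusting rhythm more than pitch, so the learned weights shift toward the rhythm measure.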

ISMIR 2009 – The Future of MIR

Posted by Paul in ismir, Music, recommendation on October 29, 2009

This year ISMIR concludes with the 1st Workshop on the Future of MIR. The workshop is organized by students who are indeed the future of MIR.

09:00-10:00 Special Session: 1st Workshop on the Future of MIR

MIR, where we are, where we are going

Session Chair: Amélie Anglade, Program Chair of f(MIR)

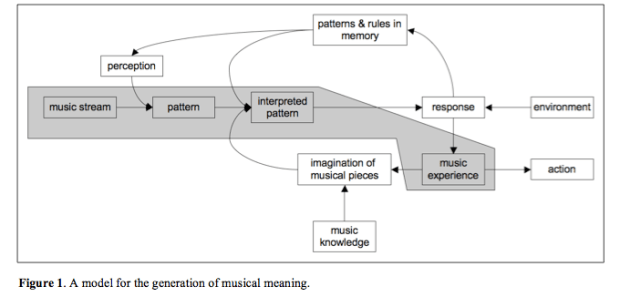

Meaningful Music Retrieval

Frans Wiering – [pdf]

Notes

- Some unfortunate tendencies: an anatomical view of music – a dead body that we do autopsies on; time is the loser. Traditional production-oriented/

- Measure of similarity: relevance, surprise

- Few interesting applications for end-users

- bad fit to present-day musicological themes

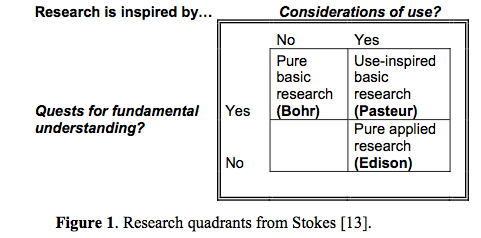

- We are in the world of ‘pure applied research’ – no true interdisciplinarity between music domain knowledge and computer science.

- Music is meaningful (and the underlying personal motivation of most MIR researchers).

- Meaning in musicology – traditionally a taboo subject

- Subjectivity: an individual’s disposition to engage in social and cultural interactions

- Meaning generation process – we have a long-term memory for music –

- Can musical meaning provide the ‘big story line’ for MIR?

The Discipline Formerly Known As MIR

Perfecto Herrera, Joan Serrà, Cyril Laurier, Enric Guaus, Emilia Gómez and Xavier Serra

Intro: Our exploration is not a science-fiction essay. We do not try to imagine how music will be conceptualized, experienced and mediated by our yet-to-come research, technological achievements and music gizmos. Alternatively, we reflect on how the discipline should evolve to become consolidated as such, in order it may get an effective future instead of becoming, after a promising start, just a “would-be” discipline. Our vision addresses different aspects: the discipline’s object of study, the employed methodologies, social and cultural impacts (which are out of this long abstract because of space restrictions), and we finish with some (maybe) disturbing issues that could be taken as partial and biased guidelines for future research.

Notes: One motivation for advancing MIR – more banquets!

- MIR is no more about retrieval than computer science is about computers

- Music Information Retrieval – it’s too narrow

- Music Information or Information about Music?

- Interested in the interaction with music information

- We should be asking more profound questions

- music

- content treasures in short musical excerpts, tracks, performances, etc.

- context

- music understanding systems

- Most metadata will be generated in the creation / production phase (hmm.. don’t agree necessarily, all the good metadata (tags, who likes what) is based on context and use which is post-hoc)

- Instead of automatic analysis – build systems to help humans help humans

- Music like water? or Music as dog!!! – a friend – companion –

- Personalization, Findability

- Music Turing test

Good, provocative talk

Oral Session 2: Potential future MIR applications

Session Chair: Jason Hockman (McGill University), Program Chair of f(MIR)

Machine Listening to Percussion: Current Approaches and Future Directions – [pdf]

Michael Ward

Abstract: approaches have been taken to detect and classify percussive events within music signals for a variety of purposes with differing and converging aims. In this paper an overview of those technologies is presented and a discussion of the issues still to overcome and future possibilities in the field are presented. Finally a system capable of monitoring a student drummer is envisaged which draws together current approaches and future work in the field.

Notes:

- Challenges: Onset detection of isolated drum strokes

- Onset detection and classification of overlapping drum sounds

- Onset detection and classification in the presence of other instruments

- Variability in percussive sounds. Dozens of criteria affect the sounds produced (strike velocity, angle, position etc.)

- Future Research Areas

- Extension of recognition to include the wide variety of strokes. (open hh, half-open hh, hh foot splash etc)

MIR When All Recordings Are Gone: Recommending Live Music in Real-Time – [pdf]

Marco Lüthy and Jean-Julien Aucouturier

Recommending live and short lived events. Bandsintown, Songkick, gigulate … pay attention to this paper.

Notes:

- Recommendation for live music in real-time

- Coldplay -> free album when you get a ticket to a Coldplay concert – give away the music

- NIN -> USB keys in the toilet – which had strange recordings on them – strange sounds – an FFT of the sounds showed a phone number and GPS coordinates – turned into a treasure hunt to a Nine Inch Nails concert.

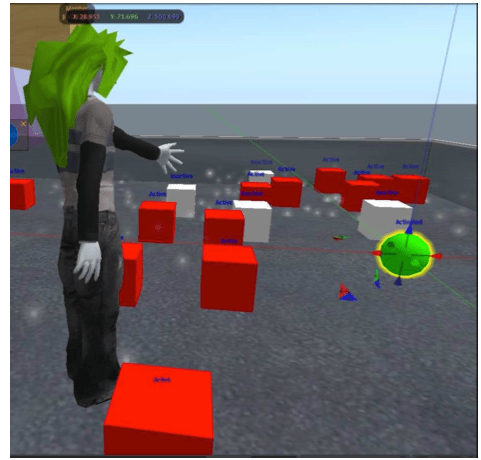

- Komuso Tokugawa – an avatar for a musician in Second Life. Plays in Second Life, tweets concert announcements (playing wake for Les Paul in 3 minutes)

- ‘How do we get there in time?’

- JJ walked through how to implement a recommender system in Second Life

- Implicit preference inferred from how long your avatar listens to a concert (Nicole Yankelovich at Sun Labs should look at this stuff)

- Great talk by JJ – full of energy – neat ideas. Good work.

Poster Session

- Global Access to Ethnic Music: The Next Big Challenge?

Olmo Cornelis, Dirk Moelants and Marc Leman

- The Future of Music IR: How Do You Know When a Problem Is Solved?

Eric Nichols and Donald Byrd

ISMIR 2009 – The Industry Panel

On Thursday I participated in the ISMIR industrial panel. Eight members of industry talked about the issues and challenges that they face. I had a good time on the panel: the panelists were all on target and very thoughtful, and there were great questions from the audience. I’m happy too that the IRC channel offered a place for people to vent without the session turning into a SXSW-style riot.

Justin Donaldson kept good notes on the panel and has posted them on his blog: ISMIR 2009 Industry Panel

Taiko at the ISMIR 2009 Banquet

During the ISMIR Banquet (held in the most beautiful place in the world, the Kobe Kachoen) we were entertained by the Maturishu, a Taiko performance group. They were just fantastic.

ISMIR Keynote – Wind instrument-playing humanoid robots

What’s not to love!?!? Robots and Music! This was a great talk.

Wind instrument-playing humanoid robots

Atsuo Takanishi

Some history of robots:

Wabot-2 – early music playing robot

Wabian-2 – walking robots

Emotional Robots

Kobian: Emotional humanoid robot

Voice Producing Robots

Music Performance Robots


ISMIR Oral Session 6 – Similarity

Oral Session 6 – Similarity

Chair: Roger Dannenberg

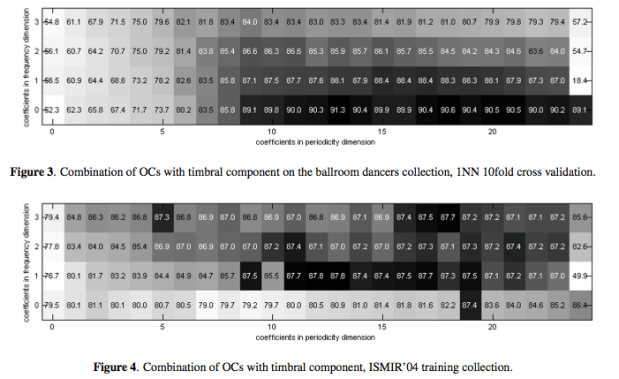

ON RHYTHM AND GENERAL MUSIC SIMILARITY

Tim Pohle, Dominik Schnitzer, Markus Schedl, Peter Knees and Gerhard Widmer

Paper: pdf

Abstract: The contribution of this paper is threefold:

First, we propose modifications to Fluctuation Patterns [14]. The resulting descriptors are evaluated in the task of rhythm similarity computation on the “Ballroom Dancers” collection. Second, we show that by combining these rhythmic descriptors with a timbral component, results for rhythm similarity computation are improved beyond the level obtained when using the rhythm descriptor component alone. Third, we present one “unified” algorithm with fixed parameter set. This algorithm is evaluated on three different music collections. We conclude from these evaluations that the computed similarities reflect relevant aspects both of rhythm similarity and of general music similarity. The performance can be improved by tuning parameters of the “unified” algorithm to the specific task (rhythm similarity / general music similarity) and the specific collection, respectively.

Notes:

- B&O recommender used OFAI

- Nice results

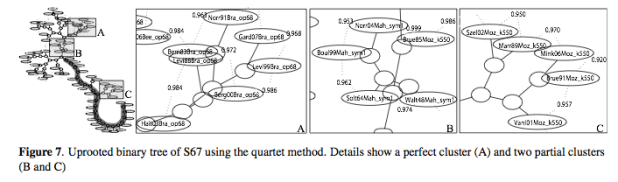

GROUPING RECORDED MUSIC BY STRUCTURAL SIMILARITY

Juan Pablo Bello

Paper: PDF

Abstract: This paper introduces a method for the organization of recorded music according to structural similarity. It uses the Normalized Compression Distance (NCD) to measure the pairwise similarity between songs, represented using beat-synchronous self-similarity matrices. The approach is evaluated on its ability to cluster a collection into groups of performances of the same musical work. Tests are aimed at finding the combination of system parameters that improve clustering, and at highlighting the benefits and shortcomings of the proposed method. Results show that structural similarities can be well characterized by this approach, given consistency in beat tracking and overall song structure.

Notes:

- Normalized Compression Distance (NCD) is a universal distance metric.

- Experimental setup – all classical music
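For readers who have not met NCD before, here is a minimal sketch of the standard formula, NCD(x, y) = (C(xy) − min(C(x), C(y))) / max(C(x), C(y)), where C is the compressed size. Using zlib as the compressor and raw byte strings as input are my assumptions for illustration; the paper applies NCD to beat-synchronous self-similarity matrices, and this sketch does not reproduce that representation.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Length in bytes of the zlib-compressed data (compressor choice is an assumption)."""
    return len(zlib.compress(data, 9))


def ncd(x: bytes, y: bytes) -> float:
    """Normalized Compression Distance between two byte strings."""
    cx, cy = compressed_size(x), compressed_size(y)
    cxy = compressed_size(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


# Toy inputs: a highly repetitive sequence and deterministic pseudo-random noise.
repetitive = b"ABCD" * 512
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(2048))

same = ncd(repetitive, repetitive)   # shares all structure, so the distance is small
different = ncd(repetitive, noise)   # shares no structure, so the distance is near 1
```

The intuition: if two sequences share structure, compressing them together costs little more than compressing one of them alone.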

A FILTER-AND-REFINE INDEXING METHOD FOR FAST SIMILARITY SEARCH IN MILLIONS OF MUSIC TRACKS

Dominik Schnitzer, Arthur Flexer, Gerhard Widmer

Paper: PDF

ABSTRACT We present a filter-and-refine method to speed up acoustic audio similarity queries which use the Kullback-Leibler divergence as similarity measure. The proposed method rescales the divergence and uses a modified FastMap [1] implementation to accelerate nearest-neighbor queries. The search for similar music pieces is accelerated by a factor of 10-30 compared to a linear scan but still offers high recall values (relative to a linear scan) of 95-99%. We show how the proposed method can be used to query several million songs for their acoustic neighbors very fast while producing almost the same results that a linear scan over the whole database would return. We present a working prototype implementation which is able to process similarity queries on a 2.5 million songs collection in about half a second on a standard CPU.

Notes: Gaussian similarity features can be expensive.
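The filter-and-refine pattern itself is easy to sketch: rank everything with a cheap approximate distance, keep a short candidate list, then re-rank only those candidates with the expensive measure. The sketch below is a simplification under stated assumptions: each track is a single one-dimensional Gaussian (mean, standard deviation), the expensive measure is the closed-form symmetric KL divergence, and the cheap filter is a plain Euclidean distance on the parameters, standing in for the paper's rescaled divergence and modified FastMap embedding. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Gaussian = Tuple[float, float]  # (mean, standard deviation)


def symmetric_kl(p: Gaussian, q: Gaussian) -> float:
    """KL(p||q) + KL(q||p) for two univariate Gaussians (closed form)."""
    (mp, sp), (mq, sq) = p, q
    d2 = (mp - mq) ** 2
    return (sp ** 2 + d2) / (2 * sq ** 2) + (sq ** 2 + d2) / (2 * sp ** 2) - 1.0


def cheap_distance(p: Gaussian, q: Gaussian) -> float:
    """Stand-in for a fast embedded distance: Euclidean on (mean, std)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def linear_scan(query: Gaussian, models: Sequence[Gaussian], k: int) -> List[int]:
    """Exact k nearest neighbours using the expensive measure on every track."""
    order = sorted(range(len(models)), key=lambda i: symmetric_kl(query, models[i]))
    return order[:k]


def filter_and_refine(query: Gaussian, models: Sequence[Gaussian],
                      k: int, filter_size: int) -> List[int]:
    """Filter with the cheap distance, then refine the candidates exactly."""
    candidates = sorted(range(len(models)),
                        key=lambda i: cheap_distance(query, models[i]))[:filter_size]
    return sorted(candidates, key=lambda i: symmetric_kl(query, models[i]))[:k]


# Toy collection (hypothetical numbers).
MODELS = [(0.0, 1.0), (0.1, 1.1), (5.0, 1.0), (0.2, 0.9), (10.0, 2.0)]
QUERY = (0.0, 1.0)
approx = filter_and_refine(QUERY, MODELS, k=2, filter_size=3)
exact = linear_scan(QUERY, MODELS, k=2)
```

Recall relative to the linear scan depends on how well the cheap distance preserves the ordering and on how large the candidate list is, which is the trade-off the abstract's 95-99% figure describes.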

ISMIR – MIREX Panel Discussion

Stephen Downie presents the MIREX session

Statistics for 2009:

- 26 tasks

- 138 participants

- 289 evaluation runs

Results are now published: http://music-ir.org/r/09results

This year, new datasets:

- Mazurkas

- MIR 1K

- Bach Chorales

- Chord and Segmentation datasets

- Mood dataset

- Tag-a-Tune

Evalutron 6K – Human evaluations – this year, 50 graders / 7500 possible grading events.

What’s Next?

- NEMA

- End-to-End systems and tasks

- Qualitative assessments

- Possible Journal ‘Special Issue’

- MIREX 2010 early start: http://www.music-ir.org/mirex/2010/index.php/Main_Page

- Suggestions to model after the ACM MM Grand challenge

Issues about MIREX

- Rein in the parameter explosion

- Not rigorously tested algorithms

- Hard-coded parameters, path-separators, etc

- Poorly specified data inputs/outputs

- Dynamically linked libraries

- Windows submissions

- Pre-compiled Matlab/MEX Submissions

- The ‘graduation’ problem – Andreas and Cameron will be gone in summer.

Long discussion with people opining about tests, data. Ben Fields had a particularly good point about trying to make MIREX better reflect real systems that draw upon web resources.

ISMIR Oral Session 5 – Tags

Oral Session 5 – Tags

Session Chair: Paul Lamere

I’m the session chair for this session, so I can’t keep notes. So instead I offer the abstracts.

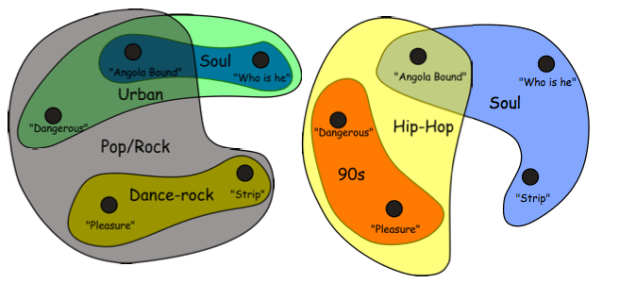

TAG INTEGRATED MULTI-LABEL MUSIC STYLE CLASSIFICATION WITH HYPERGRAPH

Fei Wang, Xin Wang, Bo Shao, Tao Li, Mitsunori Ogihara

Abstract: Automatic music style classification is an important, but challenging problem in music information retrieval. It has a number of applications, such as indexing of and searching in musical databases. Traditional music style classification approaches usually assume that each piece of music has a unique style and they make use of the music contents to construct a classifier for classifying each piece into its unique style. However, in reality, a piece may match more than one, even several different styles. Also, in this modern Web 2.0 era, it is easy to get a hold of additional, indirect information (e.g., music tags) about music. This paper proposes a multi-label music style classification approach, called Hypergraph integrated Support Vector Machine (HiSVM), which can integrate both music contents and music tags for automatic music style classification. Experimental results based on a real world data set are presented to demonstrate the effectiveness of the method.

EASY AS CBA: A SIMPLE PROBABILISTIC MODEL FOR TAGGING MUSIC

Matthew D. Hoffman, David M. Blei, Perry R. Cook

ABSTRACT Many songs in large music databases are not labeled with semantic tags that could help users sort out the songs they want to listen to from those they do not. If the words that apply to a song can be predicted from audio, then those predictions can be used both to automatically annotate a song with tags, allowing users to get a sense of what qualities characterize a song at a glance. Automatic tag prediction can also drive retrieval by allowing users to search for the songs most strongly characterized by a particular word. We present a probabilistic model that learns to predict the probability that a word applies to a song from audio. Our model is simple to implement, fast to train, predicts tags for new songs quickly, and achieves state-of-the-art performance on annotation and retrieval tasks.

USING ARTIST SIMILARITY TO PROPAGATE SEMANTIC INFORMATION

Joon Hee Kim, Brian Tomasik, Douglas Turnbull

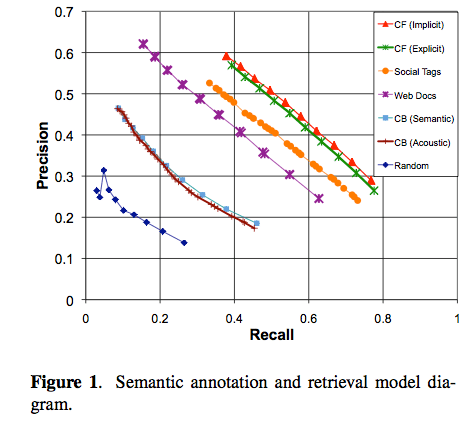

ABSTRACT Tags are useful text-based labels that encode semantic information about music (instrumentation, genres, emotions, geographic origins). While there are a number of ways to collect and generate tags, there is generally a data sparsity problem in which very few songs and artists have been accurately annotated with a sufficiently large set of relevant tags. We explore the idea of tag propagation to help alleviate the data sparsity problem. Tag propagation, originally proposed by Sordo et al., involves annotating a novel artist with tags that have been frequently associated with other similar artists. In this paper, we explore four approaches for computing artists similarity based on different sources of music information (user preference data, social tags, web documents, and audio content). We compare these approaches in terms of their ability to accurately propagate three different types of tags (genres, acoustic descriptors, social tags). We find that the approach based on collaborative filtering performs best. This is somewhat surprising considering that it is the only approach that is not explicitly based on notions of semantic similarity. We also find that tag propagation based on content-based music analysis results in relatively poor performance.
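Tag propagation as described in the abstract can be sketched in a few lines: score each tag by how strongly the similar artists that carry it resemble the new artist, then keep the top few. The similarity-weighted vote, the artist names and the function name are assumptions made for illustration; the paper compares several ways of computing the similarity itself, which this sketch takes as given.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Set, Tuple


def propagate_tags(similar_artists: Sequence[Tuple[str, float]],
                   artist_tags: Dict[str, Set[str]],
                   top_n: int = 3) -> List[str]:
    """Return the top_n tags for a novel artist, voted by its similar artists.

    similar_artists: (artist name, similarity score) pairs for the novel artist.
    artist_tags: known tag sets for already-annotated artists.
    """
    scores: Dict[str, float] = defaultdict(float)
    for artist, similarity in similar_artists:
        for tag in artist_tags.get(artist, ()):
            scores[tag] += similarity  # each neighbour votes with its similarity
    # Highest score first; ties broken alphabetically for determinism.
    ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
    return [tag for tag, _ in ranked[:top_n]]


# Hypothetical annotated artists and neighbours of an untagged artist.
KNOWN_TAGS = {
    "Artist A": {"rock", "guitar"},
    "Artist B": {"rock", "indie"},
    "Artist C": {"jazz"},
}
NEIGHBOURS = [("Artist A", 0.9), ("Artist B", 0.8), ("Artist C", 0.1)]
suggested = propagate_tags(NEIGHBOURS, KNOWN_TAGS, top_n=2)
```

Whatever produces the neighbour list (collaborative filtering, social tags, web documents or audio content) plugs in unchanged, which is what makes the four approaches directly comparable.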

MUSIC MOOD REPRESENTATIONS FROM SOCIAL TAGS

Cyril Laurier, Mohamed Sordo, Joan Serra, Perfecto Herrera

ABSTRACT This paper presents findings about mood representations. We aim to analyze how do people tag music by mood, to create representations based on this data and to study the agreement between experts and a large community. For this purpose, we create a semantic mood space from last.fm tags using Latent Semantic Analysis. With an unsupervised clustering approach, we derive from this space an ideal categorical representation. We compare our community based semantic space with expert representations from Hevner and the clusters from the MIREX Audio Mood Classification task. Using dimensional reduction with a Self-Organizing Map, we obtain a 2D representation that we compare with the dimensional model from Russell. We present as well a tree diagram of the mood tags obtained with a hierarchical clustering approach. All these results show a consistency between the community and the experts as well as some limitations of current expert models. This study demonstrates a particular relevancy of the basic emotions model with four mood clusters that can be summarized as: happy, sad, angry and tender. This outcome can help to create better ground truth and to provide more realistic mood classification algorithms. Furthermore, this method can be applied to other types of representations to build better computational models.

EVALUATION OF ALGORITHMS USING GAMES: THE CASE OF MUSIC TAGGING

Edith Law, Kris West, Michael Mandel, Mert Bay, J. Stephen Downie

Abstract Search by keyword is an extremely popular method for retrieving music. To support this, novel algorithms that automatically tag music are being developed. The conventional way to evaluate audio tagging algorithms is to compute measures of agreement between the output and the ground truth set. In this work, we introduce a new method for evaluating audio tagging algorithms on a large scale by collecting set-level judgments from players of a human computation game called TagATune. We present the design and preliminary results of an experiment comparing five algorithms using this new evaluation metric, and contrast the results with those obtained by applying several conventional agreement-based evaluation metrics.

ISMIR Poster Madness #3

- (PS3-1) Automatic Identification for Singing Style based on Sung Melodic Contour Characterized in Phase Plane

Tatsuya Kako, Yasunori Ohishi, Hirokazu Kameoka, Kunio Kashino and Kazuya Takeda

- (PS3-2) Automatic Identification of Instrument Classes in Polyphonic and Poly-Instrument Audio

Philippe Hamel, Sean Wood and Douglas Eck

Looks very interesting

- (PS3-3) Using Regression to Combine Data Sources for Semantic Music Discovery

Brian Tomasik, Joon Hee Kim, Margaret Ladlow, Malcolm Augat, Derek Tingle, Rich Wicentowski and Douglas Turnbull

- (PS3-4) Lyric Text Mining in Music Mood Classification

Xiao Hu, J. Stephen Downie and Andreas Ehmann

lyrics and mood – surprising results!

- (PS3-5) Robust and Fast Lyric Search based on Phonetic Confusion Matrix

Xin Xu, Masaki Naito, Tsuneo Kato and Hisashi Kawai

Phonetic confusion – misheard lyrics! KDDI – must see this.

- (PS3-6) Using Harmonic and Melodic Analyses to Automate the Initial Stages of Schenkerian Analysis

Phillip Kirlin

Schenkerian analysis – what is this really?

- (PS3-7) Hierarchical Sequential Memory for Music: A Cognitive Model

James Maxwell, Philippe Pasquier and Arne Eigenfeldt

Cognitive model for online learning.

- (PS3-8) Additions and Improvements in the ACE 2.0 Music Classifier

Jessica Thompson, Cory McKay, J. Ashley Burgoyne and Ichiro Fujinaga

Open source MIR in Java

- (PS3-9) A Probabilistic Topic Model for Unsupervised Learning of Musical Key-Profiles

Diane Hu and Lawrence Saul

topic model for key finding

- (PS3-10) Publishing Music Similarity Features on the Semantic Web

Dan Tidhar, György Fazekas, Sefki Kolozali and Mark Sandler

SoundBite – distributed feature collection

- (PS3-11) Genre Classification Using Bass-Related High-Level Features and Playing Styles

Jakob Abeßer, Hanna Lukashevich, Christian Dittmar and Gerald Schuller

semantic features

- (PS3-12) From Multi-Labeling to Multi-Domain-Labeling: A Novel Two-Dimensional Approach to Music Genre Classification

Hanna Lukashevich, Jakob Abeßer, Christian Dittmar and Holger Großmann

Fraunhofer – autotagging

- (PS3-13) 21st Century Electronica: MIR Techniques for Classification and Performance

Dimitri Diakopoulos, Owen Vallis, Jordan Hochenbaum, Jim Murphy and Ajay Kapur

Automated ISHKURS with multitouch – woot

- (PS3-14) Relationships Between Lyrics and Melody in Popular Music

Eric Nichols, Dan Morris, Sumit Basu and Chris Raphael

Text features vs melodic features – where do the stressed syllables fall

- (PS3-15) RhythMiXearch: Searching for Unknown Music by Mixing Known Music

Makoto P. Kato

Looks like an echo nest remix: AutoDJ

- (PS3-16) Musical Structure Retrieval by Aligning Self-Similarity Matrices

Benjamin Martin, Matthias Robine and Pierre Hanna

- (PS3-17) Exploring African Tone Scales

Dirk Moelants, Olmo Cornelis and Marc Leman

No standardized scales – how do you deal with that?

- (PS3-18) A Discrete Filter Bank Approach to Audio to Score Matching for Polyphonic Music

Nicola Montecchio and Nicola Orio

- (PS3-19) Accelerating Non-Negative Matrix Factorization for Audio Source Separation on Multi-Core and Many-Core Architectures

Eric Battenberg and David Wessel

Runs NMF on GPUs and OpenMP

- (PS3-20) Musical Models for Melody Alignment

Peter van Kranenburg, Anja Volk, Frans Wiering and Remco C. Veltkamp

alignment of folk songs

- (PS3-21) Heterogeneous Embedding for Subjective Artist Similarity

Brian McFee and Gert Lanckriet

Crazy ass features!

- (PS3-22) The Intersection of Computational Analysis and Music Manuscripts: A New Model for Bach Source Studies of the 21st Century

Masahiro Niitsuma, Tsutomu Fujinami and Yo Tomita

ISMIR Oral Session 4 – Music Recommendation and playlisting

Music Recommendation and playlisting

Session Chair: Douglas Turnbull

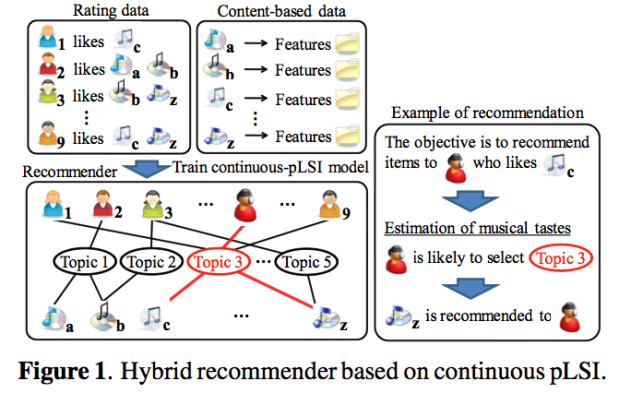

CONTINUOUS PLSI AND SMOOTHING TECHNIQUES FOR HYBRID MUSIC RECOMMENDATION

by Kazuyoshi Yoshii and Masataka Goto

- Unexpected encounters with unknown songs are increasingly important.

- Want accurate and diversified recommendations

- Use a probabilistic approach suitable to deal with uncertainty of rating histories

- Compares CF vs. content-based and his Hybrid filtering system

Approach: Use pLSI to create a 3-way aspect model: user-song-feature – the unobservable category regarding genre, tempo, vocal age, popularity etc. In pLSI, typical patterns are given by relationships between users, songs and a limited number of topics. Some drawbacks: pLSI needs discrete features, and multinomial distributions are assumed. To deal with this, they formulate continuous pLSI, which uses Gaussian mixture models and can assume continuous distributions. Drawbacks of continuous pLSI: the local minimum problem and the hub problem. Popular songs are recommended often because of the hubs. How to deal with this: Gaussian parameter tying, which reduces the number of free parameters so that only the mixture weights vary, and artist-based song clustering: train an artist-based model and update it to a song-based model by an incremental training method (from 2007).

Here’s the system model:

Evaluation: They found that using the techniques to adjust model complexity significantly improved the accuracy of recommendations and that the second technique could also reduce hubness.

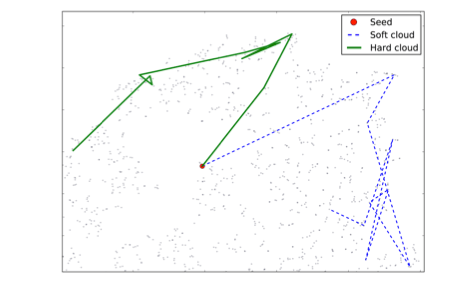

STEERABLE PLAYLIST GENERATION BY LEARNING SONG SIMILARITY FROM RADIO STATION PLAYLISTS

François Maillet, Douglas Eck, Guillaume Desjardins, Paul Lamere

This paper presents an approach to generating steerable playlists. They first demonstrate a method for learning song transition probabilities from audio features extracted from songs played in professional radio station playlists and then show that by using this learnt similarity function as a prior, they are able to generate steerable playlists by choosing the next song to play not simply based on that prior, but on a tag cloud that the user is able to manipulate to express the high-level characteristics of the music he wishes to listen to.

- Learn a similarity space from commercial radio station playlists

- generate steerable playlists

François defines a playlist. Data sources: Radio Paradise and Yes.com’s API. 7 million tracks.

Problem: They had positive examples but didn’t have an explicit set of negative examples. Chose them at random.

Learning the song space: Trained a binary classifier to determine if a song sequence is real.

Features: Timbre, Rhythmic/danceability, loudness
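The "positives from real playlists, negatives chosen at random" setup can be sketched as a data-preparation step. This is a simplified illustration, not the code from the paper: it assumes song pairs rather than longer sequences, uses song ids in place of audio features, and every name here is hypothetical. The labelled pairs would then be fed to whatever binary classifier is being trained.

```python
import random
from typing import List, Sequence, Tuple

Pair = Tuple[str, str]


def build_training_pairs(playlists: Sequence[Sequence[str]],
                         seed: int = 0) -> List[Tuple[Pair, int]]:
    """Label consecutive playlist songs 1 and random non-consecutive pairs 0."""
    rng = random.Random(seed)
    # Positive examples: transitions that really occurred in a playlist.
    positives = {(a, b) for pl in playlists for a, b in zip(pl, pl[1:])}
    songs = sorted({song for pl in playlists for song in pl})

    # Never ask for more negatives than actually exist (avoids looping forever).
    possible = len(songs) * (len(songs) - 1) - len(positives)
    target = min(len(positives), possible)

    negatives = set()
    while len(negatives) < target:
        pair = (rng.choice(songs), rng.choice(songs))
        if pair[0] != pair[1] and pair not in positives:
            negatives.add(pair)

    return ([(pair, 1) for pair in sorted(positives)]
            + [(pair, 0) for pair in sorted(negatives)])


# Hypothetical radio logs.
PLAYLISTS = [["a", "b", "c"], ["c", "d", "a"]]
examples = build_training_pairs(PLAYLISTS)
```

Random negatives are noisy, since some random pairs would make perfectly good transitions, but with a large catalogue most of them will not.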

Eval:

EVALUATING AND ANALYSING DYNAMIC PLAYLIST GENERATION HEURISTICS USING RADIO LOGS AND FUZZY SET THEORY

Klaas Bosteels, Elias Pampalk, Etienne Kerr

Abstract: In this paper, we analyse and evaluate several heuristics for adding songs to a dynamically generated playlist. We explain how radio logs can be used for evaluating such heuristics, and show that formalizing the heuristics using fuzzy set theory simplifies the analysis. More concretely, we verify previous results by means of a large scale evaluation based on 1.26 million listening patterns extracted from radio logs, and explain why some heuristics perform better than others by analysing their formal definitions and conducting additional evaluations.

Notes:

- Dynamic playlist generation

- Formalization using fuzzy sets. Sets of accepted songs and sets of rejected songs

- Why last two songs not accepted? To make sure the listener is still paying attention?

- Interesting observation that the thing that matters most is membership in the fuzzy set of rejected songs. Why? Inconsistent skipping behavior.

SMARTER THAN GENIUS? HUMAN EVALUATION OF MUSIC RECOMMENDER SYSTEMS.

Luke Barrington, Reid Oda, Gert Lanckriet

Abstract: Genius is a popular commercial music recommender system that is based on collaborative filtering of huge amounts of user data. To understand the aspects of music similarity that collaborative filtering can capture, we compare Genius to two canonical music recommender systems: one based purely on artist similarity, the other purely on similarity of acoustic content. We evaluate this comparison with a user study of 185 subjects. Overall, Genius produces the best recommendations. We demonstrate that collaborative filtering can actually capture similarities between the acoustic content of songs. However, when evaluators can see the names of the recommended songs and artists, we find that artist similarity can account for the performance of Genius. A system that combines these musical cues could generate music recommendations that are as good as Genius, even when collaborative filtering data is unavailable.

Great talk, lots of things to think about.