Archive for category music information retrieval

Locating Tune Changes and Providing a Semantic Labelling of Sets of Irish Traditional Tunes

Posted by Paul in events, ismir, music information retrieval on August 10, 2010

Locating Tune Changes and Providing a Semantic Labelling of Sets of Irish Traditional Tunes by Cillian Kelly (pdf)

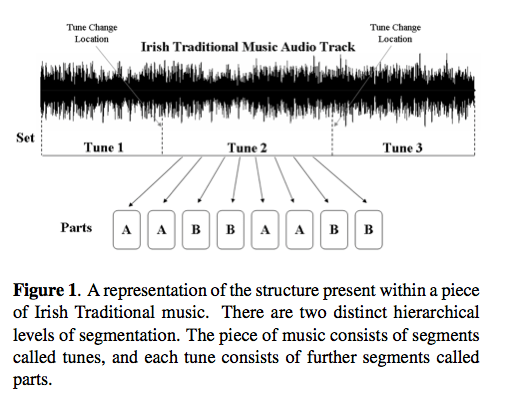

Abstract – An approach is presented which provides the tune change locations within a set of Irish Traditional tunes. Also provided are semantic labels for each part of each tune within the set. A set in Irish Traditional music is a number of individual tunes played segue. Each of the tunes in the set are made up of structural segments called parts. Musical variation is a prominent characteristic of this genre. However, a certain set of notes known as ‘set accented tones’ are considered impervious to musical variation. Chroma information is extracted at ‘set accented tone’ locations within the music. The resulting chroma vectors are grouped to represent the parts of the music. The parts are then compared with one another to form a part similarity matrix. Unit kernels which represent the possible structures of an Irish Traditional tune are matched with the part similarity matrix to determine the tune change locations and semantic part labels.
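To make the "part similarity matrix" step a bit more concrete, here is a minimal sketch. The abstract does not say which similarity measure is used, so cosine similarity is my assumption, and the function names and the tiny 3-bin "chroma" vectors are hypothetical toys (real chroma vectors have 12 bins).

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature lists (assumed measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0

def part_similarity_matrix(parts):
    """Compare every part with every other part.

    Each part is the concatenation of the chroma vectors sampled at its
    'set accented tone' locations (flattened into one list).
    """
    return [[cosine_similarity(p, q) for q in parts] for p in parts]

# Toy example: two identical parts followed by a different one (an "A A B" shape).
part_a = [1, 0, 0, 0, 1, 0]
part_b = [0, 0, 1, 1, 0, 0]
matrix = part_similarity_matrix([part_a, part_a, part_b])
for row in matrix:
    print(["%.2f" % value for value in row])
```

Repeated parts show up as bright off-diagonal cells in a matrix like this, which is the kind of pattern that structure kernels could then be matched against.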

Identifying Repeated Patterns in Music …

Posted by Paul in events, ismir, music information retrieval on August 10, 2010

I am at ISMIR this week, blogging sessions and papers that I find interesting.

Identifying Repeated Patterns in Music using Sparse Convolutive Non-Negative Matrix Factorization – Ron Weiss, Juan Bello (pdf)

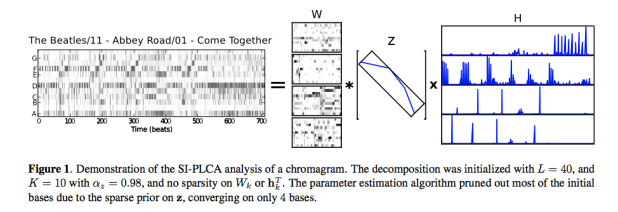

Problem: Looking at repetition in music – verse, chorus, repeated motifs. Can one identify high-level and short-term structure simultaneously from audio? Lots of math in this.

Ron describes an unsupervised, data-driven method for automatically identifying repeated patterns in music by analyzing a feature matrix using a variant of sparse convolutive non-negative matrix factorization. They utilize sparsity constraints to automatically identify the number of patterns and their lengths, parameters that would normally need to be fixed in advance. The proposed analysis is applied to beat-synchronous chromagrams in order to concurrently extract repeated harmonic motifs and their locations within a song. They show how this analysis can be used for long-term structure segmentation, resulting in an algorithm that is competitive with other state-of-the-art segmentation algorithms based on hidden Markov models and self-similarity matrices.

One particular application is riff identification for music thumbnailing. Another application is structure segmentation (verse, chorus, bridge, etc.).

The code is open-sourced here: http://ronw.github.com/siplca-segmentation/

This was a really interesting presentation, with great examples. Excellent work. This one should be a candidate for best paper IMHO.

ISMIR Day zero in Utrecht

Posted by Paul in events, music information retrieval on August 10, 2010

We’ve just finished Day 0 of ISMIR (the yearly conference of the International Society for Music Information Retrieval), being held in Utrecht. It is a lovely city; I’ve been enjoying walks along the many canals in the comfortably cool weather.

The zeroth day of ISMIR is the tutorial day. Ben Fields and I presented our playlisting tutorial. It was well attended, with lots of good questions at the end. The 3-hour-long presentation seemed to fly by. Here’s Ben making last-minute edits just before the presentation.

Finding a path through the Jukebox: The Playlist Tutorial

Posted by Paul in events, music information retrieval, playlist, research, The Echo Nest on August 6, 2010

Ben Fields and I have just put the finishing touches on our playlisting tutorial for ISMIR. Everything you could want to know about playlists. As one of the founders of a well known music intelligence company once said: Take the fun out of music and read Paul’s slides …

Help researchers understand earworms

Posted by Paul in Music, music information retrieval, research on July 29, 2010

Researchers at Goldsmiths, University of London, in collaboration with BBC 6 Music and the British Academy, are conducting research to find out about the music in people’s heads, sometimes called ‘musical imagery’. They want to know what songs are the most common, whether people like it or don’t, what triggers it, and if some people have music in their head all the time, etc.

To help researchers understand this phenomenon, take part in a questionnaire (and you could win £150 too). I took the survey; it took about 10 minutes. They do ask some rather personal questions that seem related to one’s tendency towards compulsive behavior (yes, I do sometimes count the stairs that I’m walking up).

It looks to be an interesting research project. More details about it are here: The Earwormery.com

SoundBite for Songbird

Posted by Paul in Music, music information retrieval, research, visualization on March 23, 2010

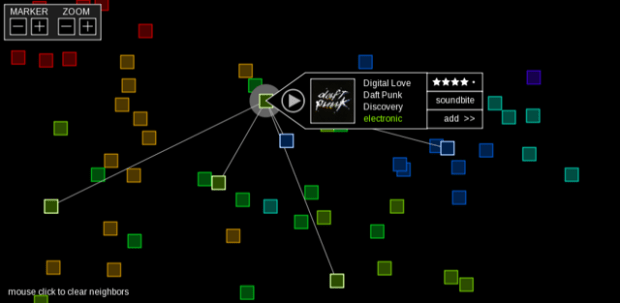

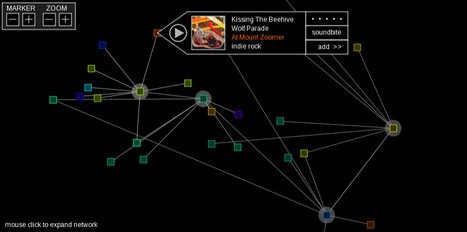

Steve Lloyd of Queen Mary University has released SoundBite for Songbird. (Update – if the link is offline and you are interested in trying SoundBite, just email soundbite@repeatingbeats.com.) SoundBite is a visual music explorer that uses music similarity to enable network-based music navigation and to create automatic “sounds like” playlists.

Here’s a video that shows SoundBite in action:

It’s a pretty neat plugin for Songbird. It’s great to see yet another project from the Music Information Retrieval community go mainstream.

How Music Information Retrieval can help you get the girl

Posted by Paul in music information retrieval, startup on March 17, 2010

Parag Chordia from Georgia Tech and his colleagues have spun out a music-tech company called Khush. Khush makes cutting-edge artificial intelligence music applications. Their first app is LaDiDa, an auto-accompaniment application. You sing a cappella into your iPhone and LaDiDa plays it back with a full musical accompaniment … something like Songsmith (but with good music).

I had a chance to chat with Parag, along with Khush CEO Prerna Gupta (she’s the dream girl in the video, btw), and Alex Rae (programmer+music geek). These folks are fired up about Khush and LaDiDa. It’s great to see another innovative company come out of the MIR world. I think they will be going places.

AdMIRe 2010 Call for Papers

Posted by Paul in Music, music information retrieval, research on January 20, 2010

The organizers for AdMIRe 2010 (The 2nd International Workshop on Advances in Music Information Research) have just issued the call for papers. Detailed info can be found on the workshop website: AdMIRe: International Workshop on Advances in Music Information Research 2010.

Poolcasting: an intelligent technique to customise music programmes for their audience

Posted by Paul in Music, music information retrieval, research on November 2, 2009

In preparation for his defense, Claudio Baccigalupo has placed his thesis online: Poolcasting: an intelligent technique to customise music programmes for their audience. It looks to be an in-depth look at playlisting.

Here’s the abstract:

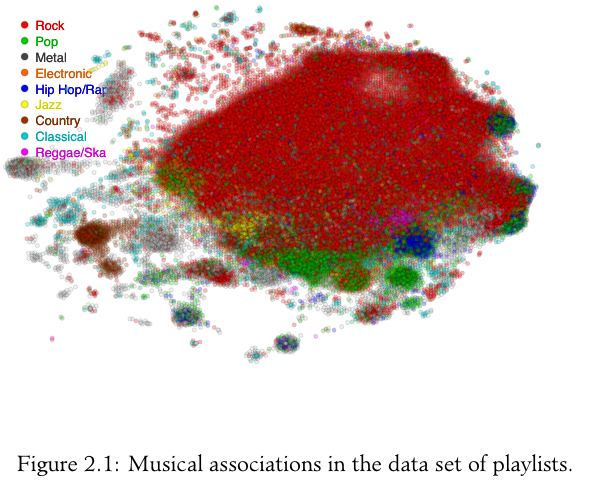

Poolcasting is an intelligent technique to customise musical sequences for groups of listeners. Poolcasting acts like a disc jockey, determining and delivering songs that satisfy its audience. Satisfying an entire audience is not an easy task, especially when members of the group have heterogeneous preferences and can join and leave the group at different times. The approach of poolcasting consists in selecting songs iteratively, in real time, favouring those members who are less satisfied by the previous songs played.

Poolcasting additionally ensures that the played sequence does not repeat the same songs or artists closely and that pairs of consecutive songs ‘flow’ well one after the other, in a musical sense. Good disc jockeys know from expertise which songs sound well in sequence; poolcasting obtains this knowledge from the analysis of playlists shared on the Web. The more two songs occur closely in playlists, the more poolcasting considers two songs as associated, in accordance with the human experiences expressed through playlists. Combining this knowledge and the music profiles of the listeners, poolcasting autonomously generates sequences that are varied, musically smooth and fairly adapted for a particular audience.

A natural application for poolcasting is automating radio programmes. Many online radios broadcast on each channel a random sequence of songs that is not affected by who is listening. Applying poolcasting can improve radio programmes, playing on each channel a varied, smooth and group-customised musical sequence. The integration of poolcasting into a Web radio has resulted in an innovative system called Poolcasting Web radio. Tens of people have connected to this online radio during one year providing first-hand evaluation of its social features. A set of experiments have been executed to evaluate how much the size of the group and its musical homogeneity affect the performance of the poolcasting technique.

I’m quite interested in this topic so it looks like my reading list is set for the week.

ISMIR Oral Session 2 – Tempo and Rhythm

Posted by Paul in ismir, Music, music information retrieval, research on October 27, 2009

Session chair: Anssi Klapuri

IMPROVING RHYTHMIC SIMILARITY COMPUTATION BY BEAT HISTOGRAM TRANSFORMATIONS

By Matthias Gruhne, Christian Dittmar, and Daniel Gaertner

Matthias described their approach to generating beat histograms, using techniques similar to those used by Burred, Gouyon, Foote and Tzanetakis. Problem: a beat histogram cannot be used directly as a feature because of its tempo dependency. Similar rhythms appear far apart in a Euclidean space because of this dependency. Challenge: reduce tempo dependence.

Solution: logarithmic transformation. See the figure:

This leads to a histogram with a tempo independent part which can be separated from the tempo dependent part. This tempo independent part can then be used in a Euclidean space to find similar rhythms.
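Here is a toy illustration of why a logarithmic axis helps. This is not the authors' implementation; the synthetic histogram, the function names and every parameter (`MAX_LAG`, `min_lag`, `bins_per_octave`) are my assumptions. On a linear lag axis a tempo change stretches the histogram, but after resampling onto a log axis the same change becomes a plain shift, which is much easier to factor out.

```python
import math

MAX_LAG = 4.0  # seconds covered by the linear-axis histogram (assumed)

def toy_beat_histogram(beat_period, n_bins=2000):
    """Synthetic beat histogram on a linear lag axis: bumps at 1x and 2x the beat period."""
    hist = []
    for i in range(n_bins):
        lag = (i + 1) * MAX_LAG / n_bins
        value = 0.0
        for multiple, weight in ((1, 1.0), (2, 0.5)):
            center = multiple * beat_period
            width = 0.03 * center  # bump width grows with the lag it sits on
            value += weight * math.exp(-0.5 * ((lag - center) / width) ** 2)
        hist.append(value)
    return hist

def to_log_axis(hist, min_lag=0.1, bins_per_octave=24):
    """Resample a linear-lag histogram onto a log-spaced lag axis (linear interpolation)."""
    n = len(hist)
    n_log = int(math.log2(MAX_LAG / min_lag) * bins_per_octave)
    out = []
    for k in range(n_log):
        lag = min_lag * 2.0 ** (k / bins_per_octave)
        pos = lag * n / MAX_LAG - 1.0  # fractional index into hist
        lo = min(max(int(math.floor(pos)), 0), n - 2)
        frac = min(max(pos - lo, 0.0), 1.0)
        out.append((1.0 - frac) * hist[lo] + frac * hist[lo + 1])
    return out

def best_shift(a, b, max_shift):
    """Shift (in log bins) that maximizes the overlap sum of a[i] * b[i + shift]."""
    def score(shift):
        return sum(a[i] * b[i + shift] for i in range(len(a)) if 0 <= i + shift < len(b))
    return max(range(-max_shift, max_shift + 1), key=score)

slow = to_log_axis(toy_beat_histogram(0.5))   # beat every 0.5 s (120 BPM)
fast = to_log_axis(toy_beat_histogram(0.25))  # same rhythm at double tempo
print(best_shift(fast, slow, max_shift=40))
```

Doubling the tempo should come out as a shift of one octave, that is `bins_per_octave` bins, while the shape of the histogram stays the same.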

Evaluation: results improved from 20% to 70%, and from 66% to 69% (needs a significance test here, I think).

USING SOURCE SEPARATION TO IMPROVE TEMPO DETECTION

By Parag Chordia and Alex Rae – presented by George Tzanetakis

Well, this is unusual: George will be presenting Parag and Alex’s work. Anssi suggests that we can use the wisdom of the crowds to answer the questions.

Motivation: Tempo detection is often unreliable for complex music.

Humans often resolve rhythms by entraining to a rhythmically regular part.

Idea: Separate music into components, some components may be more reliable.

Method:

- Source separation

- Track tempo for each source

- Decide global tempo by either:

  - Picking the one with the most regular structure

  - Looking for a common tempo across all sources/layers

Here’s the system:

PLCA (Probabilistic Latent Component Analysis) is a source separation method. Issues: the number of components needs to be specified in advance. It could merge sources, or one source could be split into multiple layers.

Autocorrelation is used for tempo detection. Regular sources will have higher peaks.
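A minimal sketch of autocorrelation-based tempo picking, to show what "higher peaks" means here. It assumes each separated source has already been reduced to an onset-strength envelope; the function names, the BPM search range and the `frame_rate` parameter are all my own choices, not details from the talk.

```python
def autocorrelation(x, max_lag):
    """Autocorrelation of a mean-removed signal for lags 0..max_lag."""
    n = len(x)
    mean = sum(x) / n
    centered = [v - mean for v in x]
    return [
        sum(centered[i] * centered[i + lag] for i in range(n - lag))
        for lag in range(max_lag + 1)
    ]

def estimate_tempo(onset_env, frame_rate, min_bpm=60.0, max_bpm=200.0):
    """Return (tempo in BPM, peak strength) for one source's onset envelope.

    The peak strength is the autocorrelation peak divided by the lag-0 value,
    so a very regular source scores close to 1.
    """
    min_lag = int(round(60.0 * frame_rate / max_bpm))
    max_lag = int(round(60.0 * frame_rate / min_bpm))
    acf = autocorrelation(onset_env, max_lag)
    best_lag = max(range(min_lag, max_lag + 1), key=lambda lag: acf[lag])
    strength = acf[best_lag] / acf[0] if acf[0] > 0 else 0.0
    return 60.0 * frame_rate / best_lag, strength

# Toy source: an onset every 50 frames at 100 frames per second (one beat every 0.5 s).
envelope = [1.0 if i % 50 == 0 else 0.0 for i in range(1000)]
bpm, strength = estimate_tempo(envelope, frame_rate=100.0)
print(bpm, strength)
```

Running this per source gives both a tempo candidate and a regularity score, which is enough to pick the source with the most regular structure.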

Another approach: treat it as a supervised machine learning problem.

Global Tempo using Clustering – merge all tempo candidates into a single vector, grouping together candidates within a 5% tolerance (as well as those at 0.5x and 2x), to give a peak histogram showing confidence for each tempo.
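The clustering idea can be sketched as a simple voting scheme. This is my reading of the slide rather than the authors' code: each candidate gets one vote from every candidate that lands within the tolerance, either directly or after halving or doubling. The function name and the example tempi are made up.

```python
def vote_global_tempo(candidates, tolerance=0.05):
    """Count supporters for each tempo candidate (in BPM).

    A candidate supports `tempo` if it, its half, or its double lies
    within `tolerance` (relative) of `tempo`.
    """
    votes = {}
    for tempo in candidates:
        count = 0
        for other in candidates:
            for factor in (0.5, 1.0, 2.0):
                if abs(other * factor - tempo) <= tolerance * tempo:
                    count += 1
                    break  # one vote per candidate at most
        votes[tempo] = count
    # Ties are broken in favour of the candidate that appears first.
    best = max(votes, key=votes.get)
    return best, votes

# Tempo candidates pooled from several separated sources (illustrative values).
pooled = [120.0, 121.0, 60.0, 240.0, 95.0, 118.0, 170.0]
best, votes = vote_global_tempo(pooled)
print(best, votes)
```

The vote counts play the role of the peak histogram: outliers such as 95 or 170 BPM only vote for themselves, while the 60/120/240 family reinforces itself.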

Evaluation

- IDM09 – http://paragchordia.com/data.html

- MIREX06 (20 mixed-genre excerpts)

Accuracy: MIREX06: 0.50, THIS: 0.60

Question: How many sources were specified to PLCA? Answer: 8. George thinks it doesn’t matter too much.

Question: Other papers show that similar techniques do not show improvement for larger datasets

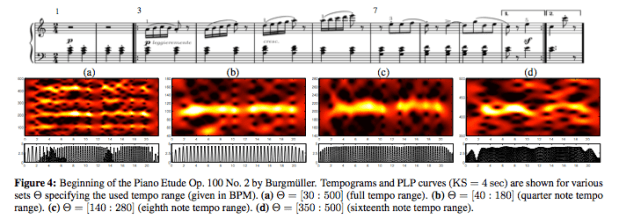

A MID-LEVEL REPRESENTATION FOR CAPTURING DOMINANT TEMPO AND PULSE INFORMATION IN MUSIC RECORDINGS

By Peter Grosche and Meinard Müller

Example – a waltz – where the downbeat is not too strong compared to beats 2 & 3. It is hard to find onsets in the energy curves. Instead, use:

- Create a spectrogram

- Log compression of the spectrogram

- Derivative

- Accumulation

This yields a novelty curve, which can be used for onset detection. Downbeats are missing. How to beat track this? Compute a tempogram – a spectrogram of the novelty curve. This yields a periodicity kernel. All kernels are combined to obtain a single kernel – rectified – this gives a predominant local pulse (PLP) curve. The PLP curve is dynamic but can be constrained to track at the bar, beat or tatum level.
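The four novelty-curve steps listed above (spectrogram, log compression, derivative, accumulation) can be sketched roughly as follows. The spectrogram is taken as given (a list of magnitude frames). The compression constant and the half-wave rectification, which keeps only energy increases, are my assumptions; they were not spelled out in my notes.

```python
import math

def novelty_curve(spectrogram, compression=1000.0):
    """Novelty curve from a magnitude spectrogram.

    spectrogram: list of frames, each a list of non-negative magnitudes.
    Returns one novelty value per frame (the first frame gets 0).
    """
    # Log compression: boosts weak components such as soft onsets.
    compressed = [
        [math.log(1.0 + compression * magnitude) for magnitude in frame]
        for frame in spectrogram
    ]
    novelty = [0.0]
    for previous, current in zip(compressed, compressed[1:]):
        # Derivative over time, keeping only increases (assumed rectification),
        # then accumulation across all frequency bins.
        novelty.append(sum(max(c - p, 0.0) for p, c in zip(previous, current)))
    return novelty

# Toy spectrogram: quiet for three frames, then two bins jump in energy.
frames = [[0.1, 0.1, 0.1]] * 3 + [[1.0, 1.0, 0.1]] * 3
curve = novelty_curve(frames)
print(curve)
```

Peaks in the returned curve mark candidate onsets; a tempogram would then be computed by taking a windowed frequency analysis of this curve.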

Issues: PLP likes to fill in the gaps – which is not always appropriate. Trouble with the Borodin String Quartet No. 2. But when tempo is tightly constrained, it works much better.

This was a very good talk. Meinard presented lots of examples including examples where the system did not work well.

Question: Realtime? Currently kernels are 4 to 6 seconds. With a latency of 4 to 6 seconds it should work in an online scenario.

Question: How different from DTW on the tempogram? Not connected to DTW in any way.

Question: How important is the hop size? Not that important since a sliding window is used.