Archive for category data

Some preliminary Playlist Survey results

I’m conducting a somewhat informal survey on playlisting to compare playlists created by an expert radio DJ with those generated by a playlisting algorithm and by a random number generator. So far, nearly 200 people have taken the survey (thanks!), and I’m already seeing some very interesting results. Here are a few tidbits (look for a more thorough analysis once the survey is complete).

People expect human DJs to make better playlists:

The survey asks people to identify the origin of each playlist (human expert, algorithm or random) and to rate it. By grouping the ratings according to the origin people *think* a playlist has, we get an idea of their attitudes toward human vs. algorithmic playlist creation.

| Predicted origin | Rating |
| --- | --- |
| Human expert | 3.4 |
| Algorithm | 2.7 |
| Random | 2.1 |

We see that people expect humans to create better playlists than algorithms and that algorithms should give better playlists than random numbers. Not a surprising result.
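Both tables in this post come from the same ratings grouped two different ways. Here is a minimal Python sketch of that grouping: the ratings are averaged once by the origin each respondent guessed and once by the playlist's true origin. The `responses` records and their field layout are hypothetical toy data, not the survey's actual schema or results.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey answers: (actual_origin, predicted_origin, rating 1-5).
# Toy data for illustration only; not the survey's schema or results.
responses = [
    ("human", "human", 4),
    ("human", "algorithm", 2),
    ("algorithm", "human", 3),
    ("algorithm", "algorithm", 3),
    ("random", "random", 2),
    ("random", "human", 4),
]

def mean_rating_by(responses, field):
    """Average rating per origin, grouping by the 'actual' or 'predicted' origin."""
    index = {"actual": 0, "predicted": 1}[field]
    groups = defaultdict(list)
    for record in responses:
        groups[record[index]].append(record[2])
    return {origin: mean(ratings) for origin, ratings in groups.items()}

by_predicted = mean_rating_by(responses, "predicted")
by_actual = mean_rating_by(responses, "actual")
```

In this toy data, playlists guessed to be human-made average about 3.67, while every actual origin averages 3. Identical ratings can therefore tell quite different stories depending on which origin you group by.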

Human DJs don’t necessarily make better playlists:

Now let’s look at how people rated playlists based on their actual origin:

| Actual origin | Rating |
| --- | --- |
| Human expert | 2.5 |
| Algorithm | 2.7 |
| Random | 2.6 |

These results are rather surprising. Algorithmic playlists are rated highest, while playlists created by the human expert are rated lowest, even lower than those created by the random number generator. There are lots of caveats: I haven’t yet run any significance tests to see whether these differences really matter, the sample is still rather small, and the survey doesn’t reproduce real-world listening conditions. Nevertheless, the results are intriguing.
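No significance tests have been run on these numbers yet. One common, assumption-light option is a permutation test. The sketch below is just one possible choice, not necessarily what the final analysis will use. It asks how often randomly relabeling the pooled ratings produces a gap in means at least as large as the observed one. The rating lists are hypothetical toy values, not survey data.

```python
import random
from statistics import mean

def permutation_test(a, b, n_iter=10_000, seed=0):
    """Two-sided permutation test for a difference in mean rating.

    Repeatedly shuffles the pooled ratings into two groups of the original sizes
    and returns the fraction of shuffles whose absolute difference in means is at
    least as large as the observed one (an estimate of the p-value)."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / n_iter

# Hypothetical 1-5 ratings for two playlist origins (toy data, not survey results).
algorithm_ratings = [3, 2, 4, 3, 2, 3, 3, 2]
human_ratings = [2, 3, 2, 3, 2, 3, 2, 3]
p_value = permutation_test(algorithm_ratings, human_ratings)
```

A large p-value means a gap like the one between 2.7 and 2.5 could easily arise by chance at the current sample size.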

I’d like to collect more survey data to flesh out these results. So if you haven’t already, please take the survey:

The Playlist Survey

Thanks!

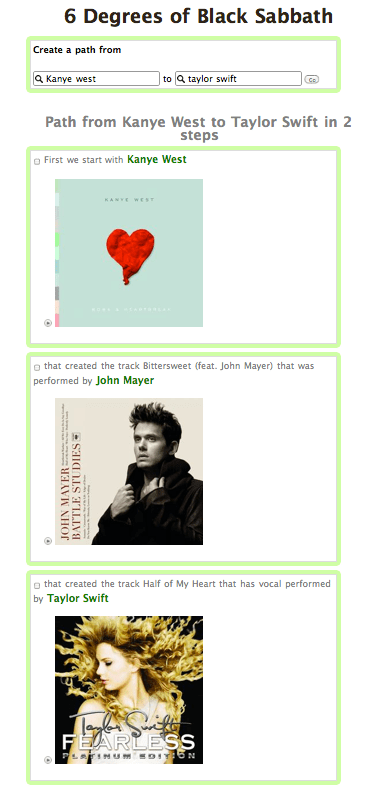

Six Degrees of Black Sabbath

My hack at last week’s Music Hack Day San Francisco was Six Degrees of Black Sabbath, a web app that lets you find connections between artists based on a wide range of artist relations. It is like The Oracle of Bacon for music.

To make the connections between artists, I rely on relation data from MusicBrainz, which has lots of deep data about how artists are connected. For instance, there are about 130,000 artist-to-artist connections, of types such as:

- member of band

- is person

- personal relationship

- parent

- sibling

- married

- involved with

- collaboration

- supporting musician

- vocal supporting musician

- instrumental supporting musician

- catalogued

From this data we know that George Harrison and Paul McCartney are related because each was a ‘member of band’ of The Beatles. In addition to the artist-to-artist data, MusicBrainz has artist-track relations (Eric Clapton played on ‘While My Guitar Gently Weeps’), artist-album relations (Brian Eno produced U2’s The Joshua Tree) and track-track relations (Girl Talk samples the Scorpions’ ‘Rock You Like a Hurricane’ in the track ‘Girl Talk Is Here’). All told, there are about 130 different types of relations that can connect two artists.

Not all of these relationships are equally important. Two artists who are members of the same band have a much stronger relationship than an artist who covers another artist’s song. To account for this, I assigned weights to the different types of relationships; this was perhaps the most tedious and subjective part of building the app.

Once I had all the relations, I created a directed graph connecting the artists through these weighted relationships. The resulting graph has 220K artists connected by over a million edges. Finding a path between a pair of artists is then a matter of finding the shortest weighted path through the graph.
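Here is a minimal sketch of that idea using networkx, which the app relies on. The relation costs and toy relations are hypothetical, because the post does not publish its weight table. The sketch assumes that a stronger relationship gets a *lower* cost, so shortest paths prefer strong ties. It also adds edges in both directions so a path can cross a relation either way, which is another assumption about how the directed graph was built.

```python
import networkx as nx

# Hypothetical costs: lower cost = stronger tie, so shortest paths prefer
# strong relationships. Not the app's actual weight table.
RELATION_COST = {
    "member of band": 1.0,
    "sibling": 1.0,
    "married": 1.5,
    "collaboration": 2.0,
    "supporting musician": 3.0,
}

def build_artist_graph(relations):
    """relations: iterable of (artist_a, artist_b, relation_type) triples."""
    G = nx.DiGraph()
    for a, b, rel in relations:
        cost = RELATION_COST[rel]
        # Add both directions, keeping only the cheapest edge when two
        # artists are linked by several relations.
        for u, v in ((a, b), (b, a)):
            if not G.has_edge(u, v) or cost < G[u][v]["weight"]:
                G.add_edge(u, v, weight=cost, relation=rel)
    return G

def connect(G, source, target):
    """Cheapest chain of relations between two artists (Dijkstra via networkx)."""
    path = nx.shortest_path(G, source, target, weight="weight")
    return [(u, G[u][v]["relation"], v) for u, v in zip(path, path[1:])]

# Toy relations for illustration, not MusicBrainz data.
toy_relations = [
    ("George Harrison", "The Beatles", "member of band"),
    ("Paul McCartney", "The Beatles", "member of band"),
    ("Eric Clapton", "George Harrison", "collaboration"),
]
G = build_artist_graph(toy_relations)
chain = connect(G, "Eric Clapton", "Paul McCartney")
```

Here `chain` walks from Clapton to Harrison, then through The Beatles to McCartney, at a total cost of 4.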

We can learn a little about music by looking at some properties of the graph. First, the average distance between two artists chosen at random is 7. Here are some of the most connected artists, along with their number of connections:

- 5372 Various Artists

- 1604 Wolfgang Amadeus Mozart

- 1275 Johann Sebastian Bach

- 905 Ludwig van Beethoven

- 696 Linda Ronstadt

- 611 Diana Ross

- 560 [traditional]

- 538 Antonio Vivaldi

- 534 Jay-Z

- 528 Georg Friedrich Händel

- 494 Giuseppe Verdi

- 491 Johannes Brahms

- 490 Bob Dylan

- 465 The Beatles

- 442 Aaron Neville

Here we see some of the anomalies in the connection data: any classical performer who performs a piece by Mozart is connected to Mozart, hence the high connection counts for classical composers. A more interesting metric is betweenness centrality: artists that occur on many shortest paths between other artists have higher betweenness than those that do not. Artists with high betweenness centrality are the connecting fibers of the music space. Here are the top connecting artists:

- 565 Pigface

- 312 Various Artists

- 135 Mick Harris

- 122 Black Sabbath

- 120 The The

- 115 Youth

- 93 Bill Laswell

- 79 J.G. Thirlwell

- 74 Painkiller

- 72 F.M. Einheit

- 71 Napalm Death

- 63 Paul McCartney

- 63 Flea

- 60 Material

- 60 Andrew Lloyd Webber

- 57 Luciano Pavarotti

- 57 Raimonds Macats

- 56 Ginger Baker

- 56 Mike Patton

- 54 Johnny Marr

- 54 Paul Raven

- 53 Brian Eno

I had never heard of Pigface before I started this project and was doubtful that they could really be such a connecting node in the world of music. A look at their Wikipedia page makes it instantly clear why they are so central: they’ve had well over a hundred members over their history. Black Sabbath, while not at the top of the list, is still extremely well connected.
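The two rankings above (raw connection counts and betweenness) and the average-distance figure can be computed with networkx roughly as follows. This is a sketch on a toy graph, not the app's code. The hop-count distance and the sampling parameters are assumptions, since the post doesn't say whether its average distance of 7 is measured in hops or weighted units.

```python
import random
import networkx as nx

def top_by_degree(G, k=15):
    """Most connected artists: number of directly linked artists."""
    return sorted(G.degree, key=lambda pair: pair[1], reverse=True)[:k]

def top_by_betweenness(G, k=20, sample=None, weight=None):
    """Artists lying on the most shortest paths between other artists.

    `sample` is passed as networkx's `k` argument, which estimates betweenness
    from that many source nodes; exact computation on a 220K-node graph is slow."""
    scores = nx.betweenness_centrality(G, k=sample, normalized=False, weight=weight, seed=0)
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)[:k]

def sampled_average_distance(G, n_pairs=1000, seed=0):
    """Average hop count between randomly chosen, mutually reachable artists."""
    rng = random.Random(seed)
    nodes = list(G)
    total = found = 0
    for _ in range(n_pairs):
        a, b = rng.sample(nodes, 2)
        try:
            total += nx.shortest_path_length(G, a, b)
            found += 1
        except nx.NetworkXNoPath:
            pass  # unreachable pair: leave it out of the average
    return total / found if found else float("nan")

# Toy graph: two tight clusters joined only through a single "hub" artist.
toy = nx.Graph()
toy.add_edges_from([
    ("a1", "a2"), ("a2", "a3"), ("a1", "a3"),
    ("b1", "b2"), ("b2", "b3"), ("b1", "b3"),
    ("a1", "hub"), ("hub", "b1"),
])
```

In the toy graph, `hub` has fewer direct links than `a1` or `b1` but the highest betweenness. It is a connector rather than a celebrity, which is the same pattern that puts Pigface above far more famous artists.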

I wrote the app in Python, relying on networkx for building the graph and finding paths. The system performs well, even surviving an appearance on the front page of Reddit. It was a fun app to write, and I enjoy seeing all the interesting pathways people have found through the artist space.

Spying on how we read

Posted by Paul in data, Music, recommendation on March 26, 2010

I’ve been reading all my books lately using Kindle for iPhone. It is a great way to read, and having a library of books in my pocket at all times means I’m never without a book. One feature of the Kindle software is called Whispersync. It keeps track of where you are in a book so that if you switch devices (from an iPhone to a Kindle, an iPad or a desktop), you can pick up exactly where you left off. Kindle also stores any bookmarks, notes, highlights or similar markings you make in the cloud so they can be shared across devices. Whispersync is a useful feature for readers, but it is also a goldmine of data for Amazon. With Whispersync data from millions of Kindle readers, Amazon can learn not just what we are reading but how we are reading. In the days of brick-and-mortar bookstores, the only thing a bookseller, author or publisher could really know about a book was how many copies it sold. Now, with Whispersync, Amazon can learn all sorts of things about how we read. With the insights they gain from this data, they will no doubt find better ways to help people find the books they like to read.

I hope Amazon aggregates its Whispersync data and gives us some Last.fm-style charts about how people are reading. Some charts I’d like to see:

- Most Abandoned – the books and/or authors that are most frequently left unfinished. What book is the most abandoned book of all time? (My money is on ‘A Brief History of Time’) A related metric – for any particular book where is it most frequently abandoned? (I’ve heard of dozens of people who never got past ‘The Council of Elrond’ chapter in LOTR).

- Pageturner – the top books ordered by average number of words read per reading session. Does the average Harry Potter fan read more of the book in one sitting than the average Twilight fan?

- Burning the midnight oil – books that keep people up late at night.

- Read Speed – which books/authors/genres have the lowest average reading rate in words per minute? Do readers of Glenn Beck read faster or slower than readers of Jon Stewart?

- Most Re-read – which books are read over and over again? A related metric – which are the most re-read passages? Is it when Frodo claims the ring, or when Bella almost gets hit by a car?

- Mystery cheats – which books have their last chapter read before the other chapters?

- Valuable reference – which books are not read in order, but are visited very frequently? (I’ve not read my Python in a Nutshell from cover to cover, but I visit it almost every day.)

- Biggest Slogs – the books that take the longest to read.

- Back to the start – Books that are most frequently re-read immediately after they are finished.

- Page shufflers – books that most often send their readers to the glossary, dictionary, map or the elaborate family tree. (xkcd offers some insights)

- Trophy Books – books that are most frequently purchased, but never actually read.

- Dishonest rater – books that are most frequently rated highly by readers who never actually finished them.

- Most efficient language – the average time to read books by language. Do native Italians read ‘Il nome della rosa’ faster than native English speakers read ‘The Name of the Rose’?

- Most attempts – which books are restarted most frequently? (It took me 4 attempts to get through Cryptonomicon, but when I did I really enjoyed it).

- A turn for the worse – which books are most frequently abandoned in the last third of the book? These are the books that go bad.

- Never at night – books that are read less in the dark than others.

- Entertainment value – the books with the lowest overall cost per hour of reading (including all re-reads).
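Several of these charts are simple aggregations over reading sessions. Whispersync's real data format isn't public, so the sketch below assumes hypothetical per-session records that hold a reader, a book, start and end word offsets, and a duration. From those it computes two of the charts: Most Abandoned (the share of readers who never got near the end) and Read Speed (words per minute).

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Session:
    """One hypothetical reading session; positions are word offsets into the book."""
    reader: str
    book: str
    start_word: int
    end_word: int
    seconds: float

def abandonment_rates(sessions, book_lengths, finished_fraction=0.95):
    """'Most Abandoned': share of each book's readers who never got near the end."""
    furthest = defaultdict(int)  # (reader, book) -> furthest word offset reached
    for s in sessions:
        key = (s.reader, s.book)
        furthest[key] = max(furthest[key], s.end_word)
    started, abandoned = defaultdict(int), defaultdict(int)
    for (_reader, book), position in furthest.items():
        started[book] += 1
        if position < finished_fraction * book_lengths[book]:
            abandoned[book] += 1
    return {book: abandoned[book] / started[book] for book in started}

def words_per_minute(sessions):
    """'Read Speed': words covered per minute of reading, pooled over all sessions."""
    words, seconds = defaultdict(int), defaultdict(float)
    for s in sessions:
        words[s.book] += max(0, s.end_word - s.start_word)
        seconds[s.book] += s.seconds
    return {book: words[book] / (seconds[book] / 60) for book in words if seconds[book] > 0}

# Toy sessions: ann finishes book A, bob gives up early, cat finishes book B.
sessions = [
    Session("ann", "A", 0, 1000, 300),
    Session("ann", "A", 1000, 10000, 2700),
    Session("bob", "A", 0, 500, 120),
    Session("cat", "B", 0, 3000, 600),
]
lengths = {"A": 10_000, "B": 3_000}
```

Using the furthest position reached keeps the sketch simple, but a Mystery cheat who jumps straight to the last chapter would count as finished. A real analysis would need to look at how much of the book was actually covered.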

Whispersync is to books as Audioscrobbler is to music: an implicit way to track what you are really paying attention to. The data from Whispersync will give us new insights into how people really read books. A chart that shows that the most abandoned author is James Patterson may steer readers away from Patterson and toward books by better authors. I’d rather not turn to the New York Times Best Seller list to decide what to read. I want to see the Amazon Most Frequently Finished book list instead.

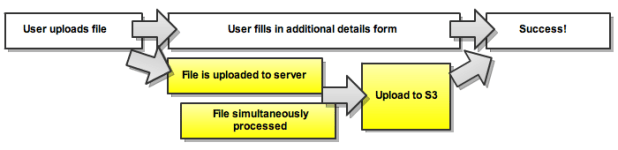

NodeJS and DonkDJ

Brian pointed me to an interesting post by RF Watson (creator of DonkDJ) about how he’s using NodeJS to solve concurrency problems in his audio-uploading web apps. Worth a read.

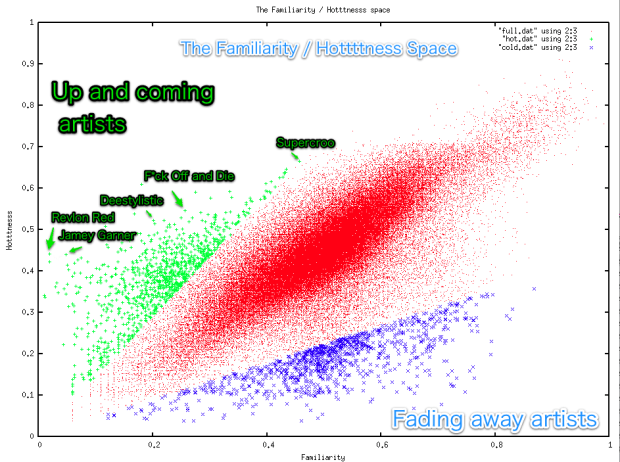

Hottt or Nottt?

Posted by Paul in code, data, Music, The Echo Nest on December 9, 2009

At the Echo Nest we have lots of data about millions of artists. It can be interesting to see what kind of patterns can be extracted from this data. Tim G suggested an experiment where we see if we can find artists that are on the verge of breaking out by looking at some of this data. I tried a simple experiment to see what we could find. I started with two pieces of data for each artist.

- Familiarity – this corresponds to how well known an artist is. You can think of familiarity as the likelihood that a person selected at random will have heard of the artist. The Beatles have a familiarity close to 1, while a band like ‘Hot Rod Shopping Cart’ has a familiarity close to zero.

- Hotttnesss – this corresponds to how much buzz the artist is getting right now. This is derived from many sources, including mentions on the web, mentions in music blogs, music reviews, play counts, etc.

I collected these two pieces of data for 130K+ artists and plotted them. The following plot shows the results: the x-axis is familiarity and the y-axis is hotttnesss. Clearly there’s a correlation between hotttnesss and familiarity; familiar artists tend to be hotter than unfamiliar artists. At the top right are Billboard chart toppers like Kanye West and Taylor Swift, while at the bottom left are artists you’ve probably never heard of, like Mystery Fluid.

We can use this plot to find up-and-coming artists as well as popular artists that are cooling off. Outliers above and to the left of the main diagonal are the rising stars (their hotttnesss exceeds their familiarity). Here we see artists like Willie the Kid, Ben*Jammin and ラディカルズ (a.k.a. Rock the Queen). Artists below the diagonal are well known but no longer hot. Here we see artists like Simon & Garfunkel, Jimmy Page and Ziggy Stardust.

Note that this is not a perfect science. For instance, it is not clear how to rate the familiarity of artist collaborations: you may know James Brown and you may know Luciano Pavarotti, but you may not be familiar with the Brown/Pavarotti collaboration. Should the familiarity of this collaboration be the average of the two artists, or should it reflect how well known the collaboration itself is? Hotttnesss can also be tricky for extremely unfamiliar artists. If a Hot Rod Shopping Cart track gets 100 plays, it could substantially increase the band’s hotttnesss (‘Hey! We are twice as popular as we were yesterday!’)

Despite these confounding factors, the familiarity/hotttnesss model still seems to be a good way to start exploring for new, potentially unsigned acts that are on the verge of breaking out. To select the artists, I did the simplest thing that could possibly work: I created a ‘break-out’ score, which is simply the ratio of hotttnesss to familiarity. Artists with high hotttnesss relative to their familiarity are getting a lot of web buzz but are still relatively unknown. I calculated this break-out score for all artists and used it to select the top 1000 artists with break-out potential, as well as the bottom 1000 artists (the fade-aways). Here’s a plot showing the two categories:

Here are 10 artists with high break-out scores that might be worth checking out:

- Ben*Jammin – German pop, 249 Last.fm listeners, with an awesome YouTube video (really, you have to watch it)

- Lord Vampyr’s Shadowsreign – 32 Last.fm listeners – I’m not sure whether they are being serious or not in this video.

- Waking Vision Trio – 429 Last.fm listeners – on YouTube

- The Bart Crow Band – alt-country – 3K Last.fm listeners – YouTube

- Urine Festival – 500 Last.fm listeners – really not for the faint of heart – YouTube

- Fictivision vs Phynn – 250 Last.fm listeners – trance – YouTube

- korablove – 1,500 Last.fm listeners – minimal, deep house – YouTube

- Deelstylistic – 1,800 Last.fm listeners – R&B – YouTube

- Luke Doucet and the White Falcon – 900 Last.fm listeners – YouTube

- i-sHiNe – 1,700 Last.fm listeners – on YouTube
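The break-out score described above (hotttnesss divided by familiarity) takes only a few lines of Python. The input layout here is hypothetical. The `min_familiarity` floor is an assumption added in this sketch and is not something the post describes. Without it, an artist whose familiarity is close to zero could get an almost unbounded score from a handful of plays.

```python
def break_out_scores(artists, min_familiarity=0.01):
    """Ratio of hotttnesss to familiarity for each artist.

    `artists` maps name -> (familiarity, hotttnesss), both assumed to lie in [0, 1].
    The familiarity floor is an assumption of this sketch: it stops artists with
    near-zero familiarity from getting an unbounded score."""
    return {name: hot / max(fam, min_familiarity) for name, (fam, hot) in artists.items()}

def rising_and_fading(artists, n=1000):
    """Top-n (break-out candidates) and bottom-n (fade-aways) by break-out score; n >= 1."""
    scores = break_out_scores(artists)
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:n], ranked[-n:]

# Hypothetical (familiarity, hotttnesss) pairs, not real Echo Nest values.
toy = {
    "well known and hot": (0.9, 0.8),
    "unknown but buzzing": (0.2, 0.6),
    "well known, cooling off": (0.7, 0.2),
}
rising, fading = rising_and_fading(toy, n=1)
```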

Spotifying over 200 Billboard charts

Posted by Paul in code, data, fun, web services on November 8, 2009

Yesterday, I Spotified the Billboard Hot 100 – making it easy to listen to the charts. This morning I went one step further and Spotified all of the Billboard Album and Singles charts.

The Spotified Billboard Charts

That’s 128 singles charts (including charts like Luxembourg Digital Songs, Hot Mainstream R&B/Hip-Hop Song and Hot Ringtones) and 83 album charts, including charts like Top Bluegrass Albums, Top Cast Albums and Top R&B Catalog Albums.

In these 211 charts you’ll find 6,482 Spotify tracks, of which 2,354 are unique (some tracks, like Miley Cyrus’s ‘The Climb’, appear on many charts).

Building the charts stretches the limits of the Billboard API (only 1,500 calls allowed per day!) as well as my patience (making about 10K calls to the Spotify API while trying not to exceed its rate limit means it takes a couple of hours to resolve all the tracks). Nevertheless, it was a fun little project, and it shows off the Spotify catalog quite well: for popular Western music, they have really good coverage.
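Working within limits like these usually means pacing calls and counting them. The sketch below is a hypothetical client-side throttle, not part of either API. It spaces calls at least `min_interval` seconds apart and refuses to go past a fixed budget. The half-second spacing is an assumed value, and only the 1,500-call daily figure comes from the post. The budget does not reset itself, so you would create a new `Throttle` each day.

```python
import time

class Throttle:
    """Space API calls at least `min_interval` seconds apart, up to a fixed budget.

    `clock` and `sleep` are injectable so the pacing can be tested without waiting."""

    def __init__(self, min_interval, budget, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.remaining = budget
        self.clock = clock
        self.sleep = sleep
        self._last_call = None

    def call(self, fn, *args, **kwargs):
        if self.remaining <= 0:
            raise RuntimeError("call budget exhausted; try again tomorrow")
        if self._last_call is not None:
            wait = self.min_interval - (self.clock() - self._last_call)
            if wait > 0:
                self.sleep(wait)
        self._last_call = self.clock()
        self.remaining -= 1
        return fn(*args, **kwargs)

# Example: a Billboard-style daily budget of 1,500 calls, half a second apart (assumed pacing).
billboard = Throttle(min_interval=0.5, budget=1500)
```

Only 2,354 of the 6,482 chart entries are unique tracks, so caching lookups (for example with `functools.lru_cache` on the function that resolves a track) also avoids spending calls on tracks that have already been resolved.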

Requests for the Billboard API: Please increase the usage limit tenfold. 1,500 calls per day is really limiting, especially when trying to debug a client library.

Requests for the Spotify API: Please, Please Please!!! – make it possible to create and modify Spotify playlists via web services.

Using Visualizations for Music Discovery

Posted by Paul in code, data, events, fun, Music, music information retrieval, research, The Echo Nest, visualization on October 22, 2009

On Monday, Justin and I will present our magnum opus, a three-hour tutorial entitled Using Visualizations for Music Discovery. In this talk we look at the various techniques that can be used to visualize music. We include a survey of the many existing visualizations of music, and we talk about techniques and algorithms for creating visualizations. My hope is that this talk will be inspirational as well as educational, and will spawn new music discovery visualizations. I’ve uploaded a PDF of our slide deck to SlideShare. It’s a big deck, filled with examples, but large as it is, the PDF isn’t the whole talk: the tutorial will include many demonstrations and videos of visualizations that just are not practical to include in a PDF. If you have the chance, be sure to check out the tutorial at ISMIR in Kobe on the 26th.

Radio Waves

Posted by Paul in data, Music, visualization on October 1, 2009

Radio Labs now has a new visualization called Radio Waves that shows the kind of music played on the various BBC stations. The visualization shows which genres, artists and release years get played, and which DJs play which music. There’s lots of info presented in an interesting way. Read all the details at the Radio Labs blog and then check it out: Radio Waves

SoundEchoCloudNest

Posted by Paul in code, data, events, Music, The Echo Nest, web services on September 28, 2009

At the recent Berlin Music Hackday, Hannes Tydén built a mashup between SoundCloud and The Echo Nest, dubbed SoundCloudEchoNest. The program uses the SoundCloud and Echo Nest APIs to automatically annotate your SoundCloud tracks with information such as when the track fades in and fades out, its key, mode, overall loudness, time signature and tempo. Each Echo Nest section is also marked. Here’s an example:

This track is annotated as follows:

- echonest:start_of_fade_out=182.34

- echonest:mode=min

- echonest:loudness=-5.521

- echonest:end_of_fade_in=0.0

- echonest:time_signature=1

- echonest:tempo=96.72

- echonest:key=F#

Additionally, 9 section boundaries are annotated.
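These annotations follow the `namespace:predicate=value` machine-tag pattern, so they are easy to read back into structured data. Here is a minimal, hypothetical parser (not Hannes's code) that groups such tags by namespace and turns numeric values into floats.

```python
def parse_machine_tags(tags):
    """Group 'namespace:predicate=value' machine tags as {namespace: {predicate: value}}.

    Values that parse as numbers become floats; everything else stays a string.
    Tags without both ':' and '=' are skipped."""
    parsed = {}
    for tag in tags:
        head, eq, raw_value = tag.partition("=")
        namespace, colon, predicate = head.partition(":")
        if not eq or not colon:
            continue  # an ordinary tag, not a machine tag
        try:
            value = float(raw_value)
        except ValueError:
            value = raw_value
        parsed.setdefault(namespace, {})[predicate] = value
    return parsed

tags = [
    "echonest:start_of_fade_out=182.34",
    "echonest:mode=min",
    "echonest:loudness=-5.521",
    "echonest:end_of_fade_in=0.0",
    "echonest:time_signature=1",
    "echonest:tempo=96.72",
    "echonest:key=F#",
]
info = parse_machine_tags(tags)["echonest"]
```

With the tags in a dictionary, filters such as "tracks between 90 and 100 BPM in F#" become simple comparisons.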

The user interface to SoundEchoCloudNest is refreshingly simple; no GUIs for Hannes:

Hannes has open sourced his code on github, so if you are a Ruby programmer and want to play around with SoundCloud and/or the Echo Nest, check out the code.

Machine tagging of content is becoming more viable. Photos on Flickr can be automatically tagged with information about the camera and exposure settings, geolocation, time of day and so on. Now, with APIs like those from SoundCloud and the Echo Nest, I think we’ll start to see similar machine tagging of music, where basic info such as tempo, key, mode and loudness can be automatically attached to the audio. This will open the doors for all sorts of tools to help us better organize our music.