Archive for category code

Using speechiness to make stand-up comedy playlists

Posted by Paul in code, data, The Echo Nest on March 20, 2013

One of the Echo Nest attributes calculated for every song is ‘speechiness’. This is an estimate of the amount of spoken word in a particular track. High values indicate that there’s a good deal of speech in the track, and low values indicate that there is very little speech. This attribute can be used to help create interesting playlists. For example, a music service like Spotify has hundreds of stand-up comedy albums in its catalog. If you wanted to use the Echo Nest API to create a playlist of these routines, you could create an artist-description playlist with a call like so:

However, this call wouldn’t generate the playlist that you want. Intermixed with stand-up routines would be comedy musical numbers by Tenacious D, The Lonely Island or “Weird Al”. That’s where the ‘speechiness’ attribute comes in. We can add a speechiness filter to our playlist call to give us spoken-word comedy tracks like so:

It is a pretty effective way to generate comedy playlists.
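The API calls themselves aren't reproduced here. The sketch below is a rough, client-side illustration of the idea only. It keeps the tracks whose speechiness is at or above a cutoff. The track objects, the `speechiness` field (assumed to be a score between 0 and 1), the `spokenWordOnly` name and the 0.66 cutoff are all illustrative assumptions. They are not the Echo Nest API's parameters or response format.

```javascript
// Sketch only: keep tracks that are mostly spoken word.
// Assumes each track object carries a `speechiness` score between 0 and 1
// (hypothetical shape, not the Echo Nest API's response format).
function spokenWordOnly(tracks, minSpeechiness = 0.66) {
  return tracks.filter((track) => track.speechiness >= minSpeechiness);
}

// Hypothetical example data.
const candidates = [
  { title: "Stand-up routine", speechiness: 0.93 },
  { title: "Comedy song", speechiness: 0.12 },
];
console.log(spokenWordOnly(candidates).map((t) => t.title).join(", ")); // Stand-up routine
```

Raising the cutoff trades recall for precision. A higher value drops more musical comedy, but it may also drop routines recorded with a lot of audience noise.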

I made a demo app called The Comedy Playlister that shows this off. It generates a Spotify playlist of comedy routines.

It does a pretty good job of finding comedy. Now I just need some way of filtering out Larry the Cable Guy. The app is online here: The Comedy Playlister. The source is on GitHub.

How the Bonhamizer works

Posted by Paul in code, fun, The Echo Nest on February 21, 2013

I spent this weekend at Music Hack Day San Francisco. Music Hack Day is an event where people who are passionate about music and technology get together to build cool, music-related stuff. This San Francisco event was the 30th Music Hack Day, with around 200 hackers in attendance. Many cool hacks were built. Check out this Projects page on Hacker League for the full list.

My weekend hack is called The Bonhamizer. It takes just about any song and re-renders it as if John Bonham was playing the drums on the track. For many tracks it works really well (and others not so much). I thought I’d give a bit more detail on how the hack works.

Data Sources

For starters, I needed some good, clean recordings of John Bonham playing the drums. Luckily, there’s a set of 23 drum outtakes from the “In Through the Out Door” recording sessions. For instance, there’s this awesome recording of the drums for “All My Love”. Not only do you get the sound of Bonham pounding the crap out of the drums, you can hear him grunting and groaning. Super stuff. This particular recording would become the ‘Hammer of the Gods’ in the Bonhamizer.

From this set of audio, I picked four really solid drum patterns for use in the Bonhamizer.

Of course, you can’t just play the drum recording and an arbitrary song recording at the same time and expect the drums to align with the music. To make the drums play well with any song, a number of things need to be done: (1) align the tempos of the recordings, (2) align the beats of the recordings, and (3) play the drums at the right times to enhance their impact.

To guide this process, I used the Echo Nest Analyzer to analyze both the song being Bonhamized and the Bonham beats. The analyzer provides a detailed map of the beat structure and timing: where each beat starts and stops, the loudness of the audio at each beat, and a confidence level for each beat. The confidence can be interpreted as how likely it is that the logical beat corresponds to a real physical beat in the song.

Aligning the tempos (We don’t need no stinkin’ time stretcher)

Perhaps the biggest challenge in implementing the Bonhamizer is aligning the tempo of the song with the Bonham beats. The ‘Hammer of the Gods’ Bonham beat runs at 93 beats per minute. If fun.’s Some Nights is at 107 beats per minute, we have to either speed Bonham up or slow fun. down to get the tempos to match.

Since the application is written in JavaScript and intended to run entirely in the browser, I don’t have access to server-side time-stretching libraries like SoundTouch or Dirac. Any time shifting needs to be done in JavaScript. Writing a real-time (or even an ahead-of-time) time-shifter in JavaScript is beyond what I could do in a 24-hour coding session. But with the help of the Echo Nest beat data and the Web Audio API, there’s a really hacky (but neat) way to adjust song tempos without too many audio artifacts.

The Echo Nest analysis data includes complete beat timing info: I know where every beat starts and how long it lasts. With the Web Audio API, I can play any snippet of audio from an MP3 with millisecond timing accuracy. Using this data, I can speed a song up by playing it beat by beat and adjusting the start time and duration of each beat to match the desired tempo. If I want to speed up the 93 BPM Bonham beats by a dozen beats per minute, I just play each beat about 75 ms shorter than it should be. A beat lasts about 645 ms at 93 BPM and about 571 ms at 105 BPM. Likewise, if I need to slow a song down, I can just let each beat play for longer than it should before the next beat plays. Yes, this can lead to horrible audio artifacts, but many of the sins of this hacky tempo adjustment will be covered up by the big fat drum hits landing on top of them.
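Here's a minimal sketch of the beat-by-beat idea. It assumes beat objects with `start` and `duration` in seconds, like the analysis data described above. The function name `scheduleBeats` and the `{ when, offset, duration }` entries are hypothetical. Each entry is shaped to be passed to the Web Audio API's `AudioBufferSourceNode.start(when, offset, duration)`. The function only computes the schedule, so it runs outside a browser.

```javascript
// Sketch: change tempo without time-stretching by re-timing whole beats.
// Each beat is placed on the new tempo grid and played for exactly one
// new-tempo beat length. With ratio > 1 every beat is cut short; with
// ratio < 1 each beat runs on into the audio that follows it.
// `beats` holds { start, duration } in seconds; `ratio` is newBPM / oldBPM.
function scheduleBeats(beats, ratio, startTime = 0) {
  const schedule = [];
  let when = startTime;
  for (const beat of beats) {
    const slot = beat.duration / ratio; // length of this beat at the new tempo
    schedule.push({ when, offset: beat.start, duration: slot });
    when += slot;
  }
  return schedule;
}

// Example: four Bonham beats at 93 BPM pushed up to fun.'s 107 BPM.
const beatLen = 60 / 93; // about 0.645 s per beat at 93 BPM
const bonhamBeats = [0, 1, 2, 3].map((i) => ({ start: i * beatLen, duration: beatLen }));
const plan = scheduleBeats(bonhamBeats, 107 / 93);

// In a browser, each entry could drive one source node (ctx is an
// AudioContext and buffer a decoded AudioBuffer, both assumed here):
//   for (const p of plan) {
//     const src = ctx.createBufferSource();
//     src.buffer = buffer;
//     src.connect(ctx.destination);
//     src.start(ctx.currentTime + p.when, p.offset, p.duration);
//   }
```

The same duration rule is used for speeding up and slowing down. When slowing down, that rule makes each beat bleed into the next one, which is the flam effect the post describes.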

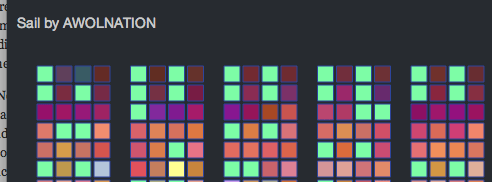

I first used this hacky tempo adjustment in the Girl Talk in a Box hack. Here are a few examples of songs with large tempo adjustments. In this example, AWOLNation’s Sail is sped up by 25%, and you can hear the shortened beats.

And in this example, with Sail slowed down by 25%, you can hear double strikes on each beat.

I use this hacky tempo adjustment to align the tempo of the song with the Bonham beats. I imagine that a band with John Bonham on the drums would be driven to play a little faster, so whenever I can, I speed the song up a little.

Speeding up the drum beats by playing each drum beat a bit shorter than it is supposed to play gives pretty good audio results. However, using the technique to slow beats down can get a bit touchy. If a beat is played for too long, we will leak into the next beat. Audibly this sounds like a drummer’s flam. For the Bonhamizer this weakness is really an advantage. Here’s an example track with the Bonham beats slowed down enough so that you can easily hear the flam.

When to play (and not play) the drums

If the Bonhamizer overlaid every track with Bonham drums from start to finish, it would not be a very good-sounding app. Songs have loud parts and soft parts, breaks and drops, and dramatic tempo changes, so the Bonhamizer has to adapt to the song. It is much more dramatic for the drums to hold off during the quiet a cappella solos and then land like the Hammer of the Gods when the full band comes in, as in this fun. track.

There are a couple of pieces of data from the Echo Nest analysis that I can use to help decide when the drums should play. First, there’s detailed loudness info. For each beat in the song, I retrieve information on how loud the beat is. I can use this info to find the loud and quiet parts of the song and react accordingly. The second piece of information is the beat confidence. The Echo Nest analyzer tags each beat with a confidence level, which indicates how sure the analyzer is that a beat really happened there. If a song has a very strong and steady beat, most of the beat confidences will be high. If a song frequently changes tempo or has weak beat onsets, many of the beat confidence levels will be low, especially during the tempo transitions. We can use both the loudness and the confidence levels to help us decide when the drums should play.

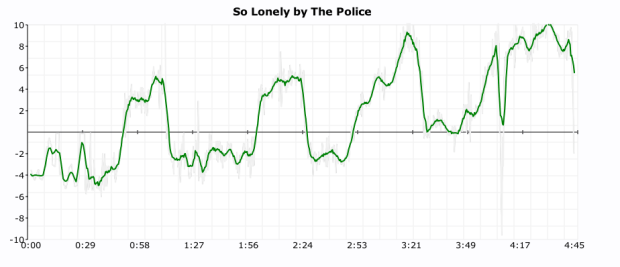

For example, here’s a plot that shows the beat confidence level for the first few hundred beats of Some Nights.

The red curve shows the confidence level of the beats. You can see that the confidence ebbs and flows in the song. Likewise we can look at the normalized loudness levels at the beat level, as shown by the green curve in the plot below:

We want to play the drums during the louder, high-confidence sections of the song, and not play them otherwise. However, if we simply follow the loudness and confidence curves, the drums will stutter on and off, which sounds very unnatural. Instead, we need to filter these levels so that the drums start and stop more naturally. The filter has to respond quickly to changes: if the song suddenly gets loud, we’d like the drums to come in right away. It also has to reject spurious changes: if the song is loud and confident for only a few beats, the drums should hold back. To implement this, I used a simple forward-looking run-length filter. The filter looks for state changes in the confidence and loudness levels. When it sees one, it checks whether the new state looks stable for the near future. If so, the new state is accepted; otherwise it is rejected. The code is simple:


```javascript
// Forward-looking run-length filter: decides, two beats at a time, whether
// the drums should play. A change of state is accepted only if the new
// state holds for more than `lookaheadMatch` of the next `lookAhead` beats.
function runLength(quanta, confidenceThreshold, loudnessThreshold, lookAhead, lookaheadMatch) {
    var lastState = false;
    var curState = false;

    // Start with the drums off everywhere.
    for (var i = 0; i < quanta.length; i++) {
        quanta[i].needsDrums = false;
    }

    // Walk the beats in pairs so the drums never switch for a single beat.
    for (var i = 0; i < quanta.length - 1; i += 2) {
        var newState = getState(quanta[i], confidenceThreshold, loudnessThreshold);
        if (newState != lastState) {
            // Look ahead: count how many upcoming beats agree with the new state.
            var matchCount = 0;
            for (var j = i + 1; j < quanta.length && j <= i + lookAhead; j++) {
                var nstate = getState(quanta[j], confidenceThreshold, loudnessThreshold);
                if (nstate == newState) {
                    matchCount++;
                }
            }
            // Accept the change only if it looks stable; otherwise keep the old state.
            curState = matchCount > lookaheadMatch ? newState : lastState;
        } else {
            curState = newState;
        }
        quanta[i].needsDrums = curState;
        quanta[i + 1].needsDrums = curState;
        lastState = curState;
    }
}

// A beat "wants" drums if the analyzer is confident it is a real beat
// and the segment overlapping it (q.oseg) is loud enough.
function getState(q, confidenceThreshold, loudnessThreshold) {
    var conf = q.confidence;
    var volume = q.oseg.loudness_max;
    return conf >= confidenceThreshold && volume > loudnessThreshold;
}
```

When the filter is applied, we get nice drum transitions. They start when the music gets louder and the tempo is regular, and they stop when it’s soft or the tempo is changing or irregular:

The plot above shows the confidence and loudness levels for fun.’s Some Nights. The blue curve shows when the drums play. Here’s a detailed view of the first 200 beats:

You can see how transient changes in loudness and confidence are ignored, while longer term changes trigger state changes in the drums.

Note that some songs are always too soft, or most of their beats are not confident enough to warrant adding drums. If the filter rejects 80% of the song, then we tell the user that ‘Bonzo doesn’t really want to play this song’.
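Once the filter has run, the 80% rule amounts to a short check. The sketch below assumes each beat object has the `needsDrums` flag set by `runLength` in the code above. The function name `bonzoWantsToPlay` is made up for illustration, and the default limit simply mirrors the 80% figure in the text.

```javascript
// Decide whether to Bonhamize at all, assuming every beat already carries
// the `needsDrums` flag from the run-length filter (hypothetical helper).
function bonzoWantsToPlay(quanta, maxRejectedFraction = 0.8) {
  if (quanta.length === 0) return false;
  const rejected = quanta.filter((q) => !q.needsDrums).length;
  return rejected / quanta.length < maxRejectedFraction;
}

// Example: a 10-beat song where the filter only kept drums on one beat.
const quiet = Array.from({ length: 10 }, (_, i) => ({ needsDrums: i === 0 }));
console.log(bonzoWantsToPlay(quiet)); // false
```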

Aligning the song and the beats

At this point, we have tempo-matched the song and the drums and figured out when to play the drums and when not to. There are just a few things left to do. First, we need to make sure that the song and drum beats line up within each bar, so that the song and the drums are always on the same beat of the bar (beat 1, beat 2, beat 3, beat 4, beat 1 …). This is easy to do because the Echo Nest analysis provides bar info along with the beat info. We find the bar position of the song's starting beat and shift the drum beats so that their bar positions line up with the song's.
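Here's a minimal sketch of the bar alignment. It assumes beat and bar objects with a `start` time in seconds, like the beat and bar lists in an Echo Nest analysis, with bars sorted by start time. Both helper names and the 1 ms tolerance are hypothetical. This is an illustration of the idea, not the Bonhamizer's own code.

```javascript
// Position (0 = downbeat) of beat `i` within its bar, from beat and bar
// start times in seconds (bars sorted by start). Returns -1 for pickup
// beats that come before the first bar.
function barPosition(beats, bars, i, tolerance = 0.001) {
  const t = beats[i].start;
  // Find the last bar that starts at or before this beat.
  let bar = -1;
  for (let b = 0; b < bars.length && bars[b].start <= t + tolerance; b++) {
    bar = b;
  }
  if (bar < 0) return -1;
  // Count the earlier beats that fall inside the same bar.
  let pos = 0;
  for (let k = i - 1; k >= 0 && beats[k].start >= bars[bar].start - tolerance; k--) {
    pos++;
  }
  return pos;
}

// Index of the drum beat to start with so that the drums and the song sit
// on the same beat of the bar. `drumStartPos` is the bar position of drum beat 0.
function drumStartIndex(songStartPos, drumStartPos, beatsPerBar = 4) {
  return (((songStartPos - drumStartPos) % beatsPerBar) + beatsPerBar) % beatsPerBar;
}

// Example: beats every 0.5 s and bars every 2 s (4/4). If playback starts
// at the sixth beat (index 5), that beat is position 1 in its bar, so the
// drums should start on their second beat.
const beats = [0, 0.5, 1, 1.5, 2, 2.5].map((start) => ({ start }));
const bars = [0, 2].map((start) => ({ start }));
const songPos = barPosition(beats, bars, 5); // 1
const firstDrum = drumStartIndex(songPos, 0); // 1
```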

A seemingly more challenging issue is dealing with mismatched time signatures. All of the Bonham beats are in 4/4 time, but not all songs are in 4/4. Luckily, the Echo Nest analysis can tell us the time signature of the song. I use a simple but fairly effective strategy: if a song is in 3/4 time, I just drop one of the beats in the Bonham beat pattern to turn the drumming into a matching 3/4 pattern. It is 4 lines of code:

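```javascript
// For 3/4 songs, skip beat 1 (the second beat) of the 4-beat drum pattern,
// so each drum bar plays pattern beats 0, 2 and 3.
if (timeSignature == 3) {
    if (drumInfo.cur % 4 == 1) {
        drumInfo.cur += 1;
    }
}
```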

Here’s an example with Norwegian Wood (which is in 3/4 time). This approach can be used for more exotic time signatures (such as 5/4 or 7/4) as well. For songs in 5/4, an extra Bonham beat can be inserted into each measure. (Note that I never got around to implementing this bit, so songs that are not in 4/4 or 3/4 will not sound very good).
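The 5/4 extension was never implemented in the Bonhamizer. As a purely hypothetical sketch of how the mapping could generalize, the function below maps a beat's position in a song bar onto a beat of the 4-beat Bonham pattern. For 3/4 it skips pattern beat 1, which matches the 3/4 code above. For longer bars it inserts the extra beats by replaying pattern beats 1 to 3. The replay rule and the `drumPatternIndex` name are assumptions, and this replay rule is only one of many reasonable choices.

```javascript
// Hypothetical mapping (not Bonhamizer code) from a beat's position in a
// song bar to a beat of the 4-beat Bonham pattern.
function drumPatternIndex(pos, beatsPerBar) {
  if (beatsPerBar === 3) {
    // Skip pattern beat 1, the same effect as the 3/4 snippet: 0, 2, 3.
    return pos === 0 ? 0 : pos + 1;
  }
  if (pos < 4) {
    return pos; // 4/4, or the first four beats of a longer bar
  }
  // Extra beats in 5/4, 7/4, ... replay pattern beats 1, 2, 3 (an assumption).
  return 1 + ((pos - 4) % 3);
}

// One bar of 5/4 mapped onto the pattern.
const fiveFour = [0, 1, 2, 3, 4].map((pos) => drumPatternIndex(pos, 5)); // [0, 1, 2, 3, 1]
```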

Playing it all

Once we have aligned the tempos, filtered the drums and aligned the beats, it is time to play the song. This is all done with the Web Audio API, which lets us play the song beat by beat with fine-grained control of the timing.

Issues + To DOs

There are certainly a number of issues with the Bonhamizer that I may address some day.

I’d like to be able to pick the best Bonham beat pattern automatically instead of relying on the user to pick the beat. It may be interesting to try to vary the beat patterns within a song too, to make things a bit more interesting. The overall loudness of the songs vs. the drums could be dynamically matched. Right now, the songs and drums play at their natural volume. Most times that’s fine, but sometimes one will overwhelm the other. As noted above, I still need to make it work with more exotic time signatures too.
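For the loudness to-do, one hypothetical approach is to convert the loudness difference in decibels into a linear gain and apply it to whichever source is louder, for example through a Web Audio `GainNode`. The sketch assumes an overall loudness figure in dB is available for both the song and the drum recording. The function name `gainToMatch` is made up.

```javascript
// Linear gain that moves audio measured at `sourceDb` to `targetDb`
// (a level difference in dB is 20 * log10 of the amplitude ratio).
function gainToMatch(sourceDb, targetDb) {
  return Math.pow(10, (targetDb - sourceDb) / 20);
}

// Example: drums at -6 dB and a song at -12 dB, so turn the drums down 6 dB.
const drumGain = gainToMatch(-6, -12); // about 0.5
// In a browser this could be applied as: gainNode.gain.value = drumGain;
```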

Reception

The Bonhamizer has been out on the web for about 3 days, but in that time about 100K songs have been Bonhamized. There have been positive articles in The Verge, Consequence of Sound, MetalSucks, Bad Ass Digest and Drummer Zone. It is big in Japan. Despite all its flaws, people seem to like the Bonhamizer. That makes me happy. One interesting bit of feedback I received was from Jonathan Foote (father of MIR). He pointed me to Frank Zappa and his experiments with xenochrony. The mind boggles. My biggest regret is the name. I really should have called it the autobonhamator. But it is too late now … sigh.

All the code for the Bonhamizer is on GitHub. Feel free to explore it, fork it and make your own Peartifier or Moonimator or Palmerizer.

MIDEM Hack Day recap

What better way to spend a weekend on the French Riviera than in a conference room filled with food, soda, coffee and fellow coders and designers hacking on music! That’s what 26 other hackers and I did at the MIDEM Music Hack Day. Hackers came from all over the world to take part in this 3rd annual hacking event and show what kind of creative output can flow from those who are passionate about music and technology.

Unlike a typical Music Hack Day, this hack day has very limited space, so only those with hardcore hacking cred were invited. Hackers came from a wide range of companies and organizations, including SoundCloud, Songkick, Gracenote, Musecore, We Make Awesome Sh.t, 7Digital, Reactify, Seevl, Webdoc, REEA, MTG-UPF, Mint Digital and The Echo Nest. Several of the hackers were independent. The event was organized by Martyn Davies of Hacks & Bants with help from the MIDEM organizers.

The hacker space provided for us is at the top of the Palais – which is the heart of MIDEM and Cannes. The hacking space has a terrace that overlooks the city, giving an excellent place to unwind and meditate while trying to figure out how to make something work.

The hack day started off with a presentation by Martyn explaining to the general MIDEM crowd what a Music Hack Day is. Afterward, members of the audience (the Emilys and Amelies) offered some hacking ideas in case any of the hyper-creative hackers attending couldn't come up with their own.

After that, hacking started in force. Coders and designers paired up, hacking designs were sketched and github repositories were pulled and pushed.

The MIDEM Hack Day is longer than your usual Music Hack Day. Instead of the usual 24 hours, hackers have 45 hours to create their stuff. The extra time really makes a difference (especially if you hack like you only have 24 hours).

We had a few visitors during the course of the weekend. Perhaps the most notable was Robert Scoble. We gave him a few demos. My impression (based upon 3 minutes of interaction, so it is quite solid) is that Robert doesn’t care too much about music. While I was giving him a demo of a very early version of Girl Talk in a Box, Robert reached out and hit the volume-down key on my computer a few times. The effrontery of it all! A number of developers gave Robert demos of their in-progress hacks, including Ben Fields, who, at the time, didn’t know he was talking to someone who was Internet-famous.

As day turned to evening, the view from our terrace got more exciting. The NRJ Awards show takes place in the Palais and we had an awesome view of the red carpet. For 5 hours, the sounds of screaming teenagers lining the red carpet added to our hacking soundtrack. Carly Rae, Taylor Swift, One Direction and the great one (Psy) all came down the red carpet below us, adding excitement (and quite a bit of distraction) to the hack.

[youtube http://www.youtube.com/watch?v=OUkLAsphZDM]

Yes, Psy did the horsey dance for us. Life is complete.

Finally, after 45 hours of hacking, we were ready to give our demos to the MIDEM audience.

There were 18 hacks created during the weekend. Check out the full list of hacks. Some of my favorites were:

- VidSwapper by Ben Fields – swaps the audio from one video into another, synchronizing with video hit points along the way.

- RockStar by Pierre-loic Doulcet – RockStar lets you direct a rock star band using gestures.

- Miri by Aaron Randal – Miri is a personal assistant, controlled by voice, that specialises in answering music-related questions.

- Ephemeral Playback by Alastair Porter – Ephemeral Playback takes the idea of slow music and slows it down even further. Only one song is active at a time. After you have listened to it you must share it to another user via twitter. Once you have shared it you can no longer listen to it.

- Music Collective by the Reactify team – A collaborative music game focussing on the phenomenon of how many people, when working together, form a collective ‘hive mind’.

- Leap Mix by Adam Howard – Control audio tracks with your hands.

It was fun demoing my own hack, Girl Talk in a Box. It is not every day that a 50-something guy gets to pretend he’s Skrillex in front of a room full of music industry insiders.

All in all, it was a great event. Thanks to Martyn and MIDEM for making us hackers feel welcome at this event. MIDEM is an interesting place, where lots of music business happens. It is rather interesting for us hacker-types to see how this other world lives. No doubt, thanks to MIDEM Music Hack Day synergies were leveraged, silos were toppled, and ARPUs were maximized. Looking forward to next year!

Girl Talk in a Box

Here’s my music hack from Midem Music Hack Day: Girl Talk in a Box. It continues the theme of apps like Bohemian Rhapsichord and Bangarang Boomerang. It’s an app that lets you play with a song in your browser. You can speed it up and slow it down, you can skip beats, you can play it backwards, beat by beat. You can make it swing. You can make breaks and drops. It’s a lot of fun. With Girl Talk in a Box, you can play with any song you upload, or you can select songs from the Gallery.

My favorite song to play with today is AWOLNATION’s Sail. Have a go. There’s a whole bunch of keyboard controls (that I dedicate to @eelstretching). When you are done with that you can play with the code.

The Stockholm Python User Group

Posted by Paul in code, data, events, The Echo Nest on January 25, 2013

In a lucky coincidence, I happened to be in Stockholm yesterday, which allowed me to give a short talk at the Stockholm Python User Group. About 80 Pythonistas gathered at Campanja to hear talks about machine learning and Python. The first two talks were deep dives into particular aspects of machine learning and Python. My talk was quite a bit lighter. I described the Million Song Data Set and suggested that it would be a good source of data for anyone looking for machine learning research data. I then went on to show half a dozen or so demos that were (or could be) built on top of the Million Song Data Set. A few folks at the event asked for links, so here you go:

Core Data: Echo Nest analysis for a million songs

Complementary Data

- Second Hand Songs – 20K cover songs

- MusixMatch – 237K bag-of-words lyric sets

- Last.fm tags – song-level tags for 500K tracks, plus 57 million similar-track pairs

- Echo Nest Taste Profile subset – 1M users, 48M user/song/play count triples

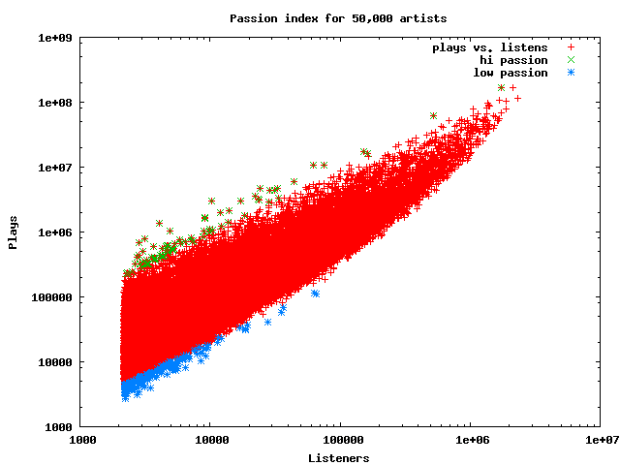

Data Mining Listening Data: The Passion Index

Fun with Artist Similarity Graphs: Boil the Frog

Post about In Search of the Click Track and a web app for exploring click tracks

Turning music into silly putty – Echo Nest Remix

[youtube http://www.youtube.com/watch?v=2oofdoS1lDg]

Interactive Music

I really enjoyed giving the talk. The audience was really into the topic and remained engaged throughout. Afterwards, I had lots of stimulating discussions about the music tech world. The Stockholm Pythonistas were a very welcoming bunch. Thanks for letting me talk. Here’s a picture I took at the very end of the talk:

Joco vs. Glee

With all the controversy surrounding Glee’s ripoff of Jonathan Coulton’s Baby Got Back, I thought I would make a remix that combines the two versions. The remix alternates between the two songs, beat by beat.

[audio http://static.echonest.com.s3.amazonaws.com/audio/combo.mp3]

At first I thought I had a bug and only one of the two songs was making it into the output, but nope, they are both there. To prove it, I made another version that alternates the same beat between the two songs, sort of a call and response. You can hear the subtle differences, and yes, they are very subtle.

[audio http://static.echonest.com.s3.amazonaws.com/audio/combo-t1.mp3]

The audio speaks for itself.
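Both remixes boil down to reordering beats. The sketch below is a hypothetical illustration of the two orderings, not the code in the gist. It assumes both songs have already been cut into beat lists. String labels stand in for the audio snippets, and the function name and mode strings are made up.

```javascript
// Build a beat order from two songs' beat lists.
// "alternate":         A0, B1, A2, B3, ... (every other beat from the other song)
// "call-and-response": A0, B0, A1, B1, ... (each beat heard from both songs)
function interleaveBeats(beatsA, beatsB, mode = "alternate") {
  const n = Math.min(beatsA.length, beatsB.length);
  const out = [];
  for (let i = 0; i < n; i++) {
    if (mode === "alternate") {
      out.push(i % 2 === 0 ? beatsA[i] : beatsB[i]);
    } else {
      out.push(beatsA[i], beatsB[i]);
    }
  }
  return out;
}

// Example with beat labels standing in for audio snippets.
const joco = ["J0", "J1", "J2", "J3"];
const glee = ["G0", "G1", "G2", "G3"];
console.log(interleaveBeats(joco, glee).join(" ")); // J0 G1 J2 G3
console.log(interleaveBeats(joco, glee, "call-and-response").join(" ")); // J0 G0 J1 G1 J2 G2 J3 G3
```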

Here’s the code.

[gist https://gist.github.com/4632416]

Going Undercover

My Music Hack Day Stockholm hack is ‘Going Undercover‘. This hack uses the extensive cover song data from SecondHandSongs to construct paths between artists by following chains of cover songs. Type in the name of a couple of your favorite artists and Going Undercover will try to find a chain of cover songs that connects the two artists. The resulting playlist will likely contain familiar songs played by artists that you never heard of before. Here’s a Going Undercover playlist from Carly Rae Jepsen to Johnny Cash:

For this hack I stole a lot of code from my recent Boil the Frog hack, and it's a good thing I could, because otherwise I would never have finished the hack in time. I spent many hours reconciling the Second Hand Songs data with The Echo Nest and Rdio data. Second Hand Songs is not part of Rosetta Stone, so I had to write lots of code to line all the IDs up. Even with the Boil the Frog code, I had a very late night getting all the pieces working. And of course, the bug I spent 2 hours banging my head on at 3AM took 5 minutes to fix after a bit of sleep.
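Finding a chain of cover songs can be framed as a shortest-path search over a graph. The nodes are artists, and each artist is linked to the artists who covered one of their songs or whose songs they covered. The sketch below uses a plain breadth-first search, which finds a chain with the fewest hops, over a hypothetical adjacency map. It ignores the ID reconciliation described above and isn't the hack's own code. The artist names are placeholders, not real cover-song data.

```javascript
// Breadth-first search for the shortest chain of artists linked by covers.
// `covers` is a hypothetical Map from artist name to an array of artists
// connected to it by at least one cover song (links treated as undirected).
function coverChain(covers, from, to) {
  const previous = new Map([[from, null]]);
  const queue = [from];
  while (queue.length > 0) {
    const artist = queue.shift();
    if (artist === to) {
      // Walk back through `previous` to rebuild the chain.
      const path = [];
      for (let a = to; a !== null; a = previous.get(a)) path.unshift(a);
      return path;
    }
    for (const next of covers.get(artist) || []) {
      if (!previous.has(next)) {
        previous.set(next, artist);
        queue.push(next);
      }
    }
  }
  return null; // no chain of covers connects the two artists
}

// Toy graph with placeholder artists.
const covers = new Map([
  ["Artist A", ["Artist B"]],
  ["Artist B", ["Artist A", "Artist C"]],
  ["Artist C", ["Artist B"]],
]);
console.log(coverChain(covers, "Artist A", "Artist C").join(" -> ")); // Artist A -> Artist B -> Artist C
```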

I am pretty pleased with the results of the hack. It is fun to build a path between a couple of artists and listen to a really interesting mix of music. Cover songs are great for music discovery, they give you something familiar to hold on to while listening to a new artist.

Boil The Frog

Posted by Paul in code, fun, Music, The Echo Nest on January 2, 2013

You know the old story – if you put a frog in a pot of cold water and gradually heat the pot up, the frog won’t notice and will happily sit in the pot until the water boils and the frog is turned into frog soup. This story is at the core of my winter break programming project called Boil the Frog. Boil the Frog will take you from one music style to another gradually enough so that you may not notice the changes in music style. Just like the proverbial frog sitting in a pot of boiling water, with a Boil the Frog playlist, the Justin Bieber fan may find themselves listening to some extreme brutal death metal such as Cannibal Corpse or Deicide (the musical equivalent to sitting in a pot of boiling water).

To use Boil the Frog, type in the names of any two artists and you’ll be given a playlist that connects them. Click on the first artist to start listening to the playlist. If you don’t like the route taken to connect the two artists, you can make a new route by bypassing an offending artist. The app uses Rdio to play the music. If you are an Rdio subscriber, you’ll hear full tracks; if not, you’ll hear a 30-second sample of each track.

You can create some fun playlists with this app such as:

- Miley Cyrus to Miles Davis

- Justin Bieber to Jimi Hendrix

- Patti Smith to the Smiths

- Elvis to Elvis

- The Carter Family to Rammstein

- Kanye West to Taylor Swift

- Cage the Elephant to John Cage

- Ryan Adams to Bryan Adams

- Righteous Brothers to Steven Wright

How does it work? To create this app, I use The Echo Nest artist similarity info to build an artist similarity graph of about 100,000 of the most popular artists. Each artist in the graph is connected to its most similar neighbors according to the Echo Nest artist similarity algorithm.

To create a new playlist between two artists, the graph is used to find a path that connects the two artists. The path isn’t necessarily the shortest path through the graph. Instead, priority is given to paths that travel through artists of similar popularity. If you start and end with popular artists, you are more likely to find a path that takes you through other popular artists, and if you start with a long-tail artist, you will likely find a path through other long-tail artists. Without this popularity bias, many routes between popular artists would venture into back alleys that no music fan should dare to tread.
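One way to express that popularity bias is a weighted shortest-path search in which a hop between two artists costs more the further apart their popularity is. The sketch below is Dijkstra's algorithm with an edge cost of `1 + bias * |popularity gap|`. The cost formula, the `bias` parameter, the graph shape and the placeholder artists are all assumptions for illustration. This is not the app's actual weighting.

```javascript
// Sketch: Dijkstra's algorithm over an artist-similarity graph where every
// hop costs 1 plus a penalty proportional to the popularity gap between the
// two artists, so cheap routes stay among artists of similar popularity.
// `graph`: Map artist -> array of similar artists (assumed symmetric).
// `popularity`: Map artist -> score between 0 and 1.
function frogPath(graph, popularity, from, to, bias = 10) {
  const dist = new Map([[from, 0]]);
  const previous = new Map();
  const done = new Set();
  for (;;) {
    // Take the closest unfinished artist (a linear scan keeps the sketch short).
    let current = null;
    for (const [artist, d] of dist) {
      if (!done.has(artist) && (current === null || d < dist.get(current))) {
        current = artist;
      }
    }
    if (current === null) return null; // `to` cannot be reached
    if (current === to) break;
    done.add(current);
    for (const next of graph.get(current) || []) {
      const gap = Math.abs(popularity.get(current) - popularity.get(next));
      const candidate = dist.get(current) + 1 + bias * gap;
      if (!dist.has(next) || candidate < dist.get(next)) {
        dist.set(next, candidate);
        previous.set(next, current);
      }
    }
  }
  // Walk back from the destination to rebuild the route.
  const path = [to];
  while (path[0] !== from) path.unshift(previous.get(path[0]));
  return path;
}

// Toy graph with placeholder names: a short route through an obscure artist
// and a longer route that stays among popular ones.
const graph = new Map([
  ["PopA", ["Obscure", "PopB"]],
  ["Obscure", ["PopA", "PopD"]],
  ["PopB", ["PopA", "PopC"]],
  ["PopC", ["PopB", "PopD"]],
  ["PopD", ["Obscure", "PopC"]],
]);
const popularity = new Map([
  ["PopA", 0.9], ["Obscure", 0.1], ["PopB", 0.85], ["PopC", 0.88], ["PopD", 0.9],
]);
console.log(frogPath(graph, popularity, "PopA", "PopD").join(" -> ")); // PopA -> PopB -> PopC -> PopD
console.log(frogPath(graph, popularity, "PopA", "PopD", 0).join(" -> ")); // PopA -> Obscure -> PopD
```

With `bias` set to 0, the search reduces to fewest hops and takes the back alley. Raising `bias` makes the popularity-matched detour cheaper.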

Once the path of artists is found, we need to select the best songs for the playlist. To do this, we pick a well-known song for each artist that minimizes the difference in energy between this song, the previous song and the next song. Once we have selected the best songs, we build a playlist using Rdio’s nifty web API.
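The song-selection step can be read as choosing one candidate song per artist so that the energy changes between neighboring songs stay small. The sketch below uses dynamic programming (a Viterbi-style pass) to pick the sequence with the smallest total energy jump between consecutive songs. That technique, the candidate lists and the `energy` field (0 to 1) are assumptions. This is not necessarily how Boil the Frog does it, and the song titles are placeholders.

```javascript
// For each artist, choose one song from `candidates[i]` (non-empty arrays of
// objects with an `energy` value) so that the sum of absolute energy
// differences between consecutive songs is as small as possible.
function smoothestSongs(candidates) {
  if (candidates.length === 0) return [];
  // cost[i][k]: best total cost of a playlist ending with song k of artist i.
  const cost = [candidates[0].map(() => 0)];
  const back = [candidates[0].map(() => -1)];
  for (let i = 1; i < candidates.length; i++) {
    cost.push([]);
    back.push([]);
    candidates[i].forEach((song, k) => {
      let best = Infinity;
      let bestPrev = -1;
      candidates[i - 1].forEach((prevSong, p) => {
        const c = cost[i - 1][p] + Math.abs(song.energy - prevSong.energy);
        if (c < best) {
          best = c;
          bestPrev = p;
        }
      });
      cost[i][k] = best;
      back[i][k] = bestPrev;
    });
  }
  // Trace back from the cheapest final song.
  const last = candidates.length - 1;
  let k = cost[last].indexOf(Math.min(...cost[last]));
  const picks = [];
  for (let i = last; i >= 0; i--) {
    picks.unshift(candidates[i][k]);
    k = back[i][k];
  }
  return picks;
}

// Placeholder candidates for a three-artist path.
const candidates = [
  [{ title: "a1", energy: 0.2 }, { title: "a2", energy: 0.8 }],
  [{ title: "b1", energy: 0.25 }, { title: "b2", energy: 0.9 }],
  [{ title: "c1", energy: 0.3 }],
];
console.log(smoothestSongs(candidates).map((s) => s.title).join(" ")); // a1 b1 c1
```

Unlike picking the best song artist by artist, the dynamic-programming pass takes the whole path into account. An early choice can't strand a later artist with only large energy jumps.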

This is the second version of this app. I built the first version during a Spotify hack weekend as a Spotify app that only ran inside Spotify. I never released it (the Spotify app approval process was a bit too daunting for my weekend effort), so I thought I’d make a new version that runs on the web that anyone can use.

I enjoy using Boil the Frog to connect up artists that I like. I usually end up finding a few new artists that I like. For example, this Boil the Frog playlist connecting Deadmau5 and Explosions in the Sky is an excellent coding playlist.

Give Boil the Frog a try and if you make some interesting playlists let me know and I’ll add them to the Gallery.

TimesOpen 2012 Hack Day Wrap-Up

I spent Saturday at the New York Times attending the TimesOpen Hack Day. It was great fun, with lots of really smart folks creating neat (and not always musical) stuff. Some great shots here:

Check out the wrap up at the Times: TimesOpen 2012 Hack Day Wrap-Up

Dynamically adjusting the tweet button

The Infinite Jukebox has a tweet button that allows you to tweet the URL of a song so you can share it with others. The recently added tuning feature encodes all of the tuning information into the URL, making it easy to share a ‘tuned’ infinite song. One challenge I encountered in making this work was dynamically changing the URL that the tweet button will ‘tweet’.

The ‘tweet’ button is a Twitter-supplied widget. When your page loads, a script runs that finds the tweet button on the page and does a bunch of Twitter magic to it (for one thing, it turns the button into an iframe). When you click the button, the Twitter dialog has the URL ready to go. However, if the URL has been changed programmatically (as in my tuning feature), the tweet button won’t pick this up. To make this work, whenever you change the URL of the page, you need to remove the tweet button, re-attach it and then call twttr.widgets.load() to force the Twitter button to update.

Here’s the gist:


```html
<body>
  The tweet button:
  <span id='tweet-span'>
    <a href="https://twitter.com/share" id='tweet'
       class="twitter-share-button"
       data-lang="en"
       data-count='none'>Tweet</a>
    <script>!function(d,s,id){var js,fjs=d.getElementsByTagName(s)[0];if(!d.getElementById(id)){js=d.createElement(s);js.id=id;js.src="https://platform.twitter.com/widgets.js";fjs.parentNode.insertBefore(js,fjs);}}(document,"script","twitter-wjs");</script>
  </span>

  <script>
    // Call this whenever the page URL changes. Uses jQuery, and `t` is the
    // app's current-track object (with a `fixedTitle` field).
    function tweetSetup() {
      // Remove the old button; by now the widget script has replaced the
      // original anchor with an iframe that still has this class.
      $(".twitter-share-button").remove();

      // Build a fresh, unprocessed anchor for the widget script to find.
      var tweet = $('<a>')
        .attr('href', "https://twitter.com/share")
        .attr('id', "tweet")
        .attr('class', "twitter-share-button")
        .attr('data-lang', "en")
        .attr('data-count', "none")
        .text('Tweet');
      $("#tweet-span").prepend(tweet);

      // Point the new button at the current (possibly tuned) URL.
      tweet.attr('data-text', "#InfiniteJukebox of " + t.fixedTitle);
      tweet.attr('data-url', document.URL);

      // Ask the widget script to rescan the page and render the new button.
      twttr.widgets.load();
    }
  </script>
</body>
```