At SXSW 2009, Anthony Volodkin and I presented a panel on music recommendation called “Help! My iPod thinks I’m emo”. Anthony and I hold very different views on music recommendation. You can read Anthony’s notes for this session at his blog: Notes from the “Help! My iPod Thinks I’m Emo!” panel. This is Part 1 of my notes – and my viewpoints on music recommendation. (Note that even though I work for The Echo Nest, my views may not necessarily be the same as my employer’s.)

The SXSW audience is a technical audience to be sure, but they are not as immersed in recommender technology as regular readers of MusicMachinery, so this talk does not dive down into hard-core tech issues. Instead it is a lofty overview of some of the problems and potential solutions for music recommendation. So let’s get to it.

Music Recommendation is Broken.

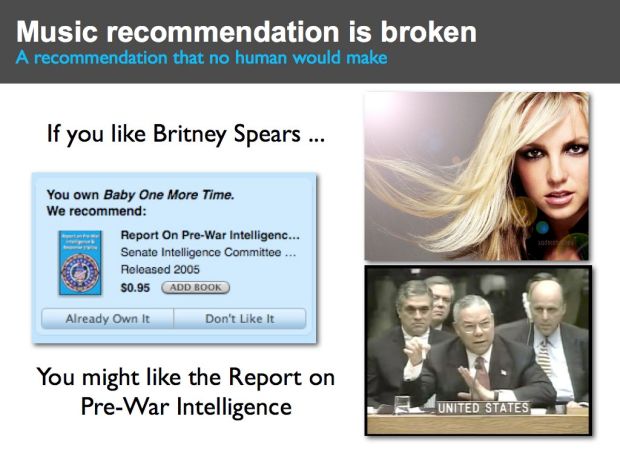

Even though Anthony and I disagree about a number of things, one thing that we do agree on is that music recommendation is broken in some rather fundamental ways. For example, this slide shows a recommendation from iTunes (from a few years back). iTunes suggests that if I like Britney Spears’ “...Baby One More Time” I might also like the “Report on Pre-War Intelligence for the Iraq war”.

Clearly this is a broken recommendation – this is a recommendation no human would make. Now if you’ve spent any time visiting music sites on the web you’ve likely seen recommendations just as bad as this. Sometimes music recommenders just get it wrong – and they get it wrong very badly. In this talk we are going to talk about how music recommenders work, why they make such dumb mistakes, and some of the ideas coming from researchers and innovators like Anthony to fix music discovery.

Why do we even care about music recommendation and discovery?

The world of music has changed dramatically. When I was growing up, a typical music store had on the order of 1,000 unique artists to choose from. Now, online music stores like iTunes have millions of unique songs to choose from. MySpace has millions of artists, and the P2P networks have billions of tracks available for download. We are drowning in a sea of music. And this is just the beginning. In a few years’ time the transformation to digital, online music will be complete. All recorded music will be online – every recording of every performance of every artist, whether they are a mainstream artist or a garage band or just a kid with a laptop, will be uploaded to the web. There will be billions of tracks to choose from, with millions more arriving every week. With all this music to choose from, this should be a music nirvana – we should all be listening to new and interesting music.

With all this music, classic long tail economics apply. Without the constraints of physical space, music stores no longer need to focus on the most popular artists. There should be less of a focus on the hits and the megastars. With unlimited virtual space, we should see a flattening of the long tail – music consumption should shift to less popular artists. This is good for everyone. It is good for business – it is probably cheaper for a music store to sell a no-name artist than it is to sell the latest Miley Cyrus track. It is good for the artist – there are millions of unknown artists that deserve a bit of attention. And it is good for the listener. Listeners get to listen to a larger variety of music that better fits their taste, as opposed to music designed and produced to appeal to the broadest demographics possible. So with the increase in available music we should see less emphasis on the hits. In the future, with all this music, our music listening should be less like Walmart and more like SXSW. But is this really happening? Let’s take a look.

The state of music discovery

If we look at some of the data from Nielsen SoundScan 2007 we see that although there were more than 4 million tracks sold, only 1% of those tracks accounted for 80% of sales. What’s worse, a whopping 13% of all sales are from American Idol or Disney artists. Clearly we are still focusing on the hits. One must ask, what is going on here? Was Chris Anderson wrong? I really don’t think so. Anderson says that to make the long tail ‘work’ you have to do two things: (1) make everything available and (2) help me find it. We are certainly on the road to making everything available – soon all music will be online. But I think we are doing a bad job on step (2), help me find it. Our music recommenders are *not* helping us find music. In fact, current music recommenders do the exact opposite: they tend to push us toward popular artists and limit the diversity of recommendations. Instead of helping us find music in the long tail, they push us toward popular content. To highlight this, take a look at the next slide.

Help! I’m stuck in the head

This is a study done by Dr. Oscar Celma of MTG UPF (and now at BMAT). Oscar was interested in how far into the long tail a recommender would get you. He divided the 245,000 most popular artists into 3 sections of equal sales – the short head, with 83 artists, the mid tail with 6,659 artists, and the long tail with 239,798 artists. He looked at recommendations (top 20 similar artists) that start in the short head and found that 48% of those recommendations bring you right back to the short head. So even though there are nearly a quarter million artists to choose from, 48% of all recommendations are drawn from a pool of the 83 most popular artists. The other 52% of recommendations are drawn from the mid tail. No recommendations at all bring you to the long tail. The nearly 240,000 artists in the long tail are not reachable directly from the short head. This demonstrates the problem with commercial recommendation – it focuses people on the popular at the expense of the new and unpopular.

Let’s take a look at why recommendation is broken.

The Wisdom of Crowds

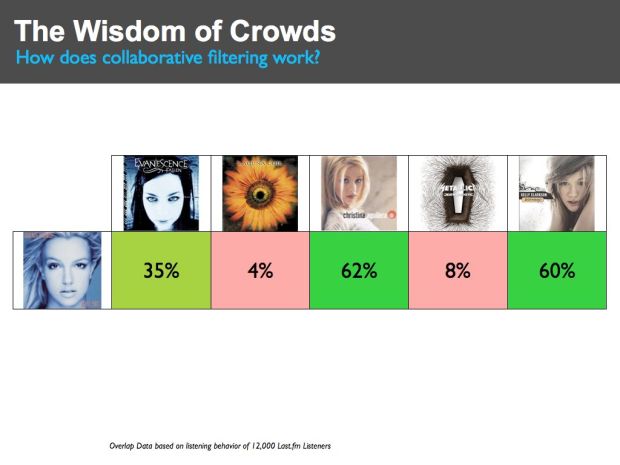

First let’s take a look at how a typical music recommender works. Most music recommenders use a technique called Collaborative Filtering (CF). This is the type of recommendation you get at Amazon where they tell you that ‘people who bought X also bought Y’. The core of a CF recommender is actually quite simple. At the heart of the recommender is typically an item-to-item similarity matrix that is used to show how similar or dissimilar items are. Here we see a tiny excerpt of such a matrix. I constructed this by looking at the listening patterns of 12,000 last.fm listeners and looking at which artists have overlapping listeners. For instance, 35% of listeners that listen to Britney Spears also listen to Evanescence, while 62% also listen to Christina Aguilera. If you like Britney Spears, from this matrix we could recommend that you might like Christina Aguilera and Kelly Clarkson, and we’d predict that you probably wouldn’t like Metallica or Lacuna Coil.

CF recommenders have a number of advantages. First, they work really well for popular artists. When there are lots of people listening to a set of artists, the overlap is a good indicator of overall preference. Secondly, CF systems are fairly easy to implement. The math is pretty straightforward and conceptually they are very easy to understand. Of course, the devil is in the details. Scaling a CF system to work with millions of artists and billions of tracks for millions of users is an engineering challenge. Still, it is no surprise that CF systems are so widely used. They give good recommendations for popular items and they are easy to understand and implement. However, there are some flaws in CF systems that ultimately make them unsuitable for long-tail music recommendation. Let’s take a look at some of the issues.
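Here is a minimal sketch of how such a listener-overlap matrix could be built. The six listeners below are made up for illustration (they are not the last.fm data behind the slide), and the function names `overlap_matrix` and `recommend` are my own. Real systems typically add weighting and normalization on top of this, so treat it as an outline of the idea rather than a production recommender.

```python
from collections import defaultdict

# Hypothetical listening data: listener id -> set of artists they play.
# These six listeners are invented for illustration only.
listeners = {
    "u1": {"Britney Spears", "Christina Aguilera", "Kelly Clarkson"},
    "u2": {"Britney Spears", "Christina Aguilera"},
    "u3": {"Britney Spears", "Evanescence"},
    "u4": {"Metallica", "Lacuna Coil", "Evanescence"},
    "u5": {"Metallica", "Lacuna Coil"},
    "u6": {"Britney Spears", "Christina Aguilera", "Kelly Clarkson"},
}


def overlap_matrix(listeners):
    """Return sim[a][b] = fraction of a's listeners who also listen to b."""
    audience = defaultdict(set)
    for user, artists in listeners.items():
        for artist in artists:
            audience[artist].add(user)
    sim = {}
    for a, fans_a in audience.items():
        sim[a] = {
            b: len(fans_a & fans_b) / len(fans_a)
            for b, fans_b in audience.items()
            if b != a
        }
    return sim


def recommend(sim, artist, n=2):
    """Rank other artists by how much of `artist`'s audience they share."""
    ranked = sorted(sim[artist].items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:n]]


sim = overlap_matrix(listeners)
print(sim["Britney Spears"]["Christina Aguilera"])  # share of Britney fans
print(recommend(sim, "Britney Spears"))
```

Note that the matrix is not symmetric: the overlap is divided by the audience of the artist you start from, which matters a great deal once one artist is far more popular than the other.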

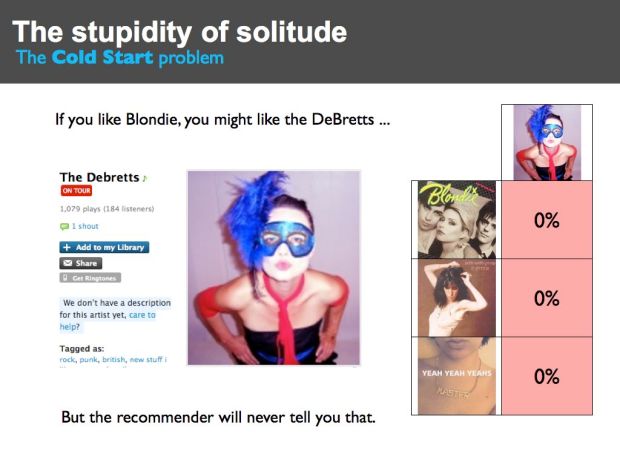

The Stupidity of Solitude

The DeBretts are a long tail artist. They are a punk band with a strong female vocalist who is reminiscent of Blondie or Patti Smith. (Be sure to listen to their song ‘The Rage’.) The DeBretts haven’t made it big yet. At last.fm they have about 200 listeners. They are a really good band and deserve to be heard. But if you went to an online music store like iTunes that uses a collaborative filter to recommend music, you would *never* get a recommendation for the DeBretts. The reason is pretty obvious. The DeBretts may appeal to listeners that like Blondie, but even if all of the DeBretts listeners listen to Blondie, the percentage of Blondie listeners that listen to the DeBretts is just too low. If Blondie has a million listeners then the maximum potential overlap (200/1,000,000, or 0.02%) is way too small to drive any recommendations from Blondie to the DeBretts. The bottom line is that if you like Blondie, even though the DeBretts may be a perfect recommendation for you, you will never get this recommendation. CF systems rely on the wisdom of the crowds, but for the DeBretts, there is no crowd and without the crowd there is no wisdom. Among those that build recommender systems, this issue is called the ‘cold start’ problem. It is one of the biggest problems for CF recommenders. A CF-based recommender cannot make good recommendations for new and unpopular items.

Clearly we can see that this cold start problem is going to make it difficult for us to find new music in the long tail. The cold start problem is one of the main reasons why our recommenders are still ‘stuck in the head’.
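The arithmetic is worth spelling out. This small sketch uses the two numbers from the example (about 200 DeBretts listeners and a hypothetical one million Blondie listeners) and assumes the best case, where every DeBretts listener also plays Blondie. The variable names are mine.

```python
# Audience sizes from the example: about 200 DeBretts listeners and a
# hypothetical one million Blondie listeners.
debretts_listeners = 200
blondie_listeners = 1_000_000

# Best case (an assumption): every DeBretts listener also plays Blondie.
shared = min(debretts_listeners, blondie_listeners)

# The same overlap, seen from each side of the similarity matrix.
blondie_to_debretts = shared / blondie_listeners
debretts_to_blondie = shared / debretts_listeners

print(f"{blondie_to_debretts:.2%} of Blondie listeners play the DeBretts")
print(f"{debretts_to_blondie:.0%} of DeBretts listeners play Blondie")
```

Even in this best case, the DeBretts row points firmly at Blondie while the Blondie row barely registers the DeBretts, so any artist with a larger shared audience will outrank them.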

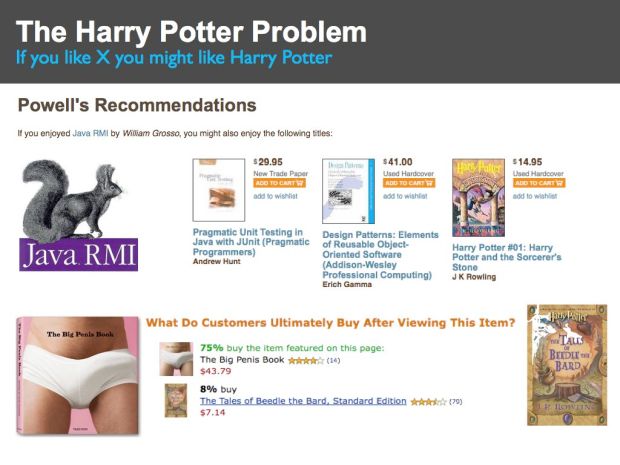

The Harry Potter Problem

This slide shows a recommendation: “If you enjoy Java RMI you may enjoy Harry Potter and the Sorcerer’s Stone”. Why is Harry Potter being recommended for a reader of a highly technical programming book?

Certain items, like the Harry Potter series of books, are very popular. This popularity can have an adverse effect on CF recommenders. Since popular items are purchased often, they are frequently purchased with unrelated items. This can cause the recommender to associate the popular item with the unrelated item, as we see in this case. This effect is often called the Harry Potter effect. People who bought just about any book that you can think of also bought a Harry Potter book.

Case in point is “The Big Penis Book” – Amazon tells us that after viewing “The Big Penis Book” 8% of customers go on to buy the Tales of Beedle the Bard from the Harry Potter series. It may be true that people who like big penises also like Harry Potter but it may not be the best recommendation.
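To see how this happens, here is a minimal sketch of a raw ‘customers who bought X also bought Y’ counter. The baskets are invented for illustration, every title other than Java RMI and Harry Potter is a placeholder, and the helper name `also_bought` is my own. This is not how Amazon’s recommender works internally, just the simplest co-purchase count that shows the effect.

```python
from collections import Counter

# Hypothetical purchase baskets, invented for illustration.
# "Harry Potter" is the blockbuster that lands in most baskets.
baskets = [
    {"Java RMI", "Harry Potter"},
    {"Java RMI", "Harry Potter"},
    {"Java RMI", "Java Concurrency", "Harry Potter"},
    {"Java RMI", "Java Concurrency"},
    {"Cookbook", "Harry Potter"},
    {"Gardening Guide", "Harry Potter"},
    {"Travel Atlas", "Harry Potter"},
    {"Cookbook", "Gardening Guide"},
]


def also_bought(baskets, item):
    """Count how often each other item shares a basket with `item`."""
    counts = Counter()
    for basket in baskets:
        if item in basket:
            counts.update(basket - {item})
    return counts


# The blockbuster outranks the genuinely related programming book.
counts = also_bought(baskets, "Java RMI")
print(counts.most_common())

# The blockbuster co-occurs with every other title in the catalog.
print(sorted(also_bought(baskets, "Harry Potter")))
```

With raw counts, the popular item wins simply because it is in most baskets, not because it has anything to do with Java.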

(BTW, I often use examples from Amazon to highlight issues with recommendation. This doesn’t mean that Amazon has a bad recommender – in fact I think they have one of the best recommenders in the world. Whenever I go to Amazon to buy one book, I end up buying five because of their recommender. The issues that I show are not unique to the Amazon recommender. You’ll find the same issues with any other CF-based recommender.)

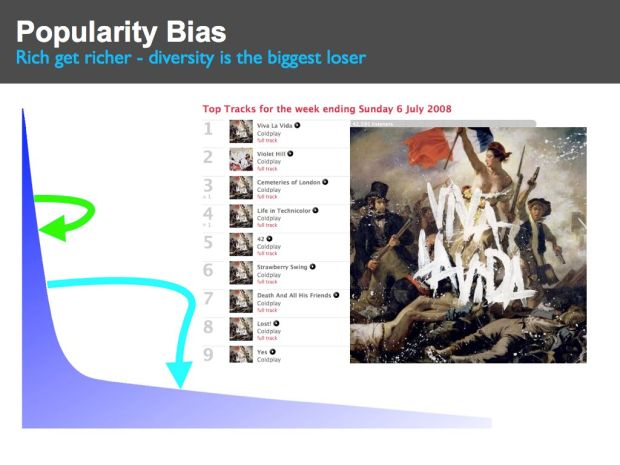

Popularity Bias

One effect of this Harry Potter problem is that a recommender will associate the popular item with many other items. The result is that the popular item tends to get recommended quite often and since it is recommended often, it is purchased often. This leads to a feedback loop where popular items get purchased often because they are recommended often and are recommended often because they are purchased often. This ‘rich-get-richer’ feedback loop leads to a system where popular items become extremely popular at the expense of the unpopular. The overall diversity of recommendations goes down. These feedback loops result in a recommender that pushes people toward more popular items and away from the long tail. This is exactly the opposite of what we are hoping that our recommenders will do. Instead of helping us find new and interesting music in the long tail, recommenders are pushing us back to the same set of very popular artists.

Note that you don’t need to have a fancy recommender system to be susceptible to these feedback loops. Even simple charts such as we see at music sites like The Hype Machine can lead to these feedback loops. People listen to tracks that are at the top of the charts, leading these songs to continue to be popular, and thus cementing their hold on the top spots in the charts.
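A toy simulation makes the loop visible. This is a minimal sketch under made-up assumptions: five tracks with nearly equal starting play counts, a two-slot chart, and a fixed split of each round’s new plays between charted tracks and the whole catalog. The function names and all of the numbers are hypothetical, and real listening behavior is of course far messier.

```python
def simulate_chart(plays, rounds=10, chart_size=2, chart_plays=60, other_plays=40):
    """Each round, charted tracks split `chart_plays` new plays among
    themselves, while every track in the catalog shares `other_plays`."""
    plays = dict(plays)
    for _ in range(rounds):
        # The chart is simply the current top tracks by play count.
        chart = sorted(plays, key=lambda t: (-plays[t], t))[:chart_size]
        for track in plays:
            plays[track] += other_plays / len(plays)
        for track in chart:
            plays[track] += chart_plays / chart_size
    return plays


def top_share(plays, k=2):
    """Fraction of all plays that go to the k most played tracks."""
    ranked = sorted(plays.values(), reverse=True)
    return sum(ranked[:k]) / sum(ranked)


# Hypothetical starting counts: two tracks begin only slightly ahead.
start = {"A": 12, "B": 11, "C": 10, "D": 10, "E": 10}
end = simulate_chart(start)

print(round(top_share(start), 2))  # share of the top two before
print(round(top_share(end), 2))    # share of the top two after ten rounds
```

In this run the two tracks that started a play or two ahead never leave the chart, and their share of all plays climbs from under half to roughly three quarters.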

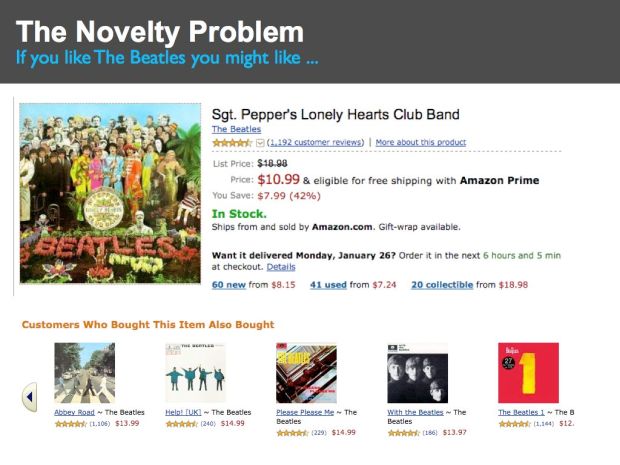

The Novelty Problem

There is a difference between a recommender that is designed for music discovery and one that is designed for music shopping. Most recommenders are intended to help a store make more money by selling you more things. This tends to lead to recommendations such as this one from Amazon, which suggests that since I’m interested in Sgt. Pepper’s Lonely Hearts Club Band I might like Abbey Road and Please Please Me and every other Beatles album. Of course everyone in the world already knows about these items, so these recommendations are not going to help people find new music. But that’s not the point: Amazon wants to sell more albums, and recommending Beatles albums is a great way to do that.

One factor that is contributing to the Novelty Problem is high-stakes evaluations like the Netflix Prize. The Netflix Prize is a competition that offers a million dollars to anyone who can improve the Netflix movie recommender by 10%. The evaluation is based on how well a recommender can predict how a movie viewer will rate a movie on a 1-5 star scale. This type of evaluation focuses on relevance. A recommender that can correctly predict that I’ll rate the movie ‘Titanic’ 2.2 stars instead of 2.0 stars may score well in this type of evaluation, but that probably hasn’t really improved the quality of the recommendation. I won’t watch a 2.0 or a 2.2 star movie, so what does it matter? The downside of the Netflix Prize is that only one metric – relevance – is being used to drive the advancement of the recommender state of the art when there are other equally important metrics – novelty is one of them.
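The Netflix Prize scored entries by root mean squared error (RMSE) on held-out ratings. The sketch below shows why a better score need not mean better recommendations. The ratings are invented, and the 4-star cutoff for what gets shown to a user is my own assumption, not something Netflix has stated.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical ratings for four movies the viewer disliked.
actual = [2.0, 2.0, 1.0, 2.0]
baseline = [2.5, 2.5, 1.5, 2.5]  # each guess is off by 0.5 stars
improved = [2.2, 2.2, 1.2, 2.2]  # each guess is off by 0.2 stars

print(round(rmse(baseline, actual), 2))
print(round(rmse(improved, actual), 2))

# Assumption: only titles predicted at 4 stars or more are recommended.
shown_baseline = [p for p in baseline if p >= 4.0]
shown_improved = [p for p in improved if p >= 4.0]
print(shown_baseline == shown_improved)  # the user sees the same list
```

The second model cuts the error by more than half, yet neither model would ever put these movies in front of the viewer, so the recommendations themselves are unchanged.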

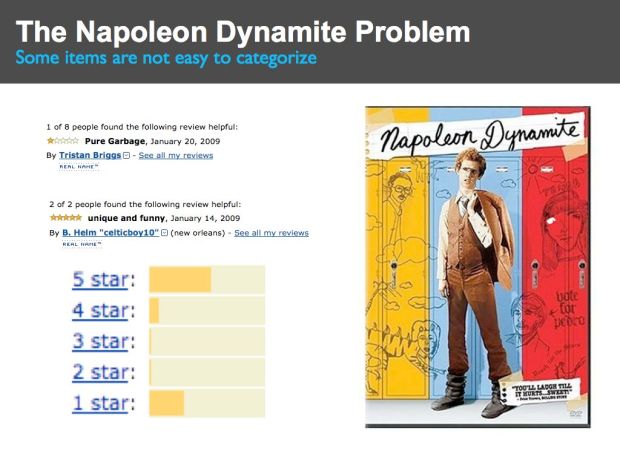

The Napoleon Dynamite Problem

Some items are not so easy to categorize. For instance, if you look at the ratings for the movie Napoleon Dynamite you see a bimodal distribution of 5-star and 1-star ratings. People either like it or hate it, and it is hard to predict how an individual will react.
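A tiny example shows why a bimodal distribution is awkward for a rating predictor. The ten ratings below are invented to be perfectly polarized; they are not the actual Napoleon Dynamite ratings.

```python
from collections import Counter
from statistics import mean

# Hypothetical ratings for a polarizing movie, invented for illustration.
ratings = [5, 5, 5, 5, 5, 1, 1, 1, 1, 1]

average = mean(ratings)
histogram = Counter(ratings)

# How far off is the "safe" prediction of the average for each viewer?
errors = [abs(r - average) for r in ratings]

print(average)                    # the middle of the scale
print(histogram[round(average)])  # how many viewers gave that rating
print(min(errors))                # the best-case error for any viewer
```

Predicting the average is the usual fallback when you know little about a viewer, but here the average is a rating that nobody actually gave, and it is two stars wrong for everyone.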

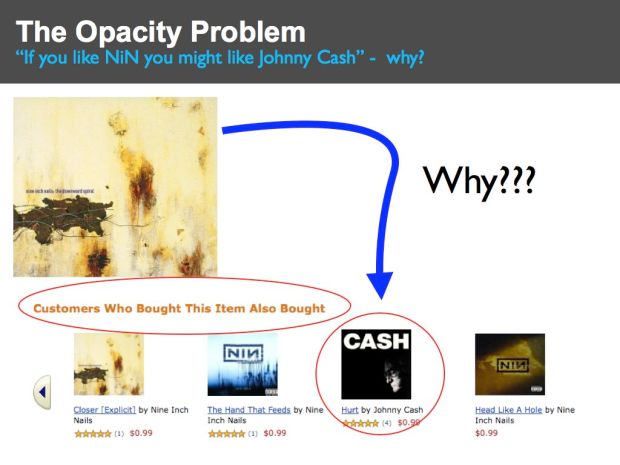

The Opacity Problem

Here’s an Amazon recommendation that suggests that if I like Nine Inch Nails I might like Johnny Cash. Since NiN is an industrial band and Johnny Cash is a country/western singer, at first blush this seems like a bad recommendation, and if you didn’t know any better you might write this off as just another broken recommender. It would be really helpful if the CF recommender could explain why it is recommending Johnny Cash, but all it can really tell you is that ‘Other people who listened to NiN also listened to Johnny Cash’, which isn’t very helpful. If the recommender could give you a better explanation of why it was recommending something – perhaps something like “Johnny Cash has an absolutely stunning cover of the NiN song ‘Hurt’ that will make you cry.” – then you would have a much better understanding of the recommendation. The explanation would turn what seems like a very bad recommendation into a phenomenal one – one that perhaps introduces you to a whole new genre of music – a recommendation that may have you listening to ‘Folsom Prison’ in a few weeks.

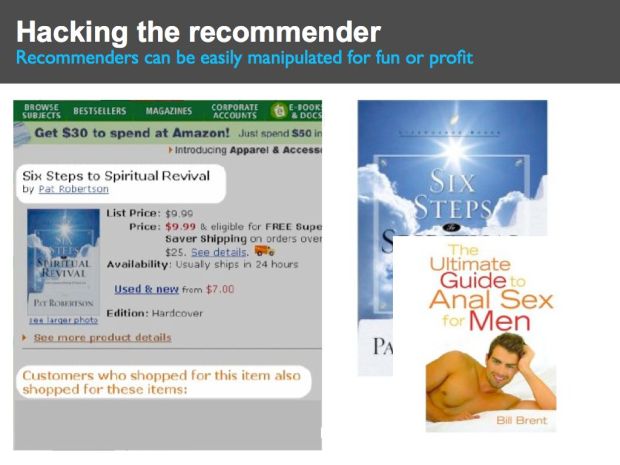

Hacking the Recommender

Here’s a recommendation based on a book by Pat Robertson called Six Steps to Spiritual Revival (courtesy of Bamshad Mobasher). This is a book by notorious televangelist Pat Robertson that promises to reveal “God’s Awesome Power in your life.” Amazon offers a recommendation suggesting that ‘Customers who shopped for this item also shopped for The Ultimate Guide to Anal Sex for Men’. Clearly this is not a good recommendation. This bad recommendation is the work of a loosely organized group who didn’t like Pat Robertson. They managed to trick the Amazon recommender into recommending a rather inappropriate book just by visiting the Amazon page for Robertson’s book and then visiting the Amazon page for the sex guide.

This manipulation of the Amazon recommender was easy to spot and can be classified as a prank, but it is not hard to imagine that an artist or a label may use similar techniques, in a more subtle fashion, to manipulate a recommender to promote their tracks (or to demote the competition). We already live in a world where search engine optimization is an industry. It won’t be long before recommender engine optimization will be an equally profitable (and destructive) industry.
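Here is a minimal sketch of why this kind of manipulation is so cheap against a naive ‘also viewed’ counter. The sessions and titles are placeholders, the helper `top_also_viewed` is my own, and a real store presumably applies safeguards that this toy version lacks.

```python
from collections import Counter


def top_also_viewed(sessions, item):
    """Return the item most often viewed in the same session as `item`."""
    counts = Counter()
    for viewed in sessions:
        if item in viewed:
            counts.update(set(viewed) - {item})
    return counts.most_common(1)[0][0]


# Hypothetical browsing sessions; all titles are placeholders.
organic = [
    ["Target Book", "Related Book"],
    ["Target Book", "Related Book"],
    ["Target Book", "Related Book"],
    ["Target Book", "Another Book"],
]

# A small group repeatedly views the target, then an unrelated title.
prank = [["Target Book", "Unrelated Book"]] * 5

print(top_also_viewed(organic, "Target Book"))
print(top_also_viewed(organic + prank, "Target Book"))
```

For an item with few organic views, a handful of coordinated sessions is enough to take over the top slot, which is exactly why long tail items are the easiest ones to game.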

Wrapping up

This is the first part of a two-part post. In this post I’ve highlighted some of the issues in traditional music recommendation. The next post is all about how to fix these problems. For an alternative view be sure to visit Anthony Volodkin’s blog where he presents a rather different viewpoint about music recommendation.

#1 by Tam on March 27, 2009 - 4:15 am

Thanks a lot for sharing your notes. This is a very interesting topic.

May I add that recommendation is lacking a cultural context approach? Certainly a German user of such a recommendation system wouldn’t be exposed to the same items as a Texan one, let alone a Taiwanese or a Senegalese one. :)

#2 by Dan on March 27, 2009 - 9:39 am

Fascinating post!

I do think this technology is in its infancy and will improve greatly over the coming years. I also agree that Amazon does often get it right, even though it occasionally generates some ludicrous recommendations.

One thing that annoys me is Amazon will recommend something that I’ve already bought, from them! That SHOULD be an easy thing to fix.

#3 by Andrey on March 30, 2009 - 7:33 am

Hi, it seems that all your text is based on the assumption that there is only one algorithm for recommendation systems and it’s broken. This is wrong.

Sure, the KNN algorithm (known as “users that acted like me act the following way”) tends to give the most popular items. But it’s not the only one. There are some more clever algorithms, like ones that are based on SVD.

Their problem is computational complexity, but with the help of Moore’s law we’ll see some more advanced recommendation systems sooner or later.

#4 by plamere on March 31, 2009 - 7:08 am

Andrey: Every commercial music site except for one, as far as I know, uses some variant of collaborative filtering, which will be subject to the cold start, feedback loops, hacking, lack of transparency, etc. that I describe here.

#5 by Adondai on April 1, 2009 - 10:40 pm

Hey – couldn’t agree more with your thoughts.

However what I don’t understand is there are already dozens of metrics and APIs which if a music recommender used, should be able to do a lot of what you suggest?

I don’t know anything about programming, but all of these music recommenders seem to try and create the perfect engine themselves when any of them could use information provided by all these other sites? You posted about new services and APIs on your old site all the time…

#6 by plamere on April 2, 2009 - 6:46 am

I agree that there is lots of data out there already that would make recommendation much easier. But it is difficult to share this data – it is hard to reconcile users (my last.fm name and my pandora name are different), and most of the APIs only allow for non-commercial use.

#7 by Adondai on April 1, 2009 - 10:44 pm

Btw can you do something with the trackbacks on this blog?

#8 by plamere on April 2, 2009 - 6:44 am

Not sure what you mean. Is there a problem?

#9 by Adondai on April 2, 2009 - 8:23 am

If you take a look at some other blogs you’ll see most have a separate spot where the trackbacks go – either above or below the normal comments – and usually consisting of just one line.

They’re messy and disrupt the conversations that go on in the comments.

Disqus is the easiest solution =P It has good spam filtering as well.

#10 by bcurtu on April 12, 2009 - 12:02 pm

I have implemented an events recommender for dooplan, using mainly CF algorithms, but also content-based algorithms in order to avoid problems like the novelty problem…

When is the second part of this article due? It’s really well explained.

#11 by OlegG on April 18, 2009 - 2:15 am

Hi Paul,

I do not listen to music very often and I have big trouble when I am trying to buy a new disc: the choice is so huge, but my budget is pretty limited.

I suppose that some experts should create a structure of music categories big enough to reflect most people’s tastes.

After that (I am very sure) people would love to use them.

As a software developer I can provide a lot of ideas and algorithms to support such an activity.

I am absolutely sure that the use of just 100 criteria would be able to produce billions and billions of music categories.

Is it so difficult to find 100 criteria to categorize music?