Archive for category recommendation

The Time Empire Strikes Back

Posted by Paul in recommendation, research on April 17, 2009

It looks like Time has taken some action to combat the hack of the Time 100 Poll. They are now using a captcha to verify that the voter is a human – the result being that the 4chan autovoters are now being banned.

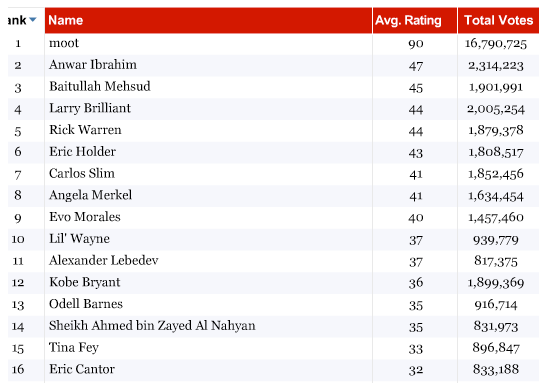

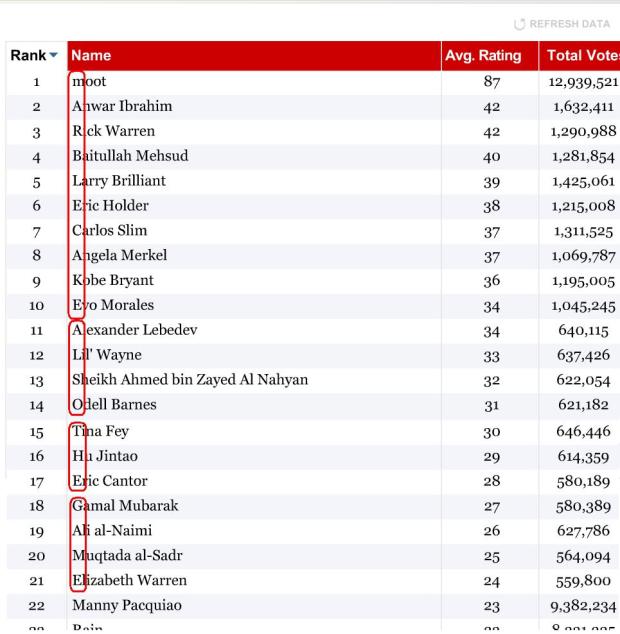

With the new defenses in place, the delicate ordering of the poll results can no longer be maintained by the /b/tards. The Message is no more:

After just a couple of hours, the Message has decayed from “marblecake also the game” to “mablre caelakosteghamm”.

I don’t think this is the final shot in the war. I suspect that even as I type this the 4chan folks are poking and prodding, looking for another chink in Time’s armor. It will be interesting to see if and when they respond. Still, 4chan has awoken the sleeping giant. They’ve been noticed, and now that the giant is awake and paying attention, whatever they try will be much harder. But I wouldn’t bet against the /b/tards yet. It’s like the final moments of Star Wars Episode V: yes, Han Solo is currently frozen in carbonite, but honestly, you know he’s going to make it out in the next episode.

Inside the precision hack

Posted by Paul in recommendation, research on April 15, 2009

There’s a scene toward the end of the book Contact by Carl Sagan where the protagonist, Ellie Arroway, finds a Message embedded deep in the digits of pi. The Message is perhaps an artifact of an extremely advanced intelligence that apparently manipulated one of the fundamental constants of the universe as a testament to their power as they wove space and time. I’m reminded of this scene by the Time.com 100 Poll, where millions have voted on who are the world’s most influential people in government, science, technology and the arts. Just as Ellie found a Message embedded in pi, we find a Message embedded in the results of this poll. Reading the first letters of the top 21 names in the poll, we find the message “marblecake, also the game”. The poll announces (perhaps subtly) to the world that the most influential are not the Obamas, the Britneys or the Rick Warrens of the world; the most influential are an extremely advanced intelligence: the hackers.

At 4AM this morning I received an email inviting me to an IRC chatroom where someone would explain to me exactly how the Time.com 100 Poll was precision hacked. Naturally, I was a bit suspicious. Anyone could claim to be responsible for the hack – but I ventured onto the IRC channel (feeling a bit like a Woodward or Bernstein meeting Deep Throat in a parking garage). After talking to ‘Zombocom’ (not his real nick) for a few minutes, it was clear that Zombocom was a key player in the hack. He explained how it all works.

The Beginning

Zombocom told me that it all started when the folks who hang out on the random board of 4chan (sometimes known as /b/) became aware that Time.com had enlisted moot (the founder of 4chan) as one of the candidates in the Time.com 100 poll. A little investigation showed that a poll vote could be submitted just by doing an HTTP GET on the URL:

http://www.timepolls.com/contentpolls/Vote.do?pollName=time100_2009&id=1883924&rating=1

where id is a number associated with the person being voted for (in this case 1883924 is Rain’s ID) and rating is the score given to that person.
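To make the protocol concrete, here is a minimal Python sketch that only composes such a URL with the standard library’s `urllib.parse`; it sends nothing. The endpoint and the `pollName`, `id` and `rating` parameters are taken from the URL quoted in the post; the helper name `build_vote_url` is hypothetical.

```python
from urllib.parse import parse_qs, urlencode, urlparse

# Endpoint and parameter names as quoted in the post; the helper itself is hypothetical.
VOTE_ENDPOINT = "http://www.timepolls.com/contentpolls/Vote.do"


def build_vote_url(candidate_id: int, rating: int, poll_name: str = "time100_2009") -> str:
    """Compose (but do not send) a vote URL in the format described in the post."""
    query = urlencode({"pollName": poll_name, "id": candidate_id, "rating": rating})
    return f"{VOTE_ENDPOINT}?{query}"


if __name__ == "__main__":
    url = build_vote_url(1883924, 1)
    print(url)
    # Round-trip the query string to show exactly which fields the server receives.
    print(parse_qs(urlparse(url).query))
```

The sketch makes the weakness plain: a vote was just three query parameters with no credential of any kind.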

Soon afterward, several people crafted ‘autovoters’ that used this simple voting URL protocol to vote for moot. These simple autovoters could be triggered by an easily embeddable ‘spam URL’. The autovoters were very flexible, allowing the rating to be set for any poll candidate. For example, the URL

http://fun.qinip.com/gen.php?id=1883924&rating=1&amount=160

could be used to push 160 ratings of 1 (the worst rating) for the artist Rain to the Time.com poll.

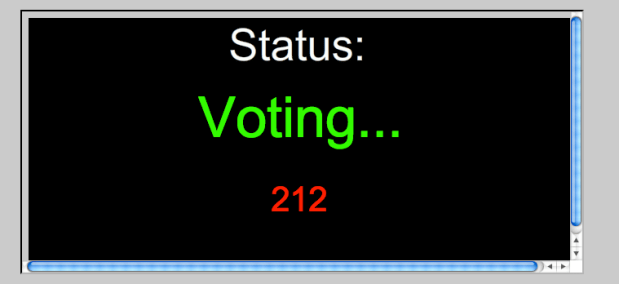

In the early stages of the poll, Time.com didn’t have any authentication or validation; the door was wide open to any client that wanted to stuff the ballot box. Soon these autovoting spam URLs were sprinkled around the web, voting up moot. If you were a fan of Rain, it is likely that when you visited a Rain forum, you were really voting for moot via one of these spam URLs.

Soon afterward, it was discovered that the Time.com Poll didn’t even range check its parameters to ensure that the ratings fell within the 1 to 100 range. The autovoters were adapted to take advantage of this loophole, which resulted in the Time.com poll showing moot with a 300% rating, while all other candidates had ratings far below zero. Time.com apparently noticed this and intervened by eliminating millions of votes for moot and restoring the poll to a previous state (presumably) from a backup. Shortly afterward, Time.com changed the protocol to attempt to authenticate votes by requiring that a key be appended to the poll submission URL that consisted of an MD5 hash of the URL + a secret word (AKA ‘the salt’).
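The two fixes described in this paragraph can be sketched from the server’s side as follows. This is a hypothetical Python reconstruction, not Time.com’s code: the helper names, the `SALT` value, and the exact string being hashed (the unsigned URL followed by the salt) are assumptions based only on the post’s description of “an MD5 hash of the URL + a secret word”.

```python
import hashlib
import hmac
from urllib.parse import urlencode

BASE = "http://www.timepolls.com/contentpolls/Vote.do"
SALT = "not-the-real-salt"  # hypothetical placeholder for the secret word


def unsigned_url(params: dict) -> str:
    """The vote URL before the key is appended."""
    return f"{BASE}?{urlencode(params)}"


def make_key(params: dict, salt: str = SALT) -> str:
    """Assumed scheme: MD5 hex digest of the unsigned URL followed by the salt."""
    return hashlib.md5((unsigned_url(params) + salt).encode("utf-8")).hexdigest()


def accept_vote(params: dict, key: str, salt: str = SALT) -> bool:
    """Reject votes with an out-of-range rating or a key that does not verify."""
    try:
        rating = int(params["rating"])
    except (KeyError, TypeError, ValueError):
        return False
    if not 1 <= rating <= 100:  # the range check the poll originally lacked
        return False
    # Constant-time comparison of the expected and the supplied key.
    return hmac.compare_digest(make_key(params, salt), key)


if __name__ == "__main__":
    vote = {"pollName": "time100_2009", "id": 1883924, "rating": 1}
    print(accept_vote(vote, make_key(vote)))   # True
    bad = {**vote, "rating": 300}
    print(accept_vote(bad, make_key(bad)))     # False: rating out of range
    print(accept_vote(vote, "0" * 32))         # False: key does not match
```

Because the client has to know the salt in order to compute keys, anyone who extracts it from the client can sign arbitrary votes. A scheme like this authenticates the client software, not the voter.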

“Needless to say, we were enraged,” says Zombocom. /b/ responded by getting organized: they created an IRC channel (#time_vote) devoted to the hack and started to recruit. Shortly afterward, one of the members discovered that the ‘salt’, the key to authenticating requests, was poorly hidden in Time.com’s Flash voting application and could be extracted. With the salt in hand, the autovoters were back online, rocking the vote.

Another challenge faced by the autovoters was that if you voted for the same person more than once every 13 seconds, your IP would be banned from voting. However, it was noticed that you could cycle through votes for other candidates during those 13 seconds. The autovoters quickly adapted to take advantage of this loophole, interleaving up-votes for moot with down-votes for the competition, ensuring that no candidate received a vote more frequently than once every 13 seconds while maximizing the voting leverage.
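Here is a minimal sketch of a limiter with the behaviour the post describes. It assumes the ban is triggered per (IP, candidate) pair with a 13-second window; the class and method names are hypothetical. The usage lines show why interleaving slipped through: votes for different candidates are never compared with one another.

```python
from dataclasses import dataclass, field

WINDOW_SECONDS = 13.0  # per-candidate window described in the post


@dataclass
class PerCandidateLimiter:
    """Bans an IP that votes for the same candidate twice within WINDOW_SECONDS."""
    last_vote: dict = field(default_factory=dict)  # (ip, candidate) -> timestamp
    banned: set = field(default_factory=set)

    def allow(self, ip: str, candidate: int, now: float) -> bool:
        if ip in self.banned:
            return False
        key = (ip, candidate)
        prev = self.last_vote.get(key)
        if prev is not None and now - prev < WINDOW_SECONDS:
            self.banned.add(ip)  # repeat vote inside the window: ban the IP
            return False
        self.last_vote[key] = now
        return True


if __name__ == "__main__":
    limiter = PerCandidateLimiter()
    # One vote per second, cycling through 13 candidates: no candidate repeats within 13 s.
    accepted = sum(limiter.allow("10.0.0.1", t % 13, float(t)) for t in range(60))
    print(accepted)  # 60
    # Voting twice for the same candidate one second apart triggers the ban.
    other = PerCandidateLimiter()
    print(other.allow("10.0.0.2", 1, 0.0), other.allow("10.0.0.2", 1, 1.0))  # True False
```

Keying the window on the IP alone would close this particular loophole, at the cost of penalizing legitimate users who share one address.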

One of the first autovoters was MOOTHATTAN. This is a simple moot up-voter that will vote for moot about 100 times per minute. (Warning: just by visiting that site you’ll invoke the autovoter, so if you don’t want to hack the vote, you should probably skip the visit.)

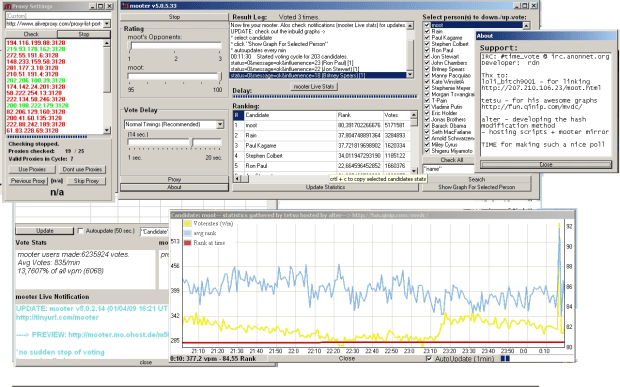

Here’s a screenshot of another autovoter, a program called Mooter, developed by rdn:

Mooter is a Delphi app (Windows only) that can submit about 300 votes per minute from a single IP address. It will also take advantage of any proxies, cycling through them so that the votes appear to come from multiple IP addresses. rdn, the author of Mooter, has used it to submit 20,000 votes in a single 15-minute period. In the last two weeks (when rdn started keeping track), Mooter alone has submitted 10,000,000 votes (about 3.3% of the total number of poll votes).
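Working through the post’s own figures gives two implied numbers. If 10,000,000 votes are about 3.3% of the total, the poll had received on the order of 300 million votes. The 15-minute burst works out to roughly four times Mooter’s quoted single-IP rate, which is consistent with the proxy cycling doing much of the work, though the figures alone don’t prove it.

$$
\text{total poll votes} \approx \frac{10{,}000{,}000}{0.033} \approx 3.0 \times 10^{8}
$$

$$
\frac{20{,}000 \text{ votes}}{15 \text{ min}} \approx 1{,}333 \text{ votes/min} \approx 4.4 \times \left(300 \text{ votes/min}\right)
$$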

From the screenshot you can see that Mooter is quite a sophisticated application. It allows fine-grained control over who receives votes, what rating they get, the voting frequency and the proxy cycle, along with charts and graphs showing all sorts of nifty data.

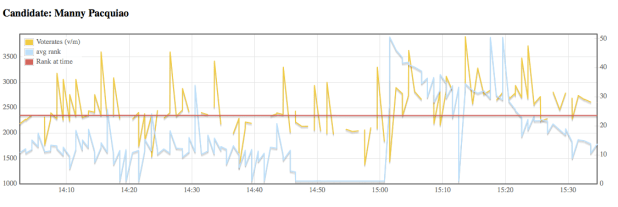

In addition to highly configurable autovoting apps, the loose collective of #time_vote maintains charts and graphs of the various candidate voting histories. Here’s a voting graph that shows the per-minute frequency of votes for boxer Manny Pacquiao.

More charts are available for browsing at (the very slow to load) http://fun.qinip.com/mvdc/mootvote.php

So with the charts, graphs, spam URLs and autovoters, #time_vote had things well in hand. Moot would easily cruise to victory, although there was still some annoying competition, especially from fans of the boxer Manny Pacquiao. Zombocom says that “it can take upwards of 4.5K votes a minute to keep Manny in his place”. Despite the Manny problem, the #time_vote collective had complete dominance of the poll.

The Ultimate Precision Hack

At this point Zombocom was starting to get bored, so he started fiddling with his voting scripts. Much to his surprise, he found that no matter what he did, he was never getting banned by Time.com. Zombocom suspects that his ban immunity may be because he’s running an IPv6 stack, which may be confusing Time.com’s IP blocker. With no 13-second rate limit to worry about, he was able to crank out votes as fast as his computer would let him: about 5,000 votes a minute (and soon he’ll have a new server online that should give him up to 50,000 votes a minute). With this newfound power, Zombocom was able to take the hack to the next level.

Zombocom joked to one of his friends: “it would be funny to troll Time.com and put us up as most influential, but since we are not explicitly on the list we’ll have to spell it out.” His friend thought it was impossible. But two weeks later, ‘marblecake’ was indeed spelled out for all to see at the top of the Time.com poll.

So what is the significance of ‘marblecake’? Zombocom says: “Marblecake was an irc channel where the ‘Message to Scientology’ video originated. Many believe we are ‘dead’ or only doing hugraids etc, so I thought it would also be a way of saying: we’re still around and we don’t just do only ‘moralfag’ stuff.”

To actually manipulate the poll, Zombocom wrote two Perl scripts. The first one, auto.pl, is pretty simple. It finds the highest-rated person in the poll who is not in the desired top 21 (recall that there are 21 characters in the Message) and down-votes them (you can view this as eliminating the riff-raff). The second Perl script, the_game.pl, is responsible for maintaining the proper order of the top 21: it inspects the rating of a particular person, compares that rating to what it should be to maintain the proper order, and then up-votes or down-votes as necessary to reach the desired rating. With these two scripts (less than 200 lines of Perl), Zombocom can put the poll in any order he wants.
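The post doesn’t show the Perl, so the following is only a hypothetical Python reconstruction of the decision step each script is described as making. The function names are invented, and the rating a name “should be” at is approximated as “strictly between its neighbours in the desired order”. The sketch works on a plain dictionary of ratings and casts no votes.

```python
from typing import Dict, List, Optional, Tuple


def riffraff_target(ratings: Dict[str, float], desired: List[str]) -> Optional[str]:
    """auto.pl's described job: the highest-rated candidate outside the desired list."""
    outsiders = {name: r for name, r in ratings.items() if name not in desired}
    return max(outsiders, key=outsiders.get) if outsiders else None


def order_adjustments(ratings: Dict[str, float], desired: List[str]) -> List[Tuple[str, str]]:
    """the_game.pl's described job, approximated: a name whose rating is not
    strictly between its neighbours in the desired order gets nudged."""
    actions = []
    for i, name in enumerate(desired):
        above = ratings[desired[i - 1]] if i > 0 else float("inf")
        below = ratings[desired[i + 1]] if i + 1 < len(desired) else float("-inf")
        if ratings[name] >= above:
            actions.append((name, "down"))  # too high for its slot
        elif ratings[name] <= below:
            actions.append((name, "up"))    # too low for its slot
    return actions


if __name__ == "__main__":
    desired = ["m_person", "a_person", "r_person"]  # hypothetical names
    ratings = {"m_person": 90.0, "a_person": 95.0, "r_person": 50.0,
               "outsider": 80.0, "other": 10.0}
    print(riffraff_target(ratings, desired))    # outsider
    print(order_adjustments(ratings, desired))  # [('m_person', 'up'), ('a_person', 'down')]
```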

Ultimately, this hack involved lots of work and a little bit of luck. Someone figured out the voting URL protocol. A bunch of folks wrote various autovoters, which were then used by a thousand or more people to stack the vote in moot’s favor. Others sprinkled the spam URLs throughout the forums, tricking the ‘competition’ into voting for moot. When Time.com responded by trying to close the door on the hacks, the loose collective rallied, and a member discovered the ‘salt’ that would re-open the poll to the autovoters. The lucky bit was when Zombocom discovered that no matter what he did, he wouldn’t get banned. This opened the door to the fine-grained manipulation that led to the embedding of the Message.

At the core of the hack is the work of a dozen or so, backed by an army of a thousand who downloaded and ran the autovoters and also backed by an untold number of others that unwittingly fell prey to the spam url autovoters. So why do they do it? Why do they write code, build complex applications, publish graphs – why do they organize a team that is more effective than most startup companies? Says Zombocom: “For the lulz”.

Precision Hacking

Posted by Paul in recommendation on April 13, 2009

I’ve seen a few examples where recommenders, polls and top-ten lists have been manipulated. Generally a central coordinator sends a message to the horde, which descends on the to-be-hacked site. Ron Paul’s sheeple, or Pharyngula’s followers, are prime examples of the type of group that can turn a poll upside down in a matter of minutes.

It has always seemed to me that such coordinating manipulation was a blunt instrument. The commanded horde could push a specific item to the top of a poll faster than a Kansas school board could lose Darwin’s notebook, but the horde lacked any subtlety or finesse. Sure you could promote or demote an individual or issue, but fine tuned manipulation would just be too difficult. Well, I’ve been proved wrong. Take a look at this Time Poll.

Not only has the poll been swamped to promote Moot (the pseudonym of the creator of 4chan, an image board and the birthplace for many internet memes) as the most influential of people, the poll crashers have manipulated the order of all the other nominees so that the first letter of each line spells out ‘marble cake, also the game’ (marble cake is not really a kind of cake btw). This is pretty phenomenal, precision hacking. Precision hacking of an extremely high profile poll run by a top notch media company. Now, imagine if the same energy was put into getting that latest Lordi album to the top of the pop 100 charts. I’m sure it could be done (and I’m already wondering if perhaps it has already been done, and we just don’t know it).

Polls, top-N lists, and recommenders based upon the wisdom of the crowds are susceptible to this type of manipulation. Better defenses are going to be needed; otherwise we will all be listening to whatever 4chan wants us to listen to. (via reddit)

Music discovery is a conversation not a dictatorship

Posted by Paul in Music, recommendation, research, tags on April 3, 2009

Two big problems with music recommenders: (1) they can’t tell me why they recommended something beyond the trivial “People who liked X also liked Y”, and (2) if I want to interact with a recommender, I’m all thumbs. I can usually give a recommendation a thumbs up or thumbs down, but there is no way to steer the recommender (“no more emo please!”). These problems are addressed in The Music Explaura, a web-based music exploration tool just released by Sun Labs.

The Music Explaura lets you explore the world of music artists. It gives you all the context you need (audio, artist bio, videos, photos, discographies) to help you decide whether or not a particular artist is interesting. The Explaura also gives you similar-artist style recommendations: for any artist, you are given a list of similar artists to explore. The neat thing is that for any recommended artist, you can ask why that artist was recommended, and the Explaura will give you an explanation in the form of an overlapping tag cloud.
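One simple way to produce an “overlapping tag cloud” explanation is sketched below: keep only the tags the two artists share and weight each by the smaller of its two weights. This is an assumption for illustration, not a description of how the Explaura computes its explanations, and the tag weights are made up.

```python
from typing import Dict, List, Tuple


def explain(seed: Dict[str, float], rec: Dict[str, float], k: int = 5) -> List[Tuple[str, float]]:
    """Tags shared by both clouds, each weighted by the smaller of its two weights,
    strongest first (ties broken alphabetically)."""
    shared = {tag: min(seed[tag], rec[tag]) for tag in seed.keys() & rec.keys()}
    return sorted(shared.items(), key=lambda kv: (-kv[1], kv[0]))[:k]


# Toy tag clouds (made-up weights).
hendrix = {"psychedelic": 0.9, "blues": 0.6, "guitar": 1.0, "60s": 0.8}
cream = {"blues": 0.9, "psychedelic": 0.5, "guitar": 0.9, "british": 0.7}

if __name__ == "__main__":
    print(explain(hendrix, cream))  # [('guitar', 0.9), ('blues', 0.6), ('psychedelic', 0.5)]
```

Taking the minimum means a tag only looms large in the explanation when it is strong for both artists.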

The really cool bit (and this is the knock-your-socks-off type of cool) is that you can use an artist’s tag cloud to steer the recommender. If you like Jimi Hendrix but want to find artists that are similar but less psychedelic and more blues-oriented, you can just grab the ‘psychedelic’ tag with your mouse and shrink it, then grab the ‘blues’ tag and make it bigger. You’ll instantly get an updated set of artists that are more like Cream and less like The Doors.
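Tag-cloud steering can be sketched as re-weighting the seed artist’s tags and re-ranking candidates by the cosine similarity of their tag vectors. This is a hypothetical model, not the Explaura’s actual algorithm, and the tags and weights below are toy values chosen to mirror the Hendrix example.

```python
import math
from typing import Dict, List

TagCloud = Dict[str, float]


def cosine(a: TagCloud, b: TagCloud) -> float:
    """Cosine similarity between two sparse tag-weight vectors."""
    dot = sum(w * b.get(tag, 0.0) for tag, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def steer(cloud: TagCloud, scale: Dict[str, float]) -> TagCloud:
    """Shrink or grow individual tags, as when dragging a tag in the cloud."""
    return {tag: w * scale.get(tag, 1.0) for tag, w in cloud.items()}


def recommend(query: TagCloud, catalog: Dict[str, TagCloud], n: int = 1) -> List[str]:
    """Rank catalog artists by similarity to the (possibly steered) query cloud."""
    return sorted(catalog, key=lambda name: cosine(query, catalog[name]), reverse=True)[:n]


# Toy tag clouds (made-up weights).
hendrix = {"psychedelic": 1.0, "blues": 0.3, "guitar": 0.6}
catalog = {
    "Cream": {"blues": 1.0, "guitar": 0.6, "psychedelic": 0.2},
    "The Doors": {"psychedelic": 1.0, "organ": 0.8, "guitar": 0.3},
}

if __name__ == "__main__":
    print(recommend(hendrix, catalog))                                             # ['The Doors']
    print(recommend(steer(hendrix, {"psychedelic": 0.2, "blues": 3.0}), catalog))  # ['Cream']
```

In this toy data, shrinking ‘psychedelic’ and growing ‘blues’ flips the top result from The Doors to Cream.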

I strongly suggest that you go and play with the Explaura; it lets you take an active role in music exploration. What’s a band that is an emo version of Led Zeppelin? Blue Öyster Cult, of course! What’s an artist like Britney Spears but with an indie vibe? Katy Perry! How about a band like The Beatles but recording in this decade? Try The Coral. A band like Metallica but with a female vocalist? Try Kittie. Was there anything like emo in the 60s? Try Leonard Cohen. The interactive nature of the Explaura makes it quite addictive. I can get lost for hours exploring some previously unknown corner of the music world.

Steve (the search guy) has a great post describing the Music Explaura in detail. One thing he doesn’t describe is the backend system architecture. Behind the Music Explaura is a distributed data store with item search and similarity capabilities built into the core. This makes it possible to scale the system up to millions of items with requests from thousands of simultaneous users. It really is a nice system. (Full disclosure: I spent the last several years working on this project, so naturally I think it is pretty cool.)

The Music Explaura gives us a hint of what music discovery will be like in the future. Instead of a world where a music vendor gives you a static list of recommended artists we’ll live in a world where the recommender can tell you why it is recommending an item, and you can respond by steering the recommendations away from things you don’t like and toward the things that you do like. Music discovery will no longer be a dictatorship, it will be a two-way conversation.

Killer music technology

Posted by Paul in fun, Music, recommendation, The Echo Nest, web services on April 1, 2009

We’ve been head down here at the Echo Nest putting the finishing touches on what I think is a game changer for music discovery. For years, music recommendation companies have been trying to get collaborative filtering technologies to work. These CF systems work pretty well, but sooner or later, you’ll get a bad recommendation. There are just too many ways for a CF recommender to fail. Here at the ‘nest we’ve decided to take a completely different approach. Instead of recommending music based on the wisdom of the crowds or based upon what your friends are listening to, we are going to recommend music just based on whether or not the music is good. This is such an obvious idea – recommend music that is good, and don’t recommend music that is bad – that it is a puzzle as to why this approach hasn’t been taken before. Of course deciding which music is good and which music is bad can be problematic. But the scientists here at The Echo Nest have spent years building machine learning technologies so that we can essentially reproduce the thought process of a Pitchfork music critic. Think of this technology as Pitchfork-in-a-box.

Our implementation is quite simple. We’ve added a single API method, ‘get_goodness’, to our set of developer offerings. You give this method an Echo Nest artist ID (which you can obtain via an artist search call), and get_goodness returns a number between zero and one that indicates how good or bad the artist is. Here’s an example call for Radiohead:

http://developer.echonest.com/api/get_goodness?api_key=EHY4JJEGIOFA1RCJP&id=music://id.echonest.com/~/AR/ARH6W4X1187B99274F&version=3

The results are:

```xml
<response version="3">
  <status>
    <code>0</code>
    <message>Success</message>
  </status>
  <query>
    <parameter name="api_key">EHY4JJEGIOFA1RCJP</parameter>
    <parameter name="id">music://id.echonest.com/~/AR/ARH6W4X1187B99274F</parameter>
  </query>
  <artist>
    <name>Radiohead</name>
    <id>music://id.echonest.com/~/AR/ARH6W4X1187B99274F</id>
    <goodness>0.47</goodness>
    <instant_critic>More enjoyable than Kanye bitching.</instant_critic>
  </artist>
</response>
```
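Reading a response like this one takes only Python’s standard `xml.etree.ElementTree`. This sketch parses a copy of the response from a string, so no request is made. The function name `parse_goodness` is hypothetical, and it assumes only the element layout visible in the sample.

```python
import xml.etree.ElementTree as ET

# Response body copied from the post; no network request is made here.
RESPONSE = """<response version="3">
  <status><code>0</code><message>Success</message></status>
  <query>
    <parameter name="api_key">EHY4JJEGIOFA1RCJP</parameter>
    <parameter name="id">music://id.echonest.com/~/AR/ARH6W4X1187B99274F</parameter>
  </query>
  <artist>
    <name>Radiohead</name>
    <id>music://id.echonest.com/~/AR/ARH6W4X1187B99274F</id>
    <goodness>0.47</goodness>
    <instant_critic>More enjoyable than Kanye bitching.</instant_critic>
  </artist>
</response>"""


def parse_goodness(xml_text: str) -> dict:
    """Extract the artist fields, raising if the status code is not 0."""
    root = ET.fromstring(xml_text)
    if root.findtext("status/code") != "0":
        raise ValueError(root.findtext("status/message") or "request failed")
    artist = root.find("artist")
    return {
        "name": artist.findtext("name"),
        "goodness": float(artist.findtext("goodness")),
        "instant_critic": artist.findtext("instant_critic"),
    }


if __name__ == "__main__":
    print(parse_goodness(RESPONSE))
```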

We also include in the response a text string that indicates how you should feel about this artist. This is just the tip of the iceberg for our forthcoming automatic music review technology that will generate blog entries, Amazon reviews, Wikipedia descriptions and press releases automatically, based only on the audio.

We’ve made a web demo of this technology that will allow you to try out the goodness API. Check it out at: demo.echonest.com.

We’ve had lots of late nights in the last few weeks, but now that this baby is launched, time to celebrate (briefly) and then on to the next killer music tech!

Something is fishy with this recommender

Posted by Paul in Music, recommendation, research, The Echo Nest on April 1, 2009

In my rather long winded post on the problems with current music recommenders, I pointed out the Harry Potter Effect. Collaborative Filtering recommenders tend to recommend things that are popular which makes those items even more popular, creating a feedback loop – (or as the podcomplex calls it – the similarity vortex) that results in certain items becoming extremely popular at the expense of overall diversity. (For an interesting demonstration of this effect, see ‘Online monoculture and the end of the niche‘).
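The feedback loop can be illustrated with a deliberately crude toy model. Every item gets the same organic plays each round, and a few extra listeners play whatever is currently most popular. The model and its parameters are assumptions for illustration, not taken from the podcomplex post or the monoculture study.

```python
from typing import List


def simulate(counts: List[int], rounds: int, organic: int = 1, followers: int = 5) -> List[int]:
    """Toy feedback loop: every round each item gets `organic` plays, and
    `followers` listeners play whatever the recommender ranks first
    (here, simply the currently most-played item)."""
    counts = list(counts)
    for _ in range(rounds):
        top = max(range(len(counts)), key=counts.__getitem__)
        for i in range(len(counts)):
            counts[i] += organic
        counts[top] += followers
    return counts


def top_share(counts: List[int]) -> float:
    return max(counts) / sum(counts)


if __name__ == "__main__":
    start = [5, 4, 3, 2, 1]
    end = simulate(start, rounds=100)
    print(f"{top_share(start):.3f} -> {top_share(end):.3f}")  # 0.333 -> 0.596
```

Nothing about the items changes, yet the leader’s share of plays climbs from about a third to about 60%, purely because the recommender keeps feeding it.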

Oscar sent me an example of this effect. At the popular British online music store HMV, a rather large fraction of artist recommendations point to the Kings of Leon. Some examples:

- PJ Harvey

- U2

- The Beatles

- Franz Ferdinand

- Amy Winehouse

- The Rolling Stones

- Fratellis

- The Last Shadow Puppets

- Fleet Foxes

- Roxy Music

- Green Day

- Newton Faulkner

- Paul Weller

- Guns’N’Roses

- Quireboys

- Nickelback

- Bon Iver

- Emerson, Lake and Palmer

- Miley Cyrus

- Nirvana

- Led Zeppelin

Oscar points out that even for albums that haven’t been released, HMV will tell you that ‘customers that bought the new unreleased album by Depeche Mode also bought the Kings of Leon’. Of course, it is no surprise that The Kings of Leon are way up there at position #3 on the HMV bestseller list.

At first blush, this does indeed look like a classic example of the Harry Potter Effect, but I’m a bit suspicious that what we are seeing is not a feedback loop but shilling: using the recommender to explicitly promote a particular item. It may be that HMV has decided to hardwire a slot in their ‘customers who bought this also bought’ section to point to an item that they are trying to promote, perhaps due to a sweetheart deal with a music label. I don’t have any hard evidence of this, but when you look at the wide variety of artists that point to Kings of Leon, from Miley Cyrus to Led Zeppelin and Nirvana, it is hard to imagine that this is the result of natural collaborative filtering. Music promotion that disguises itself as music recommendation has been around for about as long as people have been looking for new music. Payola schemes have dogged radio for decades. It is not hard to believe that this type of dishonest marketing will find its way into recommender systems. We’ve already seen the rise of ‘search engine optimization’ companies that will get your web site onto the first page of Google search results; it won’t be long before we see a recommender engine optimization industry that will promote your items by manipulating recommenders. It may already be happening now, and we just don’t know about it. The next time you get a recommendation for The Kings of Leon because you like The Rolling Stones, ask yourself whether this is a real and honest recommendation or whether they are just trying to sell you something.

Help! My iPod thinks I’m emo – Part 1

Posted by Paul in Music, recommendation, research, The Echo Nest on March 26, 2009

At SXSW 2009, Anthony Volodkin and I presented a panel on music recommendation called “Help! My iPod thinks I’m emo”. Anthony and I hold very different views on music recommendation. You can read Anthony’s notes for this session at his blog: Notes from the “Help! My iPod Thinks I’m Emo!” panel. This is Part 1 of my notes and my viewpoints on music recommendation. (Note that even though I work for The Echo Nest, my views may not necessarily be the same as my employer’s.)

The SXSW audience is a technical audience to be sure, but they are not as immersed in recommender technology as regular readers of MusicMachinery, so this talk does not dive down into hard-core tech issues. Instead, it is a lofty overview of some of the problems and potential solutions for music recommendation. So let’s get to it.

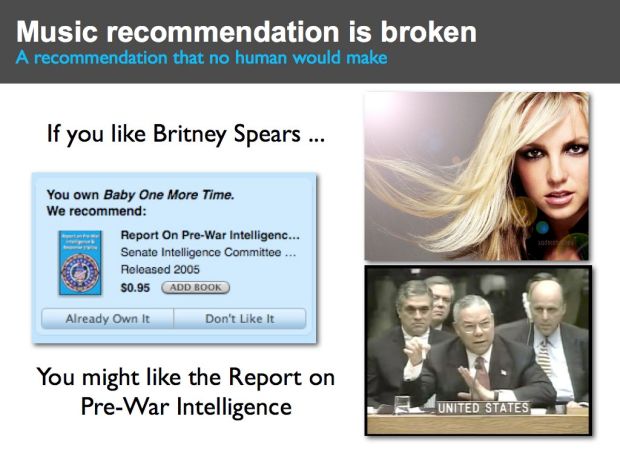

Music Recommendation is Broken.

Even though Anthony and I disagree about a number of things, one thing that we do agree on is that music recommendation is broken in some rather fundamental ways. For example, this slide shows a recommendation from iTunes (from a few years back). iTunes suggests that if I like Britney Spears’ “Hit Me Baby One More Time”, I might also like the “Report on Pre-War Intelligence for the Iraq war”.

Clearly this is a broken recommendation; it is a recommendation no human would make. If you’ve spent any time visiting music sites on the web, you’ve likely seen recommendations just as bad as this. Sometimes music recommenders just get it wrong, and they get it wrong very badly. In this talk we are going to talk about how music recommenders work, why they make such dumb mistakes, and some of the ideas coming from researchers and innovators like Anthony to fix music discovery.

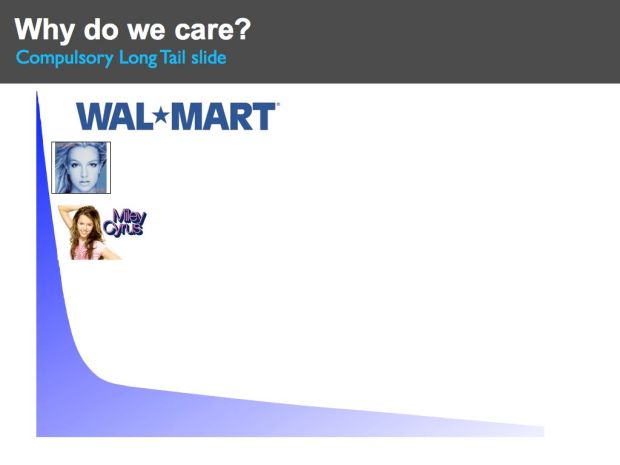

Why do we even care about music recommendation and discovery?

The world of music has changed dramatically. When I was growing up, a typical music store had on the order of 1,000 unique artists to choose from. Now, online music stores like iTunes have millions of unique songs to choose from. Myspace has millions of artists, and the P2P networks have billions of tracks available for download. We are drowning in a sea of music. And this is just the beginning. In a few years’ time the transformation to digital, online music will be complete. All recorded music will be online: every recording of every performance of every artist, whether a mainstream artist, a garage band or just a kid with a laptop, will be uploaded to the web. There will be billions of tracks to choose from, with millions more arriving every week. With all this music to choose from, this should be a music nirvana; we should all be listening to new and interesting music.

With all this music, classic long tail economics apply. Without the constraints of physical space, music stores no longer need to focus on the most popular artists, so there should be less of a focus on the hits and the megastars. With unlimited virtual space, we should see a flattening of the long tail: music consumption should shift toward less popular artists. This is good for everyone. It is good for business, since it is probably cheaper for a music store to sell a no-name artist than the latest Miley Cyrus track. It is good for the artist, since there are millions of unknown artists that deserve a bit of attention. And it is good for the listener: listeners get to hear a larger variety of music that better fits their taste, as opposed to music designed and produced to appeal to the broadest demographics possible. So with the increase in available music, we should see less emphasis on the hits. In the future, with all this music, our music listening should be less like Walmart and more like SXSW. But is this really happening? Let’s take a look.

The state of music discovery

If we look at some of the data from Nielsen SoundScan 2007, we see that although more than 4 million tracks were sold, only 1% of those tracks accounted for 80% of sales. What’s worse, a whopping 13% of all sales are from American Idol or Disney artists. Clearly we are still focusing on the hits. One must ask, what is going on here? Was Chris Anderson wrong? I really don’t think so. Anderson says that to make the long tail ‘work’ you have to do two things: (1) make everything available and (2) help me find it. We are certainly on the road to making everything available; soon all music will be online. But I think we are doing a bad job on step (2), help me find it. Our music recommenders are *not* helping us find music. In fact, current music recommenders do the exact opposite: they tend to push us toward popular artists and limit the diversity of recommendations. Music recommendation is fundamentally broken; instead of helping us find music in the long tail, recommenders are pushing us toward popular content. To highlight this, take a look at the next slide.

Help! I’m stuck in the head

This is a study done by Dr. Oscar Celma of MTG UPF (and now at BMAT). Oscar was interested in how far into the long tail a recommender would get you. He divided the 245,000 most popular artists into 3 sections of equal sales: the short head, with 83 artists; the mid tail, with 6,659 artists; and the long tail, with 239,798 artists. He looked at recommendations (top 20 similar artists) that start in the short head and found that 48% of those recommendations bring you right back to the short head. So even though there are nearly a quarter million artists to choose from, 48% of all recommendations are drawn from a pool of the 83 most popular artists. The other 52% of recommendations are drawn from the mid tail. No recommendations at all bring you to the long tail: the nearly 240,000 artists in the long tail are not reachable directly from the short head. This demonstrates the problem with commercial recommendation: it focuses people on the popular at the expense of the new and unpopular.
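The shape of this analysis can be sketched in a few lines. Rank artists by popularity, cut the ranking into three segments of roughly equal total plays, and measure where the recommendations made from head artists land. This is a hypothetical reconstruction of the general approach, not Celma’s code; the function names, play counts and similar-artist lists are toy values.

```python
from typing import Dict, List


def segment(plays: Dict[str, int]) -> Dict[str, str]:
    """Label artists head/mid/tail: walk from most to least played and switch segment
    once the cumulative plays *before* an artist reach 1/3 and then 2/3 of the total."""
    ranked = sorted(plays, key=lambda a: (-plays[a], a))
    total = sum(plays.values())
    labels: Dict[str, str] = {}
    running = 0
    for artist in ranked:
        if 3 * running < total:  # integer arithmetic avoids float ties at exactly 1/3
            labels[artist] = "head"
        elif 3 * running < 2 * total:
            labels[artist] = "mid"
        else:
            labels[artist] = "tail"
        running += plays[artist]
    return labels


def landing_share(recs: Dict[str, List[str]], labels: Dict[str, str],
                  start: str = "head") -> Dict[str, float]:
    """Of all recommendations made from `start`-segment artists, the share landing in each segment."""
    hits = [labels[r] for a, rs in recs.items() if labels[a] == start for r in rs]
    if not hits:
        return {}
    return {seg: hits.count(seg) / len(hits) for seg in ("head", "mid", "tail")}


# Toy play counts and similar-artist lists (not Celma's data).
plays = {"A": 60, "B": 30, "C": 20, "D": 20, "E": 10,
         "F": 10, "G": 10, "H": 10, "I": 5, "J": 5}
recs = {"A": ["B", "C", "D", "E"], "E": ["I", "J"]}

if __name__ == "__main__":
    labels = segment(plays)
    print(landing_share(recs, labels))  # {'head': 0.0, 'mid': 0.75, 'tail': 0.25}
```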

Let’s take a look at why recommendation is broken.

The Wisdom of Crowds

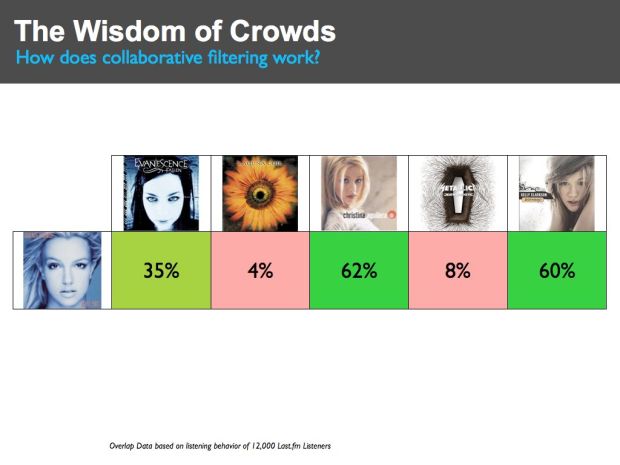

First, let’s take a look at how a typical music recommender works. Most music recommenders use a technique called Collaborative Filtering (CF). This is the type of recommendation you get at Amazon, where they tell you that ‘people who bought X also bought Y’. The core of a CF recommender is actually quite simple. At its heart is typically an item-to-item similarity matrix that shows how similar or dissimilar items are. Here we see a tiny excerpt of such a matrix. I constructed it by looking at the listening patterns of 12,000 last.fm listeners and finding which artists have overlapping listeners. For instance, 35% of listeners who listen to Britney Spears also listen to Evanescence, while 62% also listen to Christina Aguilera. A CF recommender is built on such a similarity matrix, constructed from this listener overlap. If you like Britney Spears, from this matrix we could recommend that you might like Christina and Kelly Clarkson, and we’d predict that you probably wouldn’t like Metallica or Lacuna Coil.
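The overlap figures quoted here are conditional fractions: of the listeners of artist X, what share also listen to Y. A minimal sketch of building such a matrix from listener sets follows. The listener IDs are toy data, not the last.fm sample, and the function names are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Set, Tuple


def overlap_matrix(listeners: Dict[str, Set[int]]) -> Dict[Tuple[str, str], float]:
    """For every ordered pair (X, Y): fraction of X's listeners who also listen to Y."""
    return {
        (x, y): len(listeners[x] & listeners[y]) / len(listeners[x])
        for x, y in permutations(listeners, 2)
        if listeners[x]
    }


def recommend(artist: str, matrix: Dict[Tuple[str, str], float], n: int = 2) -> List[str]:
    """The n artists with the highest overlap from `artist`'s listeners."""
    scores = {y: s for (x, y), s in matrix.items() if x == artist}
    return sorted(scores, key=lambda y: (-scores[y], y))[:n]


# Toy listener IDs (not the last.fm data from the slide).
listeners = {
    "Britney Spears": {1, 2, 3, 4, 5, 6, 7, 8},
    "Christina Aguilera": {1, 2, 3, 4, 5, 9},
    "Kelly Clarkson": {1, 2, 3, 4, 10},
    "Evanescence": {5, 6, 11, 12},
    "Metallica": {13, 14, 15},
}

if __name__ == "__main__":
    m = overlap_matrix(listeners)
    print(recommend("Britney Spears", m))  # ['Christina Aguilera', 'Kelly Clarkson']
```

The measure is asymmetric: in the toy data, 5 of Britney Spears’ 8 listeners also listen to Christina Aguilera, but 5 of Christina Aguilera’s 6 listeners also listen to Britney Spears.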

CF recommenders have a number of advantages. First, they work really well for popular artists: when there are lots of people listening to a set of artists, the overlap is a good indicator of overall preference. Second, CF systems are fairly easy to implement. The math is pretty straightforward, and conceptually they are very easy to understand. Of course, the devil is in the details. Scaling a CF system to work with millions of artists and billions of tracks for millions of users is an engineering challenge. Still, it is no surprise that CF systems are so widely used. They give good recommendations for popular items, and they are easy to understand and implement. However, there are some flaws in CF systems that ultimately make them unsuitable for long-tail music recommendation. Let’s take a look at some of the issues.

The Stupidity of Solitude

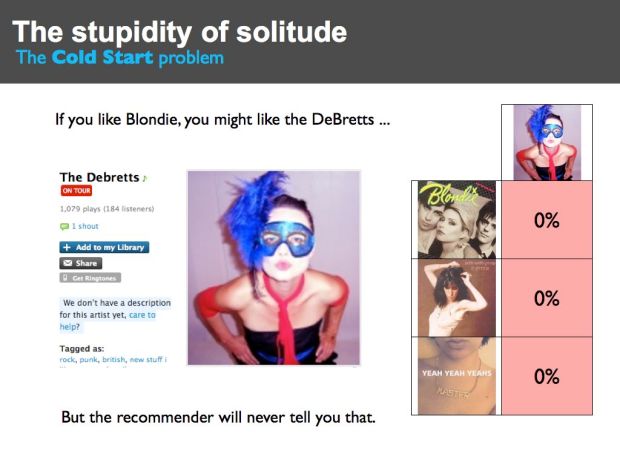

The DeBretts are a long-tail artist. They are a punk band with a strong female vocalist reminiscent of Blondie or Patti Smith (be sure to listen to their song ‘The Rage’). The DeBretts haven’t made it big yet; at last.fm they have about 200 listeners. They are a really good band and deserve to be heard. But if you went to an online music store like iTunes that uses a collaborative filtering recommender, you would *never* get a recommendation for the DeBretts. The reason is pretty obvious. The DeBretts may appeal to listeners who like Blondie, but even if every DeBretts listener also listens to Blondie, the percentage of Blondie listeners who listen to the DeBretts is just too low. If Blondie has a million listeners, then the maximum potential overlap (200/1,000,000, or 0.02%) is far too small to drive any recommendations from Blondie to the DeBretts. The bottom line is that if you like Blondie, even though the DeBretts may be a perfect recommendation for you, you will never get this recommendation. CF systems rely on the wisdom of the crowds, but for the DeBretts there is no crowd, and without the crowd there is no wisdom. Among those who build recommender systems, this issue is called ‘the cold start’ problem. It is one of the biggest problems for CF recommenders: a CF-based recommender cannot make good recommendations for new and unpopular items.
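
The arithmetic behind the cold start argument fits in a few lines. This sketch assumes, as the text does, that Blondie has a million listeners and the DeBretts about 200; `max_overlap` and `would_recommend` are hypothetical helpers, and the 1% cutoff is an arbitrary threshold chosen only for illustration.

```python
def max_overlap(listeners_a: int, listeners_b: int) -> float:
    """Best-case fraction of A's listeners who also listen to B (every B listener is an A listener)."""
    return min(listeners_a, listeners_b) / listeners_a


def would_recommend(listeners_a: int, listeners_b: int, threshold: float = 0.01) -> bool:
    """Hypothetical rule: recommend B to fans of A only if the overlap clears a fixed threshold."""
    return max_overlap(listeners_a, listeners_b) >= threshold


BLONDIE = 1_000_000  # listener count assumed in the text
DEBRETTS = 200       # approximate last.fm listener count from the text

print(f"Blondie -> DeBretts: {max_overlap(BLONDIE, DEBRETTS):.4%}")  # Blondie -> DeBretts: 0.0200%
print(f"DeBretts -> Blondie: {max_overlap(DEBRETTS, BLONDIE):.0%}")  # DeBretts -> Blondie: 100%
print(would_recommend(BLONDIE, DEBRETTS))  # False, even in the best case
```

The asymmetry is the point: seen from the DeBretts’ side the link to Blondie can be as strong as 100%, but seen from Blondie’s side, which is where a Blondie fan starts, it is practically invisible.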

Clearly, this cold start problem is going to make it difficult for us to find new music in the long tail. The cold start problem is one of the main reasons why our recommenders are still ‘stuck in the head’.

The Harry Potter Problem

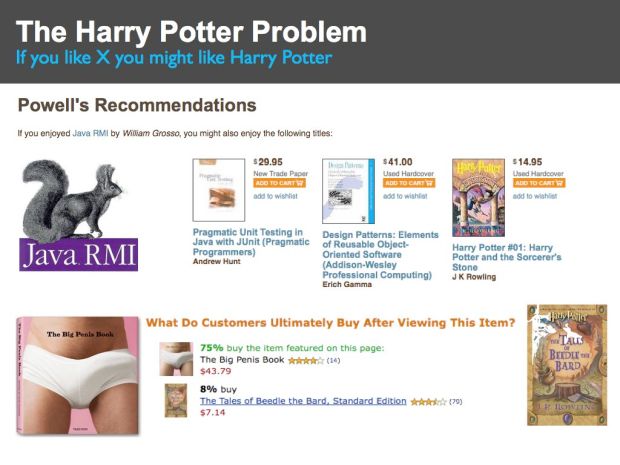

This slide shows a recommendation: “If you enjoy Java RMI, you may enjoy Harry Potter and the Sorcerer’s Stone.” Why is Harry Potter being recommended to a reader of a highly technical programming book?

Certain items, like the Harry Potter series of books, are very popular. This popularity can have an adverse effect on CF recommenders. Since popular items are purchased often, they are frequently purchased along with unrelated items. This can cause the recommender to associate the popular item with the unrelated item, as we see in this case. This is often called the Harry Potter effect: people who bought just about any book you can think of also bought a Harry Potter book.
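
To see how the Harry Potter effect arises, here is a toy co-purchase counter over invented shopping baskets. Ranking by raw co-occurrence counts, with no correction for how popular each item is, is a deliberate simplification made to expose the effect; `co_purchase_counts` and `also_bought` are hypothetical names.

```python
from collections import Counter
from itertools import combinations

# Hypothetical baskets: one very popular book shows up next to everything.
baskets = [
    {"Java RMI", "Harry Potter"},
    {"Java RMI", "Java Network Programming"},
    {"Java RMI", "Harry Potter"},
    {"Gardening Basics", "Harry Potter"},
    {"Cookbook", "Harry Potter"},
    {"Java Network Programming", "Harry Potter"},
]


def co_purchase_counts(baskets):
    """Count how often each ordered pair of items appears in the same basket."""
    counts = Counter()
    for basket in baskets:
        for a, b in combinations(sorted(basket), 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def also_bought(counts, item):
    """Items ranked by raw co-purchase count with `item` (no popularity normalization)."""
    ranked = [(b, n) for (a, b), n in counts.items() if a == item]
    return sorted(ranked, key=lambda pair: -pair[1])


counts = co_purchase_counts(baskets)
print(also_bought(counts, "Java RMI")[0])          # ('Harry Potter', 2)
print(also_bought(counts, "Gardening Basics")[0])  # ('Harry Potter', 1)
```

Harry Potter tops the list for the programming book simply because it is in most baskets, not because Java RMI readers have any special affinity for it.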

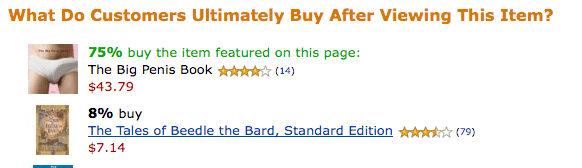

Case in point is “The Big Penis Book”: Amazon tells us that after viewing “The Big Penis Book”, 8% of customers go on to buy The Tales of Beedle the Bard from the Harry Potter series. It may be true that people who like big penises also like Harry Potter, but it may not be the best recommendation.

(BTW, I often use examples from Amazon to highlight issues with recommendation. This doesn’t mean that Amazon has a bad recommender – in fact I think they have one of the best recommenders in the world. Whenever I go to Amazon to buy one book, I end up buying five because of their recommender. The issues that I show are not unique to the Amazon recommender. You’ll find the same issues with any other CF-based recommender.)

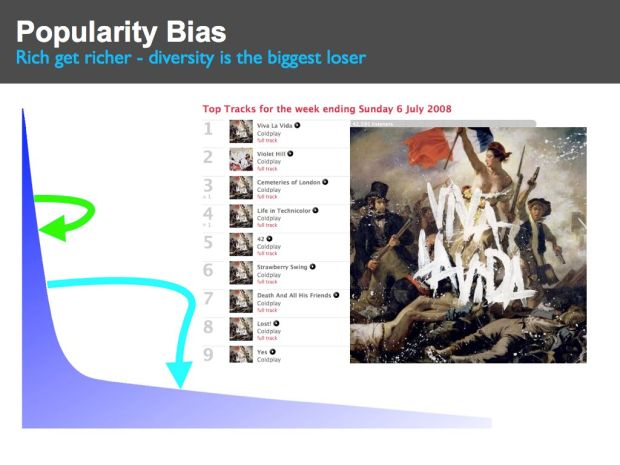

Popularity Bias

One effect of this Harry Potter problem is that a recommender will associate the popular item with many other items. The result is that the popular item tends to get recommended quite often and since it is recommended often, it is purchased often. This leads to a feedback loop where popular items get purchased often because they are recommended often and are recommended often because they are purchased often. This ‘rich-get-richer’ feedback loop leads to a system where popular items become extremely popular at the expense of the unpopular. The overall diversity of recommendations goes down. These feedback loops result in a recommender that pushes people toward more popular items and away from the long tail. This is exactly the opposite of what we are hoping that our recommenders will do. Instead of helping us find new and interesting music in the long tail, recommenders are pushing us back to the same set of very popular artists.
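
The rich-get-richer loop can be illustrated with a deterministic toy model. All assumptions are hypothetical: each round, half of 100 purchases go to whichever item is currently the best seller (the item the recommender or chart promotes), and the other half are spread evenly across all items.

```python
def simulate(counts, rounds, purchases_per_round=100.0, rec_fraction=0.5):
    """Toy feedback loop: the current best seller gets the recommended share of each
    round's purchases; the remaining purchases are spread evenly over all items."""
    counts = list(counts)
    n = len(counts)
    for _ in range(rounds):
        top = max(range(n), key=lambda i: counts[i])  # the item being promoted this round
        for i in range(n):
            counts[i] += purchases_per_round * (1 - rec_fraction) / n
        counts[top] += purchases_per_round * rec_fraction
    return counts


def top_share(counts):
    """Fraction of all sales held by the single best-selling item."""
    return max(counts) / sum(counts)


start = [50, 40, 30, 20, 10]
after = simulate(start, rounds=10)
print(f"top item share: {top_share(start):.2f} -> {top_share(after):.2f}")  # top item share: 0.33 -> 0.57
```

In this model the leader’s share keeps climbing toward `rec_fraction + (1 - rec_fraction) / n` (0.6 here), regardless of whether the item deserves it.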

Note that you don’t need a fancy recommender system to be susceptible to these feedback loops. Even simple charts, like those we see at music sites such as The Hype Machine, can lead to them. People listen to the tracks at the top of the charts, so those songs stay popular, which cements their hold on the top spots.

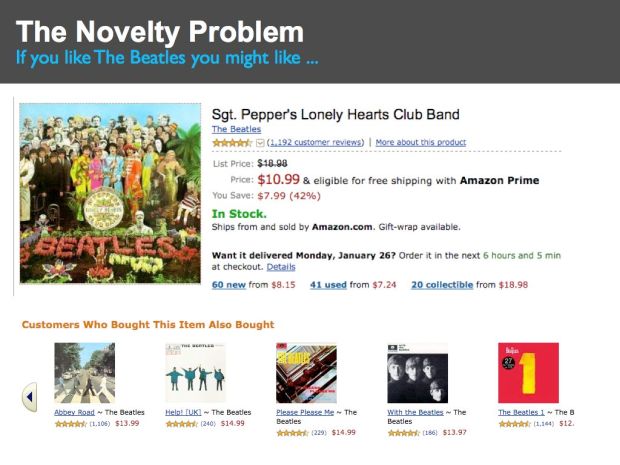

The Novelty Problem

There is a difference between a recommender that is designed for music discovery and one that is designed for music shopping. Most recommenders are intended to help a store make more money by selling you more things. This tends to lead to recommendations such as this one from Amazon, which suggests that since I’m interested in Sgt. Pepper’s Lonely Hearts Club Band, I might like Abbey Road and Please Please Me and every other Beatles album. Of course, everyone in the world already knows about these items, so these recommendations are not going to help people find new music. But that’s not the point: Amazon wants to sell more albums, and recommending Beatles albums is a great way to do that.
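
One common way to put a number on novelty (an assumption here, not something this post defines) is the mean self-information of the recommended items, -log2 p, where p is the fraction of users who already know each item. The familiarity values below are invented round numbers chosen so the arithmetic is exact; `novelty` is a hypothetical helper.

```python
import math


def novelty(recommendations, familiarity):
    """Mean self-information (in bits) of a recommendation list; obscure items score higher."""
    return sum(-math.log2(familiarity[item]) for item in recommendations) / len(recommendations)


# Hypothetical fraction of listeners who already know each item.
familiarity = {
    "Abbey Road": 0.5,
    "Please Please Me": 0.25,
    "The DeBretts": 1 / 1024,
}

beatles_recs = ["Abbey Road", "Please Please Me"]
long_tail_recs = ["The DeBretts"]
print(novelty(beatles_recs, familiarity))    # 1.5
print(novelty(long_tail_recs, familiarity))  # 10.0
```

A shopping-oriented recommender can score well on sales while scoring poorly on a measure like this; a discovery-oriented one needs both.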

One factor contributing to the Novelty Problem is high-stakes evaluations like the Netflix Prize. The Netflix Prize is a competition that offers a million dollars to anyone who can improve the accuracy of the Netflix movie recommender by 10%. The evaluation is based on how well a recommender can predict how a viewer will rate a movie on a 1-5 star scale. This type of evaluation focuses on relevance. A recommender that can correctly predict that I’ll rate the movie ‘Titanic’ 2.2 stars instead of 2.0 stars may score well in this type of evaluation, but it probably hasn’t improved the quality of the recommendations: I won’t watch a 2.0 or a 2.2 star movie, so what does it matter? The downside of the Netflix Prize is that only one metric, relevance, is being used to drive the state of the art in recommendation, when there are other equally important metrics; novelty is one of them.
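
The Netflix Prize measured accuracy with root mean squared error (RMSE) on predicted ratings. The toy below uses invented ratings and a hypothetical second film (“Film X”) to show how a model can improve RMSE by getting a movie I’d never watch slightly more right, while its top recommendation stays exactly the same.

```python
import math


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual star ratings."""
    return math.sqrt(sum((predicted[m] - actual[m]) ** 2 for m in actual) / len(actual))


def top_pick(predicted):
    """The single movie the recommender would put first."""
    return max(predicted, key=predicted.get)


actual = {"Titanic": 2.2, "Film X": 5.0}     # hypothetical ratings
old_model = {"Titanic": 2.0, "Film X": 4.6}
new_model = {"Titanic": 2.2, "Film X": 4.6}  # only the unwatched movie improved

print(round(rmse(old_model, actual), 3), round(rmse(new_model, actual), 3))
print(top_pick(old_model), top_pick(new_model))
```

The new model wins on the metric, yet what I would actually be shown is unchanged.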

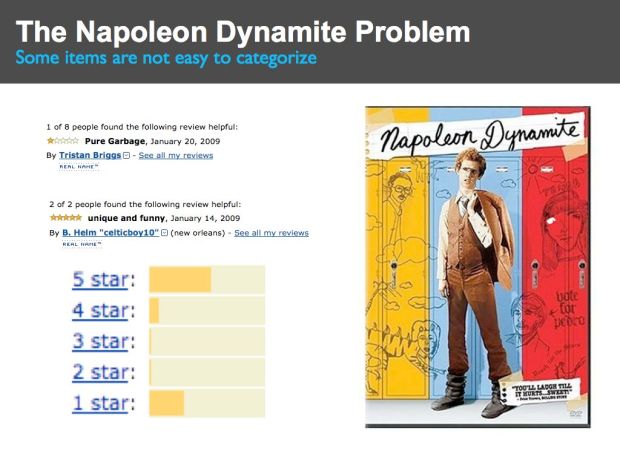

The Napoleon Dynamite Problem

Some items are not so easy to categorize. For instance, if you look at the ratings for the movie Napoleon Dynamite, you see a bimodal distribution of 5-star and 1-star ratings. People either love it or hate it, and it is hard to predict how an individual will react.
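
A quick calculation shows why a bimodal distribution is hard for a rating predictor. Assume, hypothetically, 50 one-star and 50 five-star ratings: the best single guess under squared error is the mean, 3 stars, which is a rating nobody actually gave, and it is off by 2 stars for every viewer.

```python
import math

ratings = [1] * 50 + [5] * 50  # hypothetical love-it-or-hate-it ratings

mean = sum(ratings) / len(ratings)  # the single prediction that minimizes squared error
rmse = math.sqrt(sum((r - mean) ** 2 for r in ratings) / len(ratings))

print(mean, rmse)       # 3.0 2.0
print(mean in ratings)  # False: no one rated it 3 stars
```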

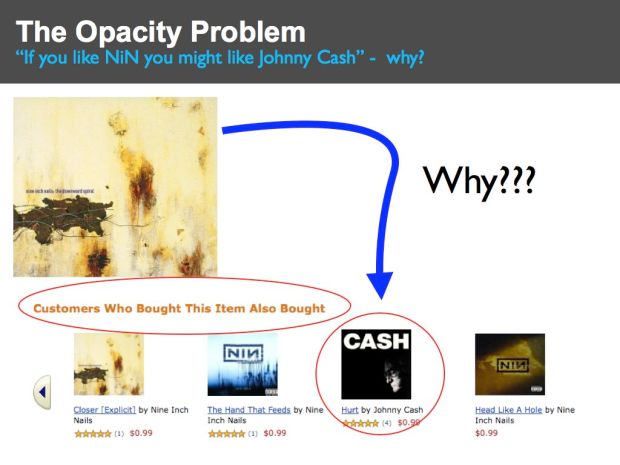

The Opacity Problem

Here’s an Amazon recommendation that suggests that if I like Nine Inch Nails, I might like Johnny Cash. Since NiN is an industrial band and Johnny Cash is a country/western singer, at first blush this seems like a bad recommendation, and if you didn’t know any better you might write it off as just another broken recommender. It would be really helpful if the CF recommender could explain why it is recommending Johnny Cash, but all it can really tell you is that ‘other people who listened to NiN also listened to Johnny Cash’, which isn’t very helpful. If the recommender could give a better explanation, perhaps something like “Johnny Cash has an absolutely stunning cover of the NiN song ‘Hurt’ that will make you cry”, then you would have a much better understanding of the recommendation. The explanation would turn what seems like a very bad recommendation into a phenomenal one: one that perhaps introduces you to a whole new genre of music, and that may have you listening to ‘Folsom Prison’ in a few weeks.
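
The limitation is structural: an item-to-item CF matrix stores only co-listening statistics, so that is all it can cite. A minimal sketch, assuming a hypothetical `explain` helper and a made-up 12% overlap value:

```python
def explain(overlap, seed, rec):
    """Build the only explanation a pure item-to-item CF matrix supports: the overlap statistic."""
    return f"{overlap[seed][rec]:.0%} of people who listen to {seed} also listen to {rec}."


overlap = {"Nine Inch Nails": {"Johnny Cash": 0.12}}  # hypothetical overlap value
print(explain(overlap, "Nine Inch Nails", "Johnny Cash"))
# 12% of people who listen to Nine Inch Nails also listen to Johnny Cash.
```

Nothing in that matrix knows about the cover of ‘Hurt’; an explanation like that needs information from outside the listening data.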

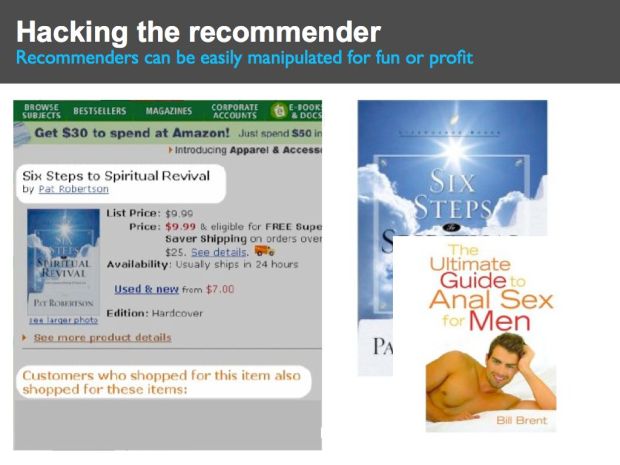

Hacking the Recommender

Here’s a recommendation based on a book called Six Steps to Spiritual Revival (courtesy of Bamshad Mobasher). The book is by notorious televangelist Pat Robertson and promises to reveal “God’s Awesome Power in your life.” Amazon’s recommendation suggests that customers who shopped for this item also shopped for ‘The Ultimate Guide to Anal Sex for Men’. Clearly this is not a good recommendation. It is the result of a loosely organized group who didn’t like Pat Robertson: they tricked the Amazon recommender into recommending a rather inappropriate book just by visiting the Amazon page for Robertson’s book and then visiting the Amazon page for the sex guide.
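
A toy ‘customers who shopped for this also shopped for’ counter shows why the prank worked. It assumes, hypothetically, that the recommender ranks items purely by how many browsing sessions visited both pages; the session counts and the “Devotional Title” book are invented, and `co_visits` and `also_shopped_for` are hypothetical helpers.

```python
from collections import Counter

ROBERTSON = "Six Steps to Spiritual Revival"
DEVOTIONAL = "Devotional Title"  # hypothetical related book
GUIDE = "The Ultimate Guide to Anal Sex for Men"


def co_visits(sessions):
    """Count, for every ordered pair of pages, how many sessions visited both."""
    counts = Counter()
    for pages in sessions:
        for a in pages:
            for b in pages:
                if a != b:
                    counts[(a, b)] += 1
    return counts


def also_shopped_for(counts, item):
    """The page most often co-visited with `item`."""
    candidates = {b: n for (a, b), n in counts.items() if a == item}
    return max(candidates, key=candidates.get)


organic = [{ROBERTSON, DEVOTIONAL}] * 3  # ordinary shoppers
prank = [{ROBERTSON, GUIDE}] * 10        # coordinated visits to both pages

print(also_shopped_for(co_visits(organic), ROBERTSON))          # Devotional Title
print(also_shopped_for(co_visits(organic + prank), ROBERTSON))  # The Ultimate Guide to Anal Sex for Men
```

Ten coordinated sessions outweigh three organic ones, and nothing in the counts distinguishes genuine interest from a deliberate visit.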

This manipulation of the Amazon recommender was easy to spot and can be classified as a prank, but it is not hard to imagine an artist or a label using similar techniques, in a more subtle fashion, to manipulate a recommender into promoting their tracks (or demoting the competition). We already live in a world where search engine optimization is an industry. It won’t be long before recommender engine optimization is an equally profitable (and destructive) industry.

Wrapping up

This is the first part of a two-part post. In this post I’ve highlighted some of the issues in traditional music recommendation. The next post is all about how to fix these problems. For an alternative view, be sure to visit Anthony Volodkin’s blog, where he presents a rather different viewpoint on music recommendation.

Help! My iPod thinks I’m emo.

Posted by Paul in Music, recommendation, research, The Echo Nest on March 23, 2009

Here are the slides for the panel “Help! My iPod thinks I’m emo.” that Anthony Volodkin (from The Hype Machine) and I gave at SXSW on music recommendation:

Collaborative Filtering and Diversity

Posted by Paul in recommendation, research, Uncategorized on March 23, 2009

One of the things Anthony and I talked about at our “Help! My iPod thinks I’m emo.” SXSW panel last week is the ‘Harry Potter Effect’: how popular items in a recommender can lead to (among other things) feedback loops in which the rich get richer. A popular item like the latest Coldplay or Metallica album gets purchased often with other albums and therefore ends up getting recommended more frequently, and because it gets recommended, it gets purchased more often, until it is sitting at the top of the charts. The Harry Potter effect can lower the diversity of items consumed.

In his post “Online Monoculture and the End of the Niche”, Tom Slee over at Whimsley has run a simulation that shows how this drop in diversity occurs, and he also explains the counterintuitive result that while a recommender can decrease diversity overall, it can increase diversity for an individual. Tom explains this with a metaphor: in the Internet world the customers see further, but they are all looking out from the same tall hilltop, while without a recommender, individual customers are standing on different, lower hilltops. They may not see as far individually, but more of the ground is visible to someone.
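
Tom’s point can be reproduced with a much cruder toy than his simulation (this is not his model, just an illustration under made-up assumptions): each customer buys one niche item of their own, and with a recommender every customer also buys the same hit. Individual diversity is measured as items per customer, and overall diversity as the Shannon entropy of the sales distribution; `toy_market` is a hypothetical helper.

```python
import math
from collections import Counter


def entropy(sales):
    """Shannon entropy (bits) of the sales distribution; lower means sales are more concentrated."""
    total = sum(sales.values())
    return -sum((n / total) * math.log2(n / total) for n in sales.values())


def toy_market(n_customers, with_recommender):
    """Return (items per customer, entropy of aggregate sales) for the toy market."""
    baskets = []
    for c in range(n_customers):
        basket = {f"niche-{c}"}  # each customer's own niche pick
        if with_recommender:
            basket.add("hit")    # everyone is steered to the same popular item
        baskets.append(basket)
    sales = Counter(item for basket in baskets for item in basket)
    per_customer = sum(len(b) for b in baskets) / n_customers
    return per_customer, entropy(sales)


print(toy_market(8, with_recommender=False))  # (1.0, 3.0)
print(toy_market(8, with_recommender=True))   # (2.0, 2.5)
```

Every basket gets bigger, yet half of all sales now go to a single item: that item is the shared hilltop in Tom’s metaphor.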

As an example of this effect, here’s a recommendation from Amazon that shows how 8% of those who shopped for The Big Penis Book went on to buy a Harry Potter book. A recommender that pushes those buying books about big penises toward Harry Potter may indeed increase the diversity of those individuals (they may never have considered Harry Potter before, because of all those penises), but it does lower the overall diversity of the community as a whole (everyone is buying Harry Potter).

It is an interesting post, with charts and graphs and a good comment thread. Worth a read. (Thanks for the tip Jeremy)

sxsw music discovery chaos?

Posted by Paul in Music, recommendation on March 18, 2009

The very last panel I attended at SXSW Interactive was a panel called “Music 2.0 = Music Discovery Chaos?” This was a roundtable discussion as opposed to a more traditional panel where ‘experts’ do most of the talking. Elliot and Sandy Hurst of Supernova.com guided a conversation about the state of music discovery.

To tell the truth, I had low expectations for this panel. These things often devolve into (a) discussions about business models, (b) people pimping their new sites, or (c) some self-proclaimed expert dominating the discussion. But instead of a trainwreck, this panel turned into one of my highlights of SXSW.

There was a wide range of people with a wide range of views that participated in the discussion. There were music fans (of course) that touted their favorite discovery mechanisms (friends, last.fm, hype machine). There were music critics who reminded us of the role of the expert in filtering music, but who also admitted that there’s just too much music for them to deal with, so they need their own filters. There were music programmers who talked about the different levels of listening adventurousness based on demographics (us old people apparently are less adventurous). And there was the gadfly in the back of the room, who wondered why we cared so much about this – he had no problems finding music – and if people want to listen to American Idol, so what?

Early on in the discussion, Elliot took a poll of the room that seemed to indicate that for many people, the primary way to find music was through friends. After this poll he asked me, “Since it seems that most people find new music through their friends, why do we need machines to help us find music?” I got to paraphrase the line from Mike McGuire: “music recommendation is for people that don’t have friends”. That got a bit of a laugh.

Of course, for a discussion like this, there’s never an ultimate agreement on anything. But it was fantastic to listen to the debate – especially by so many really smart people who are very passionate about music. Awesome panel! Good job Elliot and Sandy.