The ISMIR business meeting

Posted by Paul in events, ismir, music information retrieval, research on August 12, 2010

Notes from the ISMIR business meeting – this is a meeting with the board of ISMIR.

Officers

- President: J. Stephen Downie, University of Illinois at Urbana-Champaign, USA

- Treasurer: George Tzanetakis, University of Victoria, Canada

- Secretary: Jin Ha Lee, University of Illinois at Urbana-Champaign, USA

- President-elect: Tim Crawford, Goldsmiths College, University of London, UK

- Member-at-large: Doug Eck, University of Montreal, Canada

- Member-at-large: Masataka Goto, National Institute of Advanced Industrial Science and Technology, Japan

- Member-at-large: Meinard Mueller, Max-Planck-Institut für Informatik, Germany

Stephen reviewed the roles of the various officers and duties of the various committees. He reminded us that one does not need to be on the board to serve on a subcommittee.

Publication Issues

- website redesign

- Other communities hardly know about ISMIR. We want to help them become aware of our research. One way is to build more links to those communities, for example by joining their committees.

Hosting Issue – will formalize documentation, location planning, site selection.

Name change? There was a nifty debate around the meaning of ISMIR. There was a proposal to change it to ‘International Society for Music Informatics Research’. Given Doug’s comments about YouTube from this morning, I recommend that we change the name to ‘International Society for Movie Informatics Research’.

Review Process: Good discussion about the review process – we want paper bidding and double-blind reviews, which help avoid gender bias.

Doug snuck in the secret word ‘YouTube’ too, just for those hanging out on IRC.

MIR at Google: Strategies for Scaling to Large Music Datasets Using Ranking and Auditory Sparse-Code Representations

Posted by Paul in events, ismir, music information retrieval, research on August 12, 2010

MIR at Google: Strategies for Scaling to Large Music Datasets Using Ranking and Auditory Sparse-Code Representations

Douglas Eck (Google) (Invited speaker) – There’s no paper associated with this talk.

Machine Listening / Audio analysis – Dick Lyon and Samy Bengio

Main strength:

- Scalable algorithms

- When they do work, they use large sets (like all audio on YouTube, or all audio on the web)

- Sparse High dimensional Representations

- 15 numbers to describe a track

- Auditory / Cochlear Modeling

- Autotagging at YouTube

- Retrieval, annotation, ranking, recommendation

Collaboration Opportunities

- Faculty research awards

- Google visiting faculty program

- Student internships

- Google summer of code

- Research Infrastructure

The Future of MIR is already here

- The next generation of listeners is using YouTube – because of the on-demand nature

- YouTube – 2 billion views a day

- Content ID scans over 100 years of video every day

The bar is already set very high

- Current online recommendation is pretty good

- Doug wants to close the loop between music making and music listening

What would you like Google to give back to MIR?

A Roadmap Towards Versatile MIR

Posted by Paul in events, ismir, music information retrieval, research on August 12, 2010

A Roadmap Towards Versatile MIR

Emmanuel Vincent, Stanislaw A. Raczyński, Nobutaka Ono and Shigeki Sagayama

ABSTRACT – Most MIR systems are specifically designed for one application and one cultural context and suffer from the semantic gap between the data and the application. Advances in the theory of Bayesian language and information processing enable the vision of a versatile, meaningful and accurate MIR system integrating all levels of information. We propose a roadmap to collectively achieve this vision.

Emmanuel wants to increase the versatility of MIR systems across different types of music. Current systems adopt a fixed expert viewpoint (musicologist, musician) and have limited accuracy because they apply general pattern recognition techniques to a bag of features.

Emmanuel wants to build an overarching scalable MIR system that successfully deals with the challenge of scalable unsupervised methods and refocuses MIR on symbolic methods. This is the core roadmap of VERSAMUS.

The aim of VERSAMUS is to investigate, design and validate such representations in the framework of Bayesian data analysis, which provides a rigorous way of combining separate feature models in a modular fashion. Tasks to be addressed include the design of a versatile model structure, of a library of feature models and of efficient algorithms for parameter inference and model selection. Efforts will also be dedicated towards the development of a shared modular software platform and a shared corpus of multi-feature annotated music which will be reusable by both partners in the future and eventually disseminated.

Accurate Real-time Windowed Time Warping

Posted by Paul in events, ismir, music information retrieval, research on August 11, 2010

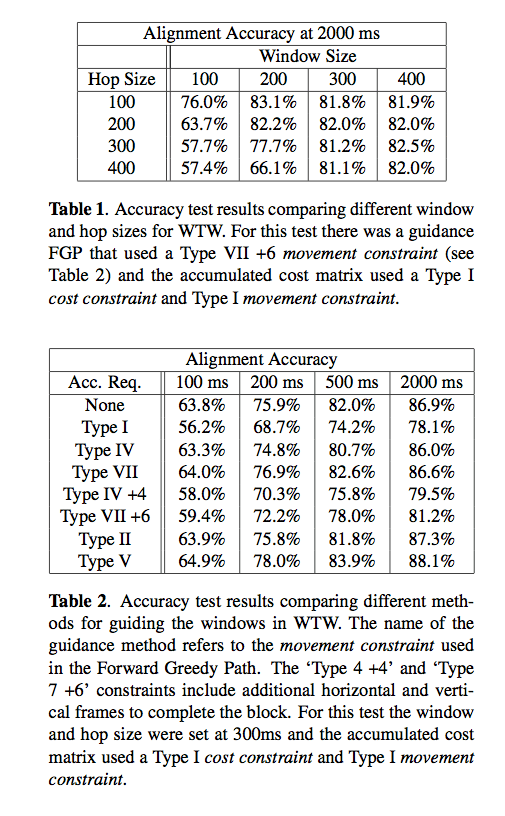

Accurate Real-time Windowed Time Warping

Robert Macrae and Simon Dixon

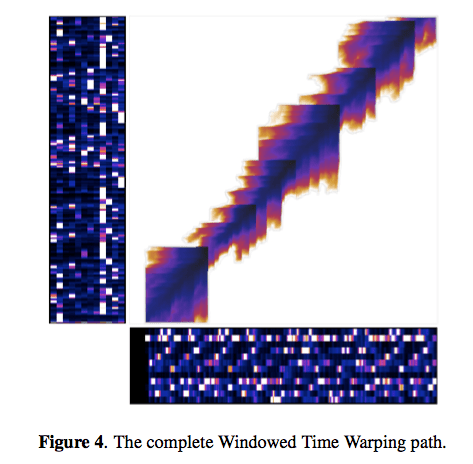

ABSTRACT – Dynamic Time Warping (DTW) is used to find alignments between two related streams of information and can be used to link data, recognise patterns or find similarities. Typically, DTW requires the complete series of both input streams in advance and has quadratic time and space requirements. As such DTW is unsuitable for real-time applications and is inefficient for aligning long sequences. We present Windowed Time Warping (WTW), a variation on DTW that, by dividing the path into a series of DTW windows and making use of path cost estimation, achieves alignments with an accuracy and efficiency superior to other leading modifications and with the capability of synchronising in real-time. We demonstrate this method in a score following application. Evaluation of the WTW score following system found 97.0% of audio note onsets were correctly aligned within 2000 ms of the known time. Results also show reductions in execution times over state-of-the-art efficient DTW modifications.

Idea: frame window features (sub-DTW frames). Each path can be calculated sequentially, so less history needs to be retained, which is important for performance.

Works in linear time like previous systems, but with the smaller history it can work entirely in memory, which avoids having to store the history on disk. Nice demo of real-time time warping.
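
For reference, here is a minimal sketch of classic DTW, the quadratic baseline that the abstract contrasts WTW against. It is not the authors' WTW algorithm: the function name `dtw`, the absolute-difference frame cost and the toy sequences are assumptions made for illustration. The full `cost` table built here is the history that a windowed approach avoids keeping.

```python
def dtw(a, b):
    """Classic DTW between two numeric sequences.

    Returns (total_cost, path), where path is a list of (index_in_a, index_in_b).
    Uses O(len(a) * len(b)) time and space.
    """
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # assumed frame distance
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])

    # Backtrack from the end of both sequences to recover the alignment path.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [
            (cost[i - 1][j - 1], i - 1, j - 1),  # advance both sequences
            (cost[i - 1][j], i - 1, j),          # advance a only
            (cost[i][j - 1], i, j - 1),          # advance b only
        ]
        _, i, j = min(steps)
    path.reverse()
    return cost[n][m], path


# Toy example: the second sequence holds the middle value for one extra frame.
total, path = dtw([1, 2, 3], [1, 2, 2, 3])
print(total, path)
```

Because every cell of the table depends on both complete inputs, this version cannot run while the audio is still arriving, which is the limitation the paper sets out to remove.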

Understanding Features and Distance Functions for Music Sequence Alignment

Posted by Paul in events, ismir, music information retrieval, research on August 11, 2010

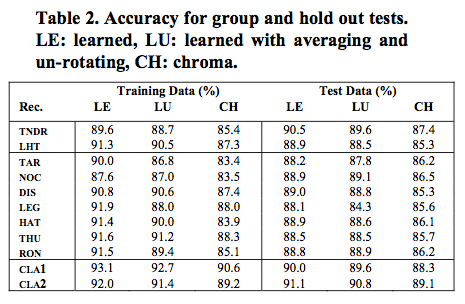

Understanding Features and Distance Functions for Music Sequence Alignment – Ozgur Izmirli and Roger Dannenberg

ABSTRACT – We investigate the problem of matching symbolic representations directly to audio based representations for applications that use data from both domains. One such application is score alignment, which aligns a sequence of frames based on features such as chroma vectors and distance functions such as Euclidean distance. Good representations are critical, yet current systems use ad hoc constructions such as the chromagram that have been shown to work quite well. We investigate ways to learn chromagram-like representations that optimize the classification of “matching” vs. “non-matching” frame pairs of audio and MIDI. New representations learned automatically from examples not only perform better than the chromagram representation but they also reveal interesting projection structures that differ distinctly from the traditional chromagram.

Roger and Ozgur present a method for learning features for score alignment. They replace the traditional chromagram feature with a feature that is a learned projection of the audio spectrum. Results show that the new features work better than chroma.
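
To make the baseline concrete, here is a small sketch of the hand-built approach the abstract describes: fold pitch energy into a 12-bin chroma vector and compare frames with Euclidean distance. It does not show the learned projection from the paper. The function names, the `{midi_pitch: energy}` input format and the example frames are all assumptions for illustration.

```python
import math


def fold_to_chroma(pitch_energy):
    """Fold a {midi_pitch: energy} mapping into a unit-length 12-bin chroma vector."""
    chroma = [0.0] * 12
    for pitch, energy in pitch_energy.items():
        chroma[pitch % 12] += energy  # octave information is discarded
    norm = math.sqrt(sum(c * c for c in chroma))
    return [c / norm for c in chroma] if norm > 0 else chroma


def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))


# Hypothetical frames: an audio frame with a C major triad (low C),
# a MIDI frame with the same chord an octave up, and a C# major MIDI frame.
audio_frame = fold_to_chroma({48: 1.0, 64: 1.0, 67: 1.0})
midi_match = fold_to_chroma({60: 1.0, 64: 1.0, 67: 1.0})
midi_other = fold_to_chroma({61: 1.0, 65: 1.0, 68: 1.0})

print(euclidean(audio_frame, midi_match))  # matching pair: small distance
print(euclidean(audio_frame, midi_other))  # non-matching pair: larger distance
```

The fixed `pitch % 12` folding is the ad hoc part. In the paper's framing, that fixed mapping is what gets swapped for a projection learned from matching and non-matching frame pairs.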

Predicting High-level Music Semantics Using Social Tags via Ontology-based Reasoning

Jun Wang, Xiaoou Chen, Yajie Hu and Tao Feng

ABSTRACT – High-level semantics such as “mood” and “usage” are very useful in music retrieval and recommendation but they are normally hard to acquire. Can we predict them from a cloud of social tags? We propose a semantic identification and reasoning method: Given a music taxonomy system, we map it to an ontology’s terminology, map its finite set of terms to the ontology’s assertional axioms, and then map tags to the closest conceptual level of the referenced terms in WordNet to enrich the knowledge base, then we predict richer high-level semantic information with a set of reasoning rules. We find this method predicts mood annotations for music with higher accuracy, as well as giving richer semantic association information, than alternative SVM-based methods do.

In this paper, the authors use WordNet to map social tags to a professional taxonomy and then use these for traditional tagging tasks such as classification and mood identification.

Learning Tags that Vary Within a Song

Posted by Paul in events, ismir, music information retrieval, research on August 11, 2010

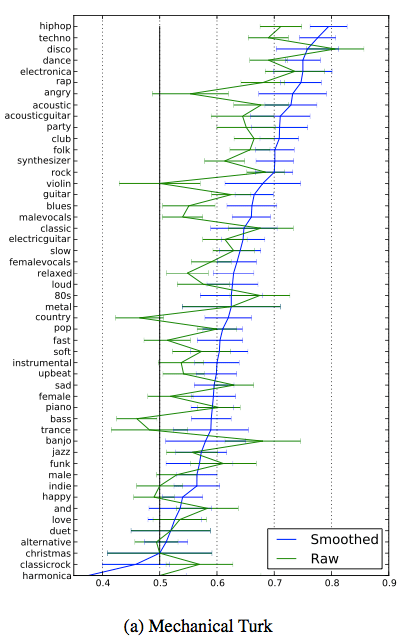

Learning Tags that Vary Within a Song

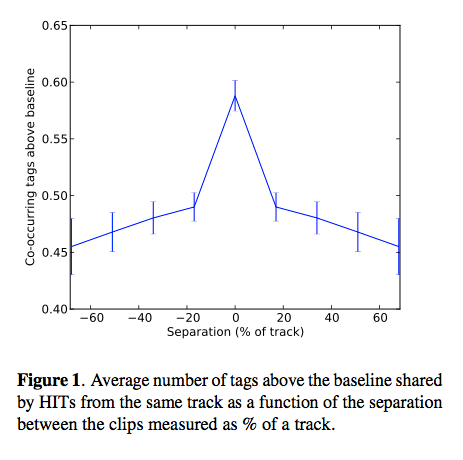

Michael I. Mandel, Douglas Eck and Yoshua Bengio

Abstract: This paper examines the relationship between human generated tags describing different parts of the same song. These tags were collected using Amazon’s Mechanical Turk service. We find that the agreement between different people’s tags decreases as the distance between the parts of a song that they heard increases. To model these tags and these relationships, we describe a conditional restricted Boltzmann machine. Using this model to fill in tags that should probably be present given a context of other tags, we train automatic tag classifiers (autotaggers) that outperform those trained on the original data.

Michael enlisted Amazon’s Mechanical Turk to tag music, paying about a penny per tag. About 11% of the tags were spam. He then looked at co-occurrence data that showed interesting patterns, especially as related to the distance between clips in a single track.
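
One simple way to picture the "agreement versus distance" analysis is to average a set-overlap score over all pairs of clips that are the same number of clips apart. The sketch below uses Jaccard overlap as a stand-in, since these notes do not say which agreement measure the paper uses. The function names and the toy tag sets are invented for illustration and are not data from the paper.

```python
def jaccard(tags_a, tags_b):
    """Overlap between two tag collections: |intersection| / |union|."""
    a, b = set(tags_a), set(tags_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def agreement_by_distance(clip_tags):
    """Mean Jaccard agreement for each clip distance.

    clip_tags: list of tag sets, one per consecutive clip of a single song.
    Returns {distance_in_clips: mean agreement}.
    """
    scores = {}
    for i in range(len(clip_tags)):
        for j in range(i + 1, len(clip_tags)):
            scores.setdefault(j - i, []).append(jaccard(clip_tags[i], clip_tags[j]))
    return {d: sum(v) / len(v) for d, v in scores.items()}


# Toy tags for four consecutive clips of one hypothetical song.
clips = [
    {"guitar", "calm"},
    {"guitar", "calm", "vocals"},
    {"guitar", "drums", "vocals"},
    {"drums", "loud"},
]
result = agreement_by_distance(clips)
for distance in sorted(result):
    print(distance, round(result[distance], 3))
```

On this toy input the mean agreement falls as the clips get further apart, which is the shape of the effect the abstract reports.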

Results show that smoothing the data with a Boltzmann machine tends to give better accuracy when tag data is sparse.

Sparse Multi-label Linear Embedding Within Nonnegative Tensor Factorization Applied to Music Tagging

Posted by Paul in events, ismir, music information retrieval, research on August 11, 2010

Sparse Multi-label Linear Embedding Within Nonnegative Tensor Factorization Applied to Music Tagging – Yannis Panagakis, Constantine Kotropoulos and Gonzalo R. Arce

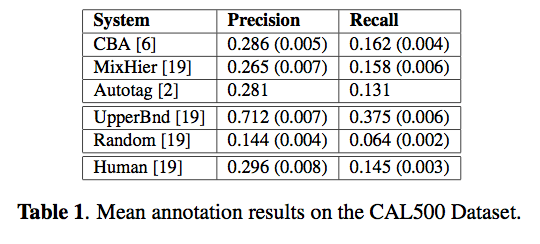

Abstract: A novel framework for music tagging is proposed. First, each music recording is represented by bio-inspired auditory temporal modulations. Then, a multilinear subspace learning algorithm based on sparse label coding is developed to effectively harness the multi-label information for dimensionality reduction. The proposed algorithm is referred to as Sparse Multi-label Linear Embedding Nonnegative Tensor Factorization, whose convergence to a stationary point is guaranteed. Finally, a recently proposed method is employed to propagate the multiple labels of training auditory temporal modulations to auditory temporal modulations extracted from a test music recording by means of the sparse l1 reconstruction coefficients. The overall framework, that is described here, outperforms both humans and state-of-the-art computer audition systems in the music tagging task, when applied to the CAL500 dataset.

This paper gets the ‘Title that rolls off the tongue best’ award. I don’t understand all of the math for this one, but some notes – the wavelet-based features used seem to be good at discriminating at the genre level. He compares the system to Doug Turnbull’s MixHier and to the system that we built at Sun Labs with Thierry, Doug, Francois and myself (Autotagger: A model for predicting social tags from acoustic features on Large Music Databases).

MIREX 2010

Posted by Paul in events, ismir, music information retrieval, research on August 11, 2010

Dr. Downie gave a summary of MIREX 2010 – the evaluation track for MIR (it is like TREC for MIR). Results are here: MIREX-2010 results

Matthias Mauch makes a case for improving chord recognition by making the MIREX tasks harder. He’d like to gently increase the difficulty.

APPROXIMATE NOTE TRANSCRIPTION FOR THE IMPROVED IDENTIFICATION OF DIFFICULT CHORDS

Posted by Paul in events, ismir, music information retrieval, research on August 10, 2010

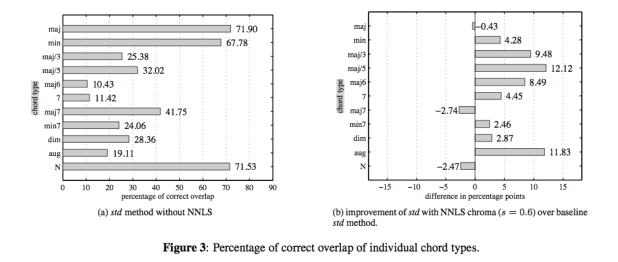

APPROXIMATE NOTE TRANSCRIPTION FOR THE IMPROVED IDENTIFICATION OF DIFFICULT CHORDS – Matthias Mauch and Simon Dixon

This is a new chroma extraction method using a non-negative least squares (NNLS) algorithm for prior approximate note transcription. Twelve different chroma methods were tested for chord transcription accuracy on popular music, using an existing high-level probabilistic model. The NNLS chroma features achieved top results of 80% accuracy that significantly exceed the state of the art by a large margin.

We have shown that the positive influence of the approximate transcription is particularly strong on chords whose harmonic structure causes ambiguities, and whose identification is therefore difficult in approaches without prior approximate transcription. The identification of these difficult chord types was substantially increased by up to twelve percentage points in the methods using NNLS transcription.
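
The idea of approximate transcription by non-negative least squares can be sketched in a few lines: given a spectrum `y` and a dictionary `E` of note templates, find non-negative note activations `x` that minimise the squared error between `E x` and `y`. The sketch below is an assumption-laden illustration, not the authors' implementation. It uses plain projected gradient descent rather than a dedicated NNLS solver, and the templates, the function name and the six-bin "spectrum" are made up.

```python
def nnls_projected_gradient(templates, observed, iterations=500):
    """Minimise ||E x - y||^2 subject to x >= 0 by projected gradient descent.

    templates: list of note templates (one list of spectral-bin weights per note),
               i.e. the columns of E. Assumed to contain at least one non-zero weight.
    observed:  the spectrum y, with the same number of bins as each template.
    """
    n_notes = len(templates)
    n_bins = len(observed)
    # Safe step size: 1 / (squared Frobenius norm of E), an upper bound on curvature.
    step = 1.0 / sum(w * w for t in templates for w in t)
    x = [0.0] * n_notes
    for _ in range(iterations):
        # residual = E x - y
        residual = [
            sum(x[k] * templates[k][b] for k in range(n_notes)) - observed[b]
            for b in range(n_bins)
        ]
        # gradient of 0.5 * ||residual||^2 with respect to x is E^T residual
        grad = [
            sum(templates[k][b] * residual[b] for b in range(n_bins))
            for k in range(n_notes)
        ]
        # Gradient step, then clamp negatives to zero (the projection).
        x = [max(0.0, x[k] - step * grad[k]) for k in range(n_notes)]
    return x


# Hypothetical templates: each note has a fundamental plus a half-strength overtone
# two bins higher, so note 0's overtone lands on note 2's fundamental.
templates = [
    [1.0, 0.0, 0.5, 0.0, 0.0, 0.0],  # note 0
    [0.0, 1.0, 0.0, 0.5, 0.0, 0.0],  # note 1
    [0.0, 0.0, 1.0, 0.0, 0.5, 0.0],  # note 2
]
# Spectrum produced by note 0 at full strength and note 1 at half strength.
observed = [1.0, 0.5, 0.5, 0.25, 0.0, 0.0]

activations = nnls_projected_gradient(templates, observed)
print([round(a, 3) for a in activations])
```

In this toy case the energy in bin 2 is explained as an overtone of note 0 rather than as a separate note 2. That kind of disambiguation is presumably what helps with the harmonically ambiguous chords mentioned above. Folding the resulting activations by pitch class would then give a chroma vector.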

Matthias is an enthusiastic presenter who did not hesitate to jump onto the piano to demonstrate ‘difficult chords’. Very nice presentation.