Archive for category data

Reidentification of artists and genres in the KDD cup data

Posted by Paul in data, recommendation, research on June 21, 2011

Back in February I wrote a post about the KDD Cup (an annual Data Mining and Knowledge Discovery competition), asking whether this year’s cup was really music recommendation since all the data identifying the music had been anonymized. The post received a number of really interesting comments about the nature of recommendation and whether context and content were really necessary for music recommendation, or whether user behavior was all you really needed. A few commenters suggested that it might be possible to de-anonymize the data using a constraint propagation technique.

Many voiced the opinion that de-anonymizing the data to expose user listening habits would indeed be unethical. Malcolm Slaney, the researcher at Yahoo! who prepared the dataset, offered this plea:

If you do de-anonymize the data please don’t tell anybody. We’ll NEVER be able to release data again.

As far as I know, no one has de-anonymized the KDD Cup dataset. However, researcher Matthew J. H. Rattigan of the University of Massachusetts at Amherst has done the next best thing. He has published a paper called Reidentification of artists and genres in the KDD cup data that shows that, by analyzing the relational structures within the dataset, it is possible to identify the artists, albums, tracks and genres that are used in the anonymized dataset. Here’s an excerpt from the paper that gives an intuitive description of the approach:

For example, consider Artist 197656 from the Track 1 data. This artist has eight albums described by different combinations of ten genres. Each album is associated with several tracks, with track counts ranging from 1 to 69. We make the assumption that these albums and tracks were sampled without replacement from the discography of some real artist on the Yahoo! Music website. Furthermore, we assume that the connections between genres and albums are not sampled; that is, if an album in the KDD Cup dataset is attached to three genres, its real-world counterpart has exactly three genres (or “Categories”, as they are known on the Yahoo! Music site).

Under the above assumptions, we can compare the unlabeled KDD Cup artist with real-world Yahoo! Music artists in order to find a suitable match. The band Fischer Z, for example, is an unsuitable match, as their online discography only contains seven albums. An artist such as Meatloaf certainly has enough albums (56) to be a match, but none of those albums contain more than 31 tracks. The entry for Elvis Presley contains 109 albums, 17 of which boast 69 or more tracks; however, there is no consistent assignment of genres that satisfies our assumptions. The band Tool, however, is compatible with Artist 197656. The Tool discography contains 19 albums containing between 0 and 69 tracks. These albums are described by exactly 10 genres, which can be assigned to the unlabeled KDD Cup genres in a consistent manner. Furthermore, the match is unique: of the 134k artists in our labeled dataset, Tool is the only suitable match for Artist 197656.
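The compatibility test in the excerpt can be sketched roughly as follows. This is only an illustration of the idea, not the paper's method: it checks album counts, track counts and the number of genres per album, and it leaves out the harder requirement that genre labels be assigned consistently across albums. The function name and the toy discographies are hypothetical.

```python
from typing import List, Tuple

# Each album is summarized as (track_count, genre_count).
Album = Tuple[int, int]

def is_compatible(anon: List[Album], real: List[Album]) -> bool:
    """True if every anonymized album can be paired with a distinct real
    album that has at least as many tracks (tracks were sampled) and exactly
    the same number of genres (genre links were assumed not to be sampled)."""
    if len(anon) > len(real):
        return False
    # Try the largest anonymized albums first; they have the fewest options.
    anon_sorted = sorted(anon, reverse=True)
    used = [False] * len(real)

    def place(i: int) -> bool:
        if i == len(anon_sorted):
            return True
        tracks, genres = anon_sorted[i]
        for j, (real_tracks, real_genres) in enumerate(real):
            if not used[j] and real_tracks >= tracks and real_genres == genres:
                used[j] = True
                if place(i + 1):
                    return True
                used[j] = False  # backtrack and try another real album
        return False

    return place(0)

# Hypothetical toy data, not taken from the paper or from Yahoo! Music.
anon_artist = [(69, 3), (1, 2)]
candidate_a = [(70, 3), (12, 2), (5, 1)]   # enough albums and tracks
candidate_b = [(31, 3), (12, 2)]           # no album with 69 or more tracks
print(is_compatible(anon_artist, candidate_a))
print(is_compatible(anon_artist, candidate_b))
```

Running a check like this over every labeled artist and keeping the survivors gives the candidate set; the paper's uniqueness claim corresponds to exactly one survivor.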

Of course it is impossible for Matthew to evaluate his results directly, but he did create a number of synthetic, anonymized datasets drawn from Yahoo! and was able to demonstrate very high accuracy for the top artists and a 62% overall accuracy.

The motivation for this type of work is not to turn the KDD cup dataset into something that music recommendation researchers could use, but instead to get a better understanding of data privacy issues. By understanding how large datasets can be de-anonymized, it will be easier for researchers in the future to create datasets that won’t easily yield their hidden secrets. The paper is an interesting read – so since you are done doing all of your reviews for RecSys and ISMIR, go ahead and give it a read: https://www.cs.umass.edu/publication/docs/2011/UM-CS-2011-021.pdf. Thanks to @ocelma for the tip.

Finding music with pictures: Data visualization for discovery

Posted by Paul in data, Music, visualization on March 13, 2011

I just finished giving my talk at SXSW called ‘Finding Music with Pictures’. A few people asked for the slides – I’ve posted them to Slideshare. Of course all the audio and video is gone, but you can follow the links to see the vids. Here are the slides:

Lots of good tweets from the audience. And Hugh Garry has Storify’d the talk.

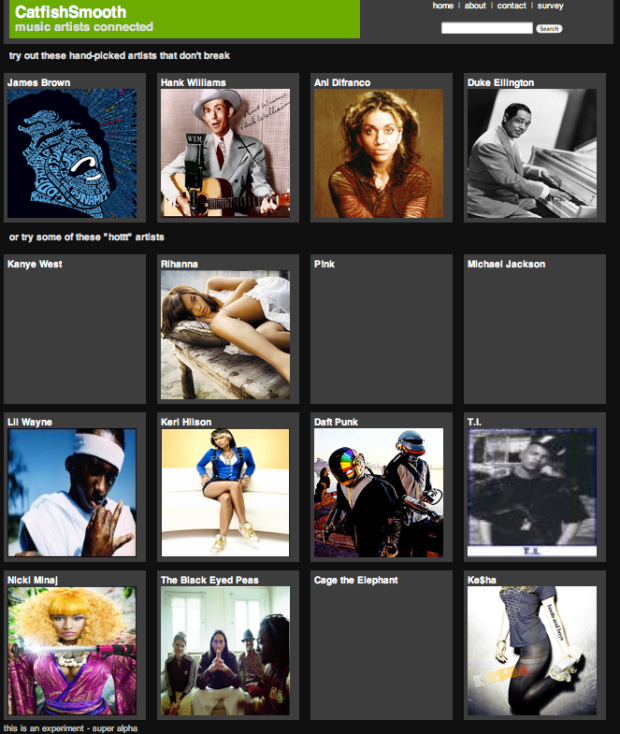

catfish smooth

Posted by Paul in data, Music, recommendation, research on January 20, 2011

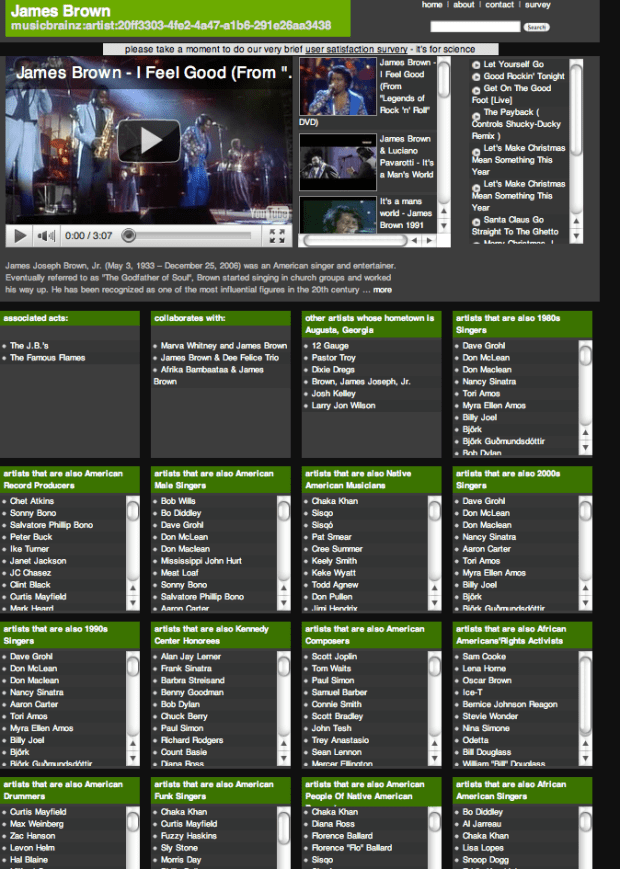

Kurt Jacobson is a recent addition to the staff here at The Echo Nest. Kurt has built a music exploration site called catfish smooth that allows you to explore the connections between artists. Kurt describes it as: all about connections between music artists. In a sense, it is a music artist recommendation system but more. For each artist, you will see the type of “similar artist” recommendations to which you are accustomed – we use last.fm and The Echo Nest to get these. But you will also see some other inter-artist connections catfish has discovered from the web of linked data. These include things like “artists that are also English Male Singers” or “artists that are also Converts To Islam” or “artists that are also People From St.Louis, Missouri”. And, hopefully, you’ll get some media for each artist so you can have a listen.

It’s a really interesting way to explore the music space, allowing you to stumble upon new artists based on a wide range of parameters.

For example take a look at the many categories and connections catfish smooth exposes for James Brown.

Kurt is currently conducting a usability survey for catfish smooth, so take a minute to kick the tires and then help Kurt finish his PhD and take the survey.

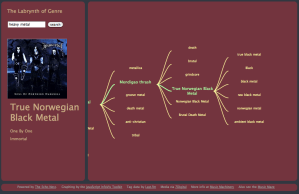

The Labyrinth of Genre

Posted by Paul in code, data, tags, The Echo Nest, visualization on January 16, 2011

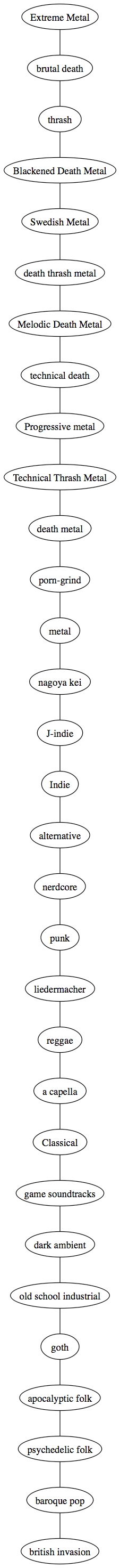

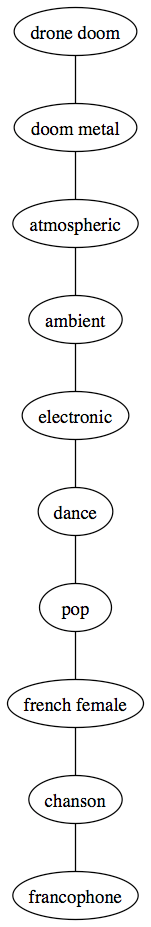

I’m fascinated with how music genres relate to each other, especially how one can use different genres as stepping stones to guide you through the vast complexities of music. There are thousands of genres: some, like rock or pop, represent thousands of artists, while others, like Celtic Metal or Humppa, may represent only a handful. Building a map by hand that represents the relationships of all of these genres is a challenge. Is Thrash Metal more closely related to Speed Metal or to Power Metal? To sort this all out I’ve built a Labyrinth of Genre that lets you explore the many genres. The Labyrinth lets you wander through about 1,000 genres, listening to samples from representative artists.

Click on a genre and the labyrinth will be expanded to show half a dozen similar genres, and you’ll hear songs in the genre.

I built the labyrinth by analyzing a large collection of last.fm tags. I used the cosine distance of tf-idf weighted tagged artists as a distance metric for tags. When you click on a node, I attach the six closest tags that haven’t already been attached to the graph. I then use the Echo Nest APIs to get all the media.
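A minimal sketch of that expansion step might look like the following. The post does not say exactly how the tf-idf weights were computed, so the weighting here (raw tag count times the log of the inverse fraction of tags an artist appears under) is an assumption, as are the function names and the toy counts.

```python
import math
from collections import defaultdict

def tfidf_vectors(tag_counts):
    """tag_counts maps tag -> {artist: raw count}.
    Returns tag -> {artist: tf-idf weight} (weighting scheme is an assumption)."""
    n_tags = len(tag_counts)
    # For each artist, count how many tags it appears under.
    df = defaultdict(int)
    for artists in tag_counts.values():
        for artist in artists:
            df[artist] += 1
    return {
        tag: {a: c * math.log(n_tags / df[a]) for a, c in artists.items()}
        for tag, artists in tag_counts.items()
    }

def cosine_distance(u, v):
    """1 - cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v[a] for a, w in u.items() if a in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 1.0
    return 1.0 - dot / (norm_u * norm_v)

def expand(tag, vectors, in_graph, k=6):
    """Return the k closest tags to `tag` that are not already in the graph."""
    candidates = [t for t in vectors if t not in in_graph and t != tag]
    candidates.sort(key=lambda t: cosine_distance(vectors[tag], vectors[t]))
    return candidates[:k]

# Hypothetical toy counts, not real Last.fm data.
toy = {
    "thrash metal": {"Slayer": 50, "Metallica": 40},
    "speed metal": {"Slayer": 20, "Metallica": 10, "Helloween": 5},
    "humppa": {"Eläkeläiset": 30},
}
vectors = tfidf_vectors(toy)
print(expand("thrash metal", vectors, in_graph={"thrash metal"}, k=1))
```

Each click would call something like `expand` for the clicked node and then add the returned tags to `in_graph`, so no genre is attached twice.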

Even though it’s a pretty simple algorithm, it is quite effective in grouping similar genres. If you are interested in wandering around a maze of music, give the Labyrinth of Genre a try.

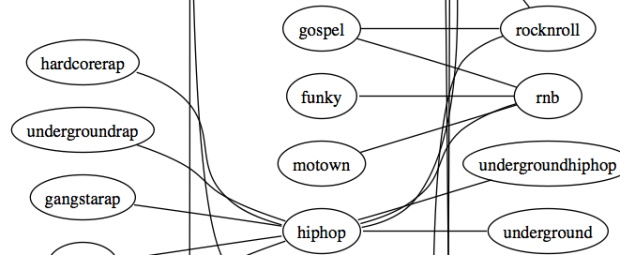

A Genre Map

Inspired by an email exchange with Samuel Richardson, creator of ‘Know your genre’, I created a genre map that might serve as a basis for a visual music explorer (perhaps something to build at one of the upcoming music hack days). The map is big and beautiful (in a geeky way). Here’s an excerpt; click on it to see the whole thing.

Update – I’ve made an interactive exploration tool that lets you wander through the genre graph. See the Labyrinth of Genre.

Update 2 – Colin asked the question “What’s the longest path between two genres?” – If I build the graph by using the 12 nearest neighbors to each genre, find the minimum spanning tree for that graph and then find the longest path, I find this 31 step wonder:

Of course there are lots of ways to skin this cat – if I build the graph with just the nearest 6 neighbors, and don’t extract the minimum spanning tree, the longest path through the graph is 10 steps:
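For the spanning-tree variant, the "find the longest path" step can be sketched with two breadth-first searches, a standard way to find the longest path in an unweighted tree. The sketch assumes the nearest-neighbor graph and its minimum spanning tree have already been built and are given as an adjacency dict; it does not apply to the second variant, where the graph is not a tree. The genre names in the toy tree are placeholders.

```python
from collections import deque

def farthest(tree, start):
    """Breadth-first search from `start`.
    Returns (farthest node, path from start to that node)."""
    parent = {start: None}
    queue = deque([start])
    last = start
    while queue:
        last = queue.popleft()  # the final node popped is at maximum depth
        for nxt in tree[last]:
            if nxt not in parent:
                parent[nxt] = last
                queue.append(nxt)
    path = []
    node = last
    while node is not None:
        path.append(node)
        node = parent[node]
    return last, path[::-1]

def longest_path(tree):
    """Longest path in an unweighted tree: BFS from any node to find one
    end of the path, then BFS again from that end."""
    any_node = next(iter(tree))
    end, _ = farthest(tree, any_node)
    _, path = farthest(tree, end)
    return path

# Hypothetical toy tree (adjacency lists), standing in for the genre MST.
tree = {
    "a": ["b"],
    "b": ["a", "c", "d"],
    "c": ["b"],
    "d": ["b", "e"],
    "e": ["d"],
}
path = longest_path(tree)
print(len(path) - 1, "steps:", " -> ".join(path))
```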

LastFM-ArtistTags2007

A few years back I created a data set of social tags from Last.fm. RJ at Last.fm graciously gave permission for me to distribute the dataset for research use. I hosted the dataset on the media server at Sun Labs. However, with the Oracle acquisition, the media server is no longer serving up the data, so I thought I would post the data elsewhere.

The dataset is now available for download here: Lastfm-ArtistTags2007

Here are the details as told in the README file:

The LastFM-ArtistTags2007 Data set

Version 1.0

June 2008

What is this?

This is a set of artist tag data collected from Last.fm using the Audioscrobbler webservice during the spring of 2007.

The data consists of the raw tag counts for the 100 most frequently occurring tags that Last.fm listeners have applied to over 20,000 artists.

An undocumented (and deprecated) option of the audioscrobbler web service was used to bypass the Last.fm normalization of tag counts. This data set provides raw tag counts.

Data Format:

The data is formatted one entry per line as follows:

musicbrainz-artist-id<sep>artist-name<sep>tag-name<sep>raw-tag-count

Example:

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>american<sep>14

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>animals<sep>5

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>art punk<sep>21

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>art rock<sep>18

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>atmospheric<sep>4

11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>avantgarde<sep>3

Data Statistics:

Total Lines: 952810

Unique Artists: 20907

Unique Tags: 100784

Total Tags: 7178442

Filtering:

Some minor filtering has been applied to the tag data. Last.fm will report tags with counts of zero or less on occasion. These tags have been removed.

Artists with no tags have not been included in this data set. Of the nearly quarter million artists that were inspected, 20,907 artists had 1 or more tags.

Files:

ArtistTags.dat - the tag data

README.txt - this file

artists.txt - artists ordered by tag count

tags.txt - tags ordered by tag count

License:

The data in LastFM-ArtistTags2007 is distributed with permission of Last.fm. The data is made available for non-commercial use only under the Creative Commons Attribution-NonCommercial-ShareAlike UK License. Those interested in using the data or web services in a commercial context should contact partners at last dot fm. For more information see http://www.audioscrobbler.net/data/

Acknowledgements:

Thanks to Last.fm for providing the access to this tag data via their web services.

Contact:

This data was collected and filtered by Paul Lamere of The Echo Nest. Send questions or comments to Paul.Lamere@gmail.com
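The record format described in the README can be read with a few lines of Python. This sketch assumes the separator is the literal string `<sep>`, exactly as shown in the README example; if the actual file uses a different delimiter, change `SEP`. The function names are illustrative only.

```python
from collections import defaultdict

SEP = "<sep>"  # assumption: the literal separator shown in the README example

def parse_line(line):
    """Split one record into (musicbrainz_id, artist, tag, raw_count)."""
    mbid, artist, tag, count = line.rstrip("\n").split(SEP)
    return mbid, artist, tag, int(count)

def tags_by_artist(lines):
    """Build artist -> {tag: raw count} from an iterable of records."""
    result = defaultdict(dict)
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        _, artist, tag, count = parse_line(line)
        result[artist][tag] = count
    return result

# Two records copied from the README example.
sample = [
    "11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>art punk<sep>21",
    "11eabe0c-2638-4808-92f9-1dbd9c453429<sep>Deerhoof<sep>art rock<sep>18",
]
print(dict(tags_by_artist(sample)))
```

For the full file, the same function can be fed an open file handle, for example `tags_by_artist(open("ArtistTags.dat", encoding="utf-8"))`; the encoding is a guess.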

What’s the TTKP?

Posted by Paul in data, fun, Music, web services on November 9, 2010

Whenever Jennie and I are in the car together, we listen to the local Top-40 radio station (KISS 108). One Top-40 artist that I can recognize reliably is Katy Perry. It seems like we can’t drive very far before we are listening to Teenage Dream, Firework or California Gurls. That got me wondering what the average Time To Katy Perry (TTKP) was on the station and how it compared to other radio stations. So I fired up my Python interpreter and wrote some code to pull the data from the fabulous YES API and answer this very important question. With the YES API I can get the timestamped song plays for a station for the last 7 days. I gathered this data from WXKS (Kiss 108) and did some calculations to come up with these numbers:

- Total songs played per week: 1,336

- Total unique songs: 184

- Total unique artists: 107

- Average songs per hour: 7

- Number of Katy Perry plays: 76

- Median time between Katy Perry songs: 1 hour 18 minutes

A listener who tunes in at a random moment lands, on average, halfway through one of those gaps, so the average Time to Katy Perry is about 39 minutes.
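The calculation can be sketched as follows, assuming the play times for one artist have already been pulled out as `datetime` objects. The YES API calls are not shown, and the function name and sample timestamps are hypothetical. Halving the median gap mirrors the numbers in this post; it is a rough estimate rather than an exact expected waiting time.

```python
from datetime import datetime
from statistics import median

def time_to_artist(play_times):
    """Return (median gap, estimated wait) in minutes for one artist's plays.
    The estimated wait is half the median gap between consecutive plays."""
    times = sorted(play_times)
    gaps = [(b - a).total_seconds() / 60 for a, b in zip(times, times[1:])]
    median_gap = median(gaps)
    return median_gap, median_gap / 2

# Hypothetical timestamps, spaced 78 minutes apart.
plays = [
    datetime(2010, 11, 8, 8, 0),
    datetime(2010, 11, 8, 9, 18),
    datetime(2010, 11, 8, 10, 36),
    datetime(2010, 11, 8, 11, 54),
]
gap, ttkp = time_to_artist(plays)
print(f"median gap {gap:.0f} min, TTKP {ttkp:.0f} min")
```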

Katy Perry is only the fourth most played artist on KISS 108. Here are the stats for the top 10:

| Artist | Plays | Median time between plays | Average time to next play |
|---|---|---|---|
| Taio Cruz | 84 | 1:07 | 0:34 |
| Rihanna | 80 | 1:27 | 0:44 |
| Usher | 79 | 1:20 | 0:40 |
| Katy Perry | 76 | 1:18 | 0:39 |
| Bruno Mars | 73 | 1:30 | 0:45 |
| Nelly | 56 | 1:44 | 0:52 |
| Mike Posner | 56 | 1:57 | 0:59 |
| Pink | 47 | 2:20 | 1:10 |
| Lady Gaga | 47 | 1:59 | 1:00 |
| Taylor Swift | 41 | 2:17 | 1:09 |

I took a look at some of the other top-40 stations around the country to see which has the lowest TTKP:

| Station | Songs Per Hour | TTKP |
|---|---|---|
| KIIS – LA’s #1 hit music station | 8 | 39 mins |
| WHTZ – New York’s #1 hit music station | 9 | 48 mins |
| WXKS – Boston’s #1 hit music station | 7 | 39 mins |
| WSTR – Atlanta – Always #1 for Today’s Hit Music | 8 | 38 mins |
| KAMP – 97.1 Amp Radio – Los Angeles | 11 | 38 mins |
| KCHZ – 95.7 – The Beat of Kansas City | 11 | 32 mins |
| WFLZ – 93.3 – Tampa Bay’s Hit Music channel | 9 | 39 mins |
| KREV – 92.7 – The Revolution – San Francisco | 11 | 36 mins |

So, no matter where you are, if you have a radio, you can tune into the local top-40 radio station, and you’ll need to wait, on average, only about 40 minutes until a Katy Perry song comes on. Good to know.

Visual Music

Posted by Paul in code, data, events, fun, Music, The Echo Nest, visualization on July 28, 2010

The week-long Visual Music Collaborative Workshop held at Eyebeam just finished up. This was an invite-only event where participants did a deep dive into sound analysis techniques, OpenGL programming, and interfacing with mobile control devices.

Here’s one project built during the week that uses The Echo Nest analysis output:

(Via Aaron Meyers)

Novelty playlist ordering

We’ve been building a new playlisting engine here at the Echo Nest. The engine is really neat – it lets you apply a whole range of very flexible constraints and orderings to make all sorts of playlists that would be a challenge for even the most savvy DJ. Playlists like 15 songs with a tempo between 120 and 130 BPM ordered by how danceable they are by very popular female artists that sound similar to Lady Gaga, that live near London, but never ever include tracks by The Spice Girls.

I was playing with the engine this weekend, writing some rules to make novelty playlists to test the limits of the engine. I started with rules typical for a similar-artist playlist: 15 songs long, filled with songs by artists similar to a seed artist (in this case Weezer), the first and last song must be by the seed artist, and no two consecutive songs can be by the same artist. Simple enough, but then I added two more rules to turn this into a novelty playlist that would be very hard for a human to make. See if you can guess what the two rules are. I think one of the rules is pretty obvious, but the second is a bit more subtle. Post your guesses in the comments.

0 Tripping Down the Freeway - Weezer

1 Yer All I've Got Ttonight - The Smashing Pumpkins

2 The Most Beautiful Things - Jimmy Eat World

3 Someday You Will Be Loved - Death Cab For Cutie

4 Don't Make Me Prove It - Veruca Salt

5 The Sacred And Profane - Smashing Pumpkins, The

6 Everything Is Alright - Motion City Soundtrack

7 The Ego's Last Stand - The Flaming Lips

8 Don't Believe A Word - Third Eye Blind

9 Don's Gone Columbia - Teenage Fanclub

10 Alone + Easy Target - Foo Fighters

11 The Houses Of Roofs - Biffy Clyro

12 Santa Has a Mullet - Nerf Herder

13 Turtleneck Coverup - Ozma

14 Perfect Situation - Weezer

Here’s another playlist – with a different set of two novelty rules, with a seed artist of Led Zeppelin. Again, if you can guess the rules, post a comment.

0 El Niño - Jethro Tull

1 Cheater - Uriah Heep

2 Hot Dog - Led Zeppelin

3 One Thing - Lynyrd Skynyrd

4 Nightmare - Black Sabbath

5 Ezy Ryder - The Jimi Hendrix Experience

6 Soulshine - Govt Mule

7 The Gypsy - Deep Purple

8 I'll Wait - Van Halen

9 Slow Down - Ozzy Osbourne

10 Civil War - Guns N' Roses

11 One Rainy Wish - Jimi Hendrix

12 Overture (Live) - Grand Funk Railroad

13 Larger Than Life - Gov'T Mule

The Name Dropper

Posted by Paul in data, fun, Music, The Echo Nest, web services on July 10, 2010

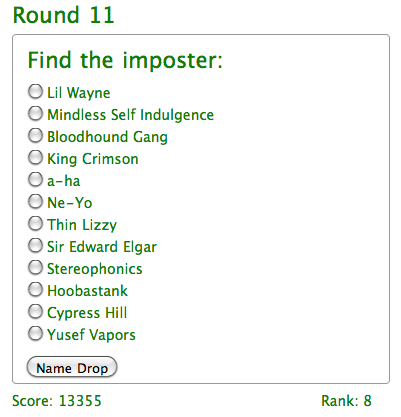

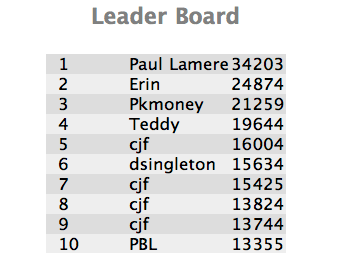

TL;DR; I built a game called Name Dropper that tests your knowledge of music artists.

One bit of data that we provide via our web APIs is Artist Familiarity. This is a number between 0 and 1 that indicates how likely it is that someone has heard of that artist. There’s no absolute right answer of course – who can really tell if Lady Gaga is more well known than Barbra Streisand or whether Elvis is more well known than Madonna. But we can certainly say that The Beatles are more well known, in general, than Justin Bieber.

To make sure our familiarity scores are good, we have a Q/A process where a person knowledgeable in music ranks our familiarity score by scanning through a list of artists ordered in descending familiarity until they start finding artists that they don’t recognize. The further they get into the list, the better the list is. We can use this scoring technique to rank multiple different familiarity algorithms quickly and accurately.

One thing I noticed is that not only could we tell how good our familiarity score was with this technique, it also gives a good indication of how well the tester knows music. The further a tester gets into a list before they can’t recognize artists, the more they tend to know about music. This insight led me to create a new game: The Name Dropper.

The Name Dropper is a simple game. You are presented with a list of a dozen artist names. One name is a fake, the rest are real.

If you find the fake, you go on to the next round, but if you get fooled, the game is over. At first, it is pretty easy to spot the fakes, but each round gets a little harder, and sooner or later you’ll reach the point where you are not sure, and you’ll have to guess. I think a person’s score is fairly representative of how broad their knowledge of music artists is.

The biggest technical challenge in building the application was coming up with a credible fake artist name generator. I could have used Brian’s list of fake names – but it was more fun trying to build one myself. I think it works pretty well. I really can’t share how it works since that could give folks a hint as to what a fake name might look like and skew scores (I’m sure it helps boost my own scores by a few points). The really nifty thing about this game is it is a game-with-a-purpose. With this game I can collect all sorts of data about artist familiarity and use the data to help improve our algorithms.

So go ahead, give the Name Dropper a try and see if you can push me out of the top spot on the leaderboard:

Play the Name Dropper