Posts Tagged remix

Rearranging the Machine

Posted by Paul in Music, remix, The Echo Nest on January 5, 2010

Last month I used Echo Nest remix to rearrange a Nickelback song (see From Nickelback to Bickelnack) by replacing each sound segment with another similar-sounding segment. Since Nickelback is notorious for their self-similarity, a few commenters suggested that I try the remix with a different artist to see if the musicality stems from the remix algorithm or from Nickelback’s self-similarity. I also had a few tweaks to the algorithm that I wanted to try out, so I gave it a go. Instead of remixing Nickelback, I remixed the best-selling Christmas song of 2009: Rage Against The Machine’s ‘Killing in the Name’.

Here’s the remix using the exact same algorithm that was used to make the Bickelnack remix:

Like the Bickelnack remix – this remix is still rather musical. (Source for this remix is here: vafroma.py)

A true shuffle: One thing that is a bit unsatisfying about this algorithm is that it is not a true reshuffling of the input. Since the remix algorithm looks for the nearest match, it is possible for a single segment to appear many times in the output while some segments may not appear at all. For instance, of the 1140 segments that make up the original RATM ‘Killing in the Name’, only 706 are used to create the final output (some segments are used as many as 9 times in the output). I wanted to make a version that was a true reshuffling, one that used every input segment exactly once in the output, so I changed the inner remix loop to only consider unused segments for insertion. The algorithm is a greedy one, so segments that occur early in the song have a bigger pool of replacement segments to draw on. The result is that as the song progresses, the similarity of replacement segments tends to drop off.
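
The greedy idea can be sketched in a few lines of plain Python. This is only an illustration, not the vafroma2.py source: the toy two-number feature vectors, the Euclidean `distance` function and the rule that a segment may fall back to its own slot when nothing else is left are all assumptions made for the example.

```python
import math

def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def greedy_shuffle(features):
    """Walk the song in order; give each slot the closest segment not yet used."""
    unused = set(range(len(features)))
    order, errors = [], []
    for i, target in enumerate(features):
        # Prefer any segment other than i; fall back to i only if it is all that is left.
        pool = [j for j in unused if j != i] or list(unused)
        best = min(pool, key=lambda j: (distance(target, features[j]), j))
        unused.remove(best)
        order.append(best)
        errors.append(distance(target, features[best]))
    return order, errors

# Toy feature vectors standing in for real per-segment analysis data.
features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.3), (5.0, 5.0), (5.2, 5.1)]
order, errors = greedy_shuffle(features)
print(order)                          # every index appears exactly once
print([round(e, 2) for e in errors])  # fitting error per output slot
```

Because each slot can only draw on what is left, the later slots in this toy run end up with much worse matches than the early ones, which is the same drop-off described above.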

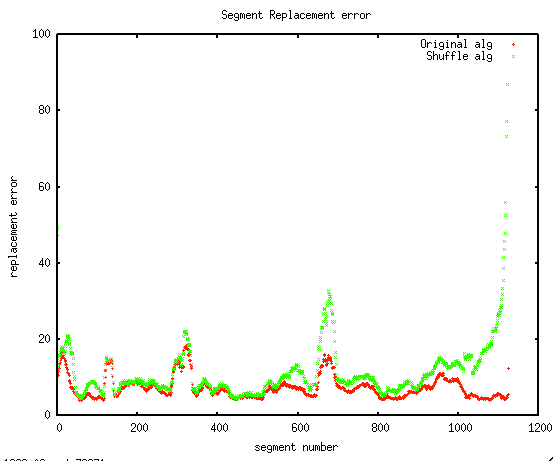

I was curious to see how much extra error there was in the later segments, so I plotted the segment fitting error. In this plot, the red line is the fitting error for the original algorithm and the green line is the fitting error for the shuffling algorithm. I was happy to see that for most of the song, there is very little extra error in the shuffling algorithm; things only get bad in the last 10% of the song.

You can see and hear the song decay as the pool of replacement segments diminishes. The last 30 seconds are quite chaotic. (Remix source for this version is here: vafroma2.py)

More coherence: Pulling song segments from any part of a song to build a new version yields fairly coherent audio. However, the remixed video can be rather chaotic, as it seems to switch cameras every second or so. I wanted to make a version of the remix that would reduce the shifting of the camera. To do this, I gave slight preference to consecutive segments when picking the next segment. For example, if I’ve replaced segment 5 with segment 50, when it is time to replace segment 6, I’ll give segment 51 a little extra chance. The result is that the output contains longer sequences of contiguous segments. Nevertheless, no segment is ever in its original spot in the song. Here’s the resulting version:
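
Here is one way the "little extra chance" could be modelled, as a sketch rather than the actual vafroma3.py code. It assumes one-number toy features and assumes the preference is applied by scaling the distance of the segment that follows the previous pick by a hypothetical `bonus` factor below 1.

```python
def coherent_shuffle(values, bonus=0.4):
    """Greedy shuffle that favours the segment following the previous pick."""
    unused = set(range(len(values)))
    order = []
    previous = None
    for i, target in enumerate(values):
        # Never reuse a segment; avoid the original position while alternatives exist.
        pool = [j for j in unused if j != i] or list(unused)

        def cost(j):
            d = abs(target - values[j])
            if previous is not None and j == previous + 1:
                d *= bonus  # assumed form of the preference for contiguous segments
            return (d, j)

        best = min(pool, key=cost)
        unused.remove(best)
        order.append(best)
        previous = best
    return order

def contiguous_pairs(order):
    """Count neighbouring output slots filled by consecutive source segments."""
    return sum(1 for a, b in zip(order, order[1:]) if b == a + 1)

# One toy feature value per segment.
values = [0.0, 1.0, 0.1, 1.2, 0.9, 0.25]
with_bonus = coherent_shuffle(values, bonus=0.4)
without_bonus = coherent_shuffle(values, bonus=1.0)
print(with_bonus, contiguous_pairs(with_bonus))
print(without_bonus, contiguous_pairs(without_bonus))
```

With the bonus switched on, the toy run keeps more consecutive source segments next to each other, which is what cuts down on the camera switching in the video.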

I find this version to be easier to watch. (Source is here: vafroma3.py).

Next experiments along these lines will be to draw segments from a large number of different songs by the same artist, to see if we can rebuild a song without using any audio from the source song. I suspect that Nickelback will again prove themselves to be the masters of self-similarity.

Here’s the original, un-remixed version of RATM’s ‘Killing in the Name’:

Echo Nest analysis and visualization for Dopplereffekt – Scientist

Posted by Paul in fun, remix, The Echo Nest, visualization on December 20, 2009

From Nickelback to Bickelnack

I saw that Nickelback just received a Grammy nomination for Best Hard Rock Performance with their song ‘Burn it to the Ground’ and wanted to celebrate the event. Since Nickelback is known for their consistent sound, I thought I’d try to remix their Grammy-nominated performance to highlight their awesome self-similarity. So I wrote a little code to remix ‘Burn it to the Ground’ with itself. The algorithm I used is pretty straightforward:

- Break the song down into smallest nuggets of sound (a.k.a segments)

- For each segment, replace it with a different segment that sounds most similar
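
The two steps above can be sketched without the remix library at all. This is a toy illustration under stated assumptions, not the code used for the video: the short feature vectors stand in for whatever per-segment analysis data the real remix compares, and Euclidean distance is an assumed similarity measure.

```python
import math

def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_other(features):
    """For each segment, return the index of the most similar *different* segment."""
    result = []
    for i, target in enumerate(features):
        candidates = (j for j in range(len(features)) if j != i)
        result.append(min(candidates, key=lambda j: distance(target, features[j])))
    return result

# Toy feature vectors, one per segment.
features = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.2, 5.1), (0.0, 0.3)]
order = nearest_other(features)
never_used = set(range(len(features))) - set(order)
print(order)       # segments may repeat
print(never_used)  # some segments never appear in the output
```

Since nothing stops a popular segment from being chosen repeatedly, some segments never make it into the output at all, even in this five-segment toy.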

I applied the algorithm to the music video. Here are the results:

Considering that none of the audio is in its original order, and 38% of the original segments are never used, the remix sounds quite musical and the corresponding video is quite watchable. Compare to the original (warning, it is Nickelback):

Feel free to browse the source code, download remix and try creating your own.

DJ API’s secret sauce

Last week at the Echo Nest 4 year anniversary party we had two renowned DJs keeping the music flowing. DJ Rupture was the featured act – but opening the night was the Echo Nest’s own DJ API (a.k.a. Ben Lacker) who put together a 30 minute set using the Echo Nest remix.

I was really quite astounded at the quality of the tracks Ben put together (and all of them apparently done on the afternoon before the gig). I asked Ben to explain how he created the tracks. Here’s what he said:

1. ‘One Thing’ – featuring Michael Jackson’s (dj api’s rip)

I found a half-dozen a cappella Michael Jackson songs as well as instrumental and a cappella recordings of Amerie’s “One Thing” on YouTube. To get Michael Jackson to sing “One Thing”, I stitched all his a cappella tracks together into a single track, then ran afromb: for each segment in the a cappella version of “One Thing”, I found the segment in the MJ a cappella medley that was closest in pitch, timbre, and loudness. The result sounded pretty convincing, but was heavy on the “uh”s and breath sounds. Using the pitch-shifting methods in modify.py, I shifted an a cappella version of “Ben” to be in the same key as “One Thing”, then ran afromb again. I edited together part of this result and part of the first result, then synced them up with the instrumental version of “One Thing.”

2. One Thing (dj api’s gamelan version)

I used afromb again here, this time resynthesizing the instrumental version of “One Thing” from the segments of a recording of a Balinese Gamelan Orchestra. I synced this with the a cappella version of “One Thing” and added some kick drums for a little extra punch.

3. Billie Jean (dj api screwdown)

First I ran summary on an instrumental version of Beyoncé’s “Single Ladies” (another YouTube find) to produce a version consisting only of the “ands” (every second eighth note). I then used modify.shiftRate to slow down an a cappella version of “Billie Jean” until its tempo matched that of the summarized “Single Ladies”. I synced the two, and repeated some of the final sections of “Single Ladies” to follow the form of “Billie Jean”.

Echo Nest Remix 1.2 has just been released

Posted by Paul in code, fun, The Echo Nest on July 10, 2009

Just in time for hack day … Quoting Brian:

Hi all, 1.2 just “released.” There are prebuilt binary installers for Mac and Windows, and pretty detailed instructions for Linux and other source installs.

The main features of Remix 1.2 are video processing and time/pitch stretching, along with a reliance of “pyechonest” to do the communication with EN. This lets you do more than just track API calls.

Some relevant examples included in the stretch and videx folders.

Thanks to all for the hard work.

This release ties together the video processing, time and pitch stretching, making it possible to do the video and the stretching remixes from the same install. Awesome!

You make me quantized Miss Lizzy!

Posted by Paul in code, Music, remix, The Echo Nest, web services on July 5, 2009

This week we plan to release a new version of the Echo Nest Python remix API that will add the ability to manipulate tempo and pitch along with all of the other manipulations supported by remix. I’ve played around with the new capabilities a bit this weekend, and it’s been a lot of fun. The new pitch and tempo shifting features are integrated well with the API, allowing you to adjust tempo and pitch at any level from the tatum on up to the beat or the bar. Here are some quick examples of using the new remix features to manipulate the tempo of a song.

A few months back I created some plots that showed the tempo variations for a number of drummers. One plot showed the tempo variation for The Beatles playing ‘Dizzy Miss Lizzy’. The peaks and valleys in the plot show the tempo variations over the length of the song.

You got me speeding Miss Lizzy

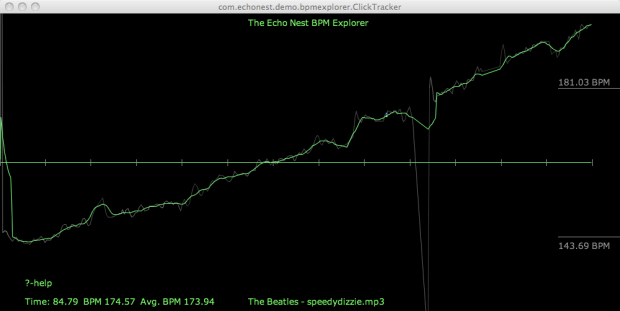

With the tempo shifting capabilities of the API we can now not only look at the detailed tempo information – we can manipulate it. For starters, here’s an example where we use remix to gradually increase the tempo of ‘Dizzy Miss Lizzy’ so that by the end, it’s twice as fast as the beginning. Here’s the click plot:

And here’s the audio

I don’t notice the acceleration until about 30 or 45 seconds into the song. I suspect that a good drummer would notice the tempo increase much earlier. I think it’d be pretty fun to watch the dancers if a DJ put this version on the turntable.

As usual with remix – the code is dead simple:

    st = modify.Modify()
    afile = audio.LocalAudioFile(in_filename)
    beats = afile.analysis.beats
    out_shape = (2*len(afile.data),)
    out_data = audio.AudioData(shape=out_shape, numChannels=1, sampleRate=44100)
    total = float(len(beats))
    for i, beat in enumerate(beats):
        delta = i / total
        new_ad = st.shiftTempo(afile[beat], 1 + delta / 2)
        out_data.append(new_ad)
    out_data.encode(out_filename)

You got me Quantized Miss Lizzy!

For my next experiment, I thought it would be fun if we could turn Ringo into a more precise drummer – so I wrote a bit of code that shifted the tempo of each beat to match the average overall tempo – essentially applying a click track to Ringo. Here’s the resulting click plot, looking as flat as most drum machine plots look:

Compare that to the original click plot:

Despite the large changes to the timing of the song visible in the click plot, it’s not so easy to tell by listening to the audio that Ringo has been turned into a robot – and yes, there are a few glitches in there toward the end when the beat detector didn’t always find the right beat.

The code to do this is straightforward:

    st = modify.Modify()
    afile = audio.LocalAudioFile(in_filename)
    tempo = afile.analysis.tempo
    idealBeatDuration = 60. / tempo[0]
    beats = afile.analysis.beats
    out_shape = (int(1.2 * len(afile.data)),)
    out_data = audio.AudioData(shape=out_shape, numChannels=1, sampleRate=44100)
    total = float(len(beats))
    for i, beat in enumerate(beats):
        data = afile[beat].data
        ratio = idealBeatDuration / beat.duration
        # if this beat is too far away from the tempo, leave it alone
        if ratio < .8 or ratio > 1.2:
            ratio = 1
        new_ad = st.shiftTempoChange(afile[beat], 100 - 100 * ratio)
        out_data.append(new_ad)
    out_data.encode(out_filename)
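
To see what numbers the quantizing loop works with, here is the per-beat arithmetic on its own, with no audio involved. The helper name `quantize_changes` and the sample beat durations are made up for illustration; the formula and the 0.8 to 1.2 guard follow the code above.

```python
def quantize_changes(beat_durations, tempo_bpm, low=0.8, high=1.2):
    """Return the value of 100 - 100 * ratio for each beat, as used in the loop."""
    ideal = 60.0 / tempo_bpm  # ideal beat length in seconds
    changes = []
    for duration in beat_durations:
        ratio = ideal / duration
        # A beat this far from the average is probably a detection glitch; leave it alone.
        if ratio < low or ratio > high:
            ratio = 1.0
        changes.append(100 - 100 * ratio)
    return changes

# At 120 BPM the ideal beat lasts 0.5 seconds.
changes = quantize_changes([0.5, 0.55, 0.45, 1.0], tempo_bpm=120.0)
print([round(c, 2) for c in changes])
```

Beats that are already on the grid get a change of zero, and so does the one-second outlier, because the guard treats it as a glitch rather than stretching it to fit.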

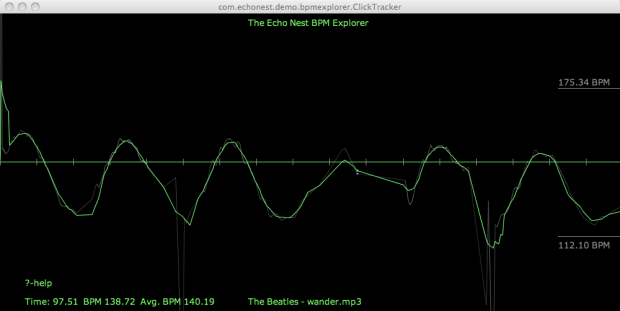

Is it Rock and Roll or Sines and Cosines?

Here’s another tempo manipulation – I applied a slowly undulating sine wave to the overall tempo. It’s interesting how much more disconcerting it is to hear a song slow down than to hear it speed up.

I’ll skip the code for the rest of the examples as an exercise for the reader, but if you’d like to see it just wait for the upcoming release of remix – we’ll be sure to include these as code samples.
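
In the meantime, here is a rough sketch of how the per-beat ratios for a sine-wave tempo could be generated. It is not the code used for the audio in this post; the `depth` and `cycles` parameters are hypothetical knobs, and each ratio would presumably be handed to `st.shiftTempo(afile[beat], ratio)` in a loop like the earlier ones.

```python
import math

def sine_ratios(num_beats, depth=0.25, cycles=2.0):
    """One tempo ratio per beat, swinging around 1.0 along a sine wave."""
    return [1.0 + depth * math.sin(2 * math.pi * cycles * i / num_beats)
            for i in range(num_beats)]

ratios = sine_ratios(200)
print(min(ratios), max(ratios))  # stays within 1.0 - depth and 1.0 + depth
```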

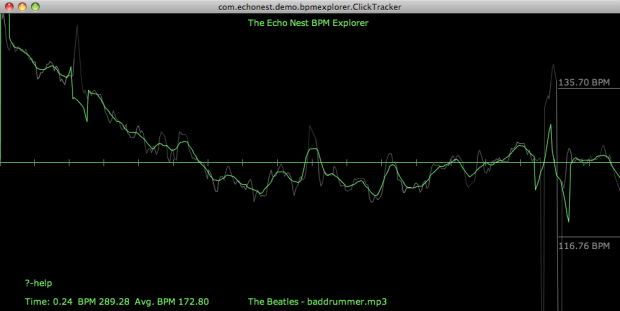

The Drunkard’s Beat

Here’s a random walk around the tempo – my goal here was an attempt to simulate an amateur drummer – small errors add up over time resulting in large deviations from the initial tempo:

Here’s the audio:
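
A random walk like this is easy to sketch. The version below is an illustration under assumptions, not the code behind the audio: the step size, the clamping limits and the `seed` parameter are invented for the example. Each beat nudges the previous ratio by a small random amount, so the errors accumulate instead of averaging out.

```python
import random

def drunkard_ratios(num_beats, step=0.01, low=0.5, high=2.0, seed=None):
    """Per-beat tempo ratios that drift away from 1.0 in small random steps."""
    rng = random.Random(seed)
    ratio = 1.0
    ratios = []
    for _ in range(num_beats):
        ratio += rng.uniform(-step, step)     # small error on every beat
        ratio = max(low, min(high, ratio))    # keep the drift within sane limits
        ratios.append(ratio)
    return ratios

ratios = drunkard_ratios(400, seed=42)
print(min(ratios), max(ratios))
```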

Higher and Higher

In addition to these tempo related shifts, you can also do interesting things to shift the pitch around. I’ll devote an upcoming post to this type of manipulation, but here’s something to whet your appetite. I took the code that gradually accelerated the tempo of a song and replaced the line:

    new_ad = st.shiftTempo(afile[beat], 1 + delta / 2)

with this:

    new_ad = st.shiftPitch(afile[beat], 1 + delta / 2)

in order to give me tracks that rise in pitch over the course of the song.

Wrapup

Look for the release that supports time and pitch stretching in remix this week. I’ll be sure to post here when it is released. To use the remix API you’ll need an Echo Nest API key, which you can get for free from developer.echonest.com. The click plots were created with the Echo Nest BPM explorer – which is a Java webstart app that you can launch from your browser at The Echo Nest BPM Explorer site.

The Dissociated Mixes

Posted by Paul in fun, Music, remix, The Echo Nest, web services on June 30, 2009

Check out Adam Lindsay’s latest post on Dissociated Mixes. He’s got a pretty good collection of automatically shuffled songs that sound interesting and eerily different from the original. One example is this remixed audio/video of Beck’s Record Club cover of “Waiting for my Man” by The Velvet Underground and Nico:

Where’s the Pow?

This morning, while eating my Father’s day bagel, I got to play some more with the video aspects of the Echo Nest remix API. The video remix is pretty slick. You use all of the tools that you use in the audio remix, except that the object you are manipulating has a video component as well. This makes it easy to take an audio remix and turn it into a video remix. For instance, here’s the remix code to create a remix that includes the first beat of every bar:

    audiofile = audio.LocalAudioFile(input_filename)
    collect = audio.AudioQuantumList()
    for bar in audiofile.analysis.bars:
        collect.append(bar.children()[0])
    out = audio.getpieces(audiofile, collect)
    out.encode(output_filename)

To turn this into a video remix, just change the code to:

    av = video.loadav(input_filename)
    collect = audio.AudioQuantumList()
    for bar in av.audio.analysis.bars:
        collect.append(bar.children()[0])
    out = video.getpieces(av, collect)
    out.save(output_filename)

The code is nearly identical, differing in loading and saving, while the core remix logic stays the same.

To make a remix of a YouTube video, you need to save a local copy of the video. I’ve been using KeepVid to save a local FLV (Flash video format) copy of any YouTube video.

Today I played with the track ‘Boom Boom Pow’ by the Black Eyed Peas. It’s a fun song for remix because it has a very strong beat, and already has a remix feel to it. And since the song is about digital transformation, it seems to be a good target for remix experiments. (and just maybe they won’t mind the liberties I’ve taken with their song).

Here’s the original (click through to YouTube to watch it since embedding is not allowed):

Just Boom

The first remix is to only include the first beat of every measure. The code is this:

for bar in av.audio.analysis.bars: collect.append(bar.children()[0])

Just Pow

Change the beat included from beat zero to beat three, and we get something that sounds very different:

Pow Boom Boom

Here’s a version with the beats reversed. The core logic for this transformation is one line of code:

av.audio.analysis.beats.reverse()

The 5/4 Version

Here’s a version that’s in 5/4 – to make this remix I duplicated the first beat and swapped beats 2 and 3. This is my favorite of the bunch.
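
The beat shuffling for a bar can be sketched on plain lists. This is a guess at the arrangement, not the code used for the video: it assumes beats are counted from 1, that the duplicated first beat is placed right after the original, and that bars without exactly four beats are passed through untouched.

```python
def five_four(bar):
    """Turn a four-beat bar into five beats: repeat beat 1, then swap beats 2 and 3."""
    if len(bar) != 4:
        return list(bar)  # assumption: leave irregular bars as they are
    b1, b2, b3, b4 = bar
    return [b1, b1, b3, b2, b4]

bars = [["boom", "boom", "pow", "clap"], ["a", "b", "c", "d"]]
remixed = [beat for bar in bars for beat in five_four(bar)]
print(remixed)
```

In remix terms, each inner list would be the result of `bar.children()`, and the beats returned by `five_four` would be added to `collect` in place of the single `collect.append` call shown earlier.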

These transformations are of the simplest variety, taking just a couple of minutes to code and try out. I’m sure some budding computational remixologist could do some really interesting things with this API.

Note that the latest video support is not in the main branch of remix. If you want to try some of this out you’ll need to check out the bl-video branch from the svn repository. But this is guaranteed to be rolled into the main branch before the upcoming Music Hackday. Update: the latest video support is now part of the main branch. If you want to try it out, check it out from the trunk of the SVN repository. So download the code, grab your API key and start remixing.

Update: As Brian pointed out in the comments there was some blocking on the remix renders. This has been fixed, so if you grab the latest code, the video output quality is as good as the input.

More confusing than Memento

Posted by Paul in code, fun, remix, The Echo Nest on June 20, 2009

Ben Lacker, one of our leading computational remixologists here at the Echo Nest, has been improving the video remix capabilities of the Echo Nest remix API. On Friday, he remixed this mind blower. It’s Coldplay’s music video for ‘The Scientist’, beat reversed, which means that the song is played in reverse order beat by beat (but each beat is still played in forward order). Since Coldplay’s video is already shot in reverse order, the resulting video has a story that unfolds in proper chronological order, but where every second of video runs backwards, while the music unfolds in reverse chronological order while every beat runs forward. I get a little bit of a stomachache watching this video.

Ben has committed the code for this remix to the Echo Nest remix code samples so feel free to check it out and hack on it. I hope to see some more interesting music and video remixes coming out of the upcoming Music Hackday.

Remix 1.1 is released

Posted by Paul in fun, Music, remix, The Echo Nest on June 11, 2009

Version 1.1 of the Echo Nest remix has been released. Adam Lindsay, in his Remix Overview describes it thus:

Remix is a sophisticated tool to allow you to quickly, expressively, and intuitively chop up existing audio content and create new content based on the old. It allows you to reach inside the music, and let the music’s own musical qualities be your — or your computer’s — guide in finding something new in the old. By using Remix’s knowledge of a given song’s structure, you can render the familiar strange, or the strange slightly more familiar-sounding. You can create countless parameterized variations of a given song — or one of near-limitless length — that respect or desecrate the original, or land on any of countless steps in between.

This release has concentrated on making it easier to install. We now have install instructions for Linux, Mac and Windows. We also now use the FFmpeg encoder/decoder instead of mad and lame. This has a number of advantages: it makes remix easier to install, it supports a larger number of file formats, and perhaps most importantly, it is the same decoder that the Echo Nest Analyze uses. This ensures that audio segment boundaries fall exactly on zero-crossings.

Remix is really fun to play with, and the results are always interesting and sometimes even musical. Here’s an example of a song released in the last year (can you guess it?) that has been remixed to include only the first beat of each measure.